One evening, I checked my wallet and saw a transaction stuck longer than usual. The delay itself didn’t annoy me—the unsettling part was the feeling that data was piling up before it could even translate into a real user experience.

After living through multiple market cycles, I’ve realized something uncomfortable: ecosystems rarely fail because they lack ambition. They fail because tiny inefficiencies accumulate quietly until everything feels fragile. That’s why I’m no longer impressed by claims like “ultra-fast” or “ultra-cheap.”

What matters to me now is how a network behaves under real pressure. Can developers predict costs? Does the architecture handle spikes gracefully? The real story isn’t in the marketing—it’s in how data is structured so execution doesn’t grind to a halt.

Dusk caught my attention precisely because it focuses on the unglamorous details—the engineering decisions that determine whether a system stays in sync or remains just a slick prototype. Ironically, after being disappointed by too many buzzword-driven projects, I trust technical choices more when they’re grounded in measurable, deployable reality.

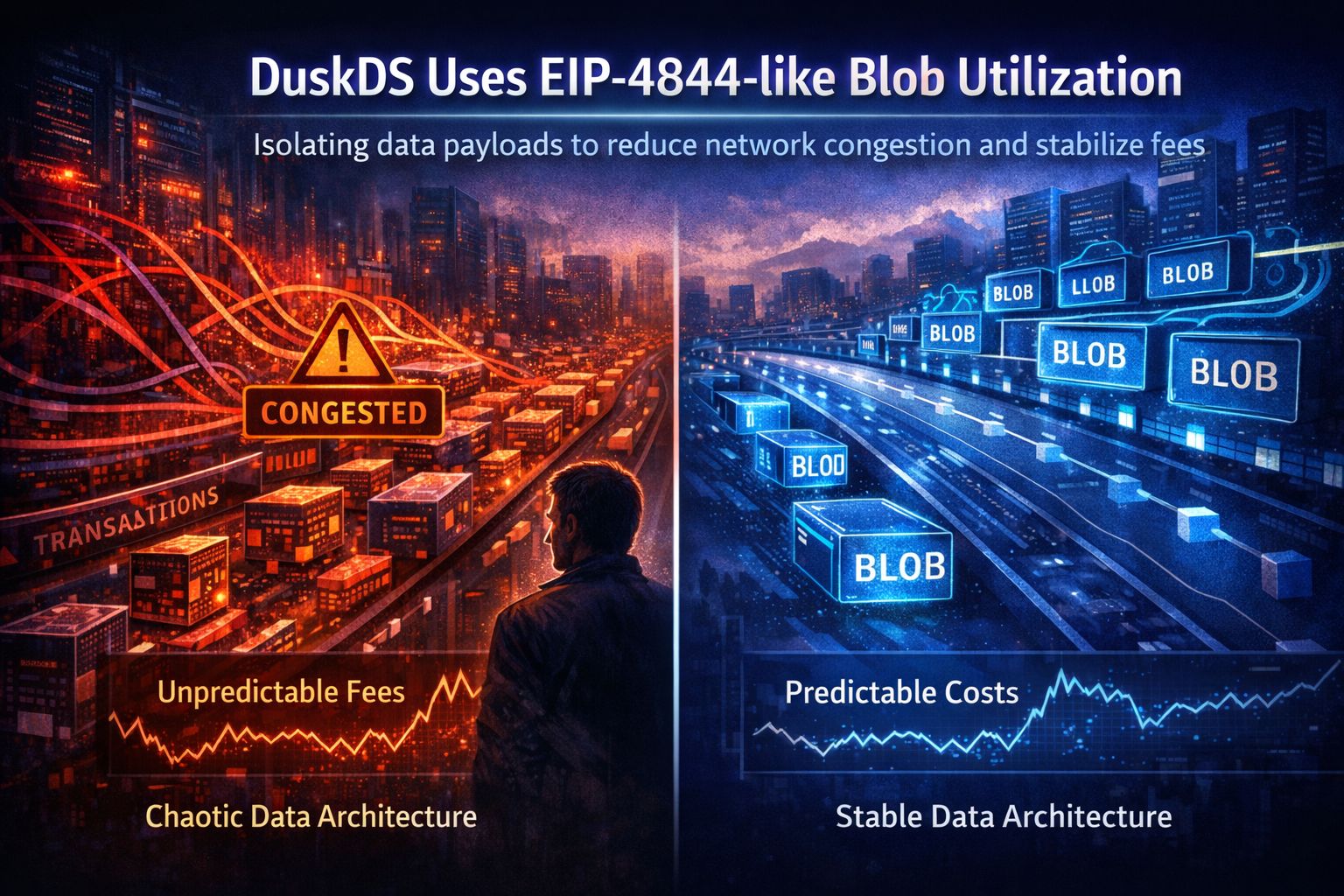

The part that stands out most is DuskDS adopting an EIP-4844-inspired blob approach. It directly addresses a developer’s biggest frustration: data shouldn’t compete with everything else for the same pipeline. Bundling heavy payloads into blobs moves them onto a separate data lane, which eases pressure on normal transactions and smooths out the unpredictable fee swings that feel almost random.
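To make the "separate data lane" idea concrete, here is a minimal sketch of how the original EIP-4844 prices blob space on Ethereum: the blob fee depends only on how much blob capacity recent blocks consumed relative to a target, not on ordinary transaction load. The constants and the `fake_exponential` formula are the ones in the Ethereum specification; whether DuskDS uses the same parameters or the same update rule is an assumption on my part, so read this as an illustration of the mechanism rather than a description of Dusk's implementation.

```python
# Blob fee market as specified in the original EIP-4844 (Ethereum).
# ASSUMPTION: DuskDS is only described as "EIP-4844-inspired"; its real
# parameters and update rule may differ from the values used here.
MIN_BASE_FEE_PER_BLOB_GAS = 1
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477
GAS_PER_BLOB = 2**17                           # 131072 blob gas per blob
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB   # target of 3 blobs per block
MAX_BLOB_GAS_PER_BLOCK = 6 * GAS_PER_BLOB      # maximum of 6 blobs per block


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator)."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_blob_gas(parent_excess: int, parent_blob_gas_used: int) -> int:
    """Carry forward only the blob gas used above the per-block target."""
    total = parent_excess + parent_blob_gas_used
    if total < TARGET_BLOB_GAS_PER_BLOCK:
        return 0
    return total - TARGET_BLOB_GAS_PER_BLOCK


def blob_base_fee(excess_blob_gas: int) -> int:
    """Price per unit of blob gas; independent of regular transaction gas."""
    return fake_exponential(
        MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, BLOB_BASE_FEE_UPDATE_FRACTION
    )


if __name__ == "__main__":
    excess = 0
    for block in range(5):
        # Every block carries the maximum number of blobs (above target),
        # so the excess accumulates and the blob fee can only drift upward.
        excess = next_excess_blob_gas(excess, MAX_BLOB_GAS_PER_BLOCK)
        print(block, excess, blob_base_fee(excess))
```

Nothing in this calculation reads the execution-gas base fee or the state of the mempool. A spike in ordinary transactions leaves the blob price where it was, and the blob price moves only when blob usage itself stays above or below target for a while. That decoupling is the property I care about, whatever the exact numbers turn out to be on DuskDS.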

From the outside, this may sound boring. But anyone who’s deployed smart contracts, processed batch jobs, or pushed large state updates knows the pain: it’s not the occasional high cost—it’s the volatility. Today cheap, tomorrow expensive, and every release feels like rolling dice.

The industry keeps hyping speed, but stability is what actually scales. With blobs, applications can operate on a predictable data schedule instead of reacting to chaotic mempool conditions. This reduces constant optimization hacks, late-night deployments during low traffic windows, and the sense that you’re paying for congestion rather than utility.

There’s also a deeper psychological benefit for builders: control. When data limits, pricing models, and capacity are clearer, architectural decisions become rational instead of defensive. You can plan growth without fearing runaway costs, and you don’t have to design around network congestion tricks that compromise the product.

For someone building long-term, this makes a roadmap feel structural, not speculative. For an investor, it signals maturity. It speaks to the critical question: when usage explodes, does the system remain economically sane?

Of course, blobs aren’t a silver bullet. Data availability, retention policies, infrastructure incentives, and stress-test edge cases still matter. But what I respect is the shift from narrative-driven marketing to mechanism-driven design. Instead of masking problems with UI polish and slogans, Dusk tries to fix the underlying friction.

After years in this space, I’ve learned one thing: people stick with platforms that work consistently—not those that spike once and fade. If blob-based data routing on DuskDS truly stabilizes costs and reduces operational uncertainty, it’s more than optimization—it’s a bet on longevity.

So the real test is simple: when the network becomes crowded and noisy, will this architecture maintain its rhythm, allowing builders to focus on products instead of fighting infrastructure?