Vanar doesn’t fail when AI agents crash.

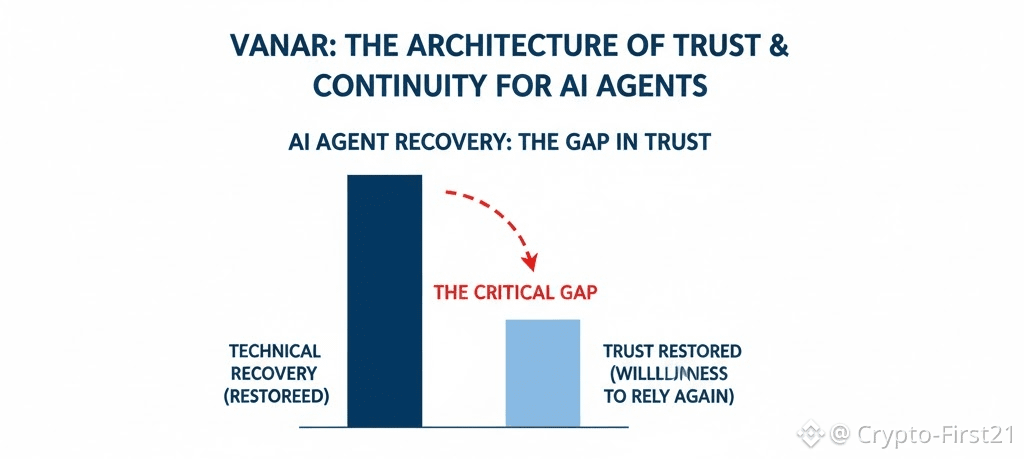

It fails when agents restart successfully, and nobody trusts them to continue.

The process completes. Context reloads. State syncs. Proofs validate. On paper, the agent is alive again. Execution resumes. Nothing is technically broken.

And still, nobody wants to rely on it.

“Recovered” becomes the most misleading word in the system.

Because it answers the wrong question.

The question teams quietly start asking instead is simpler and harder: should we let this agent keep operating?

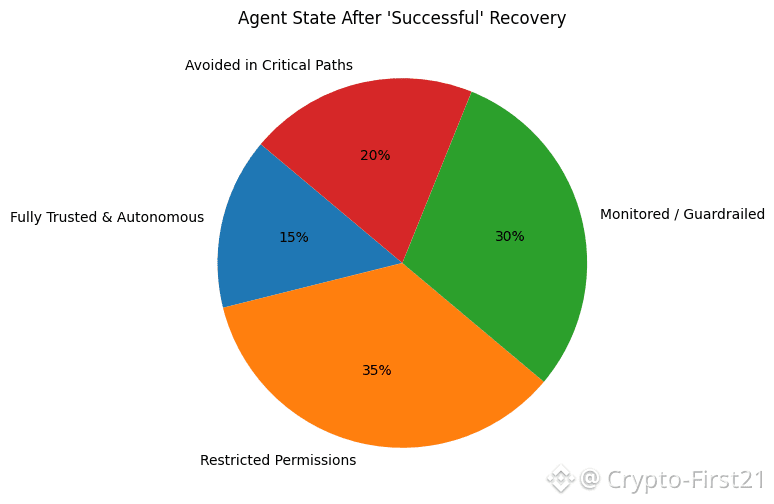

That shift never appears in metrics. It shows up in behavior.

Someone on the protocol side says the agent is back. No one wants to give it full permissions again. Engineers limit its scope. Product avoids putting it in charge of anything that matters. Infra adds guardrails without documenting them. The agent exists, but it stops being trusted.

Nothing is down. Something is missing.

Restart did its job, but it didn’t restore confidence.

Here’s the uncomfortable part: the chain is satisfied, but the team isn’t.

The agent survived, but predictability didn’t come back with it. And predictability is what real economic actors actually price into decisions.

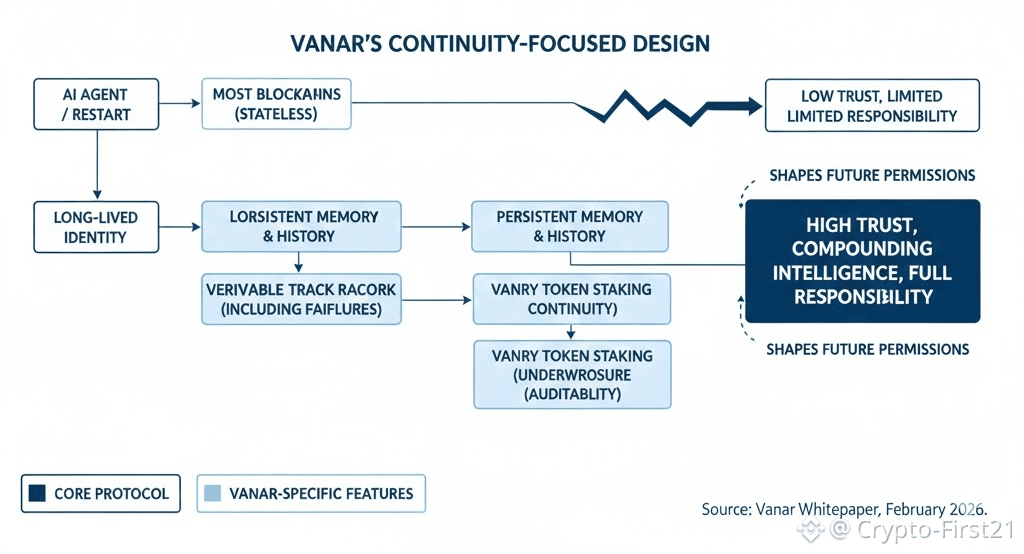

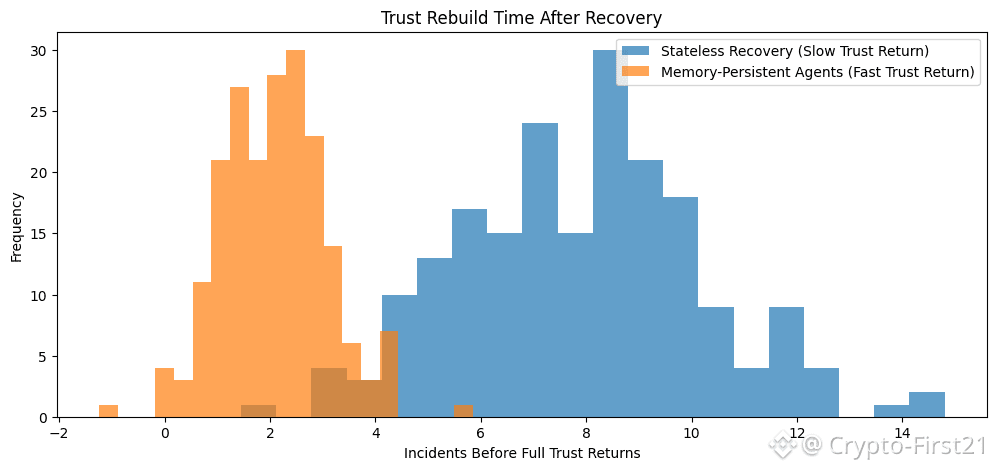

For Vanar, this distinction matters because stateless recovery preserves execution but erases learning. If an agent forgets why it failed yesterday, today’s success doesn’t feel like progress. The same workflows repeat. The same edge cases reappear. The same safeguards have to be rebuilt. From the system’s point of view, nothing went wrong. From the operator’s point of view, everything feels fragile.

Vanar’s core bet is that intelligence without continuity is not intelligence that compounds.

Most public chains don’t notice this problem because they collapse behavior into green or red. Either the transaction executed or it didn’t. Either the agent ran or it stopped. Recovery resets the story. But human teams don’t reset that way. They remember near-misses. They remember the moment they almost lost control. They remember how close they were to the edge.

Stateless systems erase that memory. Vanar keeps it.

By anchoring persistent memory and long-lived identity at the protocol layer, Vanar allows agents to carry their past forward. Failures don’t disappear after restart. They remain part of the agent’s history. That history shapes future permissions, risk limits, and trust. The agent doesn’t just resume. It continues.
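To make the difference between resuming and continuing concrete, here is a minimal sketch in Python. It is purely illustrative: `AgentRecord`, `risk_limit`, and both restart functions are hypothetical names invented for this example, not part of Vanar's protocol or any SDK, and the halving-and-recovery formula is an arbitrary assumption chosen only to show history shaping limits.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    """Hypothetical long-lived record for one agent (not a Vanar API)."""

    agent_id: str
    incidents: list[str] = field(default_factory=list)
    clean_runs: int = 0

    def record_failure(self, reason: str) -> None:
        # A failure is kept in history and resets the clean-run streak.
        self.incidents.append(reason)
        self.clean_runs = 0

    def record_success(self) -> None:
        self.clean_runs += 1

    def risk_limit(self, base_limit: int = 1000) -> int:
        # Assumed policy: each past incident halves the limit, each clean
        # run since the last failure earns back 10%, capped at the base.
        earned = base_limit * (10 + self.clean_runs) // (10 * 2 ** len(self.incidents))
        return min(base_limit, earned)


def stateless_restart(record: AgentRecord) -> AgentRecord:
    # Same identity, but the history is gone: the agent merely resumes.
    return AgentRecord(agent_id=record.agent_id)


def continuous_restart(record: AgentRecord) -> AgentRecord:
    # Identity and history both survive: the agent continues.
    return record


agent = AgentRecord("agent-1")
agent.record_failure("oracle timeout during settlement")

amnesiac = stateless_restart(agent)    # risk_limit() is back to 1000
survivor = continuous_restart(agent)   # risk_limit() is 500 until trust is re-earned
```

In the stateless case the limit snaps back to its default as if nothing had happened, which is exactly why operators end up adding their own undocumented guardrails. In the continuous case the reduced limit is part of the record, and it rises again only as clean runs accumulate.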

This is where the token enters the picture in a way that’s easy to misunderstand.

Vanar doesn’t ask VANRY to extract value from momentary activity. It asks the token to underwrite continuity. Staking, identity, and memory persistence turn VANRY into operational inventory. You hold it not because something exciting might happen today, but because something dependable needs to keep running tomorrow.

That’s also why price action can feel disconnected from progress.

When agents restart cleanly, dashboards go green. When agents continue reliably, nothing happens. No alerts. No spikes. Just fewer incidents that never needed to be written down. Markets don’t reward that immediately. Teams do.

The most dangerous state for an AI economy is not when agents fail loudly.

It’s when agents technically recover, but nobody wants to trust them with real responsibility again.

That’s the quiet failure mode Vanar is designed around.

If AI agents are going to manage assets, negotiate contracts, or operate workflows over months instead of minutes, then recovery is not enough. Memory has to survive failure. Identity has to outlive restarts. Trust has to compound rather than reset.

Vanar makes this visible by refusing to collapse intelligence into execution success alone. It lets correctness and confidence drift apart long enough for builders to notice the difference.

Because the hardest thing to rebuild after a failure isn’t uptime.

It’s the willingness to depend on the system again.

And that is what the VANRY token is ultimately betting on.