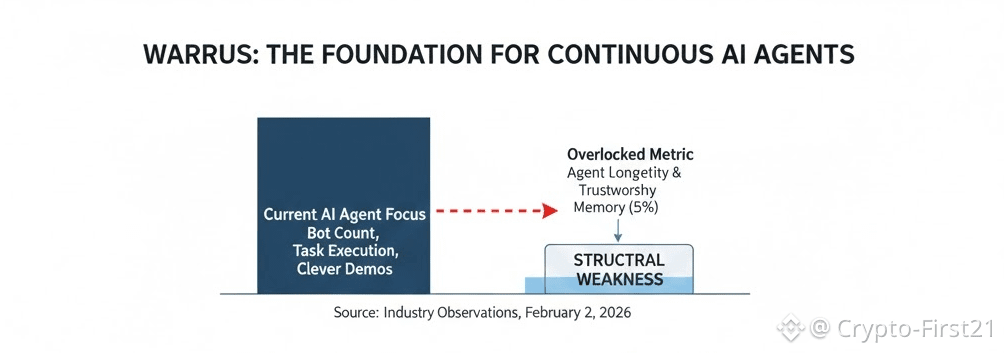

When I watch the AI agent sector evolve, one pattern has become almost comically obvious: every project brags about how many bots it can spin up, but almost none talk about how long those bots actually survive and remain useful. That awkward silence isn’t an accident; it’s a structural weakness.

Everyone wants to showcase high agent counts, clever demos, and instant responses. But that’s the AI equivalent of counting how many workers you hired today, not how many will still be productive next month. Human attention is episodic and discrete. We click once, wait hours, and come back later. AI agents are fundamentally different. They need continuity, historical context, persistent state, and verifiable data across decision cycles. Without that, you don’t have a real on-chain economic actor. You have a temporary script with a wallet attached.

Most public chains were built for the pace of human interaction. They are optimized for swaps, mints, and bursts of activity. Peak throughput looks impressive, but it’s a shallow metric. It says nothing about what happens after thousands of interactions, months of operation, or continuous autonomous workflows. For AI agents that must remember whether last week’s arbitrage was profitable, track volatility regimes, and adjust strategies incrementally, stateless chains become a hard ceiling.

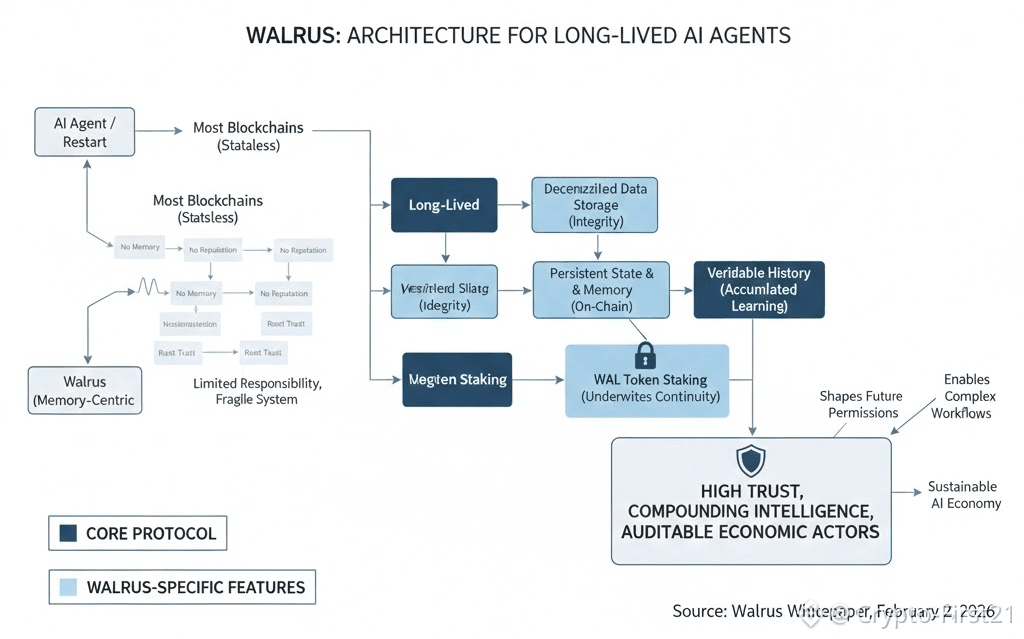

This is where Walrus becomes relevant. Walrus is not trying to be another execution environment or agent framework. It focuses on a quieter but more fundamental problem: where agent memory actually lives, and whether that memory can be trusted over time.

Instead of pushing memory back onto centralized servers, Walrus treats data as a first-class on-chain primitive. Large datasets are stored in a decentralized way, with verifiable integrity and guaranteed availability. When an agent records a decision, a model update, or an outcome, that data can persist on chain, be referenced later, and be reused by other agents or protocols without relying on a centralized database.
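To make "verifiable integrity" a little more concrete, here is a minimal sketch of content addressing, the general idea of naming a record by the hash of its bytes so that anyone can re-check it later. It is not the Walrus API: `ToyBlobStore`, `put`, `get`, and the sample record are all hypothetical names, and the store is just an in-memory dictionary standing in for a decentralized storage network.

```python
import hashlib
import json


class ToyBlobStore:
    """In-memory stand-in for a decentralized blob store (hypothetical, not the Walrus API)."""

    def __init__(self):
        self._blobs = {}

    def put(self, record: dict) -> str:
        # Serialize deterministically so the same record always yields the same ID.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        blob_id = hashlib.sha256(payload).hexdigest()
        self._blobs[blob_id] = payload
        return blob_id

    def get(self, blob_id: str) -> dict:
        payload = self._blobs[blob_id]
        # Recompute the hash: a mismatch means the stored bytes were altered.
        if hashlib.sha256(payload).hexdigest() != blob_id:
            raise ValueError("integrity check failed for blob " + blob_id)
        return json.loads(payload)


# An agent records a decision, keeps only the ID, and reads it back later.
store = ToyBlobStore()
decision = {"agent": "arb-bot-1", "action": "swap", "pnl": 12.5}
blob_id = store.put(decision)
restored = store.get(blob_id)
```

The point of the design is that the ID doubles as a checksum: any other agent or protocol holding `blob_id` can fetch the record and confirm it is the one originally written, without trusting whoever served the bytes.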

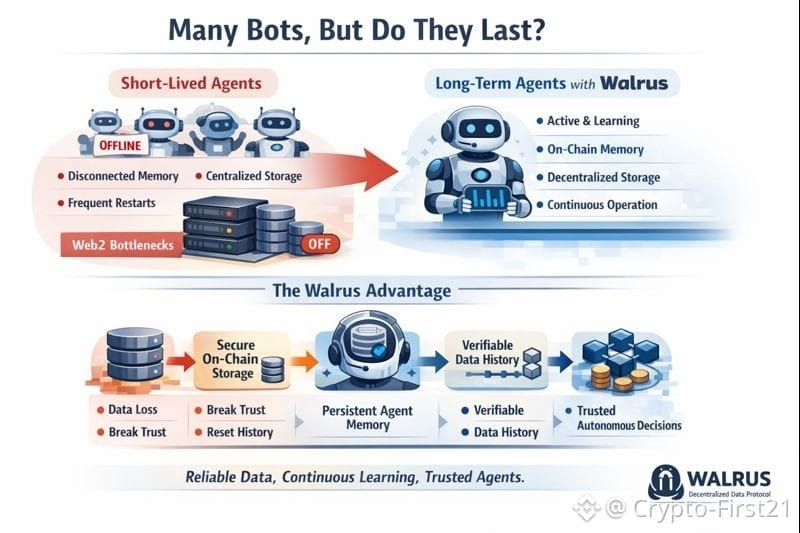

This distinction matters more than most people realize. If an agent’s memory lives off-chain, incentives fracture. Trust erodes. Migration becomes painful. At that point, the system stops being meaningfully decentralized. It becomes Web2 infrastructure with crypto rails bolted on.

Walrus’s design implicitly assumes that future AI agents will need to accumulate history. Decisions must leave verifiable traces. Reputation must be earned over time. Economic behavior must be auditable without trusting a single operator. In an agent economy, memory is not a feature. It is the foundation that allows learning to compound instead of resetting.
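One common way to make a decision history auditable without trusting a single operator is a hash chain, where each entry commits to the one before it. The sketch below illustrates that general technique only; it is an assumption about how such a log could be built, not a description of how Walrus or any particular agent framework does it. `GENESIS`, `entry_hash`, `append`, `verify`, and the sample records are hypothetical names.

```python
import hashlib
import json

# Placeholder "previous hash" for the first entry in the log (an assumption).
GENESIS = "0" * 64


def entry_hash(prev_hash: str, record: dict) -> str:
    # Hash the record together with the previous hash, so entries are linked.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, record: dict) -> None:
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "record": record,
                "hash": entry_hash(prev_hash, record)})


def verify(log: list) -> bool:
    # Walk the chain from the start and recompute every link.
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


history = []
append(history, {"week": 1, "strategy": "arbitrage", "profitable": True})
append(history, {"week": 2, "strategy": "arbitrage", "profitable": False})
history_ok = verify(history)
```

Because every hash depends on everything before it, quietly rewriting an old decision invalidates every later link, which is what lets reputation accumulate on a record that third parties can check for themselves.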

The market, however, still prices Walrus like infrastructure: quietly and without excitement. The WAL token trades in the low-teens cents range, with a circulating supply in the low billions and a much larger maximum supply ahead. For speculators chasing fast narratives, this looks uninspiring. For infrastructure, it looks familiar.

Large early funding rounds signaled long-term conviction, but infrastructure rarely rewards patience immediately. Storage layers are only appreciated once something critical breaks without them. Until then, they look boring, slow, and under-discussed.

Contrast this with agent systems built on stateless chains. Every meaningful interaction requires bridging memory back from off-chain storage. Every verification step adds friction. Every restart resets trust. That’s not an edge case; it’s a structural tax on long-lived automation.

A decentralized storage layer changes that equation. It allows agent memory to remain native to the economic layer. Context stays composable. History remains portable. Trust does not need to be re-established from scratch every time an agent wakes up.

Some critics point to token unlocks, inflation, or muted price action as reasons for skepticism. That reaction is understandable, but it misses the pattern. Infrastructure often looks fragile before it becomes indispensable. Early Ethereum looked risky. Early cloud infrastructure looked unnecessary. Only after adoption did those judgments reverse.

Speculative capital leaving the space is not necessarily bearish. It is often a clearing process. What remains afterward is capital that actually cares about durability, continuity, and long-term productivity rather than narrative momentum.

If 2026 is the year when AI shifts from being a conversational toy to an autonomous economic worker (managing data, coordinating actions, and making decisions over long horizons), then memory can no longer be optional. Agents without durable, verifiable memory cannot support complex economies.

That is the bet Walrus is quietly making. Not on how many agents exist today, but on how much history they can accumulate tomorrow.

For patient capital, that distinction matters. And for anyone serious about AI as infrastructure rather than spectacle, it is hard to ignore.