There are moments in technology where the shift is so quiet that most people miss it at first. They keep using old language to describe something that no longer behaves like the thing they think it is. That is exactly what happens when people call APRO AI Oracle 3.0 an oracle. It technically fits the category, yet everything about it feels like a story that moved on. Traditional oracles delivered prices and occasional updates. APRO is stepping into a world where blockchains must work alongside AI agents, real-time decision models and systems that react to the world as it unfolds, not in hindsight. To understand this shift, you have to forget the assumptions that shaped the early years of Web3 and look at what is actually happening across these new AI-driven environments. The picture that emerges is much larger than a tool. It feels like the early shape of an intelligent data layer built for a world where algorithms talk to each other as often as humans do.

The catalyst for this change comes from something simple but often ignored. Large language models are powerful, but their strength is still rooted in the past. Their understanding stops at the moment their training data freezes. Most models cannot see beyond their training cutoff unless someone feeds them new information, and even then, they cannot independently verify whether that new information is real. That gap between what AI knows and what the world is doing right now creates a kind of blindness. In financial environments or high-leverage scenarios, this blindness is dangerous. A model might generate strategies based on outdated conditions. It might hallucinate confidence when the data behind its reasoning is wrong. If an LLM misjudges yields, or misreads market behavior, or interprets social sentiment that never actually occurred, the result is not just a bad answer. It could be a cascading loss event.

This is the world that APRO stepped into, and the more I studied the architecture behind AI Oracle 3.0, the clearer it became why this system arrived exactly when the ecosystem was reaching a breaking point. AI agents are growing at a pace that is already reshaping digital economies. The agent economy alone is projected to exceed one hundred billion dollars within the next decade, and its data requests are expanding at rates well above three hundred percent annually. These are not passive bots waiting for input. They are decision makers. They rebalance portfolios, monitor liquidity, interpret sentiment, run simulations, trigger governance actions and manage in-game economies. They cannot depend on data that is stale, incomplete or unverifiable. They need a constant stream of reality with guardrails. Without that foundation, the entire model of autonomous agents collapses.

This is where the identity of APRO AI Oracle becomes something distinct. It is the Model Context Protocol (MCP) server for AI agents, a concept that sounds technical on the surface but is actually quite intuitive. Every agent needs a reliable context window that is grounded in truth. APRO builds that window. It collects raw data from dozens of domains, processes it with layers of validation, passes it through consensus and then packages it into a form that an agent can rely on without second-guessing. Instead of giving an answer, APRO gives understanding. Instead of delivering a number, it delivers meaning backed by verification. One of the most important shifts here is that APRO is not only giving agents data but giving them defensible data. Each feed includes proof paths, signatures and consistency checks that allow the receiving agent to confirm that the information is authentic. This stops hallucination loops before they start, especially in contexts where misinformation can trigger millions in losses.

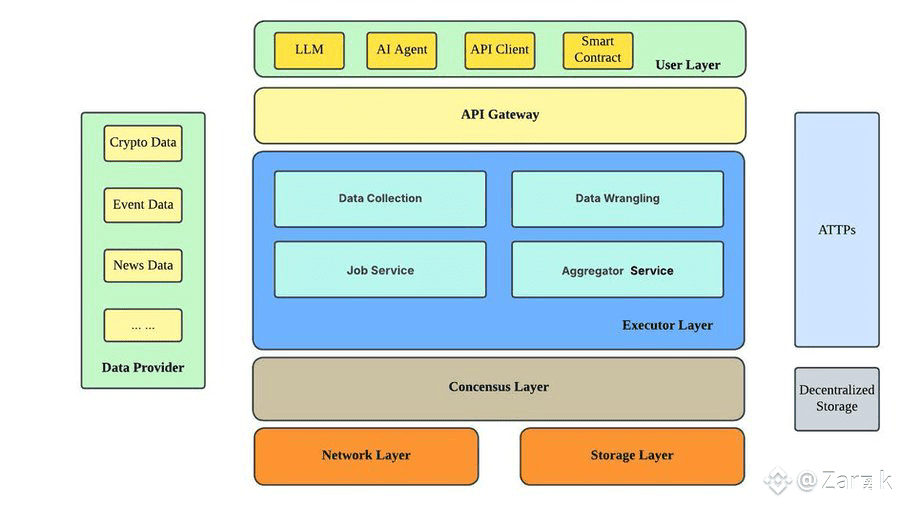

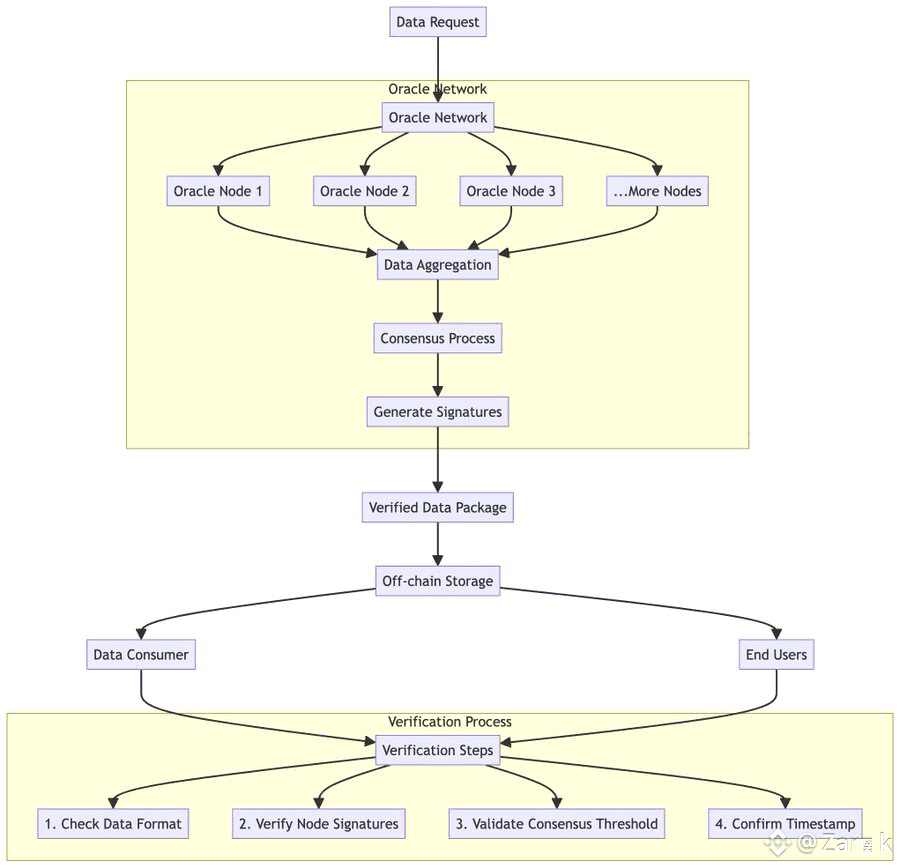

To make this possible, APRO had to break from the linear oracle architecture that dominated the last generation. The new structure is multilayered, almost like a living ecosystem where each part plays a role in what eventually becomes the truth. In the outer layer, you find the data harvesters. These are systems that listen to everything, from centralized exchanges to decentralized markets, from social feeds to news flows, from gaming telemetry to regulatory announcements. They collect structured signals where possible and unstructured noise where necessary. That noise gets normalized, cleansed and shaped into something coherent. Then it moves to a deeper phase. Consensus nodes take over. They evaluate each data slice with digital signatures, perform PBFT-based voting rounds and establish a threshold that defines what the network accepts as valid. This is not about speed alone. It is about building certainty through a collaborative filter that resists manipulation and rejects anomalies.
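To make the threshold idea concrete, here is a minimal sketch of a quorum check in Python. It is not APRO's implementation and it is not full PBFT (there are no pre-prepare, prepare or commit phases). It only shows the acceptance rule PBFT-style systems rely on: with `n = 3f + 1` nodes, a value needs `2f + 1` matching, validly signed votes. The node names, the keys and the use of HMAC as a stand-in for real digital signatures are all assumptions made for illustration.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical per-node keys. A real network would use public-key signatures;
# HMAC is used here only so the sketch runs with the standard library.
NODE_KEYS = {f"node-{i}": f"secret-{i}".encode() for i in range(4)}


def sign(node_id: str, value: str) -> str:
    """Produce a node's signature over a reported value."""
    return hmac.new(NODE_KEYS[node_id], value.encode(), hashlib.sha256).hexdigest()


def verify(node_id: str, value: str, signature: str) -> bool:
    """Check that the signature matches what this node would have produced."""
    key = NODE_KEYS.get(node_id)
    if key is None:
        return False
    expected = hmac.new(key, value.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected.encode(), signature.encode())


def quorum_size(n: int) -> int:
    """With n = 3f + 1 nodes, tolerate f faulty nodes and require 2f + 1 votes."""
    f = (n - 1) // 3
    return 2 * f + 1


def accept_value(votes, n):
    """Return the value backed by a quorum of valid votes, or None.

    votes is a list of (node_id, value, signature) tuples.
    """
    seen = set()
    tally = Counter()
    for node_id, value, signature in votes:
        # Ignore repeat votes and votes whose signature does not verify.
        if node_id in seen or not verify(node_id, value, signature):
            continue
        seen.add(node_id)
        tally[value] += 1
    if not tally:
        return None
    value, count = tally.most_common(1)[0]
    return value if count >= quorum_size(n) else None
```

With four nodes the quorum is three, so a single node reporting an outlier cannot change the accepted value, and a two-against-two split is rejected rather than resolved arbitrarily.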

Once a value passes this threshold, it enters the transmission layer. Here, APRO uses the ATTPs protocol to encrypt, package and transport the verified data in a form that AI agents can ingest. This is where the concept of a crypto MCP server becomes meaningful. APRO standardizes how models consume onchain and offchain information. Instead of every agent building custom integrations, APRO becomes the unified data spine that all agents plug into. It reduces developer complexity by more than ninety percent while avoiding the fragmentation that usually cripples early-stage ecosystems.

The fascinating part is how naturally this design fits into scenarios far beyond traditional finance. When you think of an agent reading spreads across twenty exchanges, monitoring panic indicators, identifying arbitrage windows and adjusting strategies on the fly, you begin to understand why raw data is not enough. It is the interpretation layer that matters. APRO expands this across many use cases. DAO assistants can evaluate governance conversations, detect sybil patterns and summarize discussions without falling for coordinated manipulation. Meme launch agents can detect hype cycles by analyzing message velocity and sentiment dynamics across social platforms. Game agents can create loot systems that stay fair because the randomness behind them is verifiable and not influenced by anyone with malicious intent. In each example, APRO is not acting as a messenger. It is acting as the sensory organ that lets these systems perceive their world accurately.
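The fair-loot example is easier to picture with code. The article does not describe APRO's randomness scheme, so the sketch below uses plain commit-reveal, a simpler and well-known technique, as a stand-in. The function names and the player identifier are hypothetical. The operator publishes a hash of a secret seed before the roll, and after the roll anyone can check both that the revealed seed matches the commitment and that the claimed result follows from it.

```python
import hashlib


def commit(seed: bytes) -> str:
    """Publish this hash before the roll; it binds the operator to the seed."""
    return hashlib.sha256(seed).hexdigest()


def roll(seed: bytes, player_id: str, sides: int) -> int:
    """Derive a roll in 1..sides from the seed and the player identifier."""
    digest = hashlib.sha256(seed + player_id.encode()).digest()
    # A 256-bit digest makes the modulo bias negligible for small `sides`.
    return int.from_bytes(digest, "big") % sides + 1


def verify_roll(commitment: str, seed: bytes, player_id: str,
                sides: int, claimed: int) -> bool:
    """Check the revealed seed against the commitment and recompute the roll."""
    return commit(seed) == commitment and roll(seed, player_id, sides) == claimed
```

A player who recorded the commitment up front can call `verify_roll` once the seed is revealed. A swapped seed or an altered result both fail the check.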

A big part of why APRO can do this is because it treats data flow as a journey rather than a broadcast. Every piece of information moves through ingestion, cleansing, aggregation and orchestration. These stages ensure that what arrives at the other end is shaped into something coherent. If you imagine how a human processes information, the idea makes sense. We do not react to every signal instantly. We filter, contextualize, compare and then respond. APRO gives decentralized systems something similar. It is giving them the ability to slow down the noise and amplify what is meaningful. In this way, APRO is less of a pipeline and more of a perception layer, something that aligns neatly with how AI agents require structured understanding, not a flood of disconnected facts.
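The four stages named above can be sketched as a small pipeline. This is an illustration under stated assumptions, not APRO's code: the source names, the choice of the median as the aggregate and the shape of the final package are all hypothetical.

```python
import math
from statistics import median


def ingest(sources: dict) -> list:
    """Ingestion: collect raw (source, value) pairs exactly as reported."""
    return list(sources.items())


def cleanse(raw: list) -> list:
    """Cleansing: drop values that are missing, unparsable, non-finite or non-positive."""
    cleaned = []
    for source, value in raw:
        try:
            price = float(value)
        except (TypeError, ValueError):
            continue
        if math.isfinite(price) and price > 0:
            cleaned.append((source, price))
    return cleaned


def aggregate(cleaned: list):
    """Aggregation: reduce the surviving quotes to one value (median resists outliers)."""
    if not cleaned:
        return None
    return median(price for _, price in cleaned)


def orchestrate(cleaned: list, value) -> dict:
    """Orchestration: package the result with the context a consumer needs."""
    return {
        "price": value,
        "sources": [source for source, _ in cleaned],
        "count": len(cleaned),
    }


def run_pipeline(sources: dict) -> dict:
    cleaned = cleanse(ingest(sources))
    return orchestrate(cleaned, aggregate(cleaned))
```

Keeping each stage as its own function mirrors the point of the paragraph: a bad input is filtered out before it can influence the value that reaches the agent.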

Another layer worth exploring is how APRO anchors its results. After transmission, the data package also gets stored on decentralized systems like Greenfield or IPFS, depending on the integration. Storage is not an afterthought. It serves as an audit trail that allows independent users, regulators, institutions and downstream applications to verify what the oracle delivered and when it delivered it. The workflow is transparent, with steps such as checking data format, validating node signatures, confirming consensus thresholds and verifying timestamps. This transparency is not a feature for marketing. It is a requirement for the emerging era where AI-driven actions can influence large pools of capital, affect protocol health and shape user experiences in real time. Without the ability to verify these events, the ecosystem would struggle to build trust at scale.
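An auditor walking through those four steps might do something like the sketch below. The package layout, the node keys, the threshold of three and the sixty-second freshness window are assumptions chosen for illustration, and HMAC again stands in for real node signatures. Nothing here reflects APRO's actual storage format.

```python
import hashlib
import hmac

# Hypothetical node keys and policy values, for illustration only.
NODE_KEYS = {f"node-{i}": f"secret-{i}".encode() for i in range(4)}
THRESHOLD = 3
MAX_AGE_SECONDS = 60


def _message(value: float, timestamp: int) -> bytes:
    """The bytes every node signs: the value bound to its timestamp."""
    return f"{value}|{timestamp}".encode()


def sign_package(node_id: str, value: float, timestamp: int) -> str:
    return hmac.new(NODE_KEYS[node_id], _message(value, timestamp),
                    hashlib.sha256).hexdigest()


def audit(package: dict, now: int) -> list[str]:
    """Return the names of the checks that failed (an empty list means it passed)."""
    value = package.get("value")
    timestamp = package.get("timestamp")
    signatures = package.get("signatures")

    # Step 1: data format. Nothing else is meaningful if this fails.
    if not (isinstance(value, float) and isinstance(timestamp, int)
            and isinstance(signatures, dict)):
        return ["format"]

    failures = []

    # Step 2: node signatures. Count how many verify against known keys.
    valid = 0
    for node_id, signature in signatures.items():
        key = NODE_KEYS.get(node_id)
        if key is None or not isinstance(signature, str):
            continue
        expected = hmac.new(key, _message(value, timestamp),
                            hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected.encode(), signature.encode()):
            valid += 1
    if valid < len(signatures):
        failures.append("signature")

    # Step 3: consensus threshold. Enough valid signers must back the value.
    if valid < THRESHOLD:
        failures.append("threshold")

    # Step 4: timestamp. Reject packages from the future or older than the window.
    if timestamp > now or now - timestamp > MAX_AGE_SECONDS:
        failures.append("timestamp")

    return failures
```

Returning the list of failed checks, rather than a single boolean, gives the audit trail something specific to record when a package is rejected.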

As I continued to study APRO’s evolution, it became clear that this is not a protocol trying to ride the hype of AI. It is something built for a world where AI and blockchains converge into a single operational stack. The traditional oracle model struggled in this environment. It handled prices, maybe news, maybe a few ancillary datasets. APRO handles multidimensional signals. It interprets context. It understands relational meaning between events. It treats AI as a core consumer rather than an external integration. This shift is why APRO feels like ecosystem infrastructure rather than a product. It is building the foundation for a future where every agent, every autonomous system and every adaptive dapp relies on verified reality as a baseline.

The strategic advantage behind APRO becomes even clearer when you consider its dual growth engine. On one side, the technical DNA is strong. Multi-node consensus, verifiable randomness, contextual relevance and real-time capability form a robust architecture. On the other side, the ecosystem synergy is strong as well. APRO integrates with ATTPs, BNB Chain, DeepSeek and ElizaOS, aligning itself with platforms already pushing the next generation of AI tools. This synergy amplifies adoption. Instead of trying to create demand from scratch, APRO positions itself at the center of emerging flows. Developers are not forced to choose between isolated solutions. They adopt APRO because it simplifies complexity across all fronts.

One aspect that deserves more attention is how APRO is pushing into the Bitcoin ecosystem. This is not something many oracles attempted seriously. Bitcoin was always seen as slow, inflexible or resistant to external computation. Yet new layers like Babylon and Lightning, along with asset formats such as inscriptions and runes, have opened space for new kinds of applications. APRO’s tailored integration for Bitcoin gives builders a native data layer that other networks lacked for years. This is important because Bitcoin’s next chapter will not be passive. It will include marketplaces, staking layers, programmable features and agent-driven applications. APRO is preparing for that world early, giving it a first-mover advantage that is rarely easy to displace.

As I reflected on all these pieces, the bigger narrative emerged clearly. APRO is not trying to create a smarter oracle. It is trying to give decentralized systems something they have always lacked: perception. Without perception, blockchains operate mechanically, executing logic without understanding. With perception, they can partner with AI to build dynamic systems that learn, react and evolve. This is something almost every Web3 builder has imagined at some point, but until now, the infrastructure for that vision did not exist.

The depth of APRO’s ambition becomes even more apparent when you look at how it handles risk. High-leverage environments are unforgiving. A single incorrect data point can liquidate positions, trigger cascades and distort market signals. APRO mitigates this through redundant layers of truth. Consensus ensures agreement. AI ensures plausibility. Protocol verification ensures consistency. Storage ensures auditability. This layering approach creates a form of trust welding where blockchain certainty and AI intelligence reinforce each other. Trust is no longer something that must be assumed. It becomes something that is continuously reconstructed through visible processes.

The more I thought about it, the more APRO felt less like an incremental upgrade and more like the beginning of a generational shift. Every major leap in Web3 came when someone introduced an invisible layer that changed how everything above it behaved. Smart contracts did that. Rollups did that. Liquidity protocols did that. Now data intelligence is stepping into that role. When reliable, contextual and verified information becomes native to blockchains, entirely new categories of applications become possible. You get AI trading systems that understand panic cycles and liquidity flows. You get governance structures that detect manipulation attempts automatically. You get games that balance themselves. You get RWA markets that price assets based on real world conditions rather than static assumptions. You get agent societies that make decisions based on reality, not approximations of it.

For me, the most exciting aspect of APRO is how understated it is. The protocol is not selling spectacle. It is building fundamentals. It is doing quiet infrastructure work that becomes undeniably relevant the moment something goes wrong elsewhere. In a world increasingly shaped by autonomous systems, truth becomes the most valuable commodity. APRO is building a way to measure it, transport it, verify it and deliver it to the systems that need it most. It is a long-term play, and those are the plays that usually draw the most durable builders.

As a final personal note, what strikes me most is how APRO is rewriting the story of oracles without ever declaring that intention directly. It redefines the category through behavior rather than claims. It solves problems that were not acknowledged openly until AI made them impossible to ignore. It acts as a stabilizing layer during a time when the boundary between machine decision and human intention grows thinner every month. And it does all of this with an architecture that feels thoughtful, adaptable and built with an understanding of what the next decade of Web3 will require.

If this space is indeed moving toward intelligent coordination between blockchains and AI systems, then APRO is one of the first real steps toward that world. It gives decentralized applications a sense of awareness. It gives AI agents a grounding in truth. And it gives builders a foundation they can trust as they take on more ambitious ideas. In a landscape where most projects chase attention, APRO is quietly building the layer that everything else will eventually depend on. For anyone who thinks about the long arc of Web3, that is not just impressive. It is essential.