Decentralized storage has always promised freedom from centralized data silos, but in practice many networks have struggled to balance reliability, cost, and scalability. Replicating the same data across countless nodes works, but it is inefficient, expensive, and increasingly impractical as data volumes explode. Walrus Protocol emerges from this tension with a fundamentally different approach. Instead of copying everything everywhere, Walrus reimagines how data is encoded, distributed, verified, and recovered across a decentralized network.

Walrus is not just a storage network. It is a purpose-built system designed to handle large, high-value data blobs efficiently while maintaining strong guarantees around availability, integrity, and decentralization. Its architecture reflects a clear design philosophy: storage should be resilient without being wasteful, decentralized without being chaotic, and programmable without being fragile.

One of the most important distinctions of Walrus lies in how it treats the data itself. Traditional decentralized storage systems rely heavily on full replication: each node that stores a file keeps a complete copy of it, which simplifies recovery but massively inflates storage requirements. Walrus takes a more sophisticated path by relying on erasure coding, a technique borrowed from classical distributed storage systems and adapted for a decentralized environment.

When a file is uploaded to Walrus, it is not stored as a single object. Instead, it is mathematically transformed into multiple encoded fragments known as slivers, which are distributed across different storage nodes in the network. The key advantage is that the original file can be reconstructed from only a subset of these fragments. Even if several nodes go offline or lose their data, the system can still recover the full blob. This approach drastically reduces storage overhead while preserving reliability, making storage far more cost-effective at scale.
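To make the "any subset is enough" property concrete, here is a minimal sketch of a k-of-n erasure code in Python. It uses a textbook systematic Reed-Solomon-style construction over the prime field GF(257): each stripe of k bytes defines a polynomial, and each of the n slivers stores that polynomial's value at a different point, so any k slivers pin the polynomial down again. This is an illustration of the general technique, not Walrus's actual encoding; the function names and the parameters k = 4, n = 7 are hypothetical.

```python
# Illustrative k-of-n erasure code (systematic Reed-Solomon style over GF(257)).
# NOT Walrus's RedStuff encoding; names and parameters are hypothetical.
P = 257  # prime modulus: every byte value 0..255 is a field element


def _lagrange_eval(points, x):
    """Evaluate, at x, the unique polynomial of degree < len(points) through points (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # Fermat inverse of den
    return total


def encode(data: bytes, k: int, n: int) -> dict[int, list[int]]:
    """Split data into stripes of k bytes and produce n slivers (requires k <= n < P)."""
    padded = data + b"\x00" * (-len(data) % k)
    slivers = {x: [] for x in range(n)}
    for s in range(0, len(padded), k):
        stripe = [(x, padded[s + x]) for x in range(k)]  # slivers 0..k-1 hold raw bytes
        for x in range(n):
            slivers[x].append(stripe[x][1] if x < k else _lagrange_eval(stripe, x))
    return slivers


def decode(slivers: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from ANY k surviving slivers."""
    if len(slivers) < k:
        raise ValueError("not enough slivers to reconstruct the blob")
    xs = sorted(slivers)[:k]
    out = bytearray()
    for s in range(len(slivers[xs[0]])):
        points = [(x, slivers[x][s]) for x in xs]
        out.extend(_lagrange_eval(points, x) for x in range(k))
    return bytes(out[:length])


blob = b"hello walrus"
slivers = encode(blob, k=4, n=7)
for lost in (0, 2, 5):  # three of the seven nodes go offline
    del slivers[lost]
assert decode(slivers, k=4, length=len(blob)) == blob
```

Production codes use much faster field arithmetic than this O(k^2) interpolation per symbol, but the guarantee is the same: with n = 7 and k = 4, losing any three slivers is harmless, at 7/4 = 1.75x storage instead of the several full copies replication would need.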

Walrus builds on this foundation with its own encoding scheme, RedStuff. While erasure coding alone is powerful, RedStuff optimizes how fragments are created, placed, and reconstructed. The result is faster retrieval, lower reconstruction overhead, and stronger resilience to node churn. In decentralized systems, where nodes frequently join and leave, this resilience is not a luxury but a necessity. RedStuff allows Walrus to maintain performance and availability even under unpredictable network conditions.
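The paragraph above does not spell out how RedStuff works. Public Walrus material describes it as a two-dimensional erasure code, and the toy below illustrates only why a second dimension helps with churn: blocks are laid out in a grid with one XOR parity per row and one per column, so a lost block can be rebuilt from its row or, if that row has too many losses, from its column, touching only a small slice of the blob. It uses plain XOR parity and hypothetical function names; it is not RedStuff.

```python
from functools import reduce

# Toy 2D parity grid illustrating row/column repair. NOT RedStuff; names are hypothetical.


def xor(blocks):
    """XOR a list of equal-length byte strings together."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode_grid(grid):
    """Return (row_parity, col_parity) for a 2D grid of equal-length blocks."""
    row_parity = [xor(row) for row in grid]
    col_parity = [xor([row[c] for row in grid]) for c in range(len(grid[0]))]
    return row_parity, col_parity


def repair(grid, row_parity, col_parity, r, c):
    """Rebuild a missing cell (None) from its row if possible, else from its column."""
    row = grid[r]
    if sum(cell is None for cell in row) == 1:
        return xor([cell for cell in row if cell is not None] + [row_parity[r]])
    col = [grid[i][c] for i in range(len(grid))]
    if sum(cell is None for cell in col) == 1:
        return xor([cell for cell in col if cell is not None] + [col_parity[c]])
    raise ValueError("cell not recoverable with single parity per row and column")


grid = [[b"ab", b"cd", b"ef"],
        [b"gh", b"ij", b"kl"]]
row_p, col_p = encode_grid(grid)
damaged = [row[:] for row in grid]
damaged[0][0] = damaged[0][1] = None  # two losses in row 0: its row parity alone is not enough
assert repair(damaged, row_p, col_p, 0, 0) == b"ab"  # ...but column 0 still recovers the cell
```

The point of the second dimension is that repairing one lost block reads a single row or column rather than the whole blob, which keeps recovery cheap when nodes churn.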

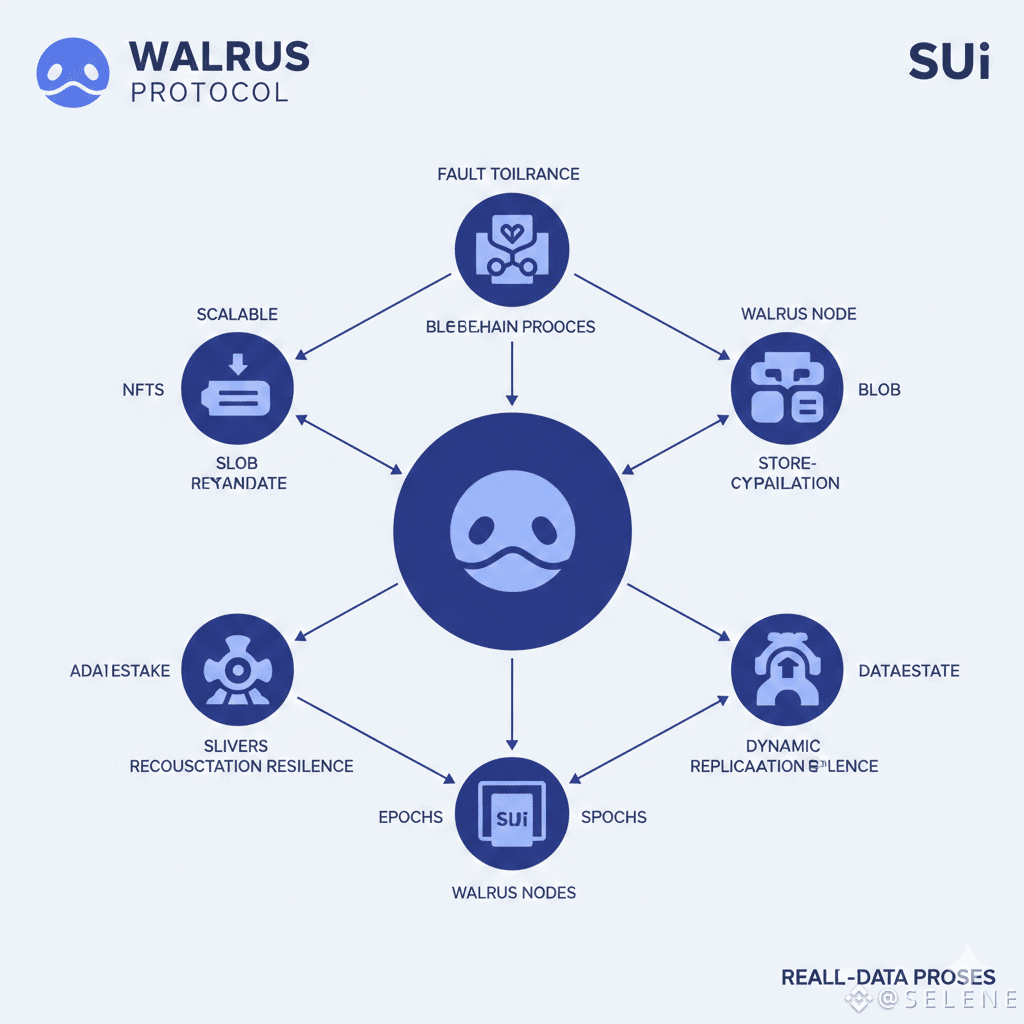

Beyond encoding, Walrus introduces a carefully designed lifecycle for every blob stored on the network. Storage is not a one-time event but an ongoing process governed by cryptographic proofs and blockchain coordination. When a blob is uploaded, its metadata is recorded on the blockchain, including a unique identifier, cryptographic commitments, and information about how the data has been encoded. The actual data remains off-chain, distributed across storage nodes, while the blockchain acts as the coordination and verification layer.
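As a rough sketch of how a small on-chain record can stand in for a large off-chain blob, the code below commits to every sliver with a Merkle root and derives a blob ID by hashing that root together with the encoding parameters. The SHA-256 choice, the record fields, and the ID derivation are assumptions for illustration, not Walrus's actual metadata format.

```python
import hashlib
import json

# Hypothetical derivation of on-chain metadata. The scheme name, fields, and the
# way the blob ID is computed are assumptions for illustration, not Walrus's format.


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Root of a binary Merkle tree over the leaves (last hash duplicated on odd levels)."""
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def blob_metadata(slivers: list[bytes], k: int, n: int, size: int) -> dict:
    """Small record that could live on-chain: commitment and encoding info, no blob data."""
    commitment = merkle_root(slivers)
    encoding = {"scheme": "toy-k-of-n", "k": k, "n": n, "size": size}
    encoded_params = json.dumps(encoding, sort_keys=True).encode()
    blob_id = sha256(commitment + encoded_params).hex()
    return {"blob_id": blob_id, "commitment": commitment.hex(), "encoding": encoding}


slivers = [b"sliver-%d" % i for i in range(7)]
meta = blob_metadata(slivers, k=4, n=7, size=12)
tampered = list(slivers)
tampered[3] = b"corrupted"
assert blob_metadata(tampered, k=4, n=7, size=12)["blob_id"] != meta["blob_id"]
```

Because the ID is derived from every sliver and from the encoding parameters, changing any of them yields a different ID, which is what lets a few hundred bytes of on-chain state vouch for an arbitrarily large blob.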

This separation is intentional. Storing large files directly on a blockchain would be prohibitively expensive and inefficient. By keeping only metadata and proofs on-chain, Walrus achieves transparency and verifiability without sacrificing performance or cost efficiency. Storage nodes are also required to periodically prove that they still hold their assigned slivers. These proofs ensure that data is not silently lost or corrupted and that nodes remain accountable for their responsibilities.
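The text does not specify Walrus's proof format. One generic way to check that a node still holds a sliver is a Merkle challenge-response: the commitment recorded at upload is a Merkle root over the slivers, a verifier picks an unpredictable index, and the node must return that sliver together with its authentication path. The sketch below assumes SHA-256 and uses hypothetical function names; it shows the general technique, not Walrus's protocol.

```python
import hashlib
import secrets

# Generic Merkle challenge-response; an illustrative stand-in, not Walrus's proof protocol.


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """Every level of a Merkle tree, from leaf hashes up to the single root."""
    levels = [[h(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur = cur + [cur[-1]]  # duplicate the last hash on odd-sized levels
        levels.append([h(cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def prove(leaves, index):
    """Storage node: answer a challenge with the leaf and its sibling hashes up the tree."""
    leaf, path = leaves[index], []
    for level in build_levels(leaves)[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        path.append(level[index ^ 1])  # sibling of the current node
        index //= 2
    return leaf, path


def verify(root, index, leaf, path):
    """Verifier: recompute the root, using the challenged index to order each hash pair."""
    acc = h(leaf)
    for sibling in path:
        acc = h(sibling + acc) if index % 2 else h(acc + sibling)
        index //= 2
    return acc == root


slivers = [b"sliver-%d" % i for i in range(5)]
root = build_levels(slivers)[-1][0]          # commitment recorded at upload time
challenge = secrets.randbelow(len(slivers))  # index the node cannot predict in advance
leaf, path = prove(slivers, challenge)
assert verify(root, challenge, leaf, path)
assert not verify(root, challenge, b"forged", path)
```

A node that has discarded a sliver cannot answer challenges that land on it, and because the verifier fixes the index, a proof for one sliver cannot be replayed as a proof for another.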

When a user or application requests a blob, Walrus orchestrates an efficient reconstruction process: the system identifies available slivers, retrieves them from the network, and reconstructs the original data with minimal latency. This process is designed to be seamless from the user’s perspective. Whether the data is an NFT asset, a decentralized website, an AI dataset, or archival blockchain data, retrieval feels reliable and predictable despite the underlying decentralization.
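The retrieval step can be sketched as a parallel fan-out: ask every node for its sliver at once, ignore nodes that fail, and stop as soon as k slivers have arrived, since any k are enough to decode. In the sketch below each "fetcher" is a plain Python callable standing in for a network request; the function names and the simulated nodes are hypothetical, and real Walrus clients implement their own retrieval logic.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

# Hypothetical retrieval fan-out: each fetcher stands in for a network call to one
# storage node. This only illustrates the "first k responses win" idea.


def gather_slivers(fetchers, k):
    """Request slivers from every node in parallel and stop once k have arrived."""
    collected = {}
    with ThreadPoolExecutor(max_workers=len(fetchers)) as pool:
        futures = {pool.submit(fetch): idx for idx, fetch in fetchers.items()}
        for fut in as_completed(futures):
            try:
                collected[futures[fut]] = fut.result()
            except Exception:
                continue  # offline or faulty node: keep waiting for the others
            if len(collected) == k:
                for other in futures:
                    other.cancel()  # best effort: drop requests that have not started
                break
    if len(collected) < k:
        raise RuntimeError(f"only {len(collected)} of {k} required slivers available")
    return collected


def online(i):
    return lambda: f"sliver-{i}".encode()


def offline():
    raise ConnectionError("node unreachable")


nodes = {i: online(i) for i in range(7)}
nodes[1] = nodes[4] = offline  # two nodes are down
got = gather_slivers(nodes, k=4)
assert len(got) == 4 and 1 not in got and 4 not in got
```

The collected slivers would then be handed to an erasure decoder. Racing all nodes and keeping the first k responses means one slow or dead node does not set the latency of the whole read.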

Fault tolerance is embedded in every layer of the Walrus architecture. The network is designed to survive significant node failures without losing data availability. Because reconstruction requires only a subset of slivers, Walrus can tolerate multiple simultaneous outages. Even in adverse conditions, the protocol can recover missing slivers and redistribute data across nodes to ensure long-term durability. This adaptability makes Walrus particularly well suited to real-world usage, where perfect uptime cannot be assumed.
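The trade-off between redundancy and fault tolerance can be made concrete with a little arithmetic. If each node is down independently with probability p, a k-of-n blob is readable when at least k slivers survive, which happens with probability equal to the sum over i from k to n of C(n, i)(1 - p)^i p^(n - i); r-way replication is the special case n = r, k = 1. The sketch below compares the two. The independence assumption and the parameters are illustrative, not Walrus's real configuration.

```python
from math import comb

# Availability arithmetic under a simplifying assumption: each node is down
# independently with probability p_down. Parameters below are illustrative only.


def availability(n: int, k: int, p_down: float) -> float:
    """Probability that at least k of n slivers are reachable."""
    p_up = 1 - p_down
    return sum(comb(n, i) * p_up**i * p_down**(n - i) for i in range(k, n + 1))


replicated = availability(3, 1, 0.1)  # 3 full copies: 3x storage, any 1 copy suffices
coded = availability(20, 10, 0.1)     # 10-of-20 code: 2x storage, any 10 slivers suffice
assert coded > replicated
```

With p = 0.1, three full replicas (3x storage) are readable with probability 0.999, while the 10-of-20 code (2x storage) fails only when 11 or more of its 20 nodes are down at once, which is far rarer. Correlated outages make both figures worse, so real deployments cannot rely on independence alone.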

Cryptographic guarantees further strengthen this resilience. Walrus relies on verifiable proofs of storage and availability, ensuring that nodes cannot falsely claim to store data they have lost. These proofs are enforced through on-chain logic, creating a trust-minimized environment where economic incentives and cryptography work together. Nodes that behave honestly are rewarded, while those that fail to meet their obligations face penalties. Over time, this creates a network that naturally favors reliability and performance.
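The reward-and-penalty loop can be sketched as an end-of-epoch settlement: nodes that answered enough storage challenges share a reward pool in proportion to their stake, and nodes below the threshold lose a fraction of their stake. The pass-rate threshold, slash rate, and stake-proportional split below are all hypothetical parameters chosen for illustration; the text does not state Walrus's actual rules.

```python
from dataclasses import dataclass

# Hypothetical epoch settlement. The pass-rate threshold, slash rate, and
# stake-proportional reward split are assumptions, not Walrus's actual parameters.


@dataclass
class Node:
    name: str
    stake: float
    passed: int      # storage challenges answered correctly this epoch
    challenges: int  # storage challenges issued this epoch


def settle_epoch(nodes, reward_pool, min_pass_rate=0.95, slash_rate=0.05):
    """Honest nodes split the pool by stake; nodes below the pass rate lose part of their stake."""
    honest = {n.name for n in nodes if n.passed >= min_pass_rate * n.challenges}
    honest_stake = sum(n.stake for n in nodes if n.name in honest)
    payouts = {}
    for n in nodes:
        if n.name in honest:
            payouts[n.name] = reward_pool * n.stake / honest_stake
        else:
            payouts[n.name] = -slash_rate * n.stake  # negative payout = penalty
    return payouts


nodes = [Node("a", 600, 100, 100), Node("b", 300, 98, 100), Node("c", 100, 40, 100)]
payouts = settle_epoch(nodes, reward_pool=90.0)
# a and b split the pool 2:1 by stake; c failed most challenges and is penalized
assert payouts["a"] > payouts["b"] > 0 > payouts["c"]
```

Tying both rewards and penalties to stake is what makes dishonesty expensive: a node cannot earn more by skipping storage, because failed challenges cost it more than it saves.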

Integration with the Sui blockchain plays a central role in making this system work. Sui acts as the coordination layer for Walrus, managing metadata, proof verification, node selection, and economic settlement. Smart contracts automate storage agreements, enforce availability checks, and distribute rewards. This tight integration ensures that storage operations are transparent, auditable, and programmable, opening the door to sophisticated decentralized applications that depend on persistent data.
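To show what "automating storage agreements" involves, here is a small state model of the kind of per-blob record a storage contract might keep: the blob counts as stored only after enough nodes acknowledge it, it stays active until a paid-for end epoch, and it can be extended with further payment. Sui smart contracts are written in Move; this Python model, its field names, and its flat per-epoch pricing are hypothetical and only mirror the lifecycle described in the text.

```python
from dataclasses import dataclass, field

# Hypothetical Python model of a per-blob storage agreement. Real Walrus contracts
# run on Sui (written in Move); these field and method names are illustrative only.


@dataclass
class StorageAgreement:
    blob_id: str
    end_epoch: int        # storage is paid through end_epoch - 1
    required_acks: int    # node confirmations needed before the blob counts as stored
    price_per_epoch: int  # hypothetical flat price in arbitrary units
    acks: set = field(default_factory=set)

    def acknowledge(self, node_id: str) -> None:
        """Record that a storage node confirmed it holds its assigned slivers."""
        self.acks.add(node_id)

    @property
    def certified(self) -> bool:
        return len(self.acks) >= self.required_acks

    def extend(self, epochs: int, payment: int) -> None:
        """Push the end epoch forward if the payment covers the extra epochs."""
        if payment < epochs * self.price_per_epoch:
            raise ValueError("insufficient payment")
        self.end_epoch += epochs

    def is_active(self, current_epoch: int) -> bool:
        """Available only once certified and before the agreement expires."""
        return self.certified and current_epoch < self.end_epoch


deal = StorageAgreement("blob-123", end_epoch=10, required_acks=5, price_per_epoch=2)
assert not deal.is_active(0)  # uploaded but not yet certified
for node in ["n1", "n2", "n3", "n4", "n5"]:
    deal.acknowledge(node)
assert deal.is_active(9) and not deal.is_active(10)
deal.extend(epochs=5, payment=10)
assert deal.is_active(14)
```

Keeping this state on-chain is what makes it auditable: any application can check whether a blob is certified and paid for without contacting a storage node.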

Rather than relying on static node assignments, Walrus coordinates nodes dynamically through an epoch-based system. Storage nodes participate in defined periods, called epochs, during which they are selected based on delegated stake, historical performance, and reliability metrics. This delegated proof-of-stake model aligns incentives across the network: token holders can delegate stake to nodes they trust, while nodes are motivated to maintain high uptime and data integrity to remain competitive.
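A per-epoch committee could be formed roughly as follows: drop nodes whose reliability score falls below a threshold, then split a fixed number of storage shards among the rest in proportion to their delegated stake, using the largest-remainder method so the shares add up exactly. The scoring threshold, shard count, and function name are hypothetical; the sketch illustrates stake-weighted selection in general, not Walrus's exact assignment rule.

```python
# Hypothetical epoch committee selection: filter by reliability score, then assign
# shards proportionally to stake with the largest-remainder method.


def assign_shards(stakes: dict, scores: dict, n_shards: int, min_score: float = 0.9) -> dict:
    """Split n_shards among eligible nodes in proportion to stake; totals are exact."""
    eligible = {node: s for node, s in stakes.items() if scores.get(node, 0.0) >= min_score}
    total = sum(eligible.values())
    if total == 0:
        raise ValueError("no eligible nodes for this epoch")
    quotas = {node: n_shards * s / total for node, s in eligible.items()}
    shares = {node: int(q) for node, q in quotas.items()}
    leftover = n_shards - sum(shares.values())
    # hand the remaining shards to the nodes with the largest fractional remainders
    by_remainder = sorted(quotas, key=lambda nd: quotas[nd] - shares[nd], reverse=True)
    for node in by_remainder[:leftover]:
        shares[node] += 1
    return shares


stakes = {"alpha": 500, "beta": 300, "gamma": 150, "delta": 50}
scores = {"alpha": 0.99, "beta": 0.97, "gamma": 0.95, "delta": 0.60}  # delta underperformed
shares = assign_shards(stakes, scores, n_shards=100)
assert sum(shares.values()) == 100 and "delta" not in shares
```

Rerunning this at every epoch boundary with fresh stakes and scores is what lets underperforming operators lose shards while reliable ones gain them, with no manual intervention.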

This dynamic selection process helps prevent centralization and stagnation. Poorly performing nodes can be replaced, while reliable operators are rewarded with greater responsibility and higher returns. Over time, the network evolves toward greater efficiency and robustness without requiring manual intervention or centralized oversight.

From a developer’s perspective, Walrus is designed to be approachable rather than intimidating. Decentralized storage often suffers from poor tooling and steep learning curves, limiting adoption beyond niche use cases. Walrus addresses this by offering command-line tools, software development kits, and standard APIs that abstract away much of the complexity. Developers can upload, retrieve, and manage blobs without needing deep expertise in distributed systems.

This ease of integration makes Walrus attractive not only to Web3-native projects but also to traditional applications exploring decentralized infrastructure. Whether a team is building NFT platforms, decentralized social media, AI pipelines, or long-term data archives, Walrus provides a storage layer that feels modern, reliable, and scalable.

What ultimately sets Walrus apart is how its technical choices reinforce each other. Efficient encoding reduces storage costs. Blockchain coordination ensures transparency and accountability. Economic incentives promote honest behavior. Dynamic node management enhances resilience. Together, these components form a cohesive architecture rather than a patchwork of features.

In a landscape where many decentralized storage solutions still rely on brute-force replication and optimistic assumptions, Walrus represents a more mature approach. It acknowledges the realities of large-scale data, unpredictable networks, and economic incentives, and designs around them rather than ignoring them.

As decentralized applications continue to grow in complexity and data intensity, the need for robust storage infrastructure will only increase. Walrus Protocol positions itself as a foundational layer for this future, offering a system where data is not only decentralized but also efficient, verifiable, and resilient by design.