When I look at Walrus today, I keep noticing a strange disconnect. Development keeps moving forward, documentation expands, features ship, yet the token itself often feels quiet. I think that happens because most people still look at WAL as if it were just another storage token. But Walrus is not really about storage alone. It is about how data moves through a system, who handles it in the middle, and where reliability actually breaks or holds under pressure.

Right now WAL trades far below the levels many people remember from 2025. The drawdown is obvious, and it naturally raises doubt. But the more time I spend reading through how Walrus actually works, the clearer it becomes that price action is lagging behind understanding. The market is still trying to value a concept, while Walrus is quietly building a workflow business.

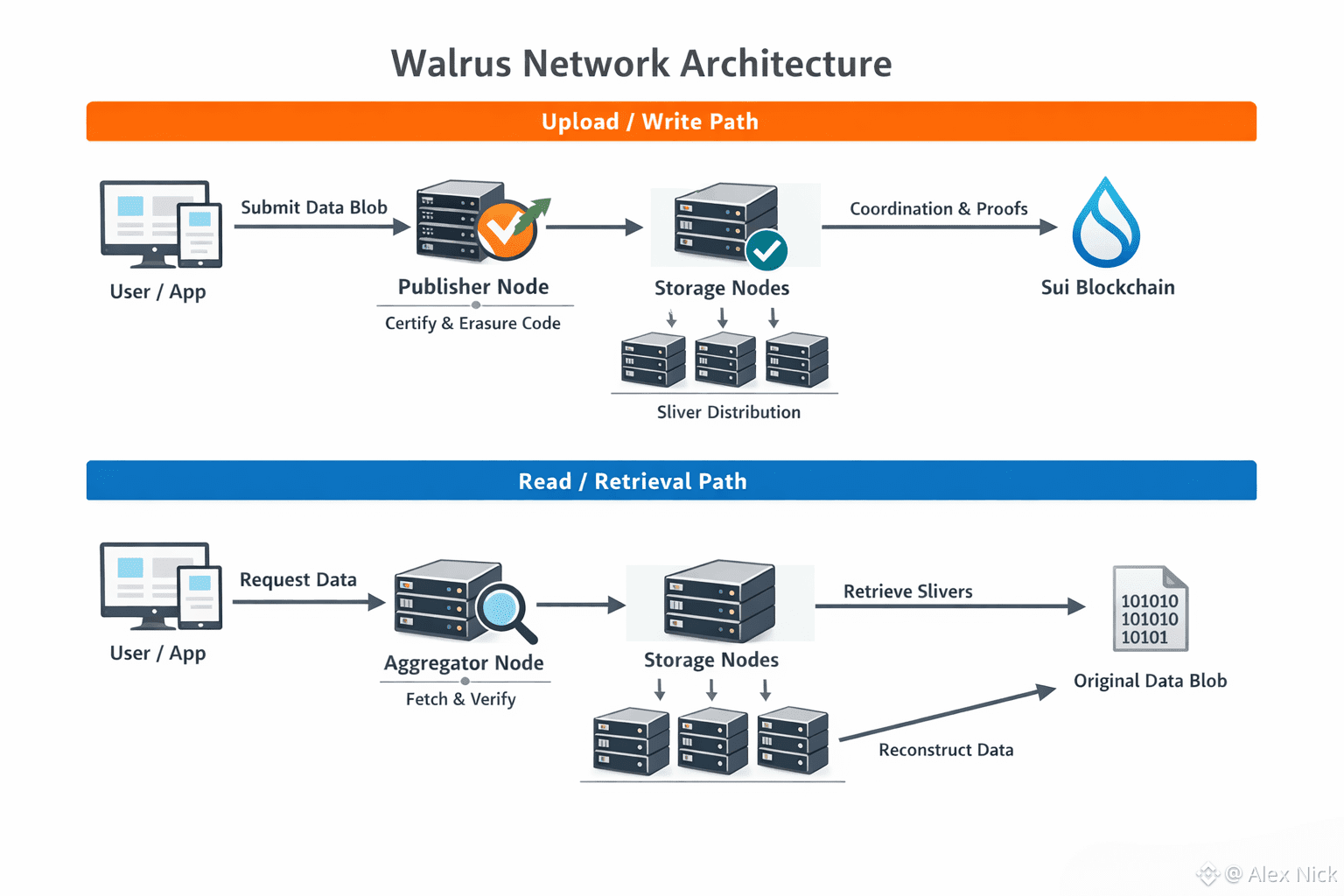

Walrus is not designed to store files the way most decentralized networks do. It does not simply copy full data across machines and hope redundancy solves everything. Instead, large files are broken into encoded pieces called slivers. Those slivers are distributed across storage nodes so that the original file can be reconstructed even if a large portion of them disappears. This matters because each node holds only a fraction of the file rather than a full copy, which lowers cost while preserving availability. From a usage standpoint, that is the difference between something idealistic and something usable.
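
To make the sliver idea concrete, here is a toy sketch in Python. It is not Walrus's actual encoding, which tolerates far more loss than this. It is a single XOR parity scheme I am using only to show the principle: split a file into pieces, add a little encoded redundancy, and rebuild the file when a piece goes missing. The function names `encode` and `reconstruct` are my own, not anything from the Walrus codebase.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size chunks and append one XOR parity chunk."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    chunks = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]


def reconstruct(slivers: List[Optional[bytes]], length: int) -> bytes:
    """Rebuild the original data when at most one sliver is missing (None)."""
    missing = [i for i, s in enumerate(slivers) if s is None]
    if len(missing) > 1:
        raise ValueError("this toy scheme tolerates only one missing sliver")
    repaired = list(slivers)
    if missing:
        present = [s for s in slivers if s is not None]
        # XOR of all surviving slivers equals the missing one.
        repaired[missing[0]] = reduce(xor_bytes, present)
    # Drop the parity chunk and the padding.
    return b"".join(repaired[:-1])[:length]


original = b"hello walrus slivers"
slivers = encode(original, k=4)
slivers[2] = None  # simulate one storage node going offline
recovered = reconstruct(slivers, len(original))
```

With four data slivers and one parity sliver, the network stores about 1.25 times the file size in total, whereas keeping even one extra full copy would cost 2 times. That gap is the cost argument in miniature.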

What caught my attention is that users and applications rarely interact directly with these storage nodes. That part gets overlooked constantly. The actual experience lives in the layers above them. Walrus introduces two critical actors in between. Publishers handle writes. Aggregators handle reads.

When someone uploads data, the publisher manages certification, encoding, and onchain coordination through Sui. When someone retrieves data, the aggregator is responsible for serving it back correctly, quickly, and consistently. That is where latency lives. That is where reliability is felt. That is where users decide whether the product works or not.

I think this is the piece most traders miss. Storage nodes are warehouses. Publishers are intake points. Aggregators are the delivery system. If delivery fails, nobody cares how good the warehouse looks.
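
The warehouse, intake, and delivery roles can be sketched as a small in-memory model. Everything here is hypothetical and simplified: the class names, the plain dictionary standing in for onchain coordination, and the use of a SHA-256 hash as a blob ID are my assumptions for illustration, not how Walrus implements them. The split below also has no redundancy at all. The only point is that the application touches the publisher and the aggregator, never the storage nodes.

```python
import hashlib
from typing import Dict, List


class StorageNode:
    """Warehouse: holds pieces of blobs, knows nothing about applications."""

    def __init__(self) -> None:
        self.slivers: Dict[str, bytes] = {}


class Publisher:
    """Intake point: splits data, places pieces on nodes, registers the blob."""

    def __init__(self, nodes: List[StorageNode], registry: Dict[str, int]) -> None:
        self.nodes = nodes
        self.registry = registry  # stand-in for onchain coordination (assumption)

    def write(self, data: bytes) -> str:
        blob_id = hashlib.sha256(data).hexdigest()  # hypothetical ID scheme
        size = -(-len(data) // len(self.nodes))  # ceiling division
        for i, node in enumerate(self.nodes):
            node.slivers[blob_id] = data[i * size:(i + 1) * size]
        self.registry[blob_id] = len(data)
        return blob_id


class Aggregator:
    """Delivery system: collects pieces from nodes and serves the full blob."""

    def __init__(self, nodes: List[StorageNode], registry: Dict[str, int]) -> None:
        self.nodes = nodes
        self.registry = registry

    def read(self, blob_id: str) -> bytes:
        if blob_id not in self.registry:
            raise KeyError(f"unknown blob: {blob_id}")
        data = b"".join(node.slivers[blob_id] for node in self.nodes)
        if hashlib.sha256(data).hexdigest() != blob_id:
            raise ValueError("reassembled data does not match blob ID")
        return data


nodes = [StorageNode() for _ in range(3)]
registry: Dict[str, int] = {}
publisher = Publisher(nodes, registry)
aggregator = Aggregator(nodes, registry)

payload = b"onchain website bundle"
blob_id = publisher.write(payload)
served = aggregator.read(blob_id)
```

In this model, every read passes through `Aggregator.read`, which is why a slow or unreliable aggregator would be felt by users even when every node behind it is healthy.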

From the outside, decentralized storage often gets framed as a battle of replication math. But in reality, user trust forms at the read layer. If retrieval is slow or inconsistent, builders lose confidence. They do not complain loudly. They simply stop using it. That is why aggregators matter far more than they appear to on paper.

Walrus documentation is actually very open about this. It tracks aggregator availability, caching behavior, and deployment status. That may sound boring, but caching is not a minor detail. Caching is what turns storage into something closer to a content delivery network. Without it, even perfect encoding cannot create a smooth experience.
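
To show why caching changes the read experience, here is a minimal sketch of a least-recently-used cache sitting in front of a slower backend fetch. The `CachingReader` class and its `fetch` callback are hypothetical names of mine. I am not claiming this is how any real Walrus aggregator caches, only that the general pattern looks something like this.

```python
from collections import OrderedDict
from typing import Callable, Dict


class CachingReader:
    """Serve repeat reads from memory and evict the least recently used blob."""

    def __init__(self, fetch: Callable[[str], bytes], capacity: int = 2) -> None:
        self.fetch = fetch  # slow path, e.g. reassembling a blob from nodes
        self.capacity = capacity
        self.cache: "OrderedDict[str, bytes]" = OrderedDict()
        self.backend_reads = 0

    def read(self, blob_id: str) -> bytes:
        if blob_id in self.cache:
            self.cache.move_to_end(blob_id)  # mark as most recently used
            return self.cache[blob_id]
        data = self.fetch(blob_id)
        self.backend_reads += 1
        self.cache[blob_id] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # drop the least recently used entry
        return data


# A dictionary stands in for the storage network in this example.
backend: Dict[str, bytes] = {"a": b"alpha", "b": b"beta", "c": b"gamma"}
reader = CachingReader(backend.__getitem__, capacity=2)

reader.read("a")
reader.read("a")  # served from cache, no backend read
reads_after_repeat = reader.backend_reads
```

Popular blobs stay hot and skip the reconstruction path entirely, which is the content delivery network behavior described above. The trade-off is that cache size and eviction policy become part of what determines perceived speed.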

This is where Walrus starts to feel different from earlier storage experiments. The system does not assume decentralization automatically equals usability. It accepts that performance must exist at the edge. Aggregators become the interface between raw protocol design and real application behavior.

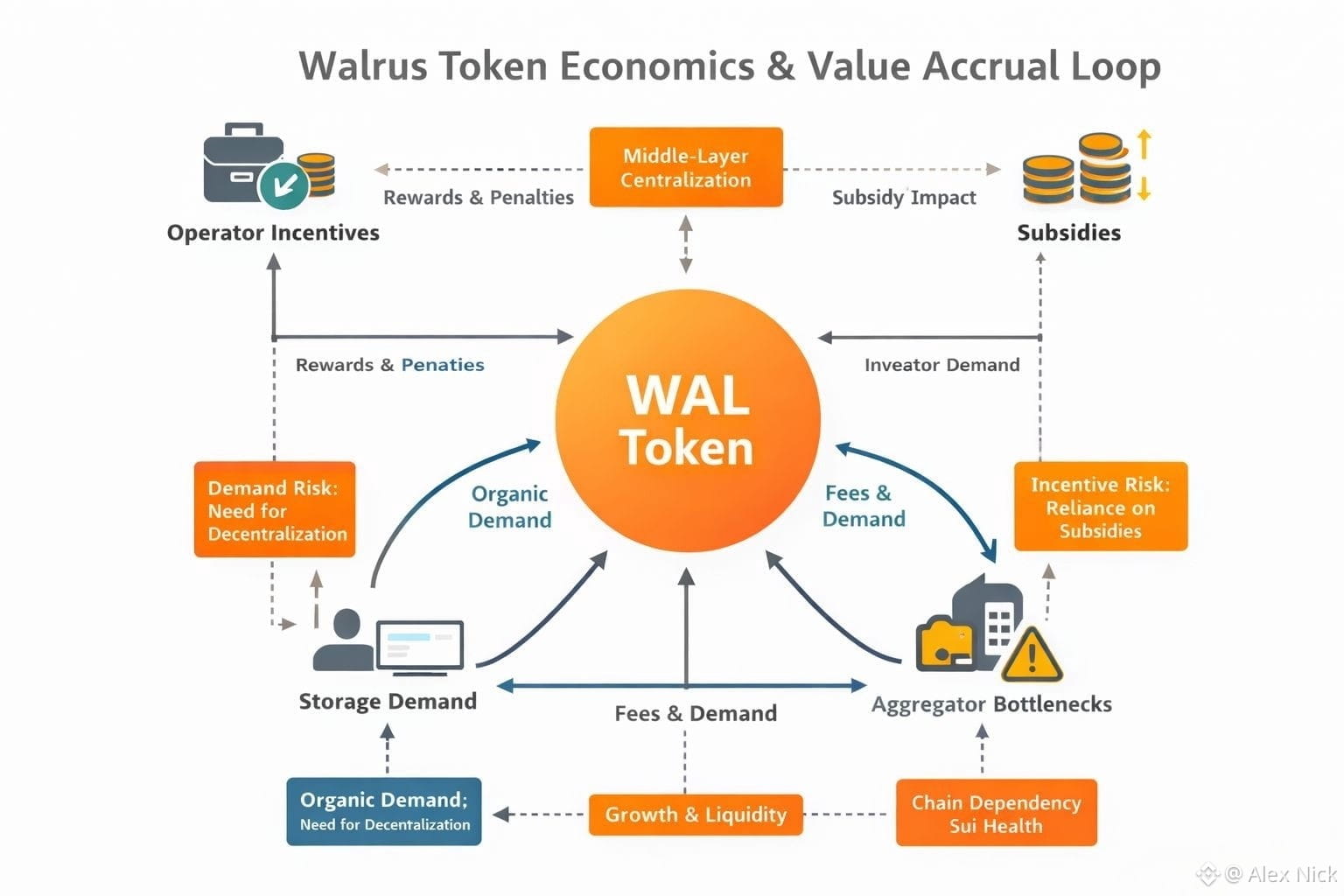

From a token perspective, this has real implications. WAL is not just paying for disk space. It secures node behavior, influences committee selection, and supports the incentive structure that keeps publishers and aggregators online. Early on, Walrus has leaned into subsidies to accelerate adoption. That is not hidden. It is stated clearly. Storage prices are intentionally supported while the network matures.

I see that as a double-edged phase. Subsidies help bootstrap usage, but they also hide whether real demand exists yet. Eventually, builders must keep paying not because incentives exist, but because the product solves a problem better than alternatives. That transition is where most infrastructure projects either graduate or stall.

The dependency on Sui is another factor that deserves honesty. Walrus coordination lives there. That gives it strong composability within the Sui ecosystem, but it also ties its health to Sui’s broader momentum. If Sui activity grows, Walrus benefits. If Sui slows, Walrus feels it. That correlation will matter during market stress, whether people want to admit it or not.

Still, the long-term idea makes sense to me. Applications are becoming heavier. AI datasets, onchain websites, media archives, and historical state do not fit neatly inside blockchains. Someone has to hold that data without turning decentralization into a cost nightmare. Walrus is trying to sit exactly in that gap.

From a trading lens, the questions are not abstract. I would not ask whether decentralized storage is inevitable. I would ask whether aggregators are improving in number, quality, and independence. I would watch whether read speeds stabilize enough that builders stop thinking about storage at all. I would watch whether incentives begin to normalize instead of requiring constant tuning.

If Walrus reaches a point where applications treat it as default infrastructure, WAL begins to act like a metered commodity. Storage usage becomes recurring. Node participation becomes competitive. Fees and staking demand start to matter more than announcements.

The upside case is not dramatic storytelling. It is boring repetition. Data written. Data read. Again and again. The downside case is also quiet. Usage stays shallow. Aggregators consolidate. Subsidies mask fragility. Over time, attention fades.

What keeps me interested is that Walrus is not pretending this problem is simple. The architecture openly acknowledges where friction lives. It does not hide the middle layer. It builds around it.

If this system works, WAL will not rally because people suddenly fall in love with storage. It will move because applications rely on it day after day, without thinking about it.