A few months back, I was testing an AI-driven trading bot across a couple of chains. Nothing ambitious. Just ingesting market data, running simple prediction logic, and firing off small trades. I’ve been around infrastructure tokens long enough that I know the usual pain points, but this setup kept running into the same walls. Data lived in pieces. I’d compress things off-chain to keep costs down, then struggle to query that data fast enough for real-time logic once the network got busy. What should’ve been seconds stretched into minutes during peaks. Fees didn’t explode, but they stacked up enough to make me pause and wonder whether on-chain AI was actually usable outside demos and narratives. It worked, technically, but the friction was obvious.

That experience highlights a wider issue in blockchain design. Most networks weren’t built with AI workloads in mind. Developers end up stitching together storage layers, compute layers, and settlement layers that don’t really talk to each other. The result is apps that promise intelligence but feel clunky in practice. Vector embeddings cost too much to store. Retrieval slows under congestion. Reasoning happens off-chain, which introduces trust assumptions and lag. It’s not just a speed problem. It’s the constant overhead of making AI feel native when the chain treats it as an add-on rather than a core feature. That’s fine for experiments, but it breaks down when you want something people actually use, like payments, games, or asset management.

It reminds me of early cloud storage, before object stores matured. You could dump files anywhere, but querying or analyzing them meant building extra layers on top. Simple tasks turned into engineering projects. Adoption didn’t really take off until storage and compute felt integrated rather than bolted together.

Looking at projects trying to solve this properly, #Vanar stands out because it starts from a different assumption. It’s built as an AI-first layer one, not a general-purpose chain with AI slapped on later. The design leans into modularity, so intelligence can live inside the protocol without bloating the base layer. Instead of chasing every DeFi trend, it focuses on areas where context-aware processing actually matters, like real-world assets, payments, and entertainment. That narrower scope matters: by avoiding unrelated features, the network stays lean, which helps maintain consistent performance for AI-heavy applications. For developers coming from Web2, that makes integration less painful. You don’t have to redesign everything just to add reasoning or data intelligence.

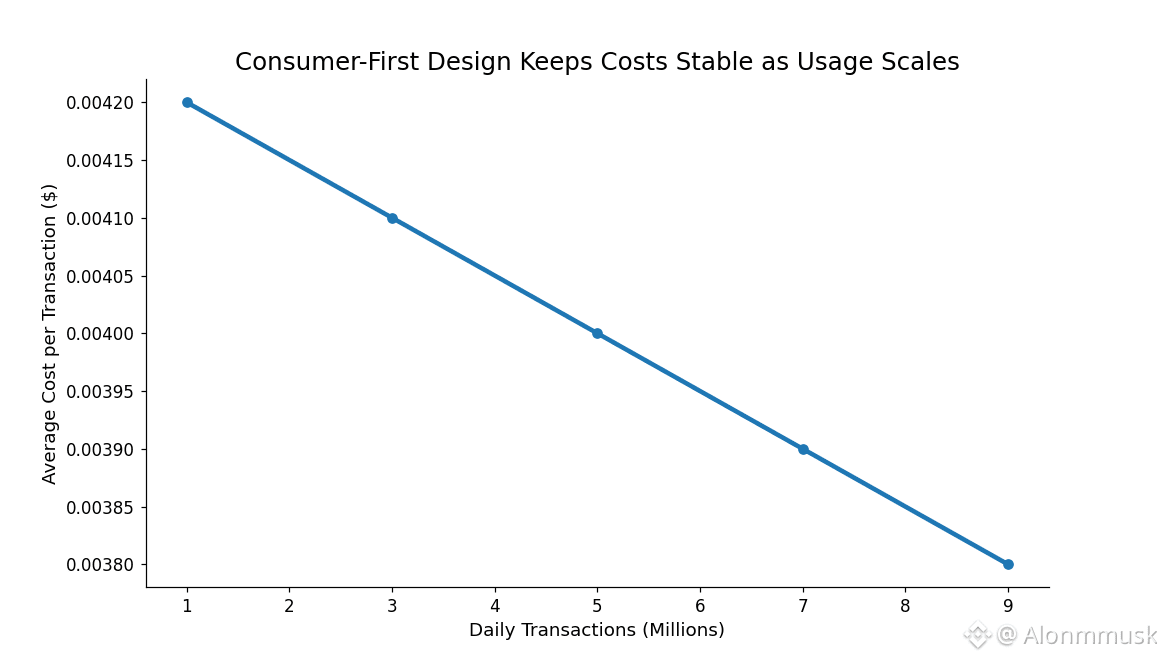

The clearest example is Neutron, the compression layer. It converts raw documents and metadata into what the network calls “Semantic Seeds.” These are compact, meaning-preserving objects that live on-chain. Rather than storing full files, Neutron keeps the relationships and intent, cutting storage requirements by an order of magnitude in many cases. In practice, that directly lowers costs for apps dealing with legal records, financial documents, or in-game state data. It’s not flashy, but it’s practical.
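Neutron’s internals aren’t spelled out here, so the following is only a toy Python sketch of the general idea: keep a small set of fields that carry a document’s meaning, plus a SHA-256 digest of the full original so it can be verified later. The `Seed` type, `make_seed`, `verifies`, and the `key: value` parsing are all hypothetical and are not Neutron’s actual format or algorithm.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Seed:
    """Hypothetical compact record: a few meaningful fields plus a digest of the source."""
    fields: dict
    source_digest: str  # SHA-256 of the full document, so the original can be checked later

    def to_bytes(self) -> bytes:
        # Canonical JSON so the same seed always serializes to the same bytes.
        payload = {"fields": self.fields, "digest": self.source_digest}
        return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def make_seed(document: str, keep: tuple) -> Seed:
    """Toy extraction: parse 'key: value' lines and keep only the requested keys."""
    fields = {}
    for line in document.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() in keep:
            fields[key.strip()] = value.strip()
    digest = hashlib.sha256(document.encode()).hexdigest()
    return Seed(fields, digest)


def verifies(seed: Seed, document: str) -> bool:
    """Check that a full document matches the digest stored in the seed."""
    return hashlib.sha256(document.encode()).hexdigest() == seed.source_digest


# Usage: a boilerplate-heavy contract where only three fields carry the meaning.
doc = "party: Alice\ncounterparty: Bob\namount: 250000 USD\n" + "clause: standard boilerplate text\n" * 200
seed = make_seed(doc, keep=("party", "counterparty", "amount"))
ratio = len(doc.encode()) / len(seed.to_bytes())
print(f"{len(doc.encode())} bytes -> {len(seed.to_bytes())} bytes (~{ratio:.0f}x smaller)")
```

For this made-up, boilerplate-heavy contract the seed comes out well over ten times smaller than the source. Real savings would depend entirely on how much of a document is redundant, and a digest only proves integrity; it can’t reconstruct what was dropped.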

On top of that sits Kayon, the deterministic reasoning engine. Instead of pushing logic off-chain to oracles or APIs, Kayon runs inference inside the protocol. That means compliance checks, pattern detection, or simple predictions can execute on-chain with verifiable outcomes. Everything flows through consensus, so the same inputs always produce the same results. The trade-off is obvious: you don’t get the unlimited flexibility or raw throughput of a general-purpose chain. But for targeted use cases, especially ones that care about consistency and auditability, that constraint is a feature rather than a bug.
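Kayon’s actual rule format isn’t described here, so this Python sketch only illustrates the property the paragraph relies on: a pure check over canonical inputs, re-executed by every node, produces byte-identical results that nodes can compare by hash. I model the “inference” as a simple rule check; `RULES`, `evaluate`, `commitment`, and `validators_agree` are hypothetical names, and the thresholds are made up.

```python
import hashlib
import json

# Hypothetical rule set; thresholds and jurisdiction codes are made up for illustration.
RULES = {"max_amount": 1_000_000, "blocked_jurisdictions": ["XX"]}


def evaluate(tx: dict, rules: dict) -> dict:
    """Pure, deterministic check: the same tx and rules always give the same verdict."""
    reasons = []
    if tx["amount"] > rules["max_amount"]:  # integers only, no floating-point drift
        reasons.append("amount_over_limit")
    if tx["jurisdiction"] in rules["blocked_jurisdictions"]:
        reasons.append("blocked_jurisdiction")
    return {"tx_id": tx["id"], "approved": not reasons, "reasons": reasons}


def commitment(result: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so every node serializes identically.
    canonical = json.dumps(result, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def validators_agree(tx: dict, rules: dict, n_validators: int = 4) -> bool:
    # Each simulated validator re-executes the check; agreement means identical commitments.
    digests = {commitment(evaluate(tx, rules)) for _ in range(n_validators)}
    return len(digests) == 1


# Usage: an oversized transfer is rejected the same way by every simulated node.
tx = {"id": "t1", "amount": 2_000_000, "jurisdiction": "AE"}
print(evaluate(tx, RULES), validators_agree(tx, RULES))
```

The design choice that matters is avoiding anything nondeterministic (wall-clock time, randomness, floats whose rounding could differ) inside `evaluate`; otherwise honest nodes could disagree.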

VANRY itself doesn’t try to be clever. It’s the gas token for transactions and execution, including AI-related operations like querying Seeds or running Kayon logic. Validators stake it to produce blocks and earn rewards tied to actual network activity. After the V23 upgrade in early 2026, staking parameters were adjusted to bring more nodes online, pushing participation up by roughly 35 percent to around 18,000. Fees feed into a burn mechanism similar in spirit to EIP-1559, so usage directly affects supply dynamics. Governance is handled through held or staked $VANRY, covering things like protocol upgrades and the shift toward subscription-based access for AI tools. It’s functional, not decorative.
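The text only says the burn is “similar in spirit to EIP-1559,” so this Python sketch uses Ethereum’s published EIP-1559 base-fee rule, not Vanar’s parameters, to show how usage feeds into supply: the base fee rises when blocks run above a gas target, falls when they run below it, and the base-fee portion of every transaction is burned. The starting fee, gas target, and function names are my own illustrative assumptions.

```python
# EIP-1559 constant; Vanar's own parameters are not given in the text.
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8


def next_base_fee(base_fee: int, gas_used: int, gas_target: int) -> int:
    """EIP-1559 update rule: move the base fee toward the target by at most 1/8 per block."""
    if gas_used == gas_target:
        return base_fee
    if gas_used > gas_target:
        delta = base_fee * (gas_used - gas_target) // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR
        return base_fee + max(delta, 1)  # congestion always raises the fee by at least 1 unit
    delta = base_fee * (gas_target - gas_used) // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR
    return base_fee - delta


def burned_in_block(base_fee: int, gas_used: int) -> int:
    """The base-fee portion of every transaction is destroyed, not paid to validators."""
    return base_fee * gas_used


# Usage: three full blocks in a row (gas_used = 2x target) raise the fee ~12.5% each time.
fee, target = 1_000, 15_000_000
total_burned = 0
for _ in range(3):
    total_burned += burned_in_block(fee, 2 * target)
    fee = next_base_fee(fee, 2 * target, target)
print(fee, total_burned)
```

Sustained demand compounds twice here: each block burns more gas, and it burns it at a higher base fee, which is why usage rather than announcements drives supply in this kind of design.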

From a market perspective, the numbers are still modest. Circulating supply sits north of two billion tokens. Market cap hovers around $14 million, with daily volume near $7 million. That’s liquid enough to trade, but far from overheated.
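As a quick sanity check on those figures, this Python snippet works out the implied token price and daily turnover. The inputs are the rounded numbers from the paragraph above; since supply is only given as a lower bound, the implied price is an upper bound.

```python
# Figures rounded from the text; supply is a lower bound ("north of two billion").
circulating_supply = 2_000_000_000  # VANRY
market_cap_usd = 14_000_000
daily_volume_usd = 7_000_000

# Because supply is a lower bound, this price is an upper bound.
implied_price_usd = market_cap_usd / circulating_supply
# Daily volume as a fraction of market cap: a rough liquidity/turnover gauge.
turnover = daily_volume_usd / market_cap_usd

print(f"implied price <= ${implied_price_usd:.4f}, daily turnover = {turnover:.0%}")
```

Half the market cap changing hands each day is why the token reads as liquid; it says nothing by itself about on-chain usage.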

Short term, price action is still narrative-driven. The AI stack launch in mid-January 2026 pulled attention back to the chain and sparked brief volatility. Partnerships, like the GraphAI integration for on-chain querying, have triggered quick 10 to 20 percent moves before fading. That kind of behavior is familiar. It’s news-led, and it cools off fast if broader AI sentiment shifts or unlocks add supply.

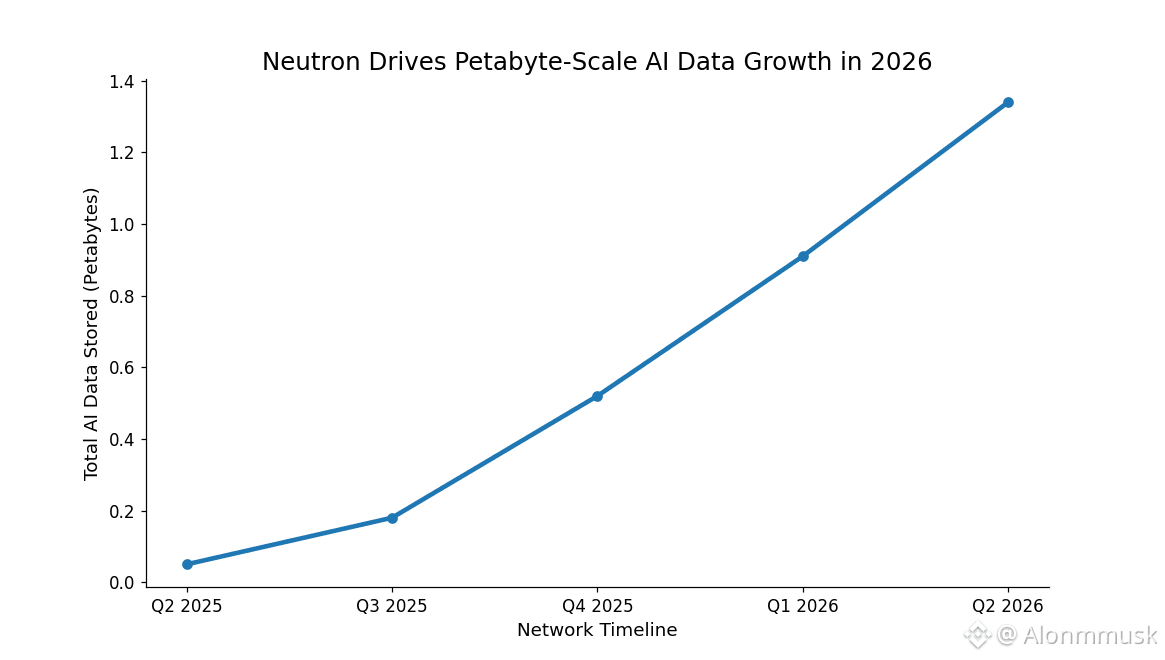

Longer term, the story hinges on usage habits. If daily transactions really do hold above nine million post-V23, and if applications like World of Dypians, with its tens of thousands of active players, continue to build sticky activity, then fee demand and burns start to matter. The reported petabyte-scale growth in AI data storage through Neutron in 2026 is more interesting than price spikes, especially if that storage is tied to real projects, like tokenized assets in regions such as Dubai, where values north of $200 million are being discussed. That’s where infrastructure value compounds quietly, through repeat usage rather than hype.

There are plenty of risks alongside that. Competition is intense. Networks like Bittensor dominate decentralized AI compute, while chains like Solana pull developers with speed and massive ecosystems. Vanar’s focus could end up being a strength, or it could box it into a niche if broader platforms absorb similar features. Regulatory pressure around AI and finance is another wildcard. And on the technical side, there’s always the risk of failure under stress. A bad compression edge case during a high-volume RWA event could corrupt Semantic Seeds, break downstream queries, and cascade into contract failures. Trust in systems like this is fragile. Once shaken, it’s hard to rebuild. There’s also the open question of incentives. Petabyte-scale storage sounds impressive, but if usage flattens, burns may not keep pace with emissions.
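The burns-versus-emissions question comes down to simple arithmetic. This Python sketch assumes a fixed share of each fee is burned and a flat daily emission to stakers; only the nine-million-transactions figure comes from the text, and the emission, average fee, and burn share are hypothetical placeholders, not Vanar’s actual numbers.

```python
def daily_burn(daily_txs: int, avg_fee: float, burn_share: float) -> float:
    """Tokens destroyed per day if a fixed share of every fee is burned."""
    return daily_txs * avg_fee * burn_share


def net_supply_change(emission: float, daily_txs: int, avg_fee: float, burn_share: float) -> float:
    """Positive means supply still grows; negative means burns outpace emissions."""
    return emission - daily_burn(daily_txs, avg_fee, burn_share)


def breakeven_txs(emission: float, avg_fee: float, burn_share: float) -> float:
    """Daily transactions needed for burns to exactly offset emissions."""
    return emission / (avg_fee * burn_share)


# Usage: 9M tx/day is the figure from the text; every other input is made up.
EMISSION_PER_DAY = 10_000.0  # hypothetical VANRY issued to stakers per day
AVG_FEE = 0.001              # hypothetical average fee per transaction, in VANRY
BURN_SHARE = 0.5             # hypothetical fraction of each fee that is burned

print(net_supply_change(EMISSION_PER_DAY, 9_000_000, AVG_FEE, BURN_SHARE))
print(breakeven_txs(EMISSION_PER_DAY, AVG_FEE, BURN_SHARE))
```

With these made-up inputs, about 4,500 tokens are burned against 10,000 emitted, and break-even would need roughly 20 million transactions a day. The point isn’t the numbers; it’s that flat usage leaves the emission side winning.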

Stepping back, this feels like infrastructure still in the proving phase. Adoption doesn’t show up in a single metric or announcement. It shows up in repeat behavior. Second transactions. Third integrations. Developers coming back because things actually work. Whether Vanar’s AI-native approach earns that kind of stickiness is something only time will answer.

@Vanarchain #Vanar $VANRY