Last month I was trying to set up a simple on-chain AI agent. Nothing serious. Just a basic trading bot to track sentiment from crypto feeds and flag changes. It was late and I had already spent too much time on it when things started breaking down. The off-chain oracle handling the AI inference kept timing out. Fees jumped around at the same time, so what should have been a quick test turned into retries, waiting, and wasted gas. That moment stuck with me. It felt like the blockchain side and the AI side were not really talking to each other, and I was stuck patching the gap in real time.

That kind of friction shows up fast when you are actually building or using these systems. It is not about theory or whitepapers. It is about not knowing if your transaction will go through cleanly or if costs will suddenly spike because something external slows down. Reliability turns into a constant question. Will this hold under load, or am I going to pay more just to get a response? The UX usually does not help. You bounce between wallets, APIs, loading screens, and waiting prompts, and the momentum dies. Speed is inconsistent, especially when real world data or extra computation is involved. After a while, you start asking why so much of this still depends on external services that introduce delays and extra cost.

It feels like trying to stream something heavy on an old connection. The content exists, but the connection keeps choking. Buffers, pauses, frustration, until you stop caring. That is what happens when current blockchains try to handle AI workloads. They are fine with transfers and swaps, but once you add persistent state or AI agents that need memory and inference, things start to fall apart.

This is where Vanar Chain tries to position itself. Not as a fix for everything, but as a different foundation. Looking at their recent direction and the 2026 roadmap, the focus is clearly on pushing AI tooling directly into the chain instead of layering it on later. The idea is to keep inference and memory on-chain so you are not constantly calling out to other systems. That removes some latency and makes usage feel less fragile. For builders, the appeal is simple. If everything runs in one place, costs are easier to predict and things break less often.

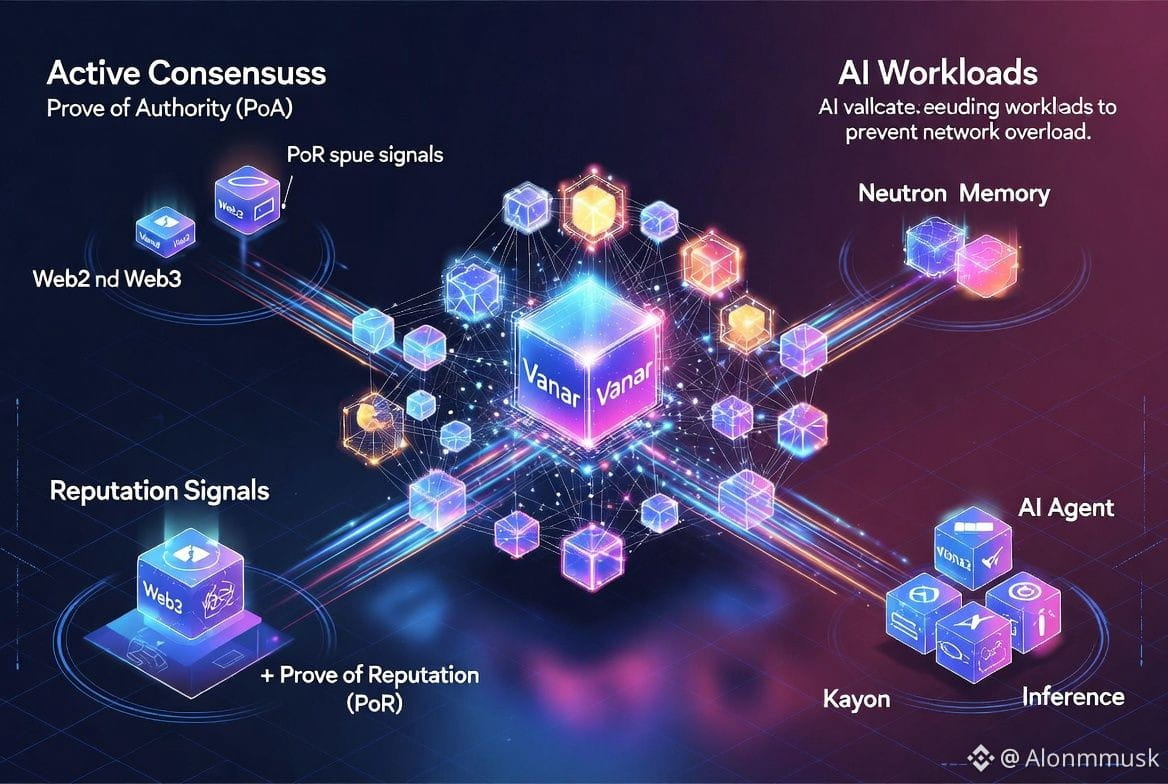

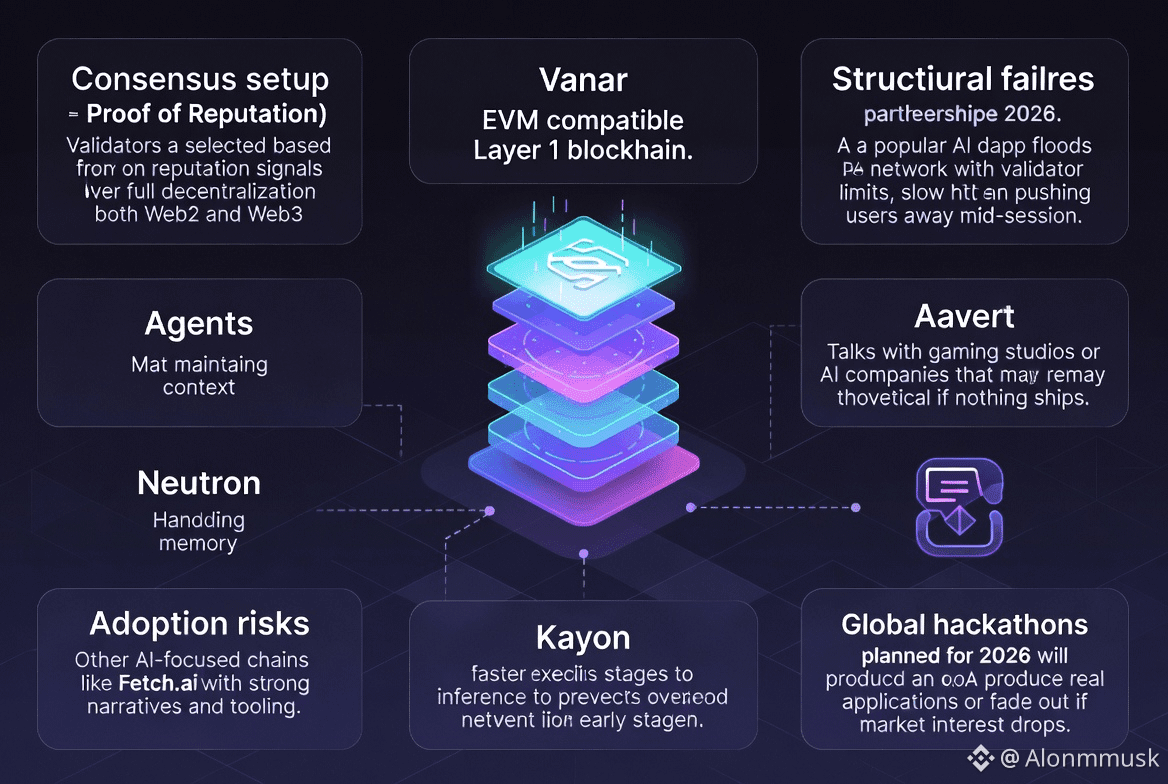

Vanar runs as an EVM-compatible Layer 1, but the consensus setup is not typical. It combines Proof of Authority (PoA) with a Proof of Reputation system. Validators are selected based on reputation signals from both Web2 and Web3, at least in the early stages. That is a conscious trade-off: less decentralization early on in exchange for faster execution and more predictable behavior. For AI workloads, that trade-off makes sense, because long settlement uncertainty is a problem when agents are running live. Two components carry the AI side. Neutron handles memory so agents can keep context instead of resetting every session. Kayon handles inference, keeping execution controlled so the network does not get overloaded.

VANRY fits into this without much drama. It is used to pay for transactions and AI-related services like memory storage and inference. Validators stake it under the reputation system to help secure the network. It is also used for settlement and governance, letting holders vote on changes. Slashing exists at the PoA level to discourage bad behavior. There is no big story attached to the token. It just keeps the system running without relying on outside infrastructure.

At the time of writing, Vanar sits around a $16 million market cap, with daily volume close to $10 million. That is not huge, but it lines up with a project focused on AI infrastructure rather than hype cycles. Holder count is around 11,000, which gives a rough sense of scale without pretending it is massive adoption.
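To put those numbers side by side, here is a quick back-of-the-envelope calculation. It uses only the rounded figures quoted above, so treat the output as a rough sense of proportion rather than precise market data. The variable names are mine, and the per-holder figure is a naive average that says nothing about how holdings are actually distributed.

```python
# Approximate, rounded figures quoted in this post (not live market data).
market_cap_usd = 16_000_000
daily_volume_usd = 10_000_000
holders = 11_000

# Share of the market cap that changes hands in a day.
turnover = daily_volume_usd / market_cap_usd

# Naive average: market cap spread evenly across all holders.
avg_cap_per_holder = market_cap_usd / holders

print(f"daily turnover: {turnover:.1%}")
print(f"average market cap per holder: ${avg_cap_per_holder:,.0f}")
```

Daily volume works out to a bit over 60 percent of the market cap, which is high turnover for a token this size and fits the point about short-term trading on narratives.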

Short term price action usually follows AI narratives or partnership rumors. Prices move when tooling is teased or announcements float around. That does not say much about whether the system actually works. Long term value depends on whether developers keep coming back. Do they keep building here because it feels reliable enough to trust? That is where the 2026 roadmap matters. More AI tooling, better dev kits, more agent support. If that leads to repeat usage, demand can build slowly.

There are still clear risks. Other AI-focused chains like Fetch.ai already have strong narratives and tooling. Builders could leave if Vanar does not decentralize fast enough. Adoption risk is tied to the brand partnerships planned for 2026, and the pattern there is familiar: talks with gaming studios or AI companies sound good, but if nothing ships, the adoption stays theoretical. Structurally, one obvious failure case is a popular AI dapp flooding the network with queries, hitting PoA validator limits, slowing execution, and pushing users away mid-session. It is also unclear whether the global hackathons planned for 2026 will produce real applications or fade out if market interest drops.
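The flooding scenario is easy to picture with a toy model. This is a minimal sketch, not a model of Vanar's actual validator set: `simulate_backlog`, the per-block capacity of 100 queries, and the arrival rates are all hypothetical numbers chosen for illustration. The only point it makes is that once queries arrive faster than they can be processed, the backlog grows every block instead of clearing.

```python
def simulate_backlog(arrivals_per_block, capacity_per_block, blocks):
    """Return the pending-query backlog after each block in a toy model.

    All parameters are hypothetical; nothing here reflects real Vanar limits.
    """
    backlog = 0
    history = []
    for _ in range(blocks):
        backlog += arrivals_per_block                  # new queries arrive
        backlog -= min(backlog, capacity_per_block)    # process up to capacity
        history.append(backlog)
    return history


# Normal load: arrivals stay under capacity, so the queue clears every block.
normal = simulate_backlog(arrivals_per_block=80, capacity_per_block=100, blocks=5)

# Flood: a popular dapp pushes arrivals above capacity, so the queue keeps growing.
flood = simulate_backlog(arrivals_per_block=150, capacity_per_block=100, blocks=5)

print("normal:", normal)
print("flood: ", flood)
```

Under the flood settings the backlog grows by 50 queries per block, which is the mid-session slowdown users would actually feel.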

In the end, the 2026 timelines around AI expansion and partnerships only matter if they change behavior. Demand for VANRY depends on repeat transactions, not announcements. Infrastructure proves itself slowly. You use it once, you come back, and over time you figure out whether it quietly works or not.

@Vanarchain #Vanar $VANRY