I’ve been using decentralized apps on and off for a while. At first it was just curiosity, then it slowly turned into something I leaned on for side work. Last summer I remember trying to back up a bunch of drone footage. Not massive individually, but enough that I had to be careful. I went with a Web3 storage option because the idea of no single point of failure sounded right. Uploading worked fine. Pulling the files back later did not. It took longer than I expected, and gas costs kept shifting around in a way that made it feel like luck mattered more than planning. And in the back of my head there was always this question of whether the data would still be there later. Not gone forever, but just… unavailable when I needed it. Nothing broke outright, but the friction piled up. Speed was okay some days, slow on others. Costs never felt fixed. The interface felt fragile, like one bad confirmation could derail the whole thing.

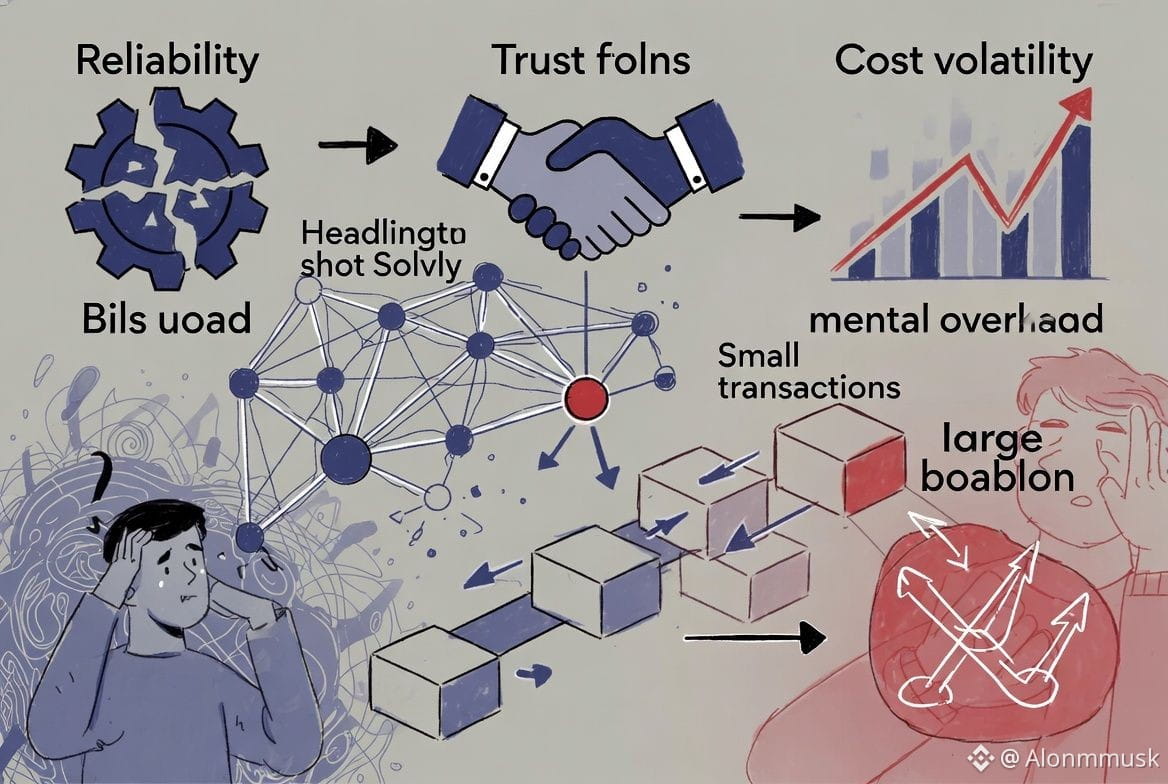

That experience pushed me to step back and look at the bigger issue. Decentralized storage for large data is still awkward. Blockchains are good at tracking small, structured transactions. They are not good at holding big blobs like videos or datasets. Problems show up quickly. Reliability is one. Data is spread across nodes, but nodes disappear or underperform. Then there is trust. You want to know the file is still intact and unchanged. Costs are another headache. Token prices move, fees move, and suddenly your long-term storage plan does not look so long-term anymore. UX usually feels patched together. Uploading takes time. Verification steps feel like extra work. Retrieval is never as smooth as you expect. For developers, this becomes mental overhead. You design around the storage limits instead of around the product. Users notice when apps feel slower or incomplete.

It reminds me of leaving a car in a huge unmanaged parking lot. You paid. You parked. But you still wonder if it will be there later or if something weird will happen while you are gone. That uncertainty changes how often you use the lot in the first place.

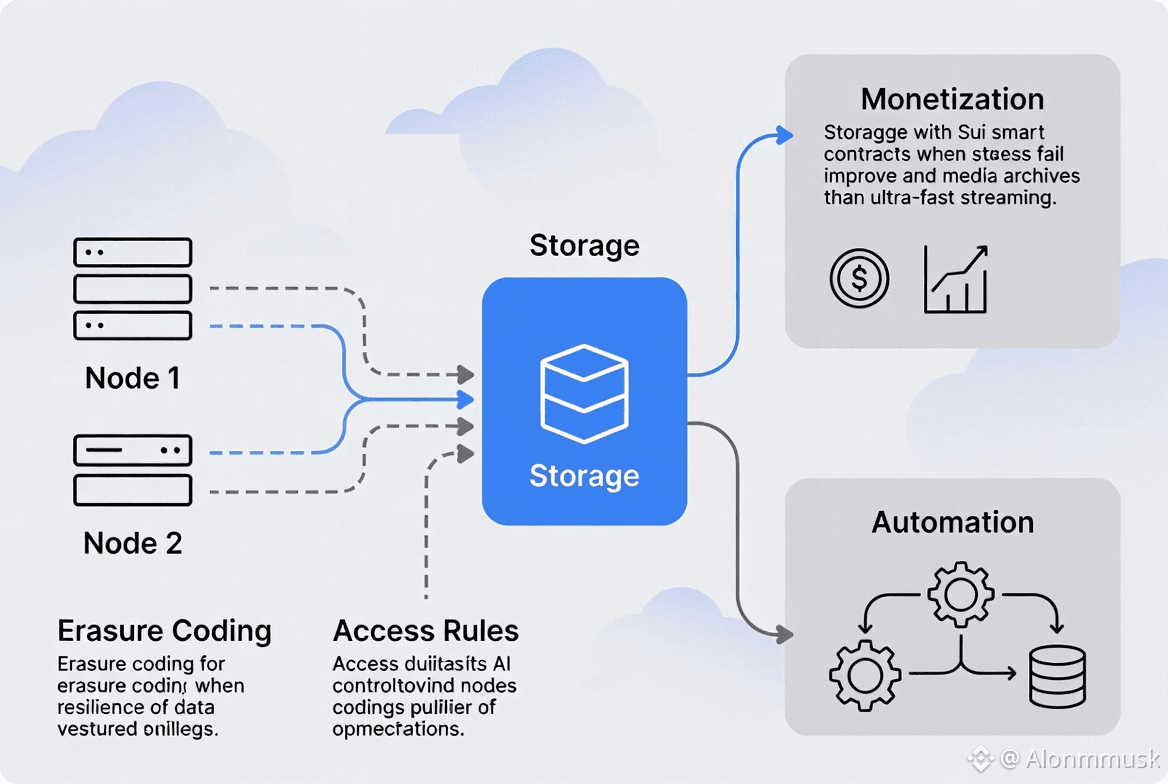

This is where Walrus starts to make sense to me, not because it is flashy, but because it is focused. Walrus is built as a blob storage layer tied to the Sui ecosystem, but it runs as its own network. Files are broken into pieces using erasure coding. That means you do not need every piece to recover the file. Some can disappear and it still works. Those pieces get spread across storage nodes that have to stake collateral to participate. The system is designed to keep costs predictable instead of letting fees swing wildly. It avoids full replication because that gets expensive fast. It also avoids centralized coordinators that could turn into bottlenecks. Delegation lets people support nodes without running hardware, which spreads risk out more.
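To make the "you do not need every piece" idea concrete, here is a toy sketch of erasure coding using a single XOR parity shard. It is only an illustration: it survives the loss of any one piece, while a real network like Walrus uses a much more capable scheme that tolerates many losses. The function names (`encode`, `decode`) and the shard count are my own assumptions, not anything from Walrus.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal shards and append one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)
    return shards + [parity]


def decode(shards: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the data when at most one shard (marked None) is missing."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single-parity code can only recover one lost shard")
    shards = list(shards)
    if missing:
        present = [s for s in shards if s is not None]
        # XOR of all surviving shards equals the missing one.
        shards[missing[0]] = reduce(xor_bytes, present)
    # Drop the parity shard, then strip the padding.
    return b"".join(shards[:-1])[:original_len]


data = b"drone footage bytes"
pieces = encode(data, k=4)   # 4 data shards + 1 parity shard
pieces[2] = None             # simulate one storage node disappearing
recovered = decode(pieces, len(data))
```

The storage overhead here is one extra shard instead of a full second copy, which is the same trade that makes erasure coding cheaper than full replication.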

In practice, this makes storage feel less stressful. Erasure coding gives you some breathing room when nodes misbehave. Storage hooks into Sui smart contracts, so it can be programmed instead of manually managed. Access rules. Monetization. Automation. All without stacking extra services on top. It is not built for ultra-fast streaming. It gives up some speed to be more resilient, which fits things like AI datasets or media archives better anyway.

WAL itself does not try to be exciting. You pay WAL upfront to store data for a set period. That WAL is released gradually to nodes and delegators over time. This helps smooth out real-world costs even if the token price moves. Nodes stake WAL to participate. Delegators can add stake to increase a node’s weight and the amount of data it handles. Rewards come from storage payments. Governance runs through staked WAL for things like setting penalties. Payouts happen in epochs so everything is batched and predictable. If nodes fail availability checks, they get penalized. No extra mechanics layered on.
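The payment flow above can be sketched in a few lines. This is a simplified model under my own assumptions, not Walrus's actual accounting: the prepaid amount is released evenly per epoch, each epoch's slice is split in proportion to stake, and a node that fails its availability check simply forfeits that epoch's payout. The function name, the numbers, and the penalty rule are all hypothetical.

```python
from typing import Dict, Set


def epoch_payouts(
    prepaid: int,
    epochs: int,
    stakes: Dict[str, int],
    failed: Set[str],
) -> Dict[str, int]:
    """Payout per node for ONE epoch, in the smallest token unit.

    prepaid: total amount paid upfront for the whole storage period
    epochs:  number of epochs the storage period covers
    stakes:  node -> total stake (own stake plus delegated stake)
    failed:  nodes that failed this epoch's availability check
    """
    per_epoch = prepaid // epochs          # even release (assumption)
    total_stake = sum(stakes.values())
    payouts = {}
    for node, stake in stakes.items():
        share = per_epoch * stake // total_stake
        # Assumed penalty: a failing node forfeits this epoch's share.
        payouts[node] = 0 if node in failed else share
    return payouts


result = epoch_payouts(
    prepaid=1200,
    epochs=12,
    stakes={"node_a": 600, "node_b": 300, "node_c": 100},
    failed={"node_c"},
)
```

Because the amount is fixed when you pay and only the release is spread out, the user's cost does not change mid-period, which is the predictability the design is going for.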

Maximum supply is capped at 5 billion WAL. Trading volume is active enough to function without being completely dominated by speculation. The network has a growing set of nodes storing large amounts of data, though usage naturally goes up and down with demand.

Short-term attention still swings with narratives. WAL saw that after mainnet in March 2025, when AI hype and Sui news pushed volatility. But storage infrastructure is not built for quick flips. Long-term value shows up when people come back because it worked last time. You upload again without thinking about it. That second or third use matters more than launch excitement.

There are risks. Filecoin and Arweave are real competitors with larger ecosystems. One possible failure scenario is correlated outages. If enough nodes underperform at the same time, recovery could slow until penalties kick in and data shifts elsewhere. That would not destroy the system, but it would be frustrating in the moment. There is also uncertainty around how fast AI-driven data markets will grow and whether they will pull demand toward Walrus fast enough.

In the end, it usually comes down to habit. The first upload happens because you need it. What matters is whether the next one feels easy enough that you do not hesitate. That is usually where infrastructure either sticks or quietly gets replaced.
