I remember one afternoon last year just staring at my screen, trying to upload a basic PDF contract into a decentralized app. Nothing complicated, just a freelance agreement with a few clauses and signatures. Still, gas fees spiked the moment I tried to store anything more than a hash or a link. I ended up pinning it to IPFS, which honestly felt like a hack, not a real solution. Blockchain is supposed to be about ownership, yet there I was leaning on off-chain storage that could disappear or get censored, while the on-chain part was just a pointer. Fragile. That was the moment it clicked for me. Crypto infrastructure is built for tokens and transactions. Real data gets pushed aside, and you pay for it either in reliability or straight out of pocket.

That is the friction I keep coming back to. Not something abstract, but the kind you feel when you actually use these systems. Speed matters, sure, but the bigger issue is not knowing where your data really lives. Costs explode the moment the data is meaningful, and the UX forces you to juggle tools that were never meant to work together. Say you want to store a document an AI agent can reference later: blockchains treat that data like dead weight. Payload limits keep things tiny, so real files like contracts, invoices, or chat logs get split up or pushed off-chain. Then things start breaking down. Agents lose context. Apps cannot query data cleanly without oracles. Users overpay for storage that barely works. It starts to feel like Web3 is optimized for financial gimmicks, not for building habits around data that actually persists and stays useful.

It is like trying to remember a whole book by only keeping the table of contents. Compact, sure, but useless when you need the details. That is the storage problem in plain terms. Data sits there without meaning, expensive to keep, and mostly inert.

This is where Vanar Chain began to make sense to me. Not because it is flashy, but because it aims straight at that gap. Vanar is not just another Layer 1. It is built around AI workloads from the start, on the idea that data should stay active, not just stored. The chain is structured as a stack in which intelligence is native: transactions scale, and the layers for compression and reasoning are part of the system rather than glued on later. It avoids a lot of general-purpose bloat by focusing on PayFi and real-world assets; if something does not serve agent-based apps, it is not a priority. That matters in practice. If an AI needs to verify a deed or process an invoice, you do not want off-chain hops or fees that kill small actions. Vanar can keep that data compressed, queryable, and verifiable right on the ledger.

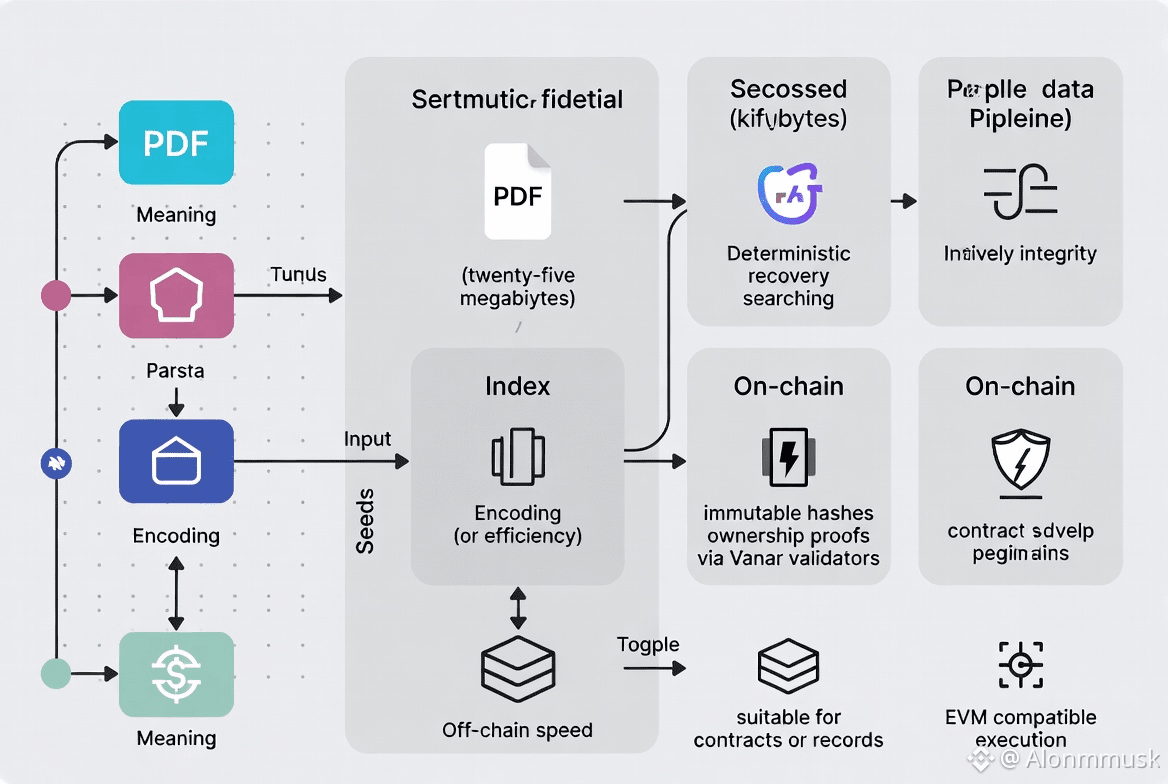

Neutron is the clearest example of that approach. It is Vanar’s semantic memory layer, and it does not pretend to be magic. It restructures data into what the team calls Seeds. These are not just compressed files; they are chunks in which context is preserved through a mix of neural structuring and algorithmic compression. A twenty-five megabyte PDF can shrink to roughly fifty kilobytes, about a 500-to-1 reduction, which suddenly makes full on-chain storage realistic. One detail that stood out to me is the pipeline. First the data is parsed for meaning, then encoded for efficiency, then indexed natively so it can be searched, and finally set up for deterministic recovery so nothing in the encoded content gets lost. The trade-off is intentional: you get semantic fidelity instead of perfect visual replication, which works better for contracts or records than for raw media. There is also a toggle. By default, Seeds are kept off-chain for speed, but switching them on-chain adds immutable hashes and ownership proofs through Vanar validators, tied directly into EVM-compatible execution. That gives builders flexibility without turning data into a liability.
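To make the four pipeline steps concrete, here is a minimal sketch in Python under loud assumptions: it is not Vanar's code or the Neutron API, and every name in it (`Seed`, `parse`, `make_seed`, `recover`) is hypothetical. Neutron's neural structuring is swapped for a crude "Key: value" extractor, and its compression for standard zlib. What it does show is the shape of the idea: keep structure and drop layout, encode canonically so the same input always yields the same bytes, build a search index, and commit to the payload with a SHA-256 hash of the kind you would anchor on-chain when the toggle is switched on.

```python
import hashlib
import json
import re
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Seed:
    """Hypothetical stand-in for a Neutron Seed; not Vanar's real format."""
    payload: bytes       # compressed, canonically serialized fields
    index: frozenset     # keyword index, searchable without decompressing
    digest: str          # SHA-256 of the payload (the value you would anchor on-chain)


def parse(text: str) -> dict:
    # Step 1, "parse for meaning": a crude stand-in that keeps "Key: value"
    # lines as structured fields and drops everything else (layout, images).
    fields = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip().lower()] = value.strip()
    return fields


def make_seed(text: str) -> Seed:
    fields = parse(text)
    # Step 2, "encode for efficiency": canonical JSON (sorted keys, no spaces)
    # so identical input gives identical bytes, then DEFLATE via zlib.
    canonical = json.dumps(fields, sort_keys=True, separators=(",", ":")).encode()
    payload = zlib.compress(canonical, 9)
    # Step 3, "index natively": lowercase word tokens from the field values.
    words = set()
    for value in fields.values():
        words.update(re.findall(r"[a-z0-9]+", value.lower()))
    # Step 4, "deterministic recovery": commit to the exact payload bytes.
    digest = hashlib.sha256(payload).hexdigest()
    return Seed(payload, frozenset(words), digest)


def recover(seed: Seed) -> dict:
    # Refuse to decode a payload that no longer matches its commitment.
    if hashlib.sha256(seed.payload).hexdigest() != seed.digest:
        raise ValueError("payload does not match its anchored digest")
    return json.loads(zlib.decompress(seed.payload))


if __name__ == "__main__":
    contract = "Client: Acme Corp\nFee: 4000 USD\n\n[signature block and letterhead]"
    seed = make_seed(contract)
    print(recover(seed))          # {'client': 'Acme Corp', 'fee': '4000 USD'}
    print("acme" in seed.index)   # True
```

A generic compressor on its own would not be expected to reach the quoted 500-to-1 ratio. In a scheme shaped like this, most of the savings come from discarding layout and decoration during the parse step, which is also exactly where the loss of visual fidelity comes from.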

The token, VANRY, plays a straightforward role in all of this. It pays for transaction fees and storage, just like gas on other EVM chains. Validators stake it to secure the network and earn a share of fees, and PayFi settlements use it as well. Governance lets holders vote on upgrades without layers of complexity. Neutron usage burns tokens, slowly reducing supply, while staking locks tokens to back consensus. Nothing fancy. Just mechanics tied to actual usage.
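The fee flow described above can be sketched as simple bookkeeping. This is a hypothetical model, not Vanar's contract logic: the post does not say how large the burn is or exactly how fees are divided, so `BURN_BPS` (10%) and the pro-rata-by-stake split are illustrative assumptions, as are the `Ledger` and `settle_fee` names. Amounts are integers to avoid floating-point drift.

```python
from dataclasses import dataclass, field

# Hypothetical parameter: the post says Neutron usage triggers burns but gives
# no rate, so this 10% (expressed in basis points) is purely illustrative.
BURN_BPS = 1_000  # 10.00%


@dataclass
class Ledger:
    supply: int                                   # total token supply (illustrative units)
    stakes: dict                                  # validator -> staked amount
    rewards: dict = field(default_factory=dict)   # validator -> fees earned so far

    def settle_fee(self, fee: int, is_neutron_storage: bool) -> int:
        """Burn part of a Neutron storage fee, share the rest by stake; return the burn."""
        burned = fee * BURN_BPS // 10_000 if is_neutron_storage else 0
        self.supply -= burned
        distributable = fee - burned
        total_stake = sum(self.stakes.values())
        paid = 0
        # Pro-rata split by stake, using integer division.
        for validator, stake in sorted(self.stakes.items()):
            share = distributable * stake // total_stake
            self.rewards[validator] = self.rewards.get(validator, 0) + share
            paid += share
        # Integer division leaves dust; hand it to the largest staker (arbitrary choice).
        top = max(self.stakes, key=self.stakes.get)
        self.rewards[top] += distributable - paid
        return burned


if __name__ == "__main__":
    ledger = Ledger(supply=2_400_000_000, stakes={"val-a": 300, "val-b": 100})
    burned = ledger.settle_fee(1_000, is_neutron_storage=True)
    print(burned, ledger.supply, ledger.rewards)  # 100 2399999900 {'val-a': 675, 'val-b': 225}
```

Sending the rounding dust to the largest staker is just one option; a real protocol could equally carry it forward to the next block or burn it.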

In terms of scale, at the time of writing Vanar’s market cap sits around fifteen million dollars, with daily volume near seven million. Circulating supply is about 2.25 billion tokens out of a 2.4 billion maximum, and there are roughly eleven thousand holders. Gas costs stay extremely low, around half a cent per transaction, which keeps frequent, small AI actions affordable without the congestion spikes you see elsewhere.
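Those snapshot figures imply a few derived numbers that are easy to check by hand. The short calculation below uses only the values quoted above; the 10,000-transaction workload is a hypothetical example, and because market figures move daily, the outputs are only as current as the inputs.

```python
# Snapshot figures quoted in the post; they change daily.
market_cap_usd = 15_000_000
daily_volume_usd = 7_000_000
circulating = 2_250_000_000
max_supply = 2_400_000_000
gas_per_tx_usd = 0.005  # "around half a cent"

# Price implied by market cap divided by circulating supply.
implied_price = market_cap_usd / circulating                   # ≈ $0.00667 per VANRY
# Share of the maximum supply already circulating.
circulating_share = circulating / max_supply                   # 0.9375, i.e. 93.75%
# Fully diluted valuation: implied price times maximum supply.
fully_diluted_usd = market_cap_usd * max_supply / circulating  # 16,000,000
# Daily volume relative to market cap.
turnover = daily_volume_usd / market_cap_usd                   # ≈ 0.47
# Hypothetical workload: 10,000 small agent transactions.
agent_batch_cost_usd = 10_000 * gas_per_tx_usd                 # ≈ $50

print(f"price ≈ ${implied_price:.5f}, circulating {circulating_share:.2%}, "
      f"FDV ≈ ${fully_diluted_usd:,.0f}, turnover {turnover:.2f}, "
      f"10k txs ≈ ${agent_batch_cost_usd:.2f}")
```

With roughly 94% of the maximum supply already circulating, the fully diluted valuation sits only slightly above the quoted market cap.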

Short-term trading still fixates on narratives. A partnership headline. A token unlock. A quick price move. Long-term infrastructure value is different. It comes from reliability and repetition, and Vanar is built around that idea: apps stick because data stays useful, not because of incentives or hype. Agent-based payments are a good example. The Worldpay integration in late 2025 used Neutron to handle verifiable transactions, letting AI manage compliance without relying on off-chain systems. Neutron’s public debut earlier in 2025 had already pushed storage fully on-chain. Now Kayon is layering reasoning on top, with Axon automations expected to roll out in early 2026.

There are real risks. If AI workloads spike suddenly, validators might struggle to process semantic validations fast enough. That could slow recoveries and stall apps. Competition is not standing still either. Arweave and Filecoin both attack storage from different angles, and Vanar’s compression model may not suit every type of data. There is also uncertainty around how these compression ratios hold up long term as cryptography evolves.

In the end, it is the same test as always. The second transaction. The one where you do not hesitate because the first one worked. If that keeps happening, it becomes a workflow instead of an experiment. That is what will decide whether this sticks.

@Vanarchain #Vanar $VANRY