Privacy apps are not the breakthrough; execution discipline is.

Most people miss it because “privacy” sounds like a feature, while discipline looks like boring process.

For builders and users, it changes the question from “can we hide data?” to “can we ship verifiable workflows that stay usable under pressure?”

I’ve learned to trust networks less for what they promise and more for what they repeatedly do when conditions aren’t friendly. Over time, the projects that survive aren’t the loudest; they’re the ones that keep the same rules when volume spikes, when compliance edge cases show up, and when incentives get stressed. With Dusk, the interesting part isn’t the vibe of privacy; it’s the discipline around making privacy-compatible execution feel like normal execution. That sounds small until you try to build on it.

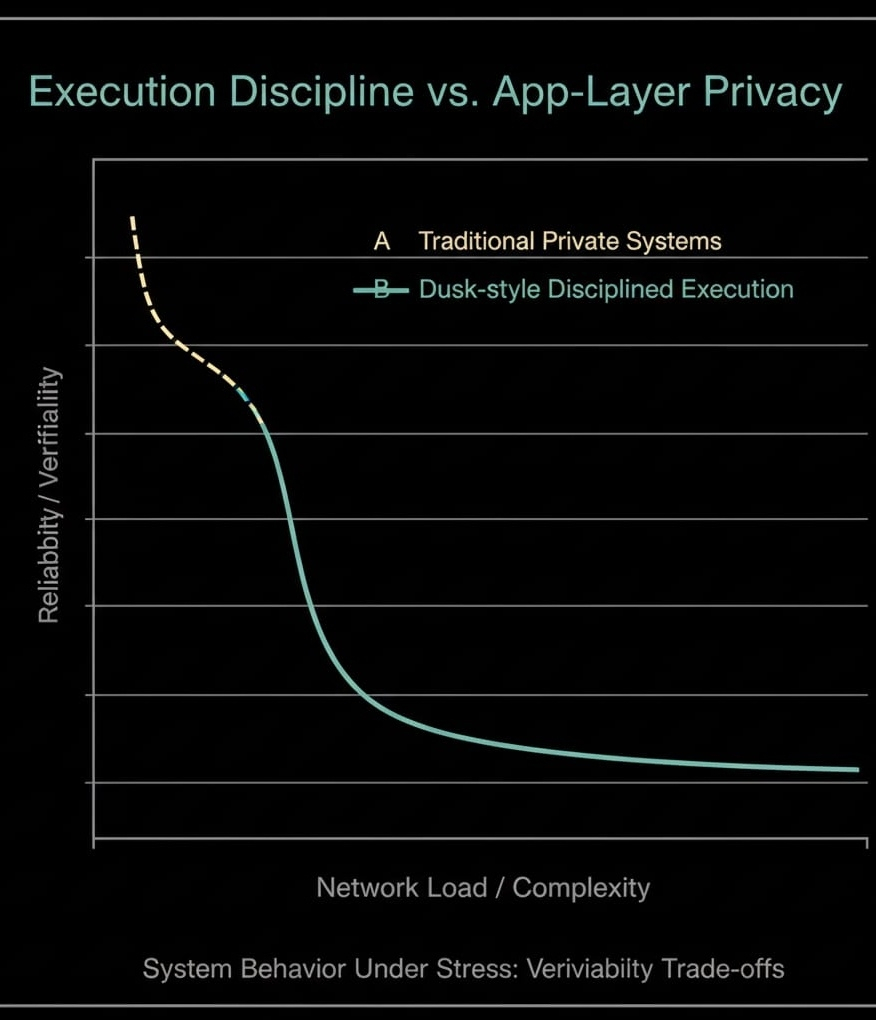

The concrete friction is that private-by-default systems often create a reliability tax. Developers end up juggling two worlds: a public state that’s easy to reason about, and a private state that’s harder to verify, harder to index, and easier to accidentally break. Users pay for that in the form of “trust me” moments: unclear finality, confusing proof failures, withdrawals that don’t behave like other chains, and wallets that can’t explain what happened. When privacy is treated as an app-layer afterthought, you also get inconsistent guarantees: some actions are private, others leak metadata, and the seams show exactly when you least want them to.

It’s like trying to run a bank vault with a door that’s secure but a ledger that the staff can’t reconcile quickly.

Dusk’s edge, as I see it, is a tight focus on disciplined execution: making private transfers and contract interactions follow a clean, repeatable verification flow that the network can enforce, not just the app. Think in terms of state first. Instead of assuming every validator can fully re-run every transaction with full visibility, the state model separates what must be public (enough to order, pay fees, prevent double-spends, and finalize) from what can remain hidden (the sensitive details). A transaction is packaged with commitments to the hidden parts and a proof that the transition is valid under the protocol rules. Validators don’t “trust the app”; they verify the proof and the public constraints, then update the canonical state accordingly.
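To make the public/hidden split concrete, here is a minimal sketch in Python. It is not Dusk's actual data model: the names `PublicPart`, `HiddenPart`, `commit`, and `build_transfer` are hypothetical, and a plain SHA-256 hash commitment stands in for the real cryptographic commitments and zero-knowledge proof a production system would use.

```python
import hashlib
import secrets
from dataclasses import dataclass


def commit(value: int, blinding: bytes) -> str:
    """Toy hash commitment: hides `value` but binds the sender to it.

    Assumption: a real protocol would use a commitment scheme that a
    zero-knowledge proof can reason about, not a bare hash.
    """
    return hashlib.sha256(blinding + value.to_bytes(8, "big")).hexdigest()


@dataclass(frozen=True)
class PublicPart:
    """What every validator sees: enough to order, charge, and prevent double-spends."""
    fee: int
    nullifier: str        # marks one prior commitment as spent
    new_commitment: str   # commitment to the hidden amount


@dataclass(frozen=True)
class HiddenPart:
    """What only the sender (and recipient) know."""
    amount: int
    blinding: bytes


def build_transfer(amount: int, fee: int, spent_note_id: bytes):
    """Package a transfer as (public, hidden); only `public` is broadcast."""
    blinding = secrets.token_bytes(32)
    hidden = HiddenPart(amount=amount, blinding=blinding)
    public = PublicPart(
        fee=fee,
        nullifier=hashlib.sha256(spent_note_id).hexdigest(),
        new_commitment=commit(amount, blinding),
    )
    return public, hidden
```

The point of the sketch is the shape, not the cryptography: the amount never appears in `PublicPart`, yet the sender cannot later claim a different amount without breaking the commitment.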

The flow matters. A user constructs a transaction that references prior commitments, proves they control the right spending keys, proves conservation rules (no value created), and proves any policy constraints the protocol requires, then broadcasts the bundle. Validators check signatures, fee payment, and proof validity; if checks pass, the network accepts the transition and finalizes it through consensus like any other transaction. This is where execution discipline shows up: the system is designed so that verification cost is predictable enough to be a first-class network operation, not a fragile add-on that collapses when throughput rises. If verification becomes too expensive or too variable, privacy becomes a denial-of-service vector; disciplined design is about closing that door.
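The validator side of that flow can be sketched as an ordered list of checks. This is an illustration under stated assumptions, not Dusk's implementation: `Tx`, `State`, `MAX_PROOF_BYTES`, `MIN_FEE`, and `validate_and_apply` are hypothetical names, the signature check is reduced to a boolean, and the proof verifier is passed in as a callable because the real one is a cryptographic routine.

```python
from dataclasses import dataclass, field
from typing import Callable

MAX_PROOF_BYTES = 2048  # hypothetical cap that keeps verification cost bounded
MIN_FEE = 1             # hypothetical minimum fee


@dataclass
class Tx:
    nullifier: str       # identifies the prior commitment being spent
    commitment: str      # commitment to the hidden outputs
    fee: int
    proof: bytes
    signature_ok: bool   # stand-in for a real signature verification


@dataclass
class State:
    spent: set = field(default_factory=set)
    commitments: set = field(default_factory=set)


def validate_and_apply(state: State, tx: Tx,
                       verify_proof: Callable[[Tx], bool]) -> str:
    """Run cheap checks first and the expensive proof check last."""
    if not tx.signature_ok:
        return "rejected: bad signature"
    if tx.fee < MIN_FEE:
        return "rejected: fee too low"
    if tx.nullifier in state.spent:
        return "rejected: double spend"
    if len(tx.proof) > MAX_PROOF_BYTES:
        return "rejected: proof too large"
    if not verify_proof(tx):
        return "rejected: invalid proof"
    # Only a fully verified transition touches canonical state.
    state.spent.add(tx.nullifier)
    state.commitments.add(tx.commitment)
    return "accepted"
```

Ordering the checks from cheapest to most expensive, and capping proof size before verification runs, is one simple way to keep an attacker from forcing validators into unbounded work.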

Incentives are the second half of discipline. Fees pay for the work that is actually scarce: bandwidth, storage of commitments, and verification time. Staking ties validators to honest proof-checking and consistent block production; if they cut corners, they risk penalties and lost future rewards. Governance exists to tune parameters that directly affect safety and usability (verification limits, fee schedules, and upgrade paths) so the network can respond without breaking the core transaction rules that apps depend on. None of this guarantees that every private app will be safe or that every wallet will be well-built; it guarantees that the base layer has an enforceable definition of “valid,” and that actors are paid to apply it.
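One way to picture fees tracking scarce resources is a simple linear schedule. The sketch below is purely illustrative: `FeeSchedule`, `fee_for`, and every number in it are assumptions made up for the example, not Dusk's actual fee formula or parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeeSchedule:
    """Hypothetical governance-tunable prices, one per scarce resource."""
    per_byte: int          # bandwidth
    per_commitment: int    # storage of new commitments
    per_verify_unit: int   # proof verification time


def fee_for(schedule: FeeSchedule, tx_bytes: int,
            new_commitments: int, verify_units: int) -> int:
    """Charge for each resource a transaction consumes."""
    return (schedule.per_byte * tx_bytes
            + schedule.per_commitment * new_commitments
            + schedule.per_verify_unit * verify_units)


schedule = FeeSchedule(per_byte=1, per_commitment=50, per_verify_unit=10)
print(fee_for(schedule, tx_bytes=300, new_commitments=2, verify_units=5))  # 450
```

In this picture, a governance change is a new `FeeSchedule` value: prices move, but the rule for what counts as a valid transaction stays the same.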

Failure modes are where the discipline becomes real. If proofs are generated incorrectly, transactions fail fast and don’t enter final state. If a wallet leaks metadata, the chain can’t save the user; that’s an app-layer mistake, not a protocol failure. If validators try to include invalid transitions, honest validators reject them and the block doesn’t finalize. What the protocol can reasonably guarantee is validity of state transitions under the published rules and finality once consensus commits; what it cannot guarantee is perfect privacy against every real-world side channel, or perfect UX across every integration.

The honest uncertainty is that long-run outcomes depend on adversarial behavior staying within what the incentive design can actually punish, especially when attackers can spend money to create pathological verification loads or exploit weak wallet implementations.

When you look at Dusk through that lens, does “execution discipline” feel like the real moat, or do you still think the app layer is where the battle is won?