Today I watched a street vendor in Peshawar meticulously sharpening an old knife on a whetstone. Each slow, deliberate stroke removed rust and nicks, revealing the blade's original edge beneath layers of neglect. He told me the knife had served three generations of his family; every scar told a story of meals prepared, trades made, hardships endured. "A blade without memory of its work," he said, "is just metal. With it, it's alive."

That quiet moment struck me hard. In our rush toward on-chain AI agents, we've obsessed over making them sharper (bigger models, faster inference, flashier outputs), but we've forgotten the blade's most vital quality: memory of its own use. Without that, agents become disposable tools, reset to factory settings after every task, every crash, every chain reorganization. The stateless nature of public blockchains, designed for atomic verification rather than narrative continuity, turns potentially capable agents into amnesiacs that repeat the same mistakes or lose hard-earned context.

What frustrated me most wasn't the theoretical limitation; it was realizing how deeply this hurts real builders. Ask any developer working with frameworks like OpenClaw what keeps them up at night. Is it raw intelligence? Rarely. The models are plenty smart now. The killer pain is amnesia: an agent that analyzed on-chain liquidity last session forgets your risk parameters this session and swings wildly; a compliance bot re-verifies the same RWA documents from scratch every time; long-running PayFi automations break on retry because the prior state vanished into the void. Stateless chains excel at "does this tx validate now?" but have zero regard for "what did we learn yesterday?" This vicious reset cycle keeps most on-chain AI forever in demo purgatory: no compounding, no trust buildup, no real economic throughput.

The market chases the shiny edge: agents that compose symphonies or generate NFTs in seconds. It's exciting theater, but in 2026 it's increasingly a cognitive downgrade. True productivity demands agents that remember their own history, not ones that perform one-off tricks. Vanar Chain (@Vanarchain) is running a lonely counter-experiment here. They aren't promising AGI utopia or louder slogans. Instead, through the "Neutron API", they're giving agents the one thing stateless design stole: persistent, verifiable memory.

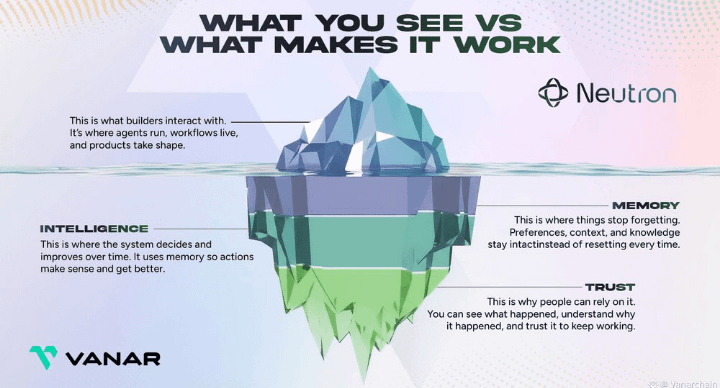

Neutron isn't black magic; it's an external "second brain" that peels memory out of ephemeral agent instances and anchors it on-chain. Raw data (invoices, compliance files, market analyses, user prefs) gets compressed into semantic "Seeds": compact, AI-readable units that preserve meaning, relationships, and provenance via ZK proofs. These Seeds live immutably on Vanar Chain, queryable across restarts, machine swaps, or even agent migrations. Recent integrations make it concrete: the Neutron x OpenClaw tie-in (live in early access since early Feb 2026) lets agents plug in and retain context over weeks. No more "they forget what they were working on last week," as Vanar themselves put it in their recent posts. Free during early access via console.vanarchain.com, it's aggressively pragmatic: survival assurance for agents that need to work continuously, not just talk big.
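To make the idea tangible, here is a minimal sketch of the pattern, not Neutron's actual API. Everything in it is my own assumption for illustration: the `Seed` and `MemoryStore` names, the local JSON file standing in for the chain, `zlib` standing in for semantic compression, and a SHA-256 content hash standing in for a ZK-backed provenance proof. What it does show is the core trick: memory lives outside the agent instance, so a fresh instance can pick up where the last one left off and verify that what it recalls has not been altered.

```python
import hashlib
import json
import os
import tempfile
import zlib
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Seed:
    """Hypothetical memory unit; not the real Neutron Seed format."""
    seed_id: str      # SHA-256 of the raw payload (stand-in for provenance)
    kind: str         # free-form label, e.g. "risk_params"
    payload_hex: str  # zlib-compressed payload, hex-encoded so it fits in JSON


class MemoryStore:
    """Hypothetical external memory; a local file stands in for the chain."""

    def __init__(self, path: str):
        self.path = path
        self.seeds = {}
        # A new agent instance reloads whatever earlier instances stored.
        if os.path.exists(path):
            with open(path, "r", encoding="utf-8") as f:
                for record in json.load(f):
                    self.seeds[record["seed_id"]] = Seed(**record)

    def remember(self, kind: str, data: dict) -> str:
        # Canonical JSON so identical data always yields the same id.
        raw = json.dumps(data, sort_keys=True).encode("utf-8")
        seed_id = hashlib.sha256(raw).hexdigest()
        self.seeds[seed_id] = Seed(seed_id, kind, zlib.compress(raw).hex())
        self._flush()
        return seed_id

    def recall(self, seed_id: str) -> dict:
        seed = self.seeds[seed_id]
        raw = zlib.decompress(bytes.fromhex(seed.payload_hex))
        # Reject the memory if its content no longer matches its id.
        if hashlib.sha256(raw).hexdigest() != seed.seed_id:
            raise ValueError("seed content does not match its id")
        return json.loads(raw)

    def _flush(self) -> None:
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump([asdict(s) for s in self.seeds.values()], f)


def demo() -> dict:
    path = os.path.join(tempfile.mkdtemp(), "seeds.json")

    # Session one: the agent stores the user's risk parameters, then "dies".
    first_session = MemoryStore(path)
    seed_id = first_session.remember("risk_params", {"max_slippage_bps": 50})
    del first_session

    # Session two: a brand-new instance recalls them instead of starting over.
    second_session = MemoryStore(path)
    return second_session.recall(seed_id)


if __name__ == "__main__":
    print(demo())
```

The agent in session two never saw the original call, yet it gets the same risk parameters back and can check them against the id. Swap the file for an immutable ledger and the hash for a real proof, and you have the rough shape of what the Seeds description above is promising.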

This turns stateless vagrants into veterans with scars of experience. An agent handling RWA tokenization remembers prior due diligence; a DeFi settlement bot recalls past fraud patterns without re-learning from zero. Continuity becomes zero-cost. Compounding kicks in. What was once fragile demo code starts generating reliable, auditable value in PayFi and real-world assets, exactly where trust and history matter most.

I suddenly felt the parallel to that Peshawar knife sharpener. Human civilization advanced not just because we invented sharper tools, but because we preserved knowledge across generations through oral stories, written scrolls, libraries, hard drives. If every craftsman woke up forgetting yesterday's techniques, we'd still be chipping flint. The same holds for AI agents: intelligence without memory of experience is sterile. Respecting accumulated "cracks" (past decisions, failures, contexts) adds dignity and strength, much like the gold in Kintsugi. Vanar's bet honors that: memory as a first-class primitive, not an afterthought.

The philosophical shift in 2026 is already visible. Massive infrastructure investments from big players signal that AI must graduate from interesting toys to a reliable workforce. Intelligence is commoditized; reliability (error-free, auditable, continuous) is scarce. Only agents that don't forget can handle large-scale DeFi or RWA flows without constant human babysitting.

Look at $VANRY today (around $0.0062–$0.0064 as of Feb 9, 2026): stuck in the corner, low volume, lying flat in a choppy altcoin graveyard. Retail confidence is worn thin: no excitement, no FOMO bait, pitiful trading action. It's psychological torture for holders, punishment for refusing to play the storytelling game. But in my eyes, this is classic infra-phase refinement. Projects chasing narratives pump and dump; those fixing foundational pain points endure quietly. Vanar's developer dependency could become unbreakable once a handful of killer agents run profitable, continuous tasks with Neutron's persistence. Path dependency trumps hype. Usage-burn mechanics (fees, subscriptions for premium tools like myNeutron/Kayon) compound in the background while the market sleeps.

Don't talk get-rich dreams at this level. Talk production efficiency. In 2026, whoever makes on-chain AI remember its own experience, respecting every scar as part of its edge, will hold the ticket to the future. Vanar isn't shouting; it's sharpening the blade.

What part of this stateless memory hell has hit you hardest when building or using agents? Are you poking at the Neutron early access yet?