In the race to build smarter agents, most projects obsess over reasoning, speed, and autonomy. Vanar is focused on something deeper: memory. Not short-term context. Not temporary session logs. Real, durable, queryable memory that survives restarts, migrations, scaling events, and long-running workflows. If intelligence is the brain of an agent, Vanar is building its long-term nervous system.

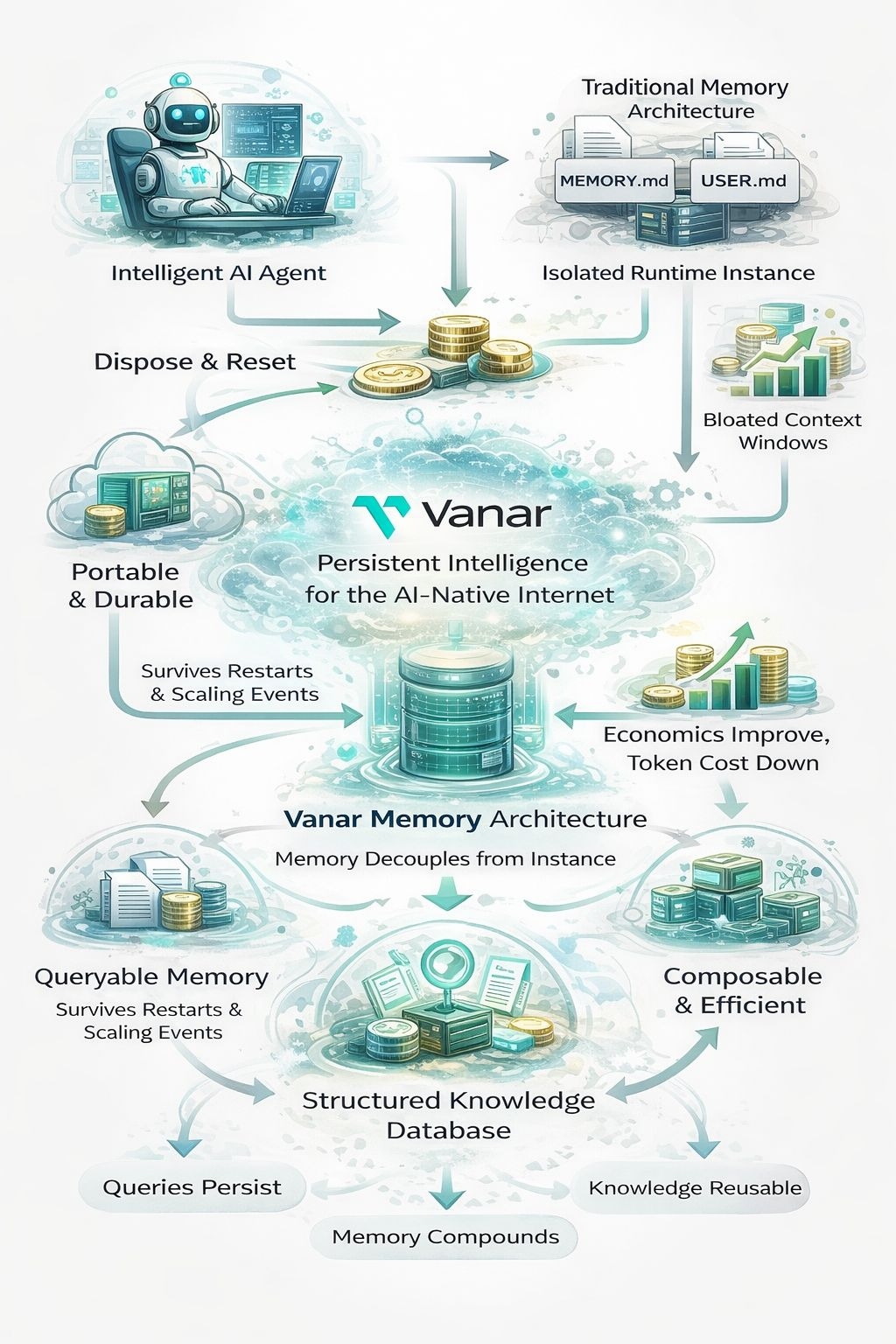

The shift is subtle but profound. Today, many AI agents operate like brilliant amnesiacs. They perform well within a single session, leaning on structured prompts or local files such as MEMORY.md or USER.md. But once the process shuts down, scales horizontally, or migrates to a new instance, continuity fractures. Context windows grow bloated. Token costs increase. Knowledge becomes technical debt instead of an asset.

Vanar introduces a structural correction to this flaw. Through its Neutron Memory architecture, intelligence is decoupled from the running instance. The agent becomes disposable. The memory does not.

This changes the economics of agent systems. Instead of dragging entire histories forward in every prompt, agents query memory the same way they call tools. Knowledge is compressed into structured objects that can be indexed, retrieved, reasoned over, and reused. Context windows stay manageable. Token costs decline. Multi-agent systems begin to resemble infrastructure rather than experiments.
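To make the "query memory like a tool" idea concrete, here is a minimal sketch in Python. It is not Vanar's API: `KnowledgeObject`, `MemoryStore`, and `build_prompt` are hypothetical names invented for illustration, and the store is a plain in-process dictionary standing in for an external memory service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeObject:
    """A compact, structured fact distilled from raw history (hypothetical shape)."""
    key: str
    summary: str
    tags: frozenset


class MemoryStore:
    """Toy stand-in for an external memory service, indexed by tag."""

    def __init__(self):
        self._objects = {}  # key -> KnowledgeObject
        self._by_tag = {}   # tag -> set of keys

    def write(self, obj):
        # If the key is being overwritten, drop its stale index entries first.
        old = self._objects.get(obj.key)
        if old is not None:
            for tag in old.tags:
                self._by_tag[tag].discard(obj.key)
        self._objects[obj.key] = obj
        for tag in obj.tags:
            self._by_tag.setdefault(tag, set()).add(obj.key)

    def query(self, tags, limit=3):
        """Return up to `limit` objects that carry every requested tag."""
        tags = list(tags)
        if not tags:
            return []
        keys = set.intersection(*(self._by_tag.get(t, set()) for t in tags))
        return [self._objects[k] for k in sorted(keys)][:limit]


def build_prompt(question, store, tags):
    """Inject only the retrieved summaries, not the full history."""
    facts = store.query(tags)
    lines = [f"- {o.summary}" for o in facts]
    return "Known facts:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"
```

The agent's prompt now grows with the number of relevant objects rather than with the length of its history, which is the property the paragraph above describes.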

The impact is especially visible in persistent workflows. Consider an always-on research agent monitoring market signals. In traditional systems, it must repeatedly load large histories to maintain awareness. With Vanar’s memory architecture, the agent simply queries structured knowledge objects. Intelligence compounds over time instead of resetting at every lifecycle event. What it learns today remains actionable tomorrow.

This persistence also enables portability. Agents can shut down, restart elsewhere, or scale across machines without losing continuity. Knowledge survives across processes. That seemingly simple property transforms reliability in distributed AI systems. It means background agents can operate for weeks or months without suffering from context decay or runaway prompt costs.
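The "disposable agent, durable memory" property can be sketched with nothing more than the Python standard library. The example below is an assumption-laden illustration, not Vanar's implementation: `DurableMemory` and `Agent` are hypothetical classes, and a local SQLite file plays the role of the external memory layer.

```python
import json
import os
import sqlite3
import tempfile


class DurableMemory:
    """Key-value memory backed by a SQLite file (stand-in for a real memory layer)."""

    def __init__(self, path):
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS memory (key TEXT PRIMARY KEY, value TEXT NOT NULL)"
        )
        self._conn.commit()

    def remember(self, key, value):
        self._conn.execute(
            "INSERT OR REPLACE INTO memory (key, value) VALUES (?, ?)",
            (key, json.dumps(value)),
        )
        self._conn.commit()

    def recall(self, key, default=None):
        row = self._conn.execute(
            "SELECT value FROM memory WHERE key = ?", (key,)
        ).fetchone()
        return default if row is None else json.loads(row[0])

    def close(self):
        self._conn.close()


class Agent:
    """Disposable worker: everything it needs to resume lives in the memory store."""

    def __init__(self, memory):
        self.memory = memory

    def observe(self, signal):
        seen = self.memory.recall("signals_seen", [])
        seen.append(signal)
        self.memory.remember("signals_seen", seen)
        return len(seen)


def demo_restart():
    path = os.path.join(tempfile.mkdtemp(), "memory.db")

    first_store = DurableMemory(path)
    Agent(first_store).observe("rate-cut rumor")
    first_store.close()  # the first instance goes away

    second_store = DurableMemory(path)  # a fresh instance attaches to the same memory
    count = Agent(second_store).observe("volume spike")
    history = second_store.recall("signals_seen")
    second_store.close()
    return count, history
```

The second `Agent` has no in-process state from the first, yet it continues the same history. A production system would swap the local file for a shared service so that instances on different machines see the same memory.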

Vanar’s approach also aligns with the broader evolution of decentralized infrastructure. As AI systems move toward multi-agent coordination, composability becomes critical. Memory cannot remain trapped inside isolated runtime instances. It must be interoperable, structured, and economically efficient. Vanar treats memory as a protocol-level primitive rather than an afterthought.

There is a philosophical layer to this as well. Intelligence without memory is reaction. Intelligence with memory becomes strategy. When agents retain structured knowledge, they can identify patterns, refine models of the world, and optimize decisions over time. Compounding knowledge is what turns tools into systems and systems into infrastructure.

From a performance perspective, this architecture reduces unnecessary token consumption by eliminating repetitive context injection. Instead of reprocessing entire histories, agents retrieve precisely what matters. In long-running deployments, where the same context would otherwise be re-sent on every call, even a 30–40% reduction in context overhead adds up across thousands of calls and can materially change cost structures. At scale, that difference defines viability.
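A quick back-of-the-envelope calculation shows how a reduction in context overhead translates into total token spend. The function below is a sketch, and the workload numbers in the usage note are illustrative assumptions rather than measurements from Vanar or any real deployment.

```python
def total_tokens(calls, base_tokens, context_tokens):
    """Tokens sent over `calls` requests, each carrying a fixed base prompt plus context."""
    return calls * (base_tokens + context_tokens)


def context_savings(calls, base_tokens, context_tokens, reduction):
    """Compare token spend before and after shrinking context by `reduction` (0.0 to 1.0).

    Returns (tokens_before, tokens_after, fraction_saved_overall).
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0.0 and 1.0")
    before = total_tokens(calls, base_tokens, context_tokens)
    after = total_tokens(calls, base_tokens, context_tokens * (1.0 - reduction))
    return before, after, (before - after) / before
```

For an assumed workload of 10,000 calls, a 500-token base prompt, and 4,000 tokens of injected context per call, `context_savings(10_000, 500, 4_000, 0.35)` goes from 45 million tokens to roughly 31 million, an overall saving of about 31%. The overall figure is slightly lower than the 35% context reduction because the base prompt is unaffected, so the benefit is largest when context dominates each request.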

Vanar’s vision extends beyond a single API. It represents a move toward AI-native infrastructure where persistence, portability, and composability are built into the foundation. In such an environment, agents are not isolated scripts but coordinated actors operating on shared knowledge layers.

The future of AI will not be defined solely by larger models. It will be defined by better systems. Systems that remember. Systems that scale without forgetting. Systems where intelligence survives the instance.

Vanar is building that layer. Not louder agents. Not flashier prompts. But durable intelligence designed to persist, compound, and operate as real infrastructure in the AI-native internet.