I’ve watched enough waves of blockchain innovation to be careful whenever a project claims it is unlocking the next phase of artificial intelligence. Usually what follows is a familiar pattern. A new execution model appears, a few demonstrations circulate, and suddenly the narrative jumps straight to inevitability. Adoption, we are told, is around the corner.

In practice, most of these stories underestimate something basic.

Intelligence is not only about computation. It is about memory.

After spending time examining how AI-oriented infrastructure behaves on @Vanarchain, I've come to think the more interesting question is not how fast agents can run or how cheaply they can execute. It is how reliably they can remember.

That might sound like a secondary concern. It isn’t.

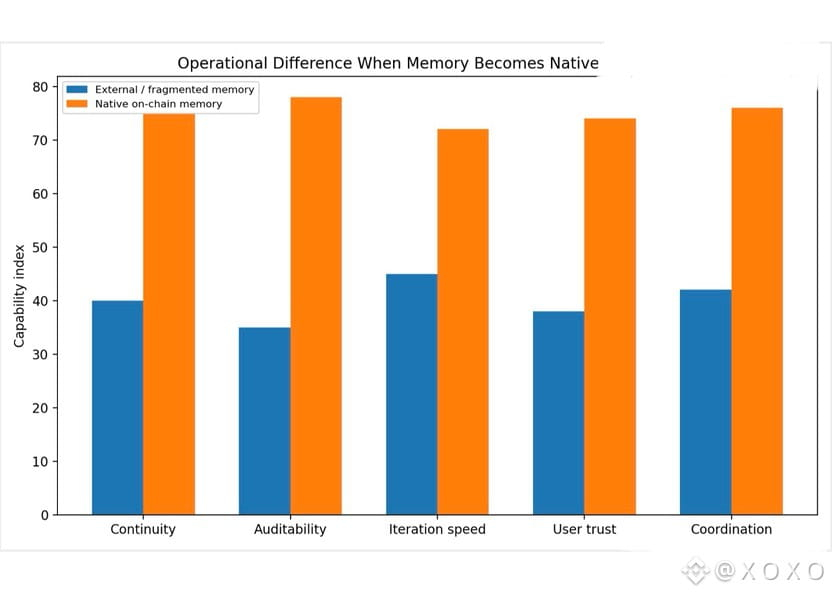

When AI systems operate in real environments, continuity determines usefulness. An agent that cannot recall previous decisions, prior ownership states, or historical interactions becomes reactive rather than strategic. It can answer prompts, but it cannot build relationships. It can perform tasks, but it cannot develop accountability.

Memory is what turns output into behavior.

Yet most blockchain environments still treat memory as an externality. Data may exist somewhere, but it is not embedded into the operational fabric of the system. It is retrievable, but not native. Persistent, but not coordinated.

This gap introduces friction.

The moment AI touches value, the absence of integrated memory becomes visible. Users want explanations. Builders need audit trails. Markets require consistency. Without a shared historical layer, every action feels isolated from the one before it.

You can simulate intelligence in that environment. Sustaining it is harder.

Vanar appears to begin from this constraint rather than ignore it.

What stands out is the attempt to make memory legible to the system itself, not merely to external observers. The goal seems less about storing information and more about allowing intelligent processes to reference it in ways that remain verifiable.

That difference is subtle but important.

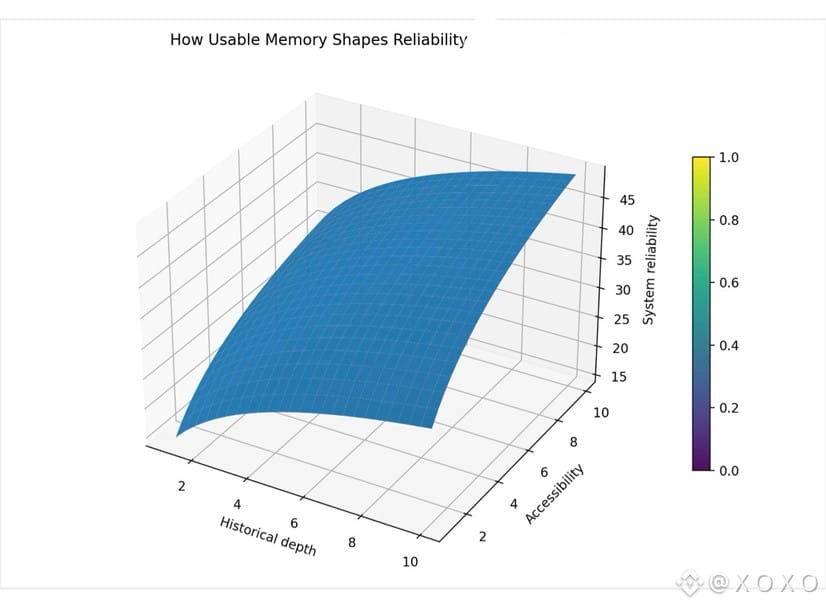

Storage preserves history. Native memory allows history to shape future behavior.

If you follow how agents typically evolve, this becomes critical. Improvement depends on feedback loops. Decisions influence outcomes, which influence later decisions. When those loops are fragmented across platforms or require ad hoc retrieval, development slows. Coordination weakens.

But when memory lives within the environment where execution happens, iteration tightens.

Learning accelerates.

Another effect is social. Users interact more comfortably with systems that can demonstrate continuity. They expect preferences to persist. They expect context to matter. Without memory, every interaction resets trust.

Repeated resets are exhausting.

Infrastructure that reduces those resets tends to retain participants longer.

It is also worth noting what Vanar avoids. There is no dramatic claim that simply placing data onchain will magically produce intelligence. The posture is more grounded. Memory provides conditions for reliability, not genius. Agents still need design, training, and oversight.

That restraint makes the argument stronger.

From a developer perspective, native memory reduces translation work. Instead of stitching together external services, teams can design around shared references. Identity, assets, and prior actions become building blocks rather than obstacles.

Time saved on plumbing can be invested in product.

Security logic benefits as well. Systems with accessible historical grounding make anomalies easier to detect. Participants can reason about deviations. Predictability improves.

AI operating without history may be creative. AI operating with history becomes accountable.

Governance becomes more meaningful under this model. Decisions are not abstract votes; they become part of a recorded narrative that future processes can consult. Institutional memory begins to form.

That is how organizations mature.

After examining how these ideas intersect, what strikes me is not how futuristic the approach feels but how practical it is. Vanar seems less interested in dazzling demonstrations and more interested in ensuring that intelligent systems can operate repeatedly without losing context.

This is less glamorous than speed benchmarks, but likely more necessary.

One way to measure infrastructure readiness is to ask what happens on the thousandth interaction, not the first. Systems built for spectacle often degrade over time. Systems built for memory tend to stabilize.

Durability becomes visible slowly.

Of course, implementing native memory is not trivial. Questions of scale, privacy, and interpretation remain. Healthy skepticism is warranted. But acknowledging those difficulties is part of being serious. Pretending they do not exist would be easier.

Vanar appears to be choosing the harder path.

What you are left with is a different emotional response from the one typical AI narratives produce. Not amazement, but reassurance. A sense that the environment is preparing for sustained activity rather than temporary excitement.

Confidence accumulates quietly.

If intelligent agents are going to participate meaningfully in economies, they will need more than execution. They will need recall, traceability, and shared history. Otherwise, coordination will always lag capability.

Native memory is how that gap begins to close.

Whether Vanar ultimately delivers will depend on execution. Early impressions can mislead, and infrastructure only proves itself under stress. Still, the direction is clear. The project is treating memory as foundational rather than optional.

And that alone makes it worth continued attention.