For years, conversations about artificial intelligence have been framed around fear of cognition. Will machines think better than humans? Will they replace jobs? Will they outsmart us? But quietly, a more immediate and more practical risk has been growing in the background, largely unnoticed by mainstream discussion. It is not about thinking. It is about spending.

The moment software is allowed to move money on its own, the stakes change completely. Intelligence becomes secondary. Control becomes everything.

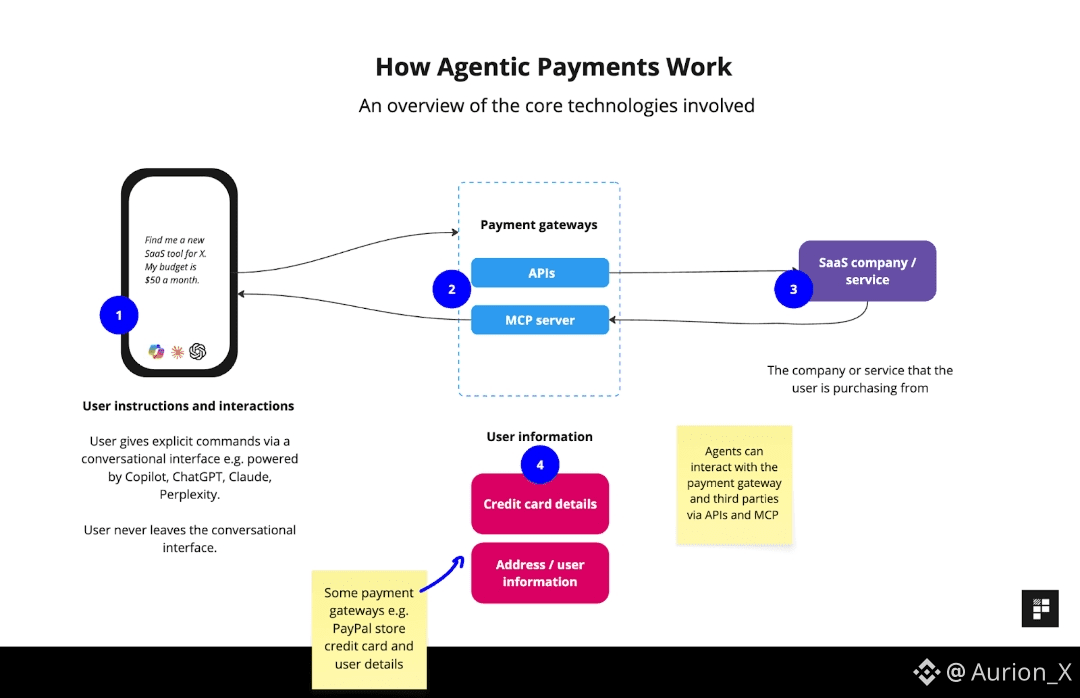

AI systems today already plan, decide, and act at speeds no human can supervise in real time. They search, negotiate, optimize, and execute thousands of actions per minute. As long as these systems are boxed into recommendation engines or analysis tools, the risk feels abstract. But once they are allowed to pay for services, rent compute, buy data, or coordinate financially with other agents, abstraction disappears. Money makes mistakes real.

This is the problem Kite is built around. Not intelligence, not hype, not speculation, but the uncomfortable reality that autonomous systems need a safe, enforceable way to handle payments without quietly borrowing a human’s identity and hoping nothing goes wrong.

Most financial infrastructure on the internet was never designed for this. Credit cards assume disputes. Bank accounts assume reversibility. Wallets assume a human is watching. API keys assume trust. None of these assumptions hold when software operates continuously and autonomously. An agent does not feel hesitation. It does not sense context. If something is technically allowed, it will do it at scale.

This is why uncontrolled payments are the real risk.

Today’s automation stack solves this problem poorly. Developers rely on centralized dashboards, spending limits enforced off-chain, or shared wallets with blanket permissions. These systems work until they don’t. When an API key leaks, funds drain instantly. When a bot malfunctions, there is no graceful failure. When authority is too broad, every mistake becomes catastrophic.

Kite starts from the assumption that this is unacceptable at scale.

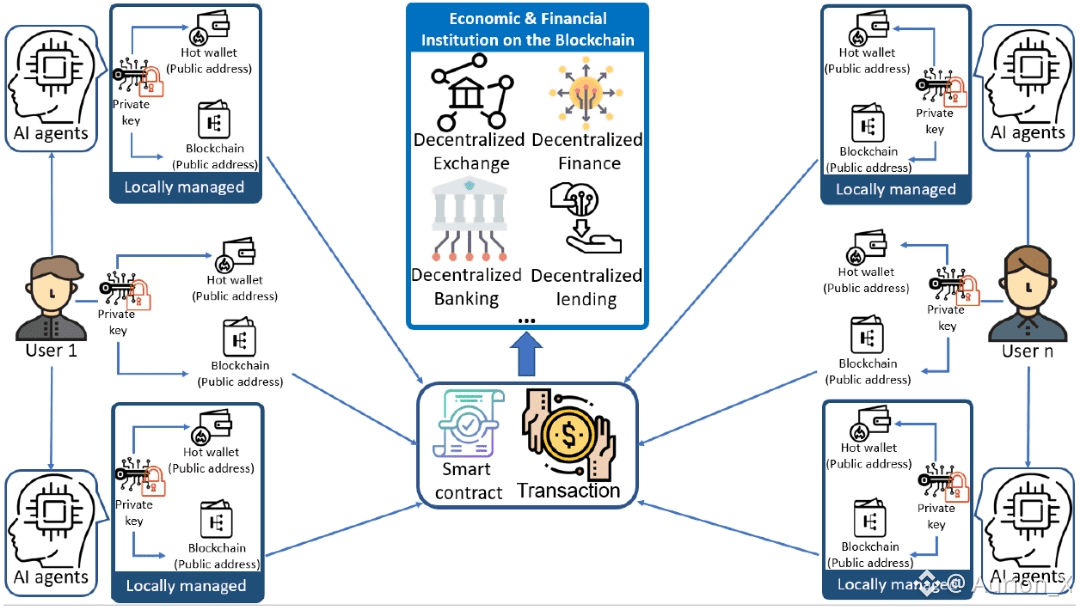

Instead of treating payment as a simple transfer of value, Kite treats it as an act of delegated authority. Who authorized this action? Under what limits? For how long? On behalf of whom? Most blockchains today cannot answer these questions, because identity and control are collapsed into a single private key. If the key signs, the system assumes legitimacy.

That model breaks the moment machines become economic actors.

Kite restructures this entirely by separating identity into three layers. The human remains the root authority: the entity that defines intent, owns capital, and ultimately bears responsibility. Below that sits the agent, an autonomous program that can act independently but only within explicit boundaries defined in advance. Below the agent sits the session, a temporary execution context created for a specific task, with a limited lifespan and scope.

This structure mirrors how responsibility works in the real world. A company authorizes an employee. The employee performs a task. The task exists within defined limits. If something goes wrong, the failure is isolated. You don’t lose the entire organization because one task failed.

By encoding this logic directly into the blockchain, Kite turns delegation from a psychological risk into a mechanical process. Agents cannot exceed their authority because the system will not allow it. Sessions expire automatically. Permissions can be revoked without touching core assets. Auditability exists without requiring constant human oversight.
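To make the layering concrete, here is a minimal Python sketch of how a payment might be checked against each layer before it is allowed. It is not Kite's actual API or on-chain logic. The `Agent` and `Session` classes, their field names, and the cent-denominated limits are all hypothetical, chosen only to illustrate the idea of nested, expiring, revocable authority.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical delegated actor with a total budget set by the human root."""
    name: str
    owner: str              # the human on whose behalf the agent acts
    budget_cents: int       # hard cap across all of the agent's sessions
    spent_cents: int = 0
    revoked: bool = False


@dataclass
class Session:
    """Hypothetical short-lived execution context scoped to one task."""
    agent: Agent
    cap_cents: int          # cap for this task only
    expires_at: float       # timestamp after which the session is dead
    spent_cents: int = 0
    log: list = field(default_factory=list)

    def pay(self, payee: str, amount_cents: int, now: float) -> bool:
        """Record the attempt; move funds only if every layer allows it."""
        allowed = (
            amount_cents > 0
            and not self.agent.revoked
            and now < self.expires_at
            and self.spent_cents + amount_cents <= self.cap_cents
            and self.agent.spent_cents + amount_cents <= self.agent.budget_cents
        )
        if allowed:
            self.spent_cents += amount_cents
            self.agent.spent_cents += amount_cents
        # Every attempt is logged with who, on whose behalf, and the outcome.
        self.log.append(
            (now, self.agent.owner, self.agent.name, payee, amount_cents, allowed)
        )
        return allowed


agent = Agent(name="research-bot", owner="alice", budget_cents=10_000)
session = Session(agent=agent, cap_cents=500, expires_at=1_000.0)

first = session.pay("data-api", 300, now=10.0)      # within both limits
second = session.pay("data-api", 300, now=20.0)     # would exceed the session cap
late = session.pay("data-api", 100, now=2_000.0)    # session has expired

agent.revoked = True                                # root revokes the agent
fresh = Session(agent=agent, cap_cents=500, expires_at=5_000.0)
after_revoke = fresh.pay("data-api", 100, now=3_000.0)
```

Only the first payment goes through. The other three fail for three different reasons (session cap, expiry, revocation), and the human's remaining budget is untouched in each case. In a real system these checks would have to be enforced by the settlement layer itself rather than by application code the agent could bypass.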

This matters because agents will fail. They will misinterpret prompts. They will be exploited. They will operate in adversarial environments. Designing systems that assume perfect behavior is naive. Designing systems that limit damage is realistic.

Payments themselves follow the same philosophy. Machines do not need exciting money. They need predictable money. Volatility is noise. Noise becomes risk when decisions are automated. If an agent is choosing between services, it needs cost to be a stable variable. Otherwise, rational optimization collapses into randomness.

This is why Kite leans into stablecoin-native settlement and predictable execution. It is not a narrative choice. It is a usability requirement. Stable settlement allows agents to reason cleanly. Predictable fees prevent runaway behavior. Fast confirmation allows coordination without bottlenecks.
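A small worked example shows why an unstable unit of account undermines automated choice. The providers, prices, and exchange rates below are invented for illustration and do not describe any real service or token.

```python
def cheapest(costs_usd):
    """Return the provider with the lowest cost per call."""
    return min(costs_usd, key=costs_usd.get)


PRICE_A_USD = 0.010       # provider_a quotes in a stable unit
PRICE_B_TOKENS = 0.004    # provider_b quotes in a volatile token (hypothetical)


def costs(usd_per_token):
    """Convert both quotes to USD at a given token exchange rate."""
    return {
        "provider_a": PRICE_A_USD,
        "provider_b": PRICE_B_TOKENS * usd_per_token,
    }


# At quote time the token trades at 2.0 USD, so provider_b costs 0.008 USD.
choice_at_quote = cheapest(costs(2.0))

# By settlement the token trades at 3.5 USD, so provider_b costs 0.014 USD.
choice_at_settlement = cheapest(costs(3.5))
```

The agent picks `provider_b` at quote time, yet `provider_a` would have been cheaper by the time the payment settles. Nothing about either service changed. Only the unit of account moved, which is exactly the kind of noise a stable settlement asset removes from the decision.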

Kite treats payments like utilities. They are supposed to be boring. They are supposed to work every time. They are supposed to fade into the background so automation can function without constant exception handling.

The deeper shift Kite is preparing for is not agents serving humans, but agents transacting with each other. One agent will pay another for data. Another will pay for inference. Another will outsource a subtask. These interactions will happen invisibly, continuously, and at machine speed. Humans will not approve them individually. They will only define the boundaries.

Without proper identity, this becomes spam. Without constraints, it becomes abuse. Without predictable settlement, it becomes chaos.

Kite is designed for this invisible economy before it becomes obvious.

The KITE token fits into this system as coordination rather than fuel. In the early phase, it is used to encourage participation, experimentation, and ecosystem growth. This attracts builders and users who are interested in making things work rather than extracting short-term value. As real usage emerges, staking, governance, and fee mechanics activate around actual economic activity. This sequencing matters because premature financialization distorts incentives and attracts the wrong kind of attention.

Governance in an agent-driven system is not about loud voting or rapid change. It is about defining the rules under which autonomous systems are allowed to operate safely. Poor governance does not just slow a network down; it introduces systemic risk. Kite’s gradual, usage-driven approach reflects an understanding that rules should emerge from reality, not theory.

What makes Kite different is not that it promises a dramatic future. It accepts a boring truth. If machines are going to handle money, infrastructure must prioritize control over spectacle. Clarity over hype. Limits over freedom. Trust over speed for its own sake.

Humans are not being removed from the system. They are being repositioned. Instead of executing every action, humans define intent. Machines execute within constraints. Accountability remains intact even as autonomy scales.

The real question Kite forces the market to confront is not whether AI will become more intelligent. That is already happening. The question is whether we are ready to let software move money without losing control. Whether our systems can express delegation clearly. Whether failure can be contained. Whether trust can be enforced rather than assumed.

Kite does not claim inevitability. It does not promise dominance. It prepares for a future where uncontrolled payments are the real danger and controlled autonomy is the solution.

If that future arrives slowly, Kite will look patient. If it arrives suddenly, Kite will look prepared.

And in infrastructure, preparation is usually what separates systems that survive from systems that break.