I’ve spent the last few months building prototypes on Sui that rely heavily on decentralized blob storage for media and datasets, such as AI model checkpoints and on-chain video snippets. Walrus, the decentralized storage network on Sui powered by the $WAL token, has been my main tool for this. The Binance CreatorPad campaign, running from January 6 to February 9, 2026, offers a 300,000 WAL reward pool for quality content and engagement, so it’s a good time to talk about one of the things that makes Walrus practical for builders: cost-effective redundancy via RedStuff erasure coding, especially compared with full replication.

Have you compared storage costs across protocols yet, or run into high fees that made you rethink a project?

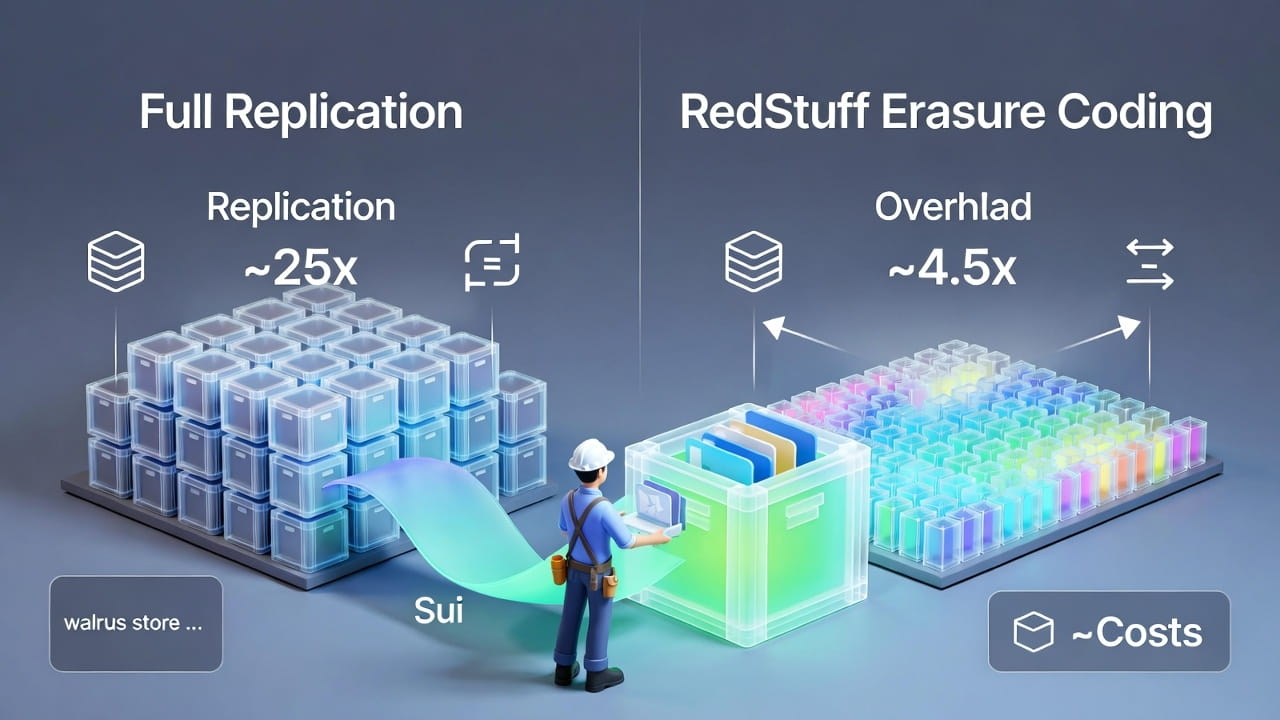

My entry point was a real project pain point. Early on, I was uploading a 2GB dataset to test an on-chain agent, and I needed high durability without breaking the bank. I ran `walrus store dataset.tar.gz --epochs 10` from the CLI and watched the numbers. The encoded size came out around 9-10GB (roughly 4.5-5x the original), yet the upfront WAL payment was surprisingly low, with WAL trading around $0.145-$0.147. The blob was certified on Sui, and I could verify its availability proofs without issues. What stood out was the comparison with full replication: if every node had to hold a complete copy, the storage overhead would be massive, likely 20-25x or more for similar fault tolerance in a Byzantine setting.
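To put those numbers side by side, here is a small Python sketch of the footprint arithmetic. The factors are the approximate figures quoted in this post (about 4.5-5x for RedStuff, about 25x for full replication), not values read from the network, and `footprint_gb` is a hypothetical helper name.

```python
# Rough storage-footprint comparison for one blob.
# The factors are the approximate figures quoted in this post, not live network values.
REDSTUFF_FACTOR_LOW = 4.5        # observed encoded size / original size (low end)
REDSTUFF_FACTOR_HIGH = 5.0       # observed encoded size / original size (high end)
FULL_REPLICATION_FACTOR = 25.0   # rough factor for comparable Byzantine fault tolerance


def footprint_gb(original_gb: float, factor: float) -> float:
    """Total data the network stores, in GB, for a blob at a given overhead factor."""
    return original_gb * factor


dataset_gb = 2.0
low = footprint_gb(dataset_gb, REDSTUFF_FACTOR_LOW)
high = footprint_gb(dataset_gb, REDSTUFF_FACTOR_HIGH)
replicated = footprint_gb(dataset_gb, FULL_REPLICATION_FACTOR)

print(f"RedStuff:         {low:.1f}-{high:.1f} GB")   # 9.0-10.0 GB
print(f"Full replication: {replicated:.1f} GB")        # 50.0 GB
print(f"Replication stores {replicated / high:.1f}x-{replicated / low:.1f}x more data")
```

For the 2GB dataset, that is roughly 9-10GB stored under RedStuff versus about 50GB under full replication.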

RedStuff is Walrus’s two-dimensional erasure coding scheme, and it is what makes this work. Imagine a traditional warehouse that keeps a full duplicate of every box in every location: safe, but enormously expensive in space. Full replication in decentralized systems works the same way. Copying the entire file across many nodes gives high reliability, but the replication factor balloons (often 25x or more for strong security), driving up costs and limiting scale.

Walrus flips this with RedStuff. It splits your blob into symbols arranged in a 2D grid, adds redundancy along both rows and columns, and distributes the resulting slivers to the storage nodes in the committee. The network tolerates up to one-third of nodes being faulty or Byzantine, and a blob can still be reconstructed even if up to two-thirds of its slivers are lost, all with only about 4.5x overhead. Recovery is efficient too: bandwidth is proportional to the lost portion, not the whole file. It’s like a self-healing puzzle where missing pieces can be rebuilt cheaply from the remaining ones.
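The row-and-column idea can be illustrated with a deliberately simplified toy: a grid of small integers with one XOR parity symbol per row and one parity row over the columns. This is not RedStuff, which uses real erasure codes over many more symbols and tolerates far more loss; it only shows why a second dimension lets one lost symbol be rebuilt from a single row or column instead of the whole blob. All function names here are hypothetical.

```python
from functools import reduce
from operator import xor
from typing import List, Optional

Grid = List[List[Optional[int]]]


def encode_2d(data: List[List[int]]) -> Grid:
    """Toy 2D code: add one XOR parity symbol per row, then one parity row over every column."""
    with_row_parity = [row + [reduce(xor, row)] for row in data]
    parity_row = [reduce(xor, col) for col in zip(*with_row_parity)]
    return with_row_parity + [parity_row]


def repair_from_row(grid: Grid, r: int, c: int) -> int:
    """Rebuild the single missing symbol at (r, c) from the other symbols in its row."""
    return reduce(xor, (v for j, v in enumerate(grid[r]) if j != c))


def repair_from_column(grid: Grid, r: int, c: int) -> int:
    """Rebuild the single missing symbol at (r, c) from the other symbols in its column."""
    return reduce(xor, (grid[i][c] for i in range(len(grid)) if i != r))


blob = [[1, 2, 3],
        [4, 5, 6]]
encoded = encode_2d(blob)            # 3 x 4 grid: data plus row and column parity
damaged = [row[:] for row in encoded]
damaged[1][1] = None                 # simulate one lost symbol
print(repair_from_row(damaged, 1, 1))     # → 5, using the 3 surviving symbols in row 1
print(repair_from_column(damaged, 1, 1))  # → 5, using the 2 surviving symbols in column 1
```

A single XOR parity can only repair one loss per row or column, so this toy says nothing about RedStuff's actual fault tolerance. What carries over is the shape of repair: each fix reads a thin slice of the grid rather than the full blob.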

During the CreatorPad campaign, this efficiency shaped my approach. One task involved sharing protocol deep dives with real examples, so I uploaded sample media for posts: images and short clips totaling around 1.5GB. WAL fees scale linearly with encoded size, so I was effectively paying for about 7-8GB, and it still felt much cheaper than alternatives I’d tried before. Mid-campaign, I swapped about $150 worth of SUI for WAL to extend a couple of blobs when an epoch rolled over. That trade refined my thinking: the low overhead lets me store more aggressively without costs eating into prototype budgets. What caught me off guard was how seamless reads stayed, with no downtime during committee shifts even though the redundancy is so much leaner.
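Here is a minimal sketch of that linear-pricing intuition. Everything in it is an assumption for illustration: `PRICE_WAL_PER_GB_EPOCH` is a made-up placeholder (real prices come from the network and are not reproduced here), the ~5x factor is the approximate encoded-size ratio observed above, and other fees and metadata are ignored. The only point is the shape: cost grows with encoded size times the number of epochs.

```python
# Hypothetical linear cost model. Both constants are placeholders for illustration:
# the factor approximates the encoded/original ratio seen above, and the price is made up.
ENCODING_FACTOR = 5.0            # approx. encoded size / original size
PRICE_WAL_PER_GB_EPOCH = 0.01    # placeholder, NOT a real Walrus price


def estimate_cost_wal(original_gb: float, epochs: int,
                      factor: float = ENCODING_FACTOR,
                      price: float = PRICE_WAL_PER_GB_EPOCH) -> float:
    """Estimated WAL cost, assuming it scales linearly with encoded size and with epochs."""
    if epochs < 1:
        raise ValueError("epochs must be at least 1")
    encoded_gb = original_gb * factor
    return encoded_gb * epochs * price


initial = estimate_cost_wal(1.5, epochs=10)    # 1.5GB of media, about 7.5GB encoded
extension = estimate_cost_wal(1.5, epochs=5)   # extending the same blobs by 5 more epochs
print(f"initial: {initial:.3f} WAL, extension: {extension:.3f} WAL")
```

Under this model, halving the number of extra epochs halves the extension cost, and doubling the original size doubles everything, which is why a lower encoding factor translates directly into cheaper retention.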

There are tradeoffs, naturally. RedStuff’s guarantees assume that no more than one-third of the committee (by shards) is faulty or Byzantine, so stake concentration could introduce risks, though voting and future slashing mechanisms help. Recovery bandwidth, while efficient, still requires coordination during churn, and in extreme scenarios such as massive simultaneous failures you might hit bottlenecks. Cost timing matters as well: for campaign creators, timing uploads around epoch boundaries can save WAL if prices adjust upward. And it’s not zero-overhead; you still pay for roughly 5x the blob size in storage resources, though that’s a fraction of what full replication requires.
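To get a feel for the repair-bandwidth difference, here is an order-of-magnitude sketch based on my reading of the design, not a measurement. It assumes n = 3f + 1 shards, a blob laid out as an (f+1) x (2f+1) symbol matrix, and a repairing shard that fetches roughly as many symbols as it stores (3f + 2). A classic one-dimensional code, by contrast, must fetch k pieces of size B/k, or about the whole blob, to rebuild one shard's piece. The function names and the choice of f = 333 are illustrative.

```python
# Simplified per-shard repair traffic, order-of-magnitude only (metadata ignored).
# Assumes n = 3f + 1 shards and an (f+1) x (2f+1) symbol layout for the 2D scheme.


def classic_repair_bytes(blob_bytes: float) -> float:
    """1D erasure code: rebuilding one shard's piece needs k pieces of size B/k, i.e. about B."""
    return blob_bytes


def redstuff_repair_bytes(blob_bytes: float, f: int) -> float:
    """2D scheme: a shard fetches roughly the 3f+2 symbols it stores, each B/((f+1)(2f+1))."""
    symbol_bytes = blob_bytes / ((f + 1) * (2 * f + 1))
    return (3 * f + 2) * symbol_bytes


blob_bytes = 2e9   # the 2GB dataset mentioned earlier
f = 333            # n = 3f + 1 = 1000 shards (assumed for illustration)
print(f"classic ECC: ~{classic_repair_bytes(blob_bytes) / 1e6:,.0f} MB per repaired shard")
print(f"RedStuff:    ~{redstuff_repair_bytes(blob_bytes, f) / 1e6:,.1f} MB per repaired shard")
```

Under these assumptions, repairing one shard moves about 2 GB with a classic code versus about 9 MB with the 2D layout. Real traffic depends on the implementation and on how many nodes churn at once, which is where the coordination cost comes in.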

One non-obvious insight from hands-on use: the ~4.5x factor quietly enables longer retention on smaller budgets. In my tests, extending blobs across multiple epochs cost far less than I expected, which changes how I design apps: pinning data for months instead of weeks becomes feasible. A quick chart comparing replication factors (full replication ~25x, classic erasure coding ~3x but with costly recovery, RedStuff ~4.5x) against security and recovery costs would highlight this edge clearly.
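The ~4.5x and ~3x figures can be reproduced from coding parameters. This sketch assumes, per my understanding of the design, n = 3f + 1 shards, a blob arranged as an (f+1) x (2f+1) symbol matrix, and each shard storing one primary sliver of 2f + 1 symbols plus one secondary sliver of f + 1 symbols. That gives an overhead of n(3f + 2) / ((f + 1)(2f + 1)), which approaches 9/2 = 4.5 as f grows. The classic comparison assumes a 1D code with f + 1 source pieces spread across n shards, giving n / (f + 1), which approaches 3. Metadata and padding are ignored, which is part of why observed sizes land closer to 5x. The 25x full-replication figure is the rough number quoted in this post, not something derived here.

```python
from fractions import Fraction


def redstuff_overhead(f: int) -> Fraction:
    """Encoded/original size for the assumed 2D layout with n = 3f + 1 shards (metadata ignored)."""
    n = 3 * f + 1
    source_symbols = (f + 1) * (2 * f + 1)          # blob as an (f+1) x (2f+1) matrix
    stored_symbols = n * ((2 * f + 1) + (f + 1))    # per shard: primary + secondary sliver
    return Fraction(stored_symbols, source_symbols)


def classic_ecc_overhead(f: int) -> Fraction:
    """Encoded/original size for a 1D code: f + 1 source pieces spread over n = 3f + 1 shards."""
    return Fraction(3 * f + 1, f + 1)


FULL_REPLICATION = 25  # rough figure quoted in this post; not derived from a formula here

for f in (1, 10, 333):
    print(f"f={f:>3}  n={3 * f + 1:>4}  "
          f"RedStuff ~{float(redstuff_overhead(f)):.2f}x  "
          f"classic ECC ~{float(classic_ecc_overhead(f)):.2f}x  "
          f"full replication ~{FULL_REPLICATION}x")
```

At n = 1000 shards (f = 333), the formulas give about 4.49x for the 2D layout and 2.99x for the classic code. The extra ~1.5x over classic erasure coding is the price of cheap, localized recovery.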

Looking ahead, this cost-effective redundancy positions Walrus well in the Sui ecosystem, where scalable data layers are key for media, AI, and dApps. Reliability has been solid: no lost blobs or failed proofs in my runs. As for the campaign, it rewards creators who actually test and share these mechanics, not just surface-level takes.

Practitioner view: I value the balance—strong durability without prohibitive costs, though I watch stake decentralization closely. It’s a tool that actually works for building, not just theorizing.

What’s your experience storing larger blobs on Walrus—did the costs surprise you compared to expectations? Which sizes or use cases are you testing? Share below; always interesting to hear real numbers.