@Dusk Most privacy failures don’t start with a dramatic breach. They start with a small, sharp question that lands in a procurement thread: can you prove who accessed this data, why they needed it, and what kept it from drifting into places it didn’t belong? Audits turn fuzzy commitments into hard evidence, and they punish systems that rely on “trust me” more than “show me.”

That question is getting louder because regulated finance is edging onto shared digital rails. On January 19, 2026, Intercontinental Exchange, the parent company of the New York Stock Exchange, said it’s developing a platform for tokenized securities with on-chain settlement and ambitions like 24/7 trading, subject to regulatory approval. If traditional markets start treating settlement as software, then controls—privacy controls included—stop being “nice to have” and become infrastructure.

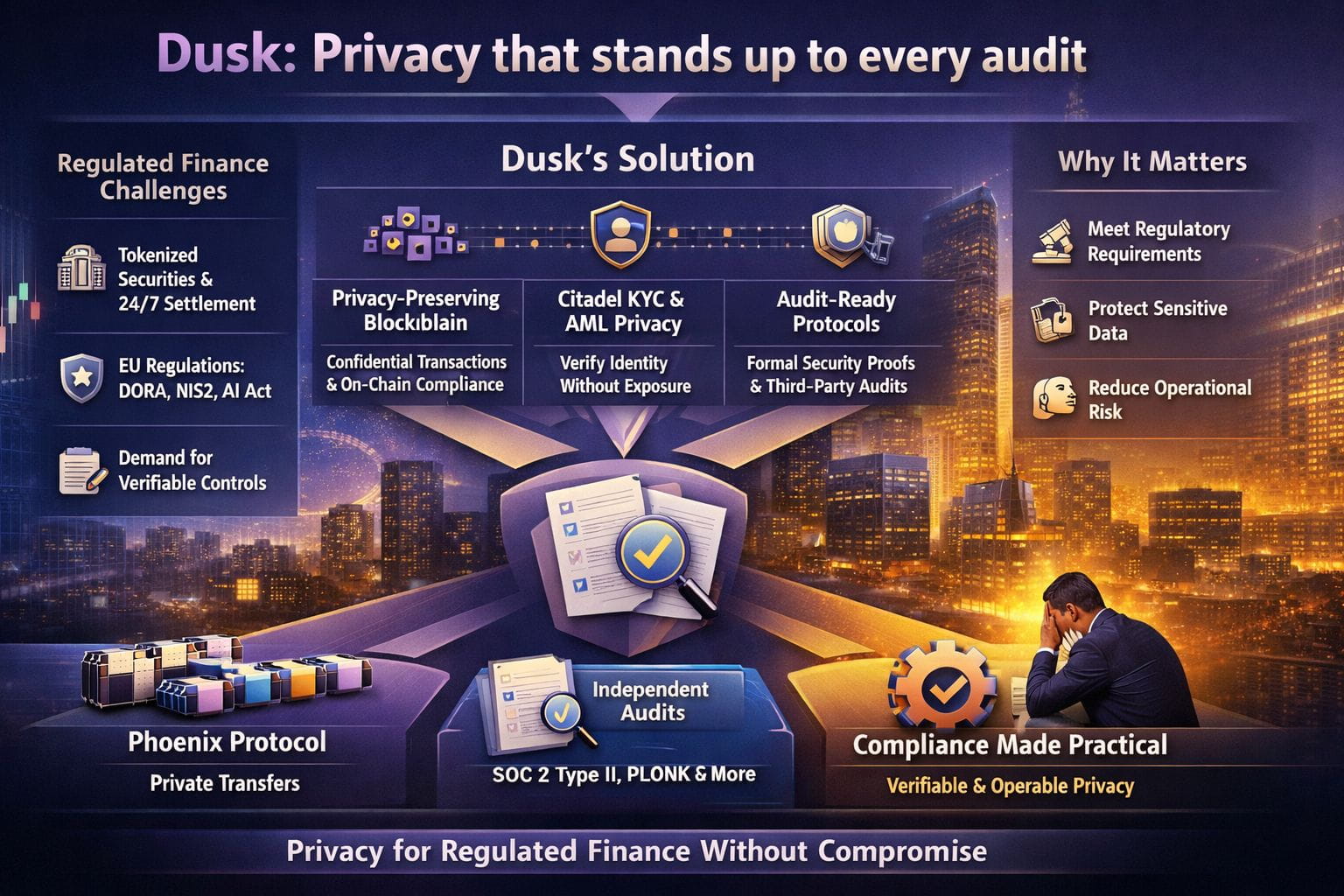

Europe’s rulebook is converging on the same point. DORA, which has applied since Jan 17, 2025, demands operational resilience, including testing and control over third-party providers. NIS2 broadens cybersecurity expectations, and member states had until Oct 17, 2024 to transpose it. The EU AI Act entered into force on Aug 1, 2024, with obligations phasing in through Aug 2026 and beyond. Together, they push for governance that can be verified without constantly disclosing sensitive detail.

Dusk’s pitch makes sense in that climate because it doesn’t treat privacy as secrecy for its own sake. Its documentation describes Dusk as a privacy blockchain for regulated finance, aimed at letting institutions meet regulatory requirements on-chain while users keep confidential balances and transfers instead of full public exposure. That’s the heart of the title: privacy that can be defended, not just asserted.

The protocol tries to get there by separating what must be known from what merely gets leaked. Phoenix, Dusk’s transaction model, is designed for privacy-preserving transfers, and Dusk has published material about formal security proofs for Phoenix. Proofs won’t stop operational mistakes, but they do give auditors and partners something concrete: assumptions to test, edges to probe, and a way to reason about what “private” actually means.

Identity is the next hard wall. KYC and AML checks are where many “privacy” systems quietly turn into data-hoarding machines, because the easiest evidence is more disclosure. Dusk’s Citadel work describes a privacy-preserving identity approach for KYC, meant to let services grant or deny access without exposing a user’s personal details to every intermediary. Even if you’re skeptical, it’s the right direction: minimize who sees what, and make disclosure deliberate.
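To make "minimize who sees what" concrete, here is a minimal Python sketch of selective disclosure using salted hash commitments. It is not Citadel's actual design, which the source describes only at a high level; every name here (`issue_credential`, `verify_disclosure`, the attribute keys) is hypothetical, and the HMAC tag is a stand-in for a real issuer signature or zero-knowledge proof, chosen only because it keeps the example within the standard library.

```python
import hashlib
import hmac
import secrets


def commit(value, salt):
    """Salted SHA-256 commitment to a single attribute value."""
    return hashlib.sha256(salt + value.encode("utf-8")).hexdigest()


def _tag(commitments, issuer_key):
    """Authenticate the full set of commitments (HMAC as a stand-in for a signature)."""
    payload = "|".join(f"{k}={v}" for k, v in sorted(commitments.items()))
    return hmac.new(issuer_key, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_credential(attributes, issuer_key):
    """Hypothetical issuer: commit to each attribute, then tag the commitment set.

    Returns (commitments, tag, salts). The salts stay with the user.
    """
    salts = {name: secrets.token_bytes(16) for name in attributes}
    commitments = {name: commit(value, salts[name]) for name, value in attributes.items()}
    return commitments, _tag(commitments, issuer_key), salts


def verify_disclosure(commitments, tag, issuer_key, name, value, salt):
    """Service-side check: credential is authentic and the one disclosed attribute matches."""
    if not hmac.compare_digest(tag, _tag(commitments, issuer_key)):
        return False
    return hmac.compare_digest(commitments.get(name, ""), commit(value, salt))


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # assumption: verifier can check the issuer's tag
    attrs = {"name": "Alice Example", "country": "NL", "kyc_passed": "true"}
    commitments, tag, salts = issue_credential(attrs, key)
    # The user reveals only the kyc_passed value and its salt.
    ok = verify_disclosure(commitments, tag, key, "kyc_passed", "true", salts["kyc_passed"])
    print("access granted" if ok else "access denied")
```

The service learns that the KYC flag is set and that the credential is authentic, while the name and country remain opaque hashes. A production system would replace the shared-key HMAC with a public-key signature or a zero-knowledge proof so that verifiers never hold issuer secrets.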

Audits also care about process, not just math. Dusk has made a point of publishing third-party security work, summarizing “10 different audits” and “over 200 pages of reporting.” Its public audits repository lists reports across core components, including PLONK, Kadcast networking, multiple Oak Security reviews, a Phoenix audit, and a Zellic smart-contract assessment. That transparency doesn’t guarantee safety, but it shortens the distance between a claim and evidence.

Outside crypto, the same audit-first mindset is spreading because buyers are tired of security promises they can’t verify. SOC 2 Type II tests whether controls actually operate over a period of time, not just whether they exist on paper. Proton’s July 2025 SOC 2 Type II completion is one clear illustration of the market’s appetite for independent evidence.

The part I don’t want to gloss over is the human reality behind these systems. ISACA’s State of Privacy 2026 commentary highlights shrinking teams and rising stress, a reminder that policies fail when people are overloaded. If Dusk’s approach works, it won’t be because it promises perfect privacy. It will be because it makes compliance-compatible privacy easier to operate, easier to audit, and harder to accidentally undermine.