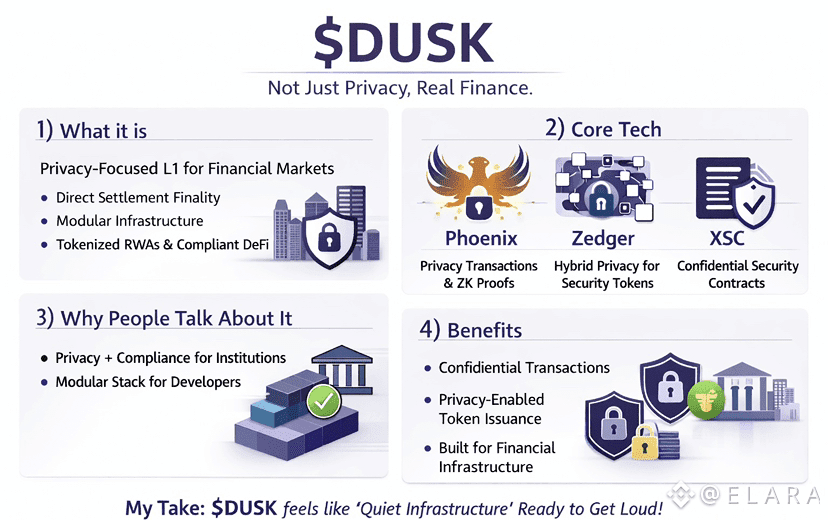

Dusk Network approaches privacy in a way that feels far more practical than absolute secrecy. Instead of hiding everything, its virtual machine lets developers decide exactly what should remain confidential and what should stay visible. As I dig into how this works, it becomes clear that the real breakthrough is not privacy itself but control. This design makes it possible to build contracts that resemble real financial systems, where some data must stay private while other information must remain observable for trust and compliance. This walkthrough looks at how the Dusk Virtual Machine handles that balance, from architecture to execution to real-world usage.

Inside the Virtual Machine Design

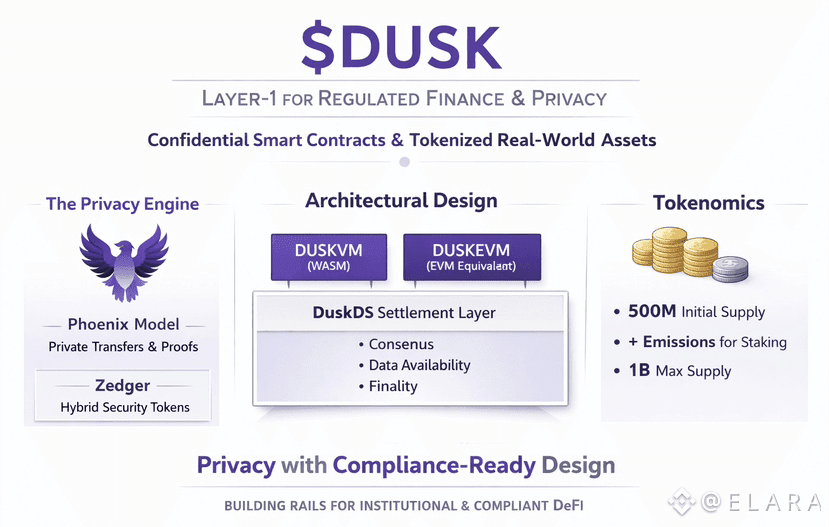

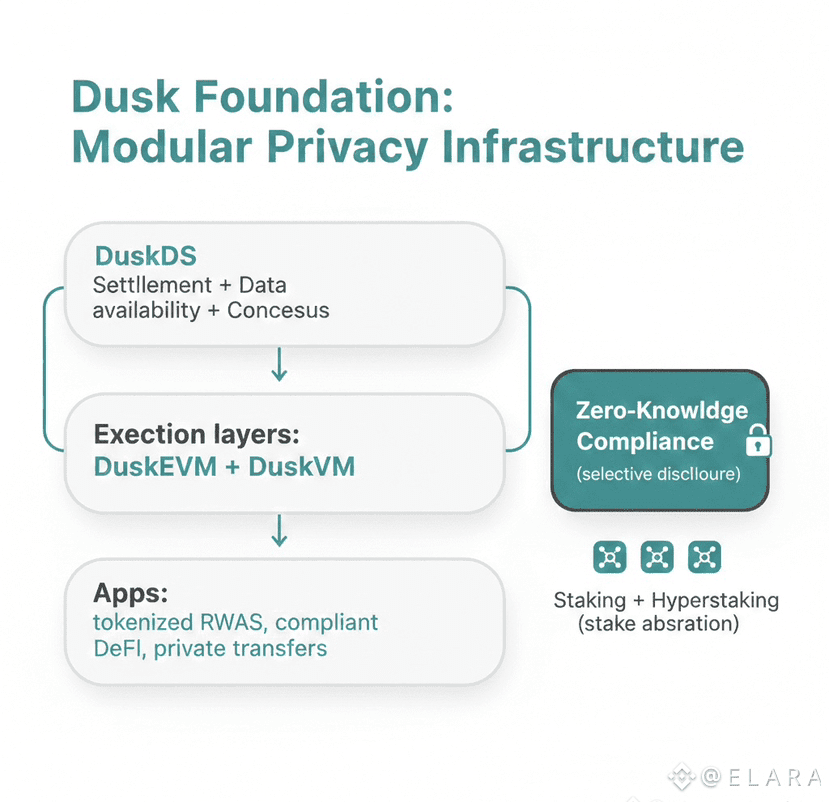

The Dusk Virtual Machine is built as a purpose-driven execution environment where confidentiality is part of the language rather than an afterthought. Developers explicitly label variables as private or public during development. Public data behaves like traditional blockchain storage and updates the visible ledger directly. Private data stays encrypted and is processed through zero-knowledge circuits that prove correctness without revealing the underlying values.
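
To make the public/private split concrete, here is a minimal Python sketch of what an outside observer of the ledger would see. The `Public` and `Private` labels and the `ledger_view` helper are hypothetical. Representing a private value by a salted SHA-256 hash is also an assumption made for illustration. This is not Dusk's contract API, and real private state is handled inside zero-knowledge circuits rather than by a plain hash.

```python
import hashlib
import secrets
from dataclasses import dataclass


@dataclass
class Public:
    """Hypothetical label: the value is written to readable ledger state."""
    value: int


@dataclass
class Private:
    """Hypothetical label: only a salted hash of the value is published."""
    value: int
    salt: bytes

    def commitment(self) -> str:
        # The random salt stops observers from guessing small values by hashing candidates.
        return hashlib.sha256(self.value.to_bytes(32, "big") + self.salt).hexdigest()


def ledger_view(state: dict) -> dict:
    """Return what any network participant could read from the ledger."""
    return {
        name: field.value if isinstance(field, Public) else field.commitment()
        for name, field in state.items()
    }


state = {
    "total_supply": Public(1_000_000),
    "alice_balance": Private(250, secrets.token_bytes(32)),
}
view = ledger_view(state)
print(view["total_supply"])        # 1000000
print(len(view["alice_balance"]))  # 64 hex characters; the balance itself never appears
```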

What stands out to me is how natural this feels for developers. The environment is Rust-based and familiar, so porting logic from transparent chains does not require learning an entirely new mental model. The compiler handles the heavy lifting by generating the required proof circuits whenever private data is involved. Validators confirm execution by verifying proofs rather than inspecting data, which means the network reaches consensus without ever seeing sensitive information.
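
The idea that validators check a proof instead of the data can be illustrated with a protocol far simpler than the circuits Dusk actually uses. The sketch below is a non-interactive Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir hash trick. The prover shows that it knows a secret exponent without revealing it, and the verifier only runs `verify`.

The group parameters are toy assumptions chosen for readability, not production-grade: the Mersenne prime 2^127 - 1 with base 3. This proves knowledge of a secret, not correct execution of a contract, so it conveys only the shape of "verify, don't inspect".

```python
import hashlib
import secrets

# Toy group (assumption): integers modulo the Mersenne prime 2**127 - 1, base 3.
# Production systems use elliptic curves and SNARKs; this only shows the pattern.
P = 2**127 - 1
G = 3
ORDER = P - 1  # by Fermat's little theorem, exponents can be reduced mod P - 1


def challenge(*values: int) -> int:
    """Fiat-Shamir: hash the transcript to get the challenge a verifier would pick."""
    data = b"".join(v.to_bytes(16, "big") for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER


def prove(secret: int) -> tuple[int, int, int]:
    """Prover: publish y = G^secret plus a proof (t, s) that it knows `secret`."""
    y = pow(G, secret, P)
    r = secrets.randbelow(ORDER)  # fresh randomness masks the secret inside s
    t = pow(G, r, P)              # commitment to r
    c = challenge(y, t)
    s = (r + c * secret) % ORDER  # response
    return y, t, s


def verify(y: int, t: int, s: int) -> bool:
    """Validator: checks G^s == t * y^c without ever learning `secret`."""
    c = challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, t, s = prove(secret=123456789)
print(verify(y, t, s))                # True
print(verify(y, t, (s + 1) % ORDER))  # False: a tampered response fails
```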

How State Is Split and Stored

Public and private data follow entirely different storage paths. Public state, such as total supply or configuration values, is stored in readable structures that anyone can query. Private state is stored as commitments: cryptographic hashes that hide the underlying values and bind them so they cannot be manipulated or reused.
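
A hash commitment is the simplest way to see why stored commitments resist manipulation: once published, a commitment can only be opened to the value it was made from. The sketch below assumes a salted SHA-256 commitment for illustration. Production privacy systems typically use circuit-friendly hashes or Pedersen-style commitments instead. The helper names are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(value: int) -> tuple[str, bytes]:
    """Return (commitment, salt). Only the commitment goes into public storage."""
    salt = secrets.token_bytes(32)
    digest = hashlib.sha256(value.to_bytes(32, "big") + salt).hexdigest()
    return digest, salt


def open_commitment(commitment: str, value: int, salt: bytes) -> bool:
    """Check that (value, salt) is the opening that produced `commitment`."""
    candidate = hashlib.sha256(value.to_bytes(32, "big") + salt).hexdigest()
    return hmac.compare_digest(candidate, commitment)


public_state = {"total_supply": 1_000_000}  # readable by anyone
c, salt = commit(250)                        # a private balance of 250
private_state = {"alice_note": c}            # only the hash is stored

print(open_commitment(c, 250, salt))  # True
print(open_commitment(c, 900, salt))  # False: the value cannot be swapped later
```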

When private values change, the system proves that the transition follows the contract rules without exposing balances or amounts. I find this especially powerful because it allows contracts to enforce rules like minimum balances or eligibility thresholds without revealing actual figures. Storage uses separate commitment trees for private data, while authorized parties can access specific details using controlled viewing permissions when disclosure is required.
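
Keeping private state in a commitment tree lets a contract show that a note exists without listing every other note. Below is a minimal sketch, assuming a plain SHA-256 binary Merkle tree over hypothetical commitment bytes. Dusk's real tree arity and hash function may differ. In a private transaction, the membership path would be checked inside the zero-knowledge proof, so validators would not learn which leaf was used.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Hash leaves, then pair nodes upward; an odd last node is paired with itself."""
    levels = [[h(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        level = levels[-1]
        if len(level) % 2:
            level = level + [level[-1]]  # padded copy; the stored level is unchanged
        levels.append([h(level[i] + level[i + 1]) for i in range(0, len(level), 2)])
    return levels


def prove_membership(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect (sibling_hash, sibling_is_on_right) pairs from the leaf up to the root."""
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sibling_hash = level[sibling] if sibling < len(level) else level[index]
        path.append((sibling_hash, sibling > index))
        index //= 2
    return path


def verify_membership(root: bytes, leaf: bytes, path: list[tuple[bytes, bool]]) -> bool:
    """Recompute the root from one leaf and its path."""
    node = h(leaf)
    for sibling_hash, sibling_is_right in path:
        node = h(node + sibling_hash) if sibling_is_right else h(sibling_hash + node)
    return node == root


# Hypothetical commitment bytes standing in for private notes.
leaves = [b"note-a", b"note-b", b"note-c"]
levels = build_levels(leaves)
root = levels[-1][0]  # the root is the public summary of all private notes
path = prove_membership(levels, 2)
print(verify_membership(root, b"note-c", path))  # True
print(verify_membership(root, b"note-x", path))  # False
```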

What Happens During Contract Execution

Every contract call follows one of two paths depending on what data it touches. Public functions behave like traditional smart contract calls with transparent updates and readable events. Private functions enter a confidential execution flow where encrypted inputs are processed inside proof circuits.
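
The routing rule above fits in a few lines. The sketch assumes that each contract declares a visibility for every storage field. The `SCHEMA` dictionary, its field names, and `execution_path` are all hypothetical. A call that reads or writes any private field is sent down the confidential path.

```python
# Hypothetical visibility schema for a token contract (names are illustrative).
SCHEMA = {
    "total_supply": "public",
    "regulatory_flag": "public",
    "balance": "private",
    "owner": "private",
}


def execution_path(touched_fields: set[str]) -> str:
    """Route a call: touching any private field forces the confidential, proof-based path."""
    unknown = touched_fields - set(SCHEMA)
    if unknown:
        raise KeyError(f"undeclared fields: {sorted(unknown)}")
    if any(SCHEMA[name] == "private" for name in touched_fields):
        return "confidential"
    return "public"


print(execution_path({"total_supply"}))             # public
print(execution_path({"total_supply", "balance"}))  # confidential
```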

The output of this process is a compact proof that the rules were followed. Validators only check that the proof is valid and that no private input has been used twice; they never see the underlying data. Fees still apply and finality remains fast, but private execution costs more because proof generation is computationally heavier. From what I see, batching multiple operations into one proof helps keep this efficient at scale.
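
Note-based privacy systems commonly implement the "no double usage" check with nullifiers. Each private note has a tag that only its owner can derive, and spending the note publishes that tag. This sketch assumes that design, derives the nullifier with SHA-256 for illustration, and reduces proof verification to a boolean placeholder. The `Validator` class is hypothetical. Because the nullifier is a hash of a secret, a validator can reject a repeated tag without learning the note's contents.

```python
import hashlib


def nullifier(note_secret: bytes) -> str:
    """Deterministic tag derived from a note's secret; it reveals nothing about the note's value."""
    return hashlib.sha256(b"nullifier:" + note_secret).hexdigest()


class Validator:
    """Hypothetical validator state: the set of nullifiers already spent."""

    def __init__(self) -> None:
        self.spent: set[str] = set()

    def accept(self, proof_is_valid: bool, tx_nullifier: str) -> bool:
        # proof_is_valid stands in for real zero-knowledge proof verification.
        if not proof_is_valid or tx_nullifier in self.spent:
            return False
        self.spent.add(tx_nullifier)
        return True


v = Validator()
n = nullifier(b"alice-note-secret")
print(v.accept(True, n))  # True: first spend
print(v.accept(True, n))  # False: the same note cannot be spent twice
```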

How Developers Combine Public and Private Logic

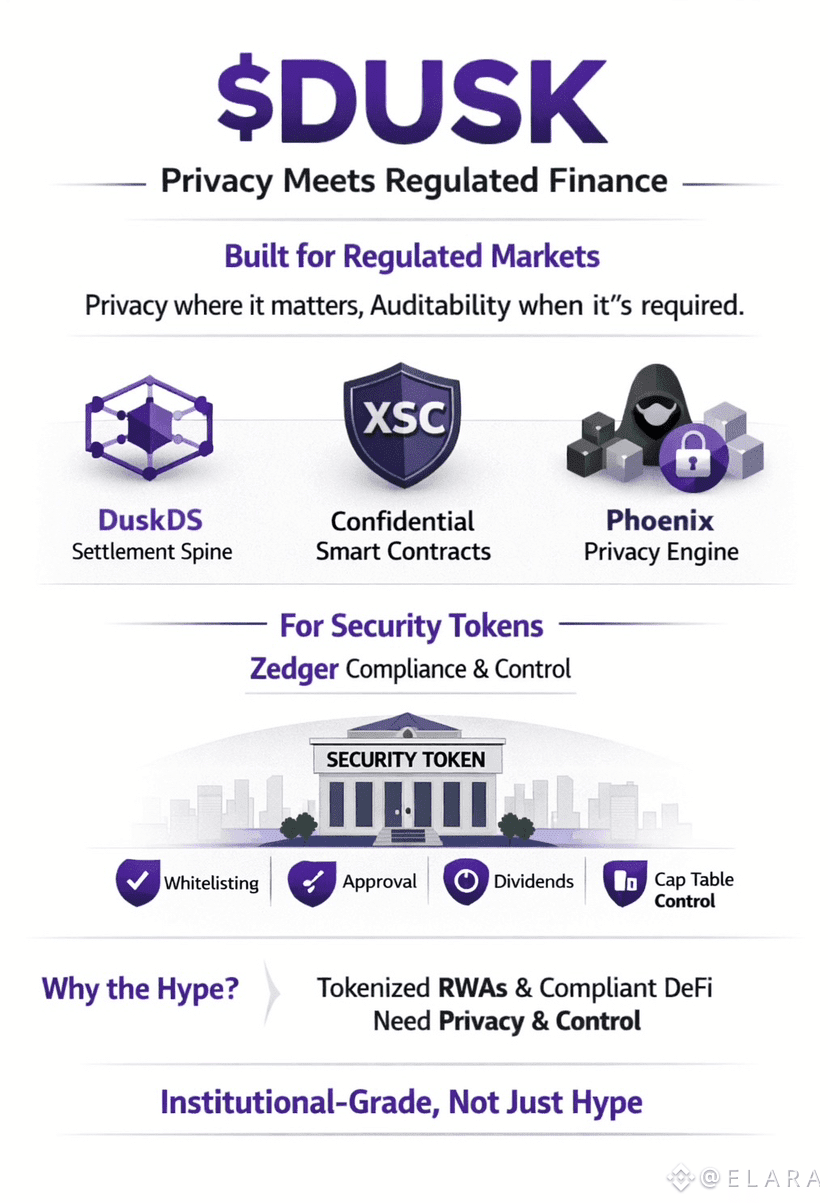

Most real applications mix both forms of visibility. A tokenized asset contract might show total supply and regulatory flags publicly while keeping ownership and transaction history private. Transfers can require proof that both parties meet compliance conditions without exposing identities or balances.
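
The tokenized-asset pattern can be outlined in plain Python to show which data is exposed and which is not. `SecurityToken`, its fields, and the eligibility set are hypothetical. The "private" attributes here are ordinary Python attributes. In a confidential contract they would be commitments checked inside proofs. `transfer` deliberately returns only success or failure, so a caller cannot tell which rule failed.

```python
from dataclasses import dataclass, field


@dataclass
class SecurityToken:
    """Hypothetical tokenized asset: supply and flags are public, holdings are private."""
    total_supply: int
    trading_halted: bool = False                   # public regulatory flag
    _balances: dict = field(default_factory=dict)  # would be private commitments
    _eligible: set = field(default_factory=set)    # would be private compliance attestations

    def public_view(self) -> dict:
        return {"total_supply": self.total_supply, "trading_halted": self.trading_halted}

    def transfer(self, sender: str, receiver: str, amount: int) -> bool:
        """Reveal only the outcome, never balances or which check failed."""
        ok = (
            not self.trading_halted
            and sender in self._eligible
            and receiver in self._eligible
            and 0 < amount <= self._balances.get(sender, 0)
        )
        if ok:
            self._balances[sender] -= amount
            self._balances[receiver] = self._balances.get(receiver, 0) + amount
        return ok


token = SecurityToken(total_supply=1_000, _balances={"alice": 1_000}, _eligible={"alice", "bob"})
print(token.transfer("alice", "bob", 300))   # True
print(token.transfer("alice", "carol", 10))  # False: carol has no attestation
print(token.public_view())                   # {'total_supply': 1000, 'trading_halted': False}
```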

Developers rely on built-in libraries that simplify these patterns. Functions can enforce rules privately and reveal outcomes only when necessary. Local simulation tools let builders test how proofs behave before deployment, which reduces errors and accidental data exposure. I like how the type system itself helps prevent mistakes by enforcing visibility rules during development.
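
Dusk's visibility rules are described as enforced at development time. Python cannot check this statically, so the sketch below is a runtime stand-in built around a hypothetical `Secret` wrapper. A wrapped value prints as redacted and can disclose only a yes/no outcome. It is rejected outright if passed into a public event.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Secret:
    """Hypothetical wrapper marking a value that must never reach public output."""
    _value: int

    def __repr__(self) -> str:
        return "Secret(<redacted>)"

    def reveal_meets(self, threshold: int) -> bool:
        """Disclose only whether a rule holds, not the value itself."""
        return self._value >= threshold


def emit_event(name: str, **payload: object) -> dict:
    """Public events reject wrapped secrets instead of silently leaking them."""
    for key, value in payload.items():
        if isinstance(value, Secret):
            raise TypeError(f"field {key!r} is private and cannot be emitted")
    return {"event": name, **payload}


balance = Secret(5_000)
print(emit_event("MinimumBalanceCheck", passed=balance.reveal_meets(1_000)))
# {'event': 'MinimumBalanceCheck', 'passed': True}
```

Calling `emit_event("Transfer", amount=balance)` raises `TypeError`, which is the runtime analogue of a compile-time visibility error.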

Costs and Performance in Practice

Public operations are inexpensive and fast, similar to what developers expect on other chains. Private operations cost more because they involve proof generation, but the tradeoff is strong confidentiality. Typical private transfers complete within seconds and larger batches are optimized to handle complex operations like decentralized exchange trades.
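
The gain from batching is plain amortization. If part of the cost of proving and verifying is fixed per proof, spreading that cost over more operations lowers the cost of each one. The numbers below are hypothetical units chosen only to show the shape of the curve, not measured Dusk fees. The linear cost model is also an assumption, since real proving cost grows with circuit size in less simple ways.

```python
def cost_per_operation(batch_size: int, fixed_cost: float, per_op_cost: float) -> float:
    """Amortized cost when one proof with a fixed overhead covers a whole batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return (fixed_cost + per_op_cost * batch_size) / batch_size


# Hypothetical cost units, not real fees.
FIXED, PER_OP = 100.0, 5.0
for n in (1, 10, 100):
    print(n, cost_per_operation(n, FIXED, PER_OP))
# 1 105.0
# 10 15.0
# 100 6.0
```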

Fees can be paid in shielded form, so even transaction costs do not expose user behavior. Some of these fees are burned, tying long-term value to network usage. Performance remains strong even under compliance-heavy workloads, which suggests the system was designed for institutional scale rather than experiments.

Built-In Paths for Compliance

One of the most compelling aspects is how compliance fits naturally into this model. Identity attestations can be passed into private contract calls as encrypted inputs. Contracts verify required attributes without revealing personal data. Auditors or regulators can later receive temporary access keys that allow them to review activity within defined limits.
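
Temporary, bounded auditor access can be sketched as a signed grant that names the auditor, a scope, and an expiry. The HMAC tag used here is an assumption. It only authorizes access and decrypts nothing, whereas a real viewing key would itself let its holder read the relevant encrypted records. `OWNER_KEY`, the scope strings, and both function names are hypothetical.

```python
import hashlib
import hmac
import time

# Hypothetical key held by the data owner; a real viewing key would come from the wallet.
OWNER_KEY = b"owner-master-key-for-illustration"


def issue_grant(auditor: str, scope: str, expires_at: int) -> str:
    """Issue a tag binding one auditor to one scope until a Unix timestamp."""
    msg = f"{auditor}|{scope}|{expires_at}".encode()
    return hmac.new(OWNER_KEY, msg, hashlib.sha256).hexdigest()


def check_grant(auditor: str, scope: str, expires_at: int, tag: str, now: int) -> bool:
    """Accept only an untampered grant, for the stated scope, before it expires."""
    expected = issue_grant(auditor, scope, expires_at)
    return hmac.compare_digest(expected, tag) and now < expires_at


now = int(time.time())
expiry = now + 3600
tag = issue_grant("auditor-1", "transfers:q1", expiry)
print(check_grant("auditor-1", "transfers:q1", expiry, tag, now))         # True
print(check_grant("auditor-1", "balances", expiry, tag, now))             # False: wrong scope
print(check_grant("auditor-1", "transfers:q1", expiry, tag, now + 7200))  # False: expired
```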

From my perspective this feels like a realistic bridge between decentralized systems and regulated finance. Privacy is preserved by default, but accountability is always possible when required.

Dusk Network does not treat privacy as an all-or-nothing choice. Instead, it gives developers fine-grained tools to model how information flows in real economies. By separating private and public state at the virtual machine level, it allows smart contracts to behave more like real financial agreements. As more applications adopt this approach, it raises an interesting question: how many entirely new financial structures become possible when visibility itself becomes programmable rather than binary?