A few months ago, I was wiring up a basic automated payment flow for a small DeFi position. Nothing fancy. Just routing yields through a bridge so I could cover gas on another chain without babysitting it. On paper, it should have been smooth. In reality, things dragged. Transactions that usually cleared fast sat pending longer than expected, and once I added a couple of data-heavy calls, the fees started stacking up in ways I didn’t anticipate. They weren’t outrageous, but they weren’t predictable either. I’ve been around L1s long enough that this kind of inconsistency always sets off alarms. Not because it breaks things outright, but because it reminds you how fragile “everyday use” still is once real workloads hit the chain.

That kind of friction usually traces back to the same root cause. Most blockchains still try to do everything at once. Simple transfers, AI workloads, DeFi, games, storage, all sharing the same rails. When traffic is light, it feels fine. When activity picks up, costs shift, confirmation times wobble, and suddenly apps behave differently than they did yesterday. For users, that means waiting without clear reasons. For developers, it means designing around edge cases instead of building features. And for anything that needs reliability, like payments or automated decision-making, that uncertainty becomes a dealbreaker.

I think of it like a highway where delivery trucks, daily commuters, and emergency vehicles all use the same lanes with no priority system. It works until it doesn’t. Once congestion hits, everyone slows down, even the traffic that actually matters. The system isn’t broken, but it’s not optimized for purpose.

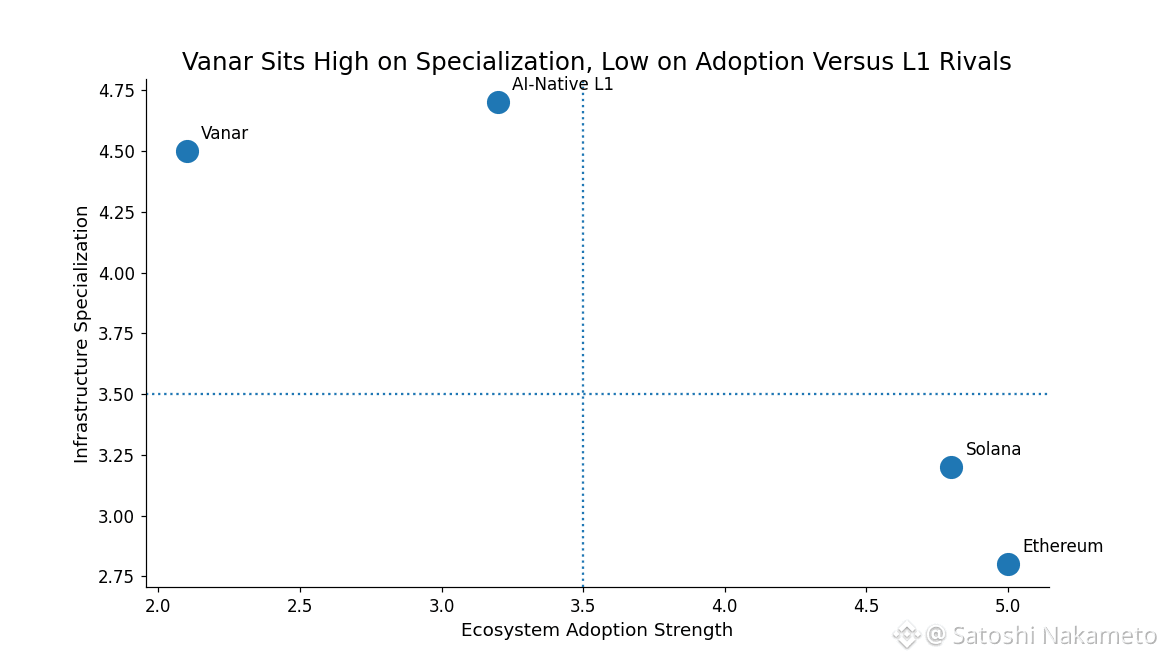

That’s the context in which @Vanar positions itself. The idea isn’t to outpace every other L1 on raw throughput, but to narrow the scope and tune the chain for AI-driven applications and real-world assets. Instead of bolting data handling and reasoning on top, Vanar pushes those capabilities into the protocol itself. Developers can stick with familiar EVM tooling, but get native support for things like data compression and semantic queries, without constantly leaning on oracles or off-chain services. The trade-off is intentional. It doesn’t chase extreme TPS numbers or experimental execution models. Block times hold at around a few seconds; the aim is consistency, not spectacle.

Under the hood, the architecture reflects that controlled approach. Early on, the chain relies on a foundation-led Proof of Authority setup, gradually opening validator access through a Proof of Reputation system. External validators aren’t just spinning up nodes; they earn their way in through activity and community signaling. It’s not fully decentralized on day one, and that’s a valid criticism, but the idea is to avoid chaos early while the stack matures. With the V23 upgrade, coordination between nodes improved, keeping transaction success rates high even as node counts climbed. At the same time, execution limits were put in place to stop heavy AI workloads from overwhelming block production, which helps explain why things feel stable but not blazing fast.
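Execution limits like these are easiest to picture as a fixed per-block budget. Here is a minimal Python sketch, assuming a generic EVM-style block gas limit; the `BLOCK_GAS_LIMIT` value, the `Tx` type, and the `pack_block` helper are hypothetical illustrations, not taken from Vanar’s code or documentation. It fills a block in arrival order and defers anything that would push execution past the cap, which is the basic reason a capped chain feels steady rather than fast.

```python
from dataclasses import dataclass

# Hypothetical per-block execution budget; NOT Vanar's actual parameter.
BLOCK_GAS_LIMIT = 30_000_000


@dataclass
class Tx:
    tx_id: str
    gas: int  # gas the transaction is expected to consume


def pack_block(mempool: list[Tx], gas_limit: int = BLOCK_GAS_LIMIT) -> tuple[list[Tx], list[Tx]]:
    """Fill one block in arrival order without exceeding gas_limit.

    Transactions that don't fit in the remaining budget are deferred to a
    later block instead of stretching block production.
    """
    included: list[Tx] = []
    deferred: list[Tx] = []
    used = 0
    for tx in mempool:
        if used + tx.gas <= gas_limit:
            included.append(tx)
            used += tx.gas
        else:
            # Over budget: push to the next block rather than slow this one.
            deferred.append(tx)
    return included, deferred


# Usage: one heavy AI job fits, a second one has to wait for the next block.
mempool = [
    Tx("transfer", 21_000),
    Tx("ai-inference", 29_000_000),
    Tx("swap", 150_000),
    Tx("ai-batch", 5_000_000),
]
included, deferred = pack_block(mempool)
```

One consequence of this rule: a transaction whose gas exceeds the entire budget would be deferred forever, so a real chain has to reject it at submission. This toy version doesn’t handle that case.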

The $VANRY token sits quietly in the middle of all this. It pays for gas, settles transactions, and anchors governance. Part of each fee gets burned, helping offset inflation as activity grows. Staking ties into validator participation and reputation voting, rather than just passive yield farming. Inflation exists, especially in the early years, but it’s designed to taper as the network matures. There’s no attempt to make VANRY everything at once. Its role is operational, not promotional.
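To see how a partial fee burn can offset tapering inflation, here is a back-of-the-envelope sketch in Python. Every parameter is an assumption for illustration only: the 5% starting issuance, the linear 0.5-point yearly taper down to a 1% floor, the 50% burn share, and the fee totals are invented, and the 2 billion supply is just a round figure close to the circulating supply, not VANRY’s actual emission schedule. The helper names are hypothetical. Integer basis points keep the arithmetic exact.

```python
BPS = 10_000  # basis-point denominator: 500 bps == 5%


def inflation_bps_for_year(year: int, start_bps: int = 500,
                           step_bps: int = 50, floor_bps: int = 100) -> int:
    """Hypothetical linear taper: issuance drops by step_bps per year, never below floor_bps."""
    return max(floor_bps, start_bps - step_bps * year)


def net_supply_change(supply: int, inflation_bps: int,
                      fees_paid: int, burn_bps: int) -> int:
    """Tokens issued minus fee tokens burned over one year.

    A negative result means burns outpaced issuance (net deflation).
    """
    issued = supply * inflation_bps // BPS
    burned = fees_paid * burn_bps // BPS
    return issued - burned


supply = 2_000_000_000  # round illustrative figure

# Quiet early year: full issuance, little fee activity.
quiet = net_supply_change(supply, inflation_bps_for_year(0),
                          fees_paid=10_000_000, burn_bps=5_000)

# Busy later year: issuance has tapered to the floor, heavy fee activity.
busy = net_supply_change(supply, inflation_bps_for_year(8),
                         fees_paid=300_000_000, burn_bps=5_000)
```

With these made-up numbers, a quiet first year still adds 95 million tokens, while a busy year after the taper burns more than it issues and shrinks supply by 130 million. The point is the shape of the trade-off, not the figures.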

From a market perspective, that restraint cuts both ways. With a circulating supply of a little over two billion tokens and a market cap still sitting in the teens of millions, VANRY doesn’t command attention. Daily volume is enough for trading, but not enough to absorb large sentiment swings without volatility. Price action tends to follow narratives: AI announcements, partnerships, node growth numbers. When momentum fades, so does interest. That makes it an uncomfortable hold for anyone expecting quick validation from the market.

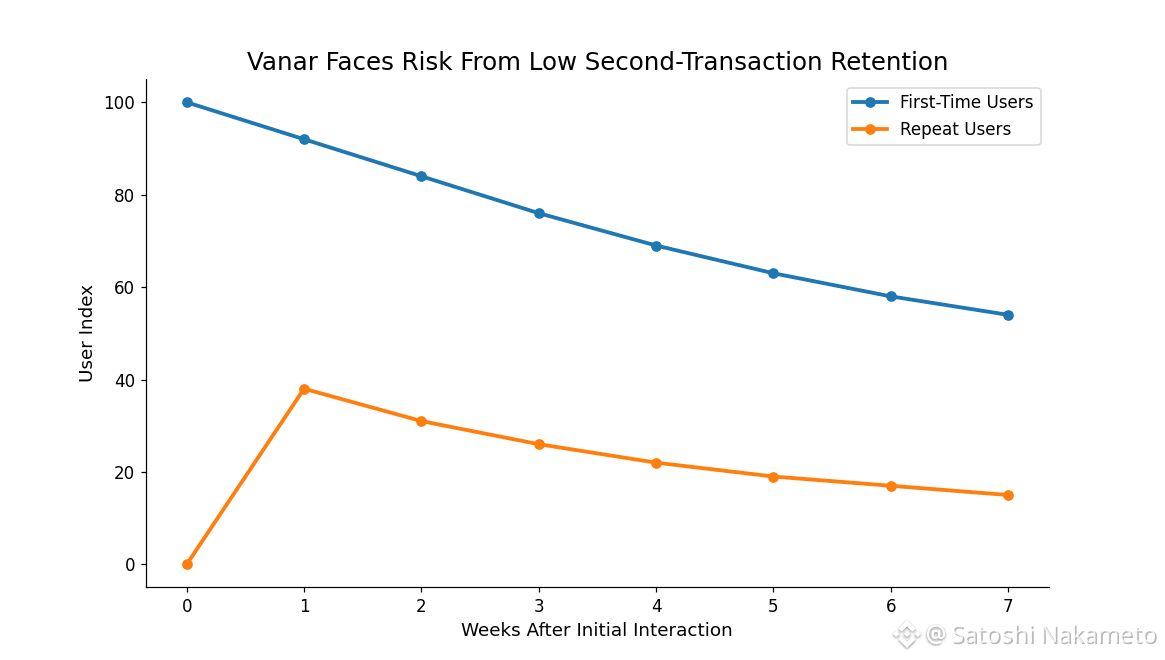

Longer term, though, the question isn’t price spikes. It’s whether habits form. Do developers come back to build a second app? Do users trust the system enough to run agents without babysitting them? The network has seen steady growth in nodes and transactions post-upgrade, but usage is still modest compared to dominant L1s. That leaves #Vanar in a difficult position. It needs adoption to justify its architecture, but adoption won’t come unless the reliability advantage becomes obvious in real use.

The competitive landscape doesn’t make that easier. Chains like Solana offer speed and massive communities. Ethereum offers gravity and liquidity. AI-focused platforms are popping up everywhere. Vanar’s Proof of Reputation system is interesting, but unproven at scale. If reputation scoring can be gamed, or if validator onboarding becomes politicized, trust erodes fast. And there’s always the technical risk. A sudden spike in AI workloads, combined with aggressive data compression, could push block execution past its comfort zone, delaying settlements and breaking the very reliability the chain is built around.

That’s really the crux of the risk. Not whether the vision makes sense, but whether it survives real pressure. Infrastructure projects rarely fail loudly. They fade when users don’t come back for the second transaction. Whether Vanar Chain builds enough quiet, repeat usage to justify its approach, or gets drowned out by louder, faster competitors, won’t be decided by announcements. It’ll show up slowly, in whether people keep using it when nobody’s watching.

@Vanar #Vanar $VANRY