Artificial intelligence is only as powerful as the data it learns from. Every recommendation, prediction, and automated decision made by AI systems is shaped by the quality, integrity, and provenance of the data underneath. Yet today, most AI pipelines rely on opaque datasets stored in centralized silos, datasets that users cannot verify, audit, or control.

This is where Walrus changes the equation.

Walrus is not just a decentralized storage protocol. It is an infrastructure layer for verifiable, trustworthy data, designed for a future in which AI systems must be accountable, auditable, and censorship-resistant. As AI increasingly influences finance, governance, healthcare, and social systems, Walrus introduces something the industry has long lacked: cryptographic guarantees at the data layer.

The Hidden Crisis in AI: Unverifiable Data

Most AI models today operate inside black boxes. Data is collected behind closed doors, modified without transparency, and reused without clear consent or traceability. Even when outputs are impressive, there is no way to prove:

Where the data came from

Whether it was altered or filtered

Who controls access to it

Whether results can be independently verified

This creates systemic risks. Biased training data leads to biased models. Centralized data custody leads to censorship, manipulation, and extraction of value without user participation. As AI systems scale, these weaknesses don’t disappear—they compound.

Walrus addresses this problem at its root.

Walrus: A Decentralized Data Backbone for AI

At its core, Walrus is a decentralized data storage and availability network built for performance, security, and cryptographic verifiability. Rather than relying on a single provider or cloud operator, Walrus splits each blob into encoded fragments and distributes them across independent storage nodes, eliminating single points of failure and control.
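To make the split-and-distribute idea concrete, here is a minimal Python sketch of erasure coding. It is a deliberate simplification: it adds a single XOR parity shard, so it survives the loss of only one shard, whereas Walrus's production encoding is a more robust erasure code designed to tolerate many unavailable nodes. The names `encode`, `recover`, and `decode` are illustrative and are not part of any Walrus SDK.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(blob: bytes, k: int) -> list[bytes]:
    """Split a blob into k equal data shards (zero-padded) plus one XOR parity shard."""
    shard_len = -(-len(blob) // k)  # ceiling division
    padded = blob.ljust(shard_len * k, b"\x00")
    data_shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, data_shards)
    return data_shards + [parity]


def recover(shards: list[bytes | None]) -> list[bytes]:
    """Rebuild at most one missing shard: it equals the XOR of all the others."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("a single parity shard can only recover one lost shard")
    restored = list(shards)
    if missing:
        restored[missing[0]] = reduce(xor_bytes, [s for s in shards if s is not None])
    return restored


def decode(shards: list[bytes], original_len: int) -> bytes:
    """Concatenate the data shards (dropping parity) and strip the padding."""
    return b"".join(shards[:-1])[:original_len]


if __name__ == "__main__":
    blob = b"training-set-v1: 10,000 labeled images"
    shards = encode(blob, k=4)  # 4 data shards + 1 parity shard, one per storage node
    shards[2] = None            # simulate one storage node going offline
    assert decode(recover(shards), len(blob)) == blob
    print("blob reconstructed despite a missing shard")
```

The design point carries over to real systems: because any lost fragment can be rebuilt from the survivors, no single node has to stay online, and no single operator holds the whole dataset.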

But decentralization alone is not enough.

What makes Walrus unique is how it integrates verifiability and access control directly into the data lifecycle. Every dataset stored on Walrus can be proven to exist, proven to be unaltered, and proven to have been accessed or processed according to predefined rules.

For AI, this means training data, inference inputs, and outputs can all be audited without exposing sensitive raw information.
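One standard way to let anyone check that stored data is unaltered is to commit to it with a Merkle root and hand out short inclusion proofs. The sketch below is a generic SHA-256 Merkle tree in Python, assuming the data has already been split into fragments; it is not Walrus's exact commitment scheme, and the function names (`build_levels`, `prove`, `verify`) are hypothetical.

```python
from __future__ import annotations

import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(leaf: bytes) -> bytes:
    return _h(b"\x00" + leaf)  # 0x00 prefix domain-separates leaves from inner nodes


def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Return every tree level, from the leaf hashes up to the single root."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [leaf_hash(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        # On odd-sized levels the last node is paired with itself.
        level = [
            node_hash(level[i], level[i + 1] if i + 1 < len(level) else level[i])
            for i in range(0, len(level), 2)
        ]
        levels.append(level)
    return levels


def prove(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect sibling hashes from leaf to root; the flag is True when our node is the left child."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sibling_hash = level[sibling] if sibling < len(level) else level[index]
        proof.append((sibling_hash, index % 2 == 0))
        index //= 2
    return proof


def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path to the root and compare against the published commitment."""
    node = leaf_hash(leaf)
    for sibling, is_left in proof:
        node = node_hash(node, sibling) if is_left else node_hash(sibling, node)
    return node == root


if __name__ == "__main__":
    fragments = [f"fragment-{i}".encode() for i in range(5)]
    levels = build_levels(fragments)
    root = levels[-1][0]
    proof = prove(levels, 3)
    print(verify(fragments[3], proof, root))  # True
    print(verify(b"tampered", proof, root))   # False
```

A verifier holding only the 32-byte root can check any single fragment with a proof of about log2(n) hashes, without downloading or seeing the rest of the dataset.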

Verifiable AI Pipelines: From Upload to Inference

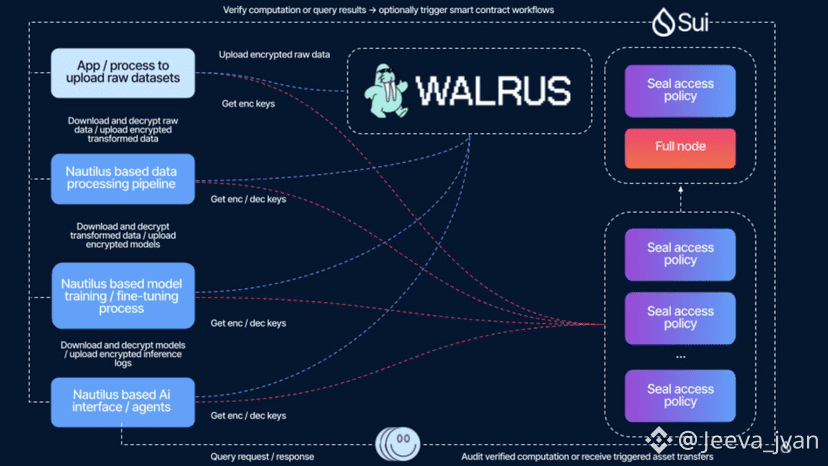

The architecture above illustrates a powerful idea: AI pipelines that are both private and provable.

Using Walrus alongside tools like Seal and Sui, developers can build end-to-end AI workflows in which:

Raw datasets are uploaded in encrypted form

Data processing pipelines (such as Nautilus-based transformations) operate on verifiable inputs

Model training and fine-tuning use provably authentic data

Inference logs and results can be audited without leaking proprietary logic

Each step can request encryption or decryption keys only when access policies are satisfied. These policies are enforced on-chain, meaning no centralized authority can silently bypass them.

This turns AI computation into something radically new: a verifiable process, not just a trusted claim.
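The policy-gated key flow can be modeled in a few lines. This is a hypothetical simulation, not Seal's API: `AccessPolicy`, `KeyServer`, and `request_key` are invented names, and a plain Python predicate stands in for the on-chain policy in the architecture above. It shows the property described here: a pipeline stage receives a decryption key only if the policy permits it, and every request, granted or denied, is recorded.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical policy: which pipeline stages may decrypt, and until which epoch."""
    allowed_stages: frozenset[str]
    expires_at_epoch: int

    def permits(self, stage: str, current_epoch: int) -> bool:
        return stage in self.allowed_stages and current_epoch < self.expires_at_epoch


@dataclass
class KeyServer:
    """Hypothetical key service standing in for on-chain policy enforcement (not Seal's API)."""
    policies: dict[str, AccessPolicy] = field(default_factory=dict)
    keys: dict[str, bytes] = field(default_factory=dict, repr=False)
    audit_log: list[tuple[str, str, int, bool]] = field(default_factory=list)

    def register(self, dataset_id: str, policy: AccessPolicy) -> None:
        self.policies[dataset_id] = policy
        self.keys[dataset_id] = secrets.token_bytes(32)  # random 256-bit data key

    def request_key(self, dataset_id: str, stage: str, current_epoch: int) -> bytes:
        policy = self.policies.get(dataset_id)
        granted = policy is not None and policy.permits(stage, current_epoch)
        self.audit_log.append((dataset_id, stage, current_epoch, granted))  # log every attempt
        if not granted:
            raise PermissionError(
                f"{stage!r} may not decrypt {dataset_id!r} at epoch {current_epoch}"
            )
        return self.keys[dataset_id]


if __name__ == "__main__":
    server = KeyServer()
    server.register(
        "dataset-42",
        AccessPolicy(frozenset({"training", "fine-tuning"}), expires_at_epoch=100),
    )
    key = server.request_key("dataset-42", "training", current_epoch=10)  # granted
    try:
        server.request_key("dataset-42", "export", current_epoch=10)  # stage not allowed
    except PermissionError as err:
        print("denied:", err)
    print(server.audit_log)
```

The sketch models only the decision logic. In the architecture described here, that check does not run on a single trusted server; putting it on-chain is what prevents any one party from quietly skipping it.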

Why Verifiable AI Matters More Than Ever

As AI systems move closer to real-world decision-making, trust becomes non-negotiable.

Financial AI models must prove they are not manipulating inputs.

Healthcare AI must prove data integrity without violating privacy.

Autonomous agents must prove their actions followed predefined rules.

Walrus enables this by making data provable at rest, provable in motion, and provable in use. AI models can reference datasets whose integrity is guaranteed cryptographically, while users and regulators can verify outcomes without needing full data exposure.

This is a critical shift—from “trust us” systems to “verify everything” infrastructure.

Performance Without Compromise

A common myth is that decentralization slows everything down. Walrus is built to challenge that assumption.

By optimizing for blob storage, parallel data access, and high-throughput reads and writes, Walrus delivers performance suitable for real-time AI workloads. This makes it viable not only for experimentation, but for production-grade AI systems that require speed, scale, and reliability.

Decentralized does not mean slow. With Walrus, it means resilient and scalable.
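Parallel access pays off especially when data is erasure-coded: a reader can query many storage nodes at once and stop as soon as it holds enough fragments, so read latency is bounded by the fastest responders rather than the slowest. The sketch below simulates this in Python with a thread pool. `fetch_shard` is a hypothetical stand-in for a network call, and the threshold `k` is an assumed parameter, not Walrus's actual reconstruction threshold.

```python
from __future__ import annotations

import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_shard(node_id: int, delay_s: float, fail: bool) -> tuple[int, bytes]:
    """Hypothetical network call: simulate one storage node's latency and availability."""
    time.sleep(delay_s)
    if fail:
        raise ConnectionError(f"node {node_id} unreachable")
    return node_id, f"shard-{node_id}".encode()


def read_any_k(nodes: list[tuple[int, float, bool]], k: int) -> dict[int, bytes]:
    """Query every node concurrently and return as soon as k shards have arrived."""
    pool = ThreadPoolExecutor(max_workers=len(nodes))
    collected: dict[int, bytes] = {}
    try:
        futures = [pool.submit(fetch_shard, nid, delay, fail) for nid, delay, fail in nodes]
        for fut in as_completed(futures):
            try:
                node_id, shard = fut.result()
            except ConnectionError:
                continue  # an unreachable node is tolerated; keep waiting on the others
            collected[node_id] = shard
            if len(collected) == k:
                break
    finally:
        # Don't block on slow stragglers: drop queued requests and return immediately.
        pool.shutdown(wait=False, cancel_futures=True)
    if len(collected) < k:
        raise RuntimeError(f"only {len(collected)} of the {k} required shards were available")
    return collected


if __name__ == "__main__":
    nodes = [
        (0, 0.01, False),
        (1, 1.00, False),  # slow node
        (2, 0.01, True),   # offline node
        (3, 0.02, False),
        (4, 0.03, False),
    ]
    start = time.perf_counter()
    shards = read_any_k(nodes, k=3)
    print(sorted(shards), f"{time.perf_counter() - start:.2f}s")
```

Here the read finishes in roughly the time of the third-fastest healthy node, even though one node is offline and another is slow.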

Who Benefits From Walrus-Based AI?

The implications extend far beyond crypto-native projects:

AI developers gain trusted datasets and provable pipelines

Enterprises gain auditability without data leakage

Users gain control over how their data is accessed and monetized

Regulators gain verifiable compliance instead of blind trust

Walrus creates a foundation where data contributors, model builders, and application users can finally align incentives—without sacrificing privacy or performance.

A New Standard for the AI Age

The future of AI will not be defined solely by larger models or faster chips. It will be defined by trust.

Walrus introduces a world where AI systems are grounded in verifiable data, where computation can be audited, and where users regain sovereignty over the information they generate. In this future, intelligence is not just powerful—it is accountable.

Better AI doesn’t start with bigger models.

Better AI starts with better data.

And that future starts with Walrus. @Walrus 🦭/acc #walrus $WAL