Most decentralized storage systems sell capacity. Walrus sells accountability. That distinction sounds subtle until you map it to the real reason enterprises and serious applications still default to centralized storage. The blocker is rarely ideology. It is liability: who can prove the data was stored, prove it stayed available, and prove what happens when it was not. Walrus is interesting because it treats storage as a verifiable contract with measurable performance, not a best-effort file drop.

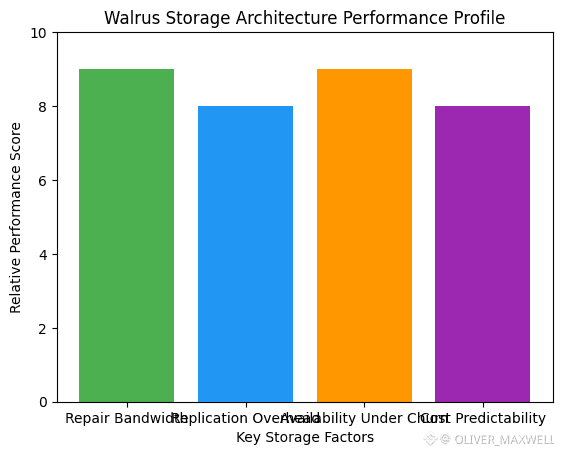

The core technical bet is that decentralized blob storage fails when it tries to look like a generic file system. Blobs are blunt objects: video chunks, model checkpoints, imaging archives, game assets, audit bundles. They are large and unstructured, and they change the operational math because repair bandwidth becomes a tax. Walrus attacks that tax directly with a two-dimensional erasure coding design called Red Stuff. The research framing is precise. The system targets the trade-off between replication overhead, recovery efficiency, and security guarantees, then claims it can keep high security at roughly a 4.5x replication factor while making self-healing bandwidth proportional to what was actually lost rather than to the whole file.

That self-healing detail is not academic polish. It is the practical point. In real decentralized networks, churn is normal. Nodes disappear. Latency spikes. Operators rebalance hardware. Traditional one-dimensional erasure coding can be space-efficient but brutal on repair, because you often pull data equivalent to the entire blob just to rebuild a single fragment. Walrus tries to make repair granular. Red Stuff encodes a blob into paired slivers and uses quorums that are intentionally asymmetric. Writing requires a stronger threshold, while reads can succeed with a much smaller quorum, which is a direct resilience choice for availability under partial failure. The public description also makes the recovery process explicit, including lightweight reconstruction paths that query only a fraction of peers for certain sliver types. This is the kind of design that matters when the workload is not a document but a dataset.
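To make the repair argument concrete, here is a rough back-of-the-envelope model in Python. It is a sketch under stated assumptions, not the protocol's actual accounting: it assumes a committee of n = 3f + 1 nodes, a write quorum of 2f + 1, a read quorum of f + 1, and a blob laid out as an (f + 1) by (2f + 1) grid of equally sized symbols. The names `Committee`, `repair_bytes_1d`, and `repair_bytes_2d` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Committee:
    """Assumed model: n = 3f + 1 storage nodes, up to f of them faulty."""
    f: int

    @property
    def n(self) -> int:
        return 3 * self.f + 1

    @property
    def write_quorum(self) -> int:
        # Assumption: a write needs acknowledgements from 2f + 1 nodes.
        return 2 * self.f + 1

    @property
    def read_quorum(self) -> int:
        # Assumption: a read can succeed with responses from f + 1 nodes.
        return self.f + 1


def symbol_bytes(blob_bytes: int, c: Committee) -> float:
    """Size of one grid symbol if the blob is split into (f+1) x (2f+1) cells."""
    return blob_bytes / ((c.f + 1) * (2 * c.f + 1))


def repair_bytes_1d(blob_bytes: int) -> float:
    """1D coding: rebuilding one fragment means fetching enough fragments
    to decode the whole blob, so the download is about the blob size."""
    return float(blob_bytes)


def repair_bytes_2d(blob_bytes: int, c: Committee, sliver: str = "secondary") -> float:
    """2D coding (simplified): a lost sliver is rebuilt from one symbol per
    responding peer, so the download is a small multiple of the symbol size."""
    if sliver not in ("primary", "secondary"):
        raise ValueError("sliver must be 'primary' or 'secondary'")
    peers = c.read_quorum if sliver == "secondary" else c.write_quorum
    return peers * symbol_bytes(blob_bytes, c)


if __name__ == "__main__":
    committee = Committee(f=33)  # 100 nodes
    blob = 1_000_000_000         # a 1 GB blob
    print("nodes:", committee.n)
    print("1D repair bytes:", repair_bytes_1d(blob))
    print("2D primary repair bytes:", repair_bytes_2d(blob, committee, "primary"))
    print("2D secondary repair bytes:", repair_bytes_2d(blob, committee, "secondary"))
```

Under these assumptions, repairing a single sliver costs on the order of the blob size divided by the committee size, which is the sense in which repair bandwidth tracks what was lost rather than the whole file.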

Now connect that to the claim Walrus makes about economics. The docs describe cost efficiency in plain numbers. Storage costs are maintained at approximately five times the size of stored blobs through erasure coding, positioned as materially more cost-effective than full replication approaches, and the system stores encoded parts across storage nodes rather than relying on a narrow subset. What matters is not the absolute multiplier. It is the shape of the curve. When the network scales to many nodes, the storage overhead of full replication grows linearly with node count. A roughly fixed overhead changes the feasibility of storing large blobs without turning decentralization into a luxury product.
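The shape of that curve is easy to see with a few lines of Python. This is an illustrative sketch, assuming "full replication" means every node holds a complete copy and taking the docs' figure of roughly 5x as a constant; both function names are hypothetical.

```python
def stored_bytes_full_replication(blob_bytes: int, num_nodes: int) -> int:
    """Total bytes stored network-wide if every node keeps a full copy."""
    return blob_bytes * num_nodes


def stored_bytes_erasure_coded(blob_bytes: int, overhead: float = 5.0) -> float:
    """Total bytes stored network-wide at a fixed encoding overhead
    (assumed ~5x, independent of how many nodes participate)."""
    return blob_bytes * overhead


if __name__ == "__main__":
    blob = 1_000_000_000  # 1 GB
    for nodes in (10, 100, 1000):
        full = stored_bytes_full_replication(blob, nodes)
        coded = stored_bytes_erasure_coded(blob)
        print(f"{nodes:>5} nodes: full replication {full / blob:.0f}x, "
              f"erasure coded {coded / blob:.0f}x")
```

The first column grows with the committee while the second stays flat, which is the whole argument in miniature.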

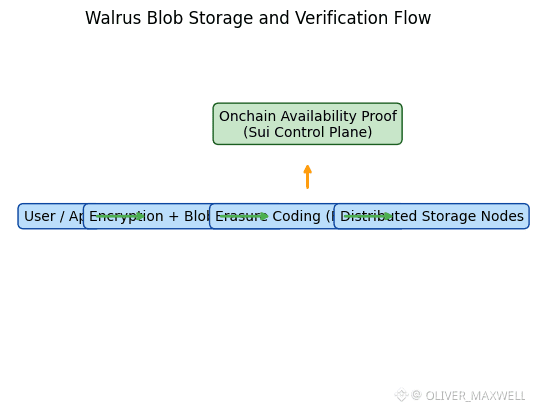

Where Walrus becomes strategically different is its insistence on using Sui as a control plane rather than bolting on a separate coordination layer. In practice this means storage space and stored blobs are represented as onchain resources and objects, so applications can reason about blob availability, lifetime, and management directly in programmable logic. The protocol also publishes an onchain proof of availability certificate as part of the blob lifecycle, which is a compliance-flavored artifact even if the protocol never markets it that way. An institution does not just want data stored. It wants a machine-verifiable receipt that survives internal audits and vendor rotation. This is one of the more underappreciated bridges between Web3 primitives and enterprise procurement behavior.
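One way to picture a blob as a programmable object is the toy model below. It is only a sketch of the idea in Python: the `BlobRecord` class, its fields, and its methods are hypothetical and do not reflect the actual onchain object layout or any Walrus or Sui API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BlobRecord:
    """Hypothetical stand-in for an onchain blob object."""
    blob_id: str
    size_bytes: int
    registered_epoch: int
    end_epoch: int                         # storage is paid up to (not including) this epoch
    certified_epoch: Optional[int] = None  # set once availability has been certified

    def certify(self, epoch: int) -> None:
        """Record that an availability certificate was published at `epoch`."""
        if not (self.registered_epoch <= epoch < self.end_epoch):
            raise ValueError("certification must fall within the storage period")
        self.certified_epoch = epoch

    def is_available(self, current_epoch: int) -> bool:
        """Application logic can gate on certification and remaining lifetime."""
        return (
            self.certified_epoch is not None
            and self.certified_epoch <= current_epoch < self.end_epoch
        )

    def extend(self, extra_epochs: int) -> None:
        """Renew storage by pushing the end epoch out."""
        if extra_epochs <= 0:
            raise ValueError("extra_epochs must be positive")
        self.end_epoch += extra_epochs


if __name__ == "__main__":
    record = BlobRecord("example-blob", 4096, registered_epoch=10, end_epoch=20)
    record.certify(10)
    print(record.is_available(15), record.is_available(20))
    record.extend(5)
    print(record.is_available(20))
```

The point of the model is that "is this blob certified and still paid for" becomes a check that code can make, rather than a question for a vendor's support desk.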

Privacy is where most commentary gets sloppy, so it is worth being precise about what Walrus can and cannot claim. The network’s default stance is not magic confidentiality. It is blast radius minimization. Erasure coding distributes file fragments so that no single operator holds the complete blob, which reduces insider risk and makes casual exfiltration harder. On top of that, users can apply encryption for sensitive payloads, treating Walrus as availability and integrity infrastructure while confidentiality lives in encryption and key management. The important nuance is that public blockchains are transparent by design, so metadata about storage actions can still be observable. The privacy posture is therefore best described as a privacy-preserving storage architecture with optional encryption, not as invisibility. That honesty is exactly what institutions need to hear, because their risk models distinguish content confidentiality from metadata leakage.

If you want a clean mental model for Walrus, think of it as turning storage into a governed market with explicit service quality incentives. The docs describe a committee of storage nodes that evolves across epochs, with delegated staking and rewards distributed at epoch boundaries. That structure matters because it creates a continuous feedback loop between performance and capital allocation. In centralized storage, you negotiate an SLA and then hope the provider honors it. In Walrus, the protocol can, in principle, encode penalties and rewards into the stake-weighted selection of nodes and into future slashing. It is not that slashing alone solves trust. It is that the network is engineered to make underperformance economically legible rather than socially debated.
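As an illustration of what stake-weighted selection can look like, the sketch below splits a fixed number of storage slots across nodes in proportion to delegated stake, using a largest-remainder rule. This is a generic technique chosen for the example, not the protocol's documented algorithm; `assign_slots` and the notion of "slots" are hypothetical.

```python
def assign_slots(stakes: dict[str, int], total_slots: int) -> dict[str, int]:
    """Split `total_slots` across nodes proportionally to stake.

    Uses integer arithmetic and the largest-remainder method so the result
    always sums to `total_slots`. Ties are broken by node name.
    """
    total_stake = sum(stakes.values())
    if total_stake <= 0:
        raise ValueError("total stake must be positive")

    slots: dict[str, int] = {}
    remainders: dict[str, int] = {}
    for node, stake in stakes.items():
        # Whole slots earned outright, plus the fractional part left over.
        slots[node], remainders[node] = divmod(total_slots * stake, total_stake)

    leftover = total_slots - sum(slots.values())
    ranked = sorted(stakes, key=lambda node: (remainders[node], node), reverse=True)
    for node in ranked[:leftover]:
        slots[node] += 1
    return slots


if __name__ == "__main__":
    print(assign_slots({"node-a": 50, "node-b": 30, "node-c": 20}, total_slots=10))
```

When delegators move stake away from a poorly performing node, its share of slots (and therefore of fees) shrinks at the next epoch, which is the feedback loop described above.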

This is also where the WAL token becomes more than a payment chip. WAL is explicitly the payment token for storage, but the payment mechanism is designed to keep storage costs stable in fiat terms and to protect users from long-term token price swings. That is an unusual choice in crypto economics because it prioritizes procurement predictability over speculative reflexivity. Users pay upfront for a fixed storage period and the protocol distributes that payment over time to nodes and stakers, aligning revenue with the ongoing obligation to keep data available. There is also a disclosed subsidy allocation meant to reduce effective user cost during early adoption, which is a growth lever that looks more like go-to-market budgeting than like token inflation theater.
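The "pay upfront, release over time" idea can be sketched as a simple payout schedule. The code below assumes an even split across epochs in the token's smallest unit; the real distribution rules, and how payouts are divided between nodes and stakers, are not specified here, and `payout_schedule` is a hypothetical name.

```python
def payout_schedule(prepaid: int, epochs: int) -> list[int]:
    """Spread a prepaid amount evenly over `epochs` payouts.

    Amounts are integers (smallest token unit). Any remainder is handed out
    one unit at a time to the earliest epochs so nothing is lost to rounding.
    """
    if prepaid < 0:
        raise ValueError("prepaid must be non-negative")
    if epochs <= 0:
        raise ValueError("epochs must be positive")
    base, extra = divmod(prepaid, epochs)
    return [base + (1 if i < extra else 0) for i in range(epochs)]


if __name__ == "__main__":
    schedule = payout_schedule(prepaid=1_000, epochs=12)
    print(schedule, sum(schedule))
```

Because operators are paid epoch by epoch rather than at upload time, the revenue only materializes if the data is still being served.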

Token design can still fail if it ignores the real operational externalities of blob storage. Walrus appears to acknowledge one of the nastier ones: stake churn. Rapid stake shifts are not just governance drama. They can force reallocation of storage responsibilities and trigger expensive data migration, which is a real cost paid in bandwidth and operational load. Walrus proposes a penalty fee on short-term stake shifts, partially burned and partially distributed to long-term stakers, explicitly to discourage noisy reallocations. It also describes a future burn path tied to slashing for low-performing nodes. Those mechanics are meaningful because they target behavior that creates protocol-wide costs rather than simply trying to manufacture scarcity.
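The split between burning and redistribution is simple arithmetic, sketched below. The fee rate and burn share are placeholder parameters expressed in basis points; they are assumptions for illustration, not published protocol values, and `stake_shift_penalty` is a hypothetical name.

```python
from typing import NamedTuple


class Penalty(NamedTuple):
    fee: int          # total penalty charged on the shifted stake
    burned: int       # portion removed from supply
    to_stakers: int   # portion paid to long-term stakers


def stake_shift_penalty(amount: int, fee_bps: int, burn_bps: int) -> Penalty:
    """Compute a penalty on a short-term stake shift.

    `fee_bps` is the fee as basis points of `amount`; `burn_bps` is the share
    of that fee which is burned. Both values are illustrative assumptions.
    """
    if amount < 0:
        raise ValueError("amount must be non-negative")
    if not (0 <= fee_bps <= 10_000 and 0 <= burn_bps <= 10_000):
        raise ValueError("basis points must be between 0 and 10000")
    fee = amount * fee_bps // 10_000
    burned = fee * burn_bps // 10_000
    return Penalty(fee=fee, burned=burned, to_stakers=fee - burned)


if __name__ == "__main__":
    # Placeholder numbers: a 1% fee, half of which is burned.
    print(stake_shift_penalty(amount=1_000_000, fee_bps=100, burn_bps=5_000))
```

Whatever the real parameters turn out to be, the design intent is that the party causing migration cost pays for it, and patient stakers are the ones compensated.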

For institutional adoption, the conversation usually collapses into three fears: data durability under adversarial conditions, legal and operational clarity about who is responsible when things go wrong, and the cost of integrating into existing stacks. Walrus addresses durability with its resilience-oriented coding and with research claims about robustness under churn, including epoch change protocols designed to maintain availability during committee transitions. It addresses clarity by making the storage lifecycle auditable through onchain objects and availability proofs that can be referenced in internal controls. And it addresses integration cost by exposing interfaces that look familiar to existing developer workflows, including CLI and SDK approaches and compatibility with existing delivery patterns like caching layers, while still allowing verification to be run locally when needed. This is exactly the mix an enterprise buyer wants: familiar integration surfaces with cryptographic backstops.

The most compelling real-world use cases are not generic file hosting. They are environments where integrity, timed availability, and auditability are first-order requirements. Think regulated record bundles where you need a verifiable commitment that the bundle exists and has not been swapped. Think AI data pipelines where provenance and immutability matter as much as throughput, especially when datasets are expensive to curate and politically sensitive to alter. Think marketplaces where content availability is itself a contractual promise. Walrus’s programmability angle matters here because storage is not just where data sits. It becomes part of application logic. You can build systems where access, renewal, escrow, and expiration are enforced through the same onchain primitives that already enforce financial logic.
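The "has not been swapped" property is, at bottom, a content commitment check. The sketch below uses a plain SHA-256 digest from Python's standard library as a stand-in; the way Walrus actually derives blob identifiers is not described here and should be assumed to differ. The function names are hypothetical.

```python
import hashlib
import hmac


def commitment(data: bytes) -> str:
    """Return a hex digest that commits to the exact bytes of `data`.

    Stand-in only: a plain SHA-256 hash, not the protocol's blob ID scheme.
    """
    return hashlib.sha256(data).hexdigest()


def matches_commitment(data: bytes, expected_hex: str) -> bool:
    """Check retrieved bytes against a previously recorded commitment."""
    return hmac.compare_digest(commitment(data), expected_hex)


if __name__ == "__main__":
    bundle = b"2024-Q4 audit bundle contents"
    recorded = commitment(bundle)  # what an auditor would keep on file
    print(matches_commitment(bundle, recorded))
    print(matches_commitment(bundle + b" (edited)", recorded))
```

An auditor who holds only the recorded digest can later confirm that whatever is retrieved is byte-for-byte the bundle that was originally committed.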

There is also a subtle market positioning advantage in focusing on blobs rather than pretending to be a universal storage layer. Blobs let Walrus optimize for throughput, recovery, and availability certification without inheriting the complexity of directory semantics and rich file system expectations. That specialization is a defensible moat because the performance bottleneck in decentralized storage is rarely the happy-path write. It is recovery under churn and adversarial conditions. The research emphasis on challenge resistance in asynchronous networks and on authenticated data structures for consistency suggests Walrus is trying to harden exactly those weak points, which is where reputations are made or broken.

The forward-looking question is whether Walrus becomes just a storage network or the default substrate for data markets. The language in the docs explicitly frames Walrus as enabling data markets for the AI era and making data reliable, valuable, and governable. If you take that seriously, WAL is not only pricing bytes. It is underwriting a system where data availability is a tradeable primitive and where storage quality can be quantified and governed. In that world, staking is not passive yield hunting. It is capital choosing which operators the network will trust with the next wave of valuable data. And the protocol’s emphasis on predictable user pricing is what makes it plausible that non-crypto buyers can participate without turning storage budgeting into a token volatility bet.

Walrus matters because it moves decentralized storage away from ideology and toward enforceable performance. It is building a story where availability is provable, repair is operationally sane at blob scale, and incentives are engineered around the real costs that appear when data is large and churn is normal. If Walrus executes, its most important contribution may not be cheaper storage. It may be the normalization of a new standard: data that comes with a native receipt, a programmable lifecycle, and a market of operators whose behavior is measurable and punishable. That is the kind of infrastructure shift that quietly changes what applications can promise, and what institutions can finally sign.