I remember the moment clearly. I was reviewing a protocol I had followed for months, tracing a past state to understand why something behaved the way it did. The transactions were visible. The logic was visible. But the underlying data had gaps.

Nothing malicious, nothing dramatic. Just pieces missing. As a trader, that is annoying. As someone who thinks about infrastructure, it is unsettling. It means the system assumed visibility was enough to guarantee reliability.

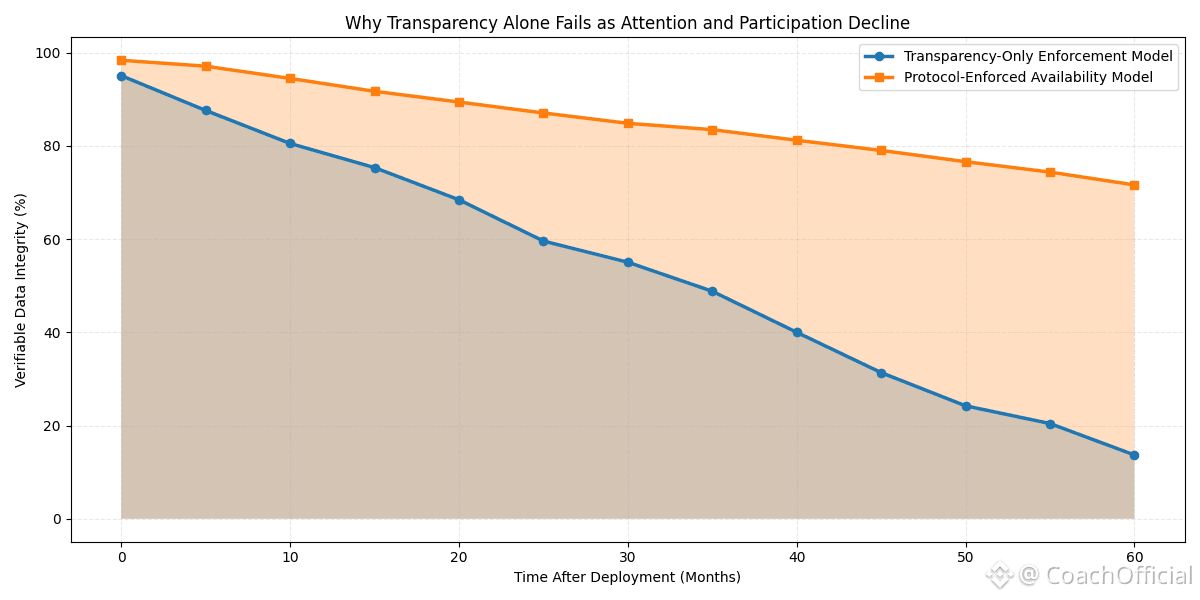

The problem itself is not complicated. Many decentralized systems treat transparency as a substitute for enforcement. If everyone can see what is happening, the thinking goes, bad behavior will be corrected. That works when participation is small and informal. It starts breaking when real value depends on the system continuing to function even when no one is watching closely. Data availability becomes an assumption instead of a guarantee, and assumptions age badly.

The analogy that keeps coming back to me is a public ledger in a warehouse. You can write every shipment on a whiteboard in plain sight, but if boxes quietly go missing and no mechanism forces replacement, the transparency does not fix the shortage. It only documents it after the fact.

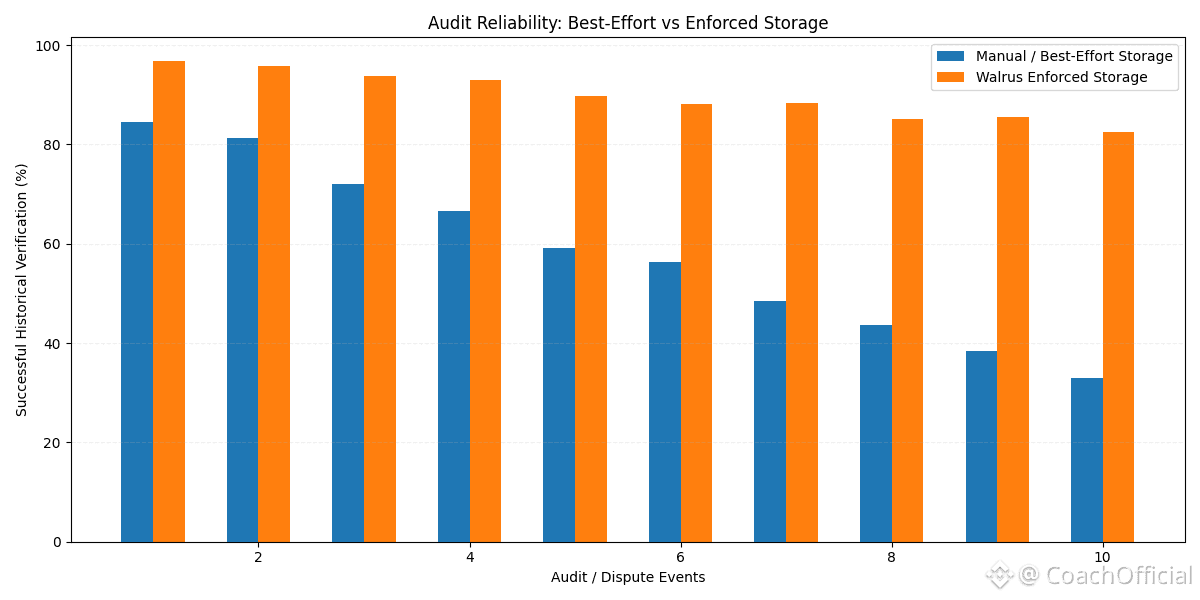

This is where Walrus takes a different posture. In plain terms, it is built to make data recoverable by design rather than by good behavior. When information is submitted, it is split and encoded across many participants so that no single node is a point of failure.

Two implementation details stand out. The first is the reconstruction threshold: even if a significant portion of operators disappears or goes offline, the remaining fragments are mathematically sufficient to recover the original data, as long as enough of them survive to meet that threshold. The second is the use of verifiable availability proofs, which allow other systems to check that data can still be retrieved without trusting any individual storage provider.
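To make the reconstruction threshold concrete, here is a minimal sketch of a k-of-n erasure code in Python. It is a toy Reed-Solomon-style construction over a small prime field, not Walrus's actual encoding scheme, and the names `encode`, `decode`, and `P` are my own for illustration. The point it shows is narrow: any k surviving fragments out of n are enough to rebuild the original bytes.

```python
# Toy k-of-n erasure code (illustrative assumption, not Walrus's real scheme).
# Each data byte is treated as a value of a polynomial over the field GF(257).

P = 257  # smallest prime above 255, so every byte value is a field element


def _interpolate_at(points, x_target):
    """Evaluate the unique polynomial through `points` at x_target (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x_target - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, n: int):
    """Turn k = len(data) bytes into n fragments (x, y); any k of them suffice."""
    k = len(data)
    if not (0 < k <= n < P):
        raise ValueError("need 0 < len(data) <= n < 257")
    base = [(i + 1, b) for i, b in enumerate(data)]
    return [(x, _interpolate_at(base, x)) for x in range(1, n + 1)]


def decode(fragments, k: int) -> bytes:
    """Rebuild the original k bytes from any k distinct fragments."""
    if len(fragments) < k:
        raise ValueError("below the reconstruction threshold")
    points = fragments[:k]
    return bytes(_interpolate_at(points, x) for x in range(1, k + 1))


if __name__ == "__main__":
    fragments = encode(b"walrus", n=10)   # threshold k = 6, total n = 10
    survivors = fragments[4:]             # four operators vanish
    print(decode(survivors, k=6))
```

With n = 10 and k = 6, up to four fragments can disappear without any loss. A fifth loss drops the system below the threshold, and `decode` refuses rather than returning something wrong.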

The token plays a narrow, mechanical role. It is used to pay for storage over time, to stake commitments that can be slashed if availability guarantees are broken, and to govern parameters like redundancy levels. It does not promise demand. It enforces rules once demand exists.
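A rough sketch of that mechanical role, under assumptions of my own: the `Operator` class, the `settle_epoch` function, the stake-weighted split, and the slash fraction are all hypothetical and are not taken from Walrus's actual contracts. It only illustrates the shape of the rule, where storage fees flow to operators that pass an availability check and stake is cut for those that fail.

```python
from dataclasses import dataclass


@dataclass
class Operator:
    name: str
    stake: float  # tokens locked as a commitment (hypothetical units)


def settle_epoch(operators, passed_check, reward_pool, slash_fraction):
    """Split the epoch's storage fees among operators that passed the
    availability check, weighted by stake, and slash the ones that failed.

    Returns a dict of name -> net token change for the epoch.
    """
    honest_stake = sum(op.stake for op in operators if passed_check[op.name])
    changes = {}
    for op in operators:
        if passed_check[op.name]:
            changes[op.name] = reward_pool * op.stake / honest_stake
        else:
            penalty = op.stake * slash_fraction
            op.stake -= penalty  # the broken guarantee costs locked stake
            changes[op.name] = -penalty
    return changes


if __name__ == "__main__":
    ops = [Operator("a", 100.0), Operator("b", 300.0), Operator("c", 100.0)]
    checks = {"a": True, "b": True, "c": False}
    print(settle_epoch(ops, checks, reward_pool=40.0, slash_fraction=0.25))
```

Nothing in this rule creates demand. The reward pool only exists if someone is paying for storage, which is the sense in which the token enforces rather than promises.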

The broader market context helps explain why this matters. Global data creation is estimated at well over 100 zettabytes annually, and on-chain applications are no longer experiments run by a handful of developers. Institutions exploring blockchain rails care less about throughput headlines and more about whether historical data will still exist years later. Relative to that scale, decentralized storage remains small, which makes the reliability question more important than growth narratives.

From a trading perspective, these systems often look quiet. Short-term price action tends to respond to sentiment, rotations, and liquidity conditions. Infrastructure value shows up differently. It accrues through integrations, defaults chosen by builders, and resilience during uneventful periods when speculation fades. That gap between short-term signals and long-term usefulness is where a lot of mispricing tends to live.

There are real risks. Competition from other storage and availability layers is intense, and some have stronger network effects today. A plausible failure mode is economic drift, where storage rewards fail to keep pace with real-world costs, leading operators to slowly exit until redundancy assumptions are stressed. There is also uncertainty around how these guarantees hold under sustained adversarial conditions rather than cooperative ones.
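The economic drift scenario is easy to reason about with a toy model. The sketch below is purely illustrative: the function name, the exit rule, and every number in it are made up for the example and do not describe any real network. It counts how many epochs pass before operator exits push the fragment count below the reconstruction threshold.

```python
def epochs_until_unrecoverable(n, k, reward, cost, cost_growth,
                               exit_rate, max_epochs=1000):
    """Toy drift model with hypothetical parameters.

    n operators each hold one fragment and k fragments are needed to recover.
    Each epoch the operating cost grows by `cost_growth`. Whenever cost
    exceeds the fixed reward, a fraction `exit_rate` of the remaining
    operators (at least one) leaves. Returns the epoch in which fewer than k
    operators remain, or None if that never happens within max_epochs.
    """
    active = n
    for epoch in range(1, max_epochs + 1):
        cost *= 1 + cost_growth
        if cost > reward:
            active -= max(1, int(active * exit_rate))
        if active < k:
            return epoch
    return None


if __name__ == "__main__":
    # Rewards start above cost, but cost grows 10% per epoch.
    print(epochs_until_unrecoverable(n=10, k=7, reward=100.0, cost=90.0,
                                     cost_growth=0.10, exit_rate=0.1))
```

The uncomfortable part of the model is how uneventful it is. Nothing fails on the epoch when rewards fall behind costs. The data only becomes unrecoverable several epochs later, after a run of individually rational exits.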

I do not pretend to know which protocols will dominate this layer over time. What I am more confident about is that trust does not come from being observable. It comes from surviving quiet failures without drama. Adoption here is slow, almost invisible, until one day the systems that kept working become the only ones people remember.

@Dusk #Dusk #dusk $DUSK