In many decentralized systems, malicious behavior is treated like a dramatic event. Something breaks. Someone is blamed. Emergency measures are triggered. Walrus takes a very different approach. It assumes that malicious behavior will always exist and that the real challenge is not stopping it instantly but preventing it from gaining influence.

Walrus is built around a simple question. Does this node consistently do what the network relies on it to do? Everything flows from that. The network does not care about intention. It does not speculate about motives. It only cares about outcomes.

Every node participating in Walrus agrees to a basic role. Store assigned data and prove that it is stored when asked. These proofs are not ceremonial. They are frequent enough to matter and unpredictable enough to prevent shortcuts. Over time, nodes accumulate a behavioral footprint.
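To make the idea of an unpredictable storage proof concrete, here is a minimal sketch of a challenge and response round. It is not Walrus's actual protocol. The function names, the tiny chunk size, and the assumption that the verifier holds a reference copy of the data are all mine, chosen to keep the example short. A real system would check responses against a compact commitment instead of the full data.

```python
import hashlib
import secrets

CHUNK_SIZE = 4  # bytes per chunk; unrealistically small, for illustration only


def split_chunks(blob: bytes, size: int = CHUNK_SIZE) -> list[bytes]:
    """Split a blob into fixed-size chunks (the last one may be shorter)."""
    return [blob[i:i + size] for i in range(0, len(blob), size)]


def make_challenge(num_chunks: int) -> tuple[int, bytes]:
    """Pick an unpredictable chunk index and a fresh random nonce."""
    return secrets.randbelow(num_chunks), secrets.token_bytes(16)


def respond(chunks: list[bytes], index: int, nonce: bytes) -> str:
    """Node side: hash the nonce together with the requested chunk."""
    return hashlib.sha256(nonce + chunks[index]).hexdigest()


def verify(reference: list[bytes], index: int, nonce: bytes, response: str) -> bool:
    """Verifier side (hypothetical): recompute the expected hash and compare."""
    return response == respond(reference, index, nonce)
```

Because the nonce is fresh each round, a node cannot precompute answers and throw the data away. Because the index is random, it cannot know in advance which chunk it will need.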

What makes Walrus different is that it looks at this footprint in context. A node is never judged in isolation. Its performance is compared against the broader population. This matters because absolute thresholds are fragile. A fixed cutoff misfires whenever conditions shift for everyone at once. Relative thresholds are resilient because they move with the network.

For example, if the average node responds correctly to ninety-eight percent of challenges, that becomes the baseline. A node responding correctly ninety percent of the time is not just slightly worse. It is an outlier. Walrus treats outliers seriously, but not emotionally.
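One way to picture a relative threshold is a cutoff derived from the population itself. The sketch below uses the median and the median absolute deviation, which is my choice of statistic and not something Walrus documents. The parameters `k` and `min_spread` are hypothetical tuning knobs.

```python
from statistics import median


def find_outliers(rates: dict[str, float], k: float = 3.0,
                  min_spread: float = 0.005) -> list[str]:
    """Return nodes whose success rate sits far below the population.

    The cutoff is the median rate minus k times the median absolute
    deviation. min_spread keeps the cutoff sane when all nodes are
    nearly identical and the deviation collapses to zero.
    """
    center = median(rates.values())
    spread = max(median(abs(r - center) for r in rates.values()), min_spread)
    cutoff = center - k * spread
    return sorted(node for node, rate in rates.items() if rate < cutoff)
```

With four nodes near ninety-eight percent and one at ninety percent, only the ninety percent node is flagged. If every node drops to ninety percent together, for instance during a network-wide incident, nobody is flagged, which is exactly the resilience a fixed cutoff lacks.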

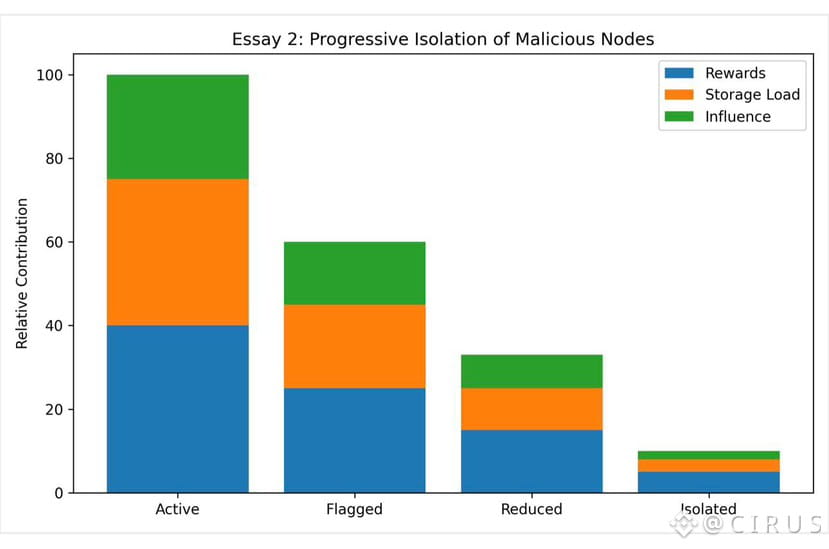

The system applies gradual pressure. First, rewards are adjusted. Nodes that underperform earn less. This alone filters out many forms of laziness or opportunistic behavior. Storage costs real resources. If rewards do not cover those costs, dishonest participation stops being attractive.
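The economics can be sketched in a few lines. Everything here is an assumption for illustration: the reward formula, the `penalty_exponent` parameter, and the numbers in the note that follows. None of it is taken from Walrus's actual reward schedule.

```python
def epoch_reward(base_reward: float, success_rate: float,
                 baseline: float, penalty_exponent: float = 4.0) -> float:
    """Scale a node's reward by its performance relative to the baseline.

    Nodes at or above the baseline earn the full base reward. Nodes below
    it are penalized more than proportionally when penalty_exponent > 1.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    ratio = min(success_rate / baseline, 1.0)
    return base_reward * ratio ** penalty_exponent


def is_profitable(reward: float, storage_cost: float) -> bool:
    """A node only has reason to stay if rewards exceed what storage costs it."""
    return reward > storage_cost
```

Under these made-up numbers, with a base reward of 100 and a storage cost of 80, a node matching a ninety-eight percent baseline keeps a margin of 20. A node at ninety percent earns roughly 71 and is now running at a loss.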

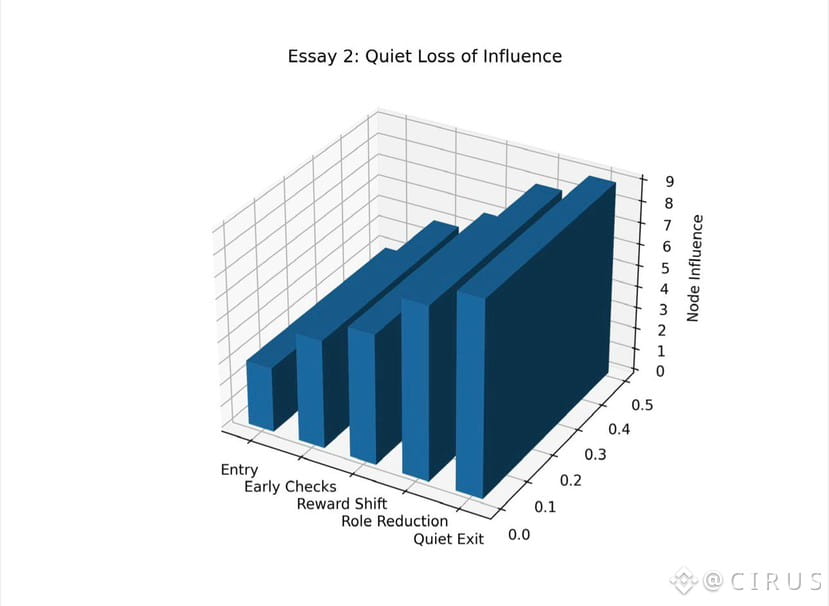

If underperformance continues, the node’s responsibilities are reduced. It is trusted with less data. Its role shrinks. Importantly, this happens without announcement. Other nodes do not need to coordinate. There is no governance vote. The system simply reallocates trust.
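A quiet reallocation like this needs no messages between nodes if assignment is simply a function of each node's current standing. The sketch below assumes a per-node `trust` weight and a fixed number of `shards` to hand out. Both names and the proportional rule are hypothetical, not Walrus's actual assignment logic.

```python
def allocate_shards(trust: dict[str, float], total_shards: int) -> dict[str, int]:
    """Split total_shards across nodes in proportion to their trust weight.

    Uses the largest-remainder method so the counts always sum to
    total_shards. Ties are broken by node name to stay deterministic.
    """
    total = sum(trust.values())
    if total <= 0:
        raise ValueError("at least one node must have positive trust")
    exact = {n: total_shards * t / total for n, t in trust.items()}
    alloc = {n: int(x) for n, x in exact.items()}
    leftover = total_shards - sum(alloc.values())
    # Hand out the remaining shards to the largest fractional parts first.
    order = sorted(trust, key=lambda n: (-(exact[n] - alloc[n]), n))
    for n in order[:leftover]:
        alloc[n] += 1
    return alloc
```

When one node's weight falls, rerunning the same function yields a smaller share for it and a slightly larger share for everyone else. No vote, no announcement, just a new allocation.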

This quiet rebalancing is crucial. Malicious nodes thrive on disruption and attention. Walrus gives them neither. A node attempting to exploit the system finds itself slowly sidelined rather than dramatically expelled.

Quantitatively, this model works because malicious strategies do not scale well under repeated verification. Each challenge is a fresh chance of being caught, and those chances compound. A node can fake storage once or twice. It cannot fake it hundreds of times without detection. The longer a node keeps up the deception, the more its expected cost outweighs any potential gain.
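The arithmetic behind this is simple under one simplifying assumption of mine: each challenge independently samples a uniformly random piece of the node's assigned data. A node that keeps only a fraction `f` of its data then passes `n` challenges in a row with probability `f ** n`.

```python
import math


def survival_probability(stored_fraction: float, challenges: int) -> float:
    """Chance that a node storing only stored_fraction passes every challenge."""
    return stored_fraction ** challenges


def challenges_to_detect(stored_fraction: float, confidence: float = 0.99) -> int:
    """Smallest number of challenges that catches the node with this confidence."""
    if not 0 < stored_fraction < 1:
        raise ValueError("stored_fraction must be strictly between 0 and 1")
    return math.ceil(math.log(1 - confidence) / math.log(stored_fraction))
```

A node that discards ten percent of its data survives two challenges about eighty-one percent of the time. Under this model it survives a hundred challenges less than one time in ten thousand, and 44 challenges are enough to catch it with ninety-nine percent confidence.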

Another important element is redundancy. Walrus never relies on a single node to preserve critical data. Storage is distributed deliberately. This means that even if a node becomes malicious, the immediate impact is limited. Detection can take its time. Isolation can be gradual. User data remains safe throughout.

This approach also protects against false positives. Nodes that experience temporary issues are not destroyed by a single bad period. Recovery is possible. Trust can be rebuilt. This makes the network more humane, even though it is driven by code.
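One common way to get this forgiving behavior is an exponentially weighted score, where each new result nudges the score and old results fade. This is a generic technique I am using for illustration. The `alpha` smoothing factor is a hypothetical parameter, and nothing here is drawn from Walrus's implementation.

```python
def update_trust(trust: float, passed: bool, alpha: float = 0.1) -> float:
    """Blend the latest challenge result into a running trust score in [0, 1]."""
    return (1 - alpha) * trust + alpha * (1.0 if passed else 0.0)


def replay(trust: float, outcomes: list[bool], alpha: float = 0.1) -> float:
    """Apply a sequence of challenge outcomes to a starting trust score."""
    for passed in outcomes:
        trust = update_trust(trust, passed, alpha)
    return trust
```

Starting from a perfect score, five failed challenges in a row pull the score down to about 0.59, a visible dent but far from zero. Thirty clean responses afterward bring it back above 0.98.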

From the outside, this may look slow. However, in infrastructure, slowness in judgment often leads to stability. Fast punishment creates fear and fragility. Measured response creates confidence.

As blob sizes increase and storage becomes heavier, this model becomes even more important. Large data makes shortcuts tempting. Walrus responds by making shortcuts visible. The larger the responsibility, the easier it is to spot neglect.

What stands out to me is how Walrus treats malicious nodes not as enemies but as economic inefficiencies. The system does not fight them. It outgrows them. Over time, only nodes that consistently contribute remain relevant.

In my view, this is the kind of thinking decentralized storage has been missing. Not louder enforcement. Not harsher rules. Just persistent observation and quiet consequence. Walrus shows that when systems are designed this way, bad actors do not need to be chased away. They simply fail to survive.