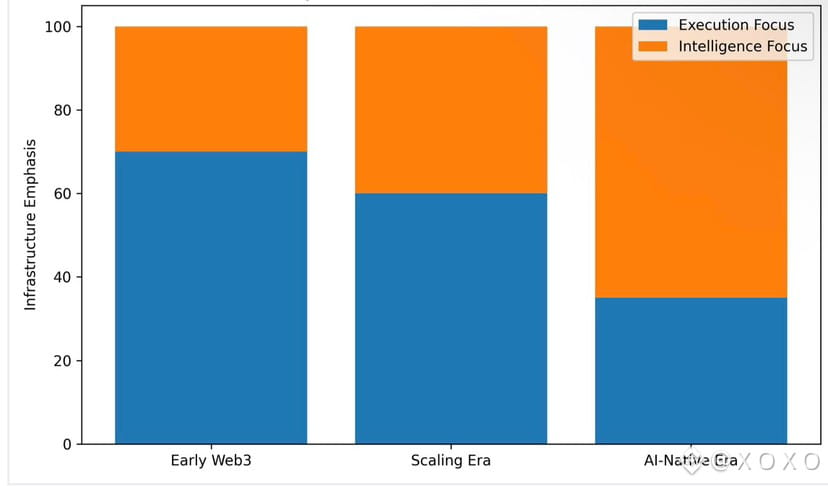

For most of Web3’s short history, progress has been measured in numbers that are easy to display and even easier to compare. Block times got shorter. Fees went down. Throughput went up. Each cycle brought a new chain claiming to have solved one more performance bottleneck, and for a long time that was convincing. Faster execution felt like real progress because execution was genuinely scarce.

That context matters, because it explains why so much of the industry still frames innovation as a race. If one chain is faster, cheaper, or capable of handling more transactions per second than another, then surely it must be better. That logic held when blockchains were competing to become usable at all. It breaks down once usability becomes table stakes.

Today, most serious chains can already do the basics well enough. Transfers settle quickly. Fees are manageable. Throughput is rarely the limiting factor outside of extreme conditions. Execution has not disappeared as a concern, but it has become abundant. And abundance changes what matters.

When execution is scarce, it is a moat. When execution is cheap and widely available, it becomes infrastructure. At that point, competition shifts from speed to something less visible and harder to quantify.

This is the quiet shift Vanar is responding to.

Execution Solved the Last Era’s Problems

The first era of blockchains was about proving that decentralized execution could work at all. Early systems struggled under minimal load. Fees spiked unpredictably. Confirmation times were measured in minutes rather than seconds. In that environment, every improvement felt revolutionary.

As ecosystems matured, specialization followed. Privacy chains focused on confidentiality. DeFi chains optimized for composability. RWA chains leaned into compliance. Gaming chains targeted latency. Each category found its audience, and for a time, differentiation was clear.

However, the industry has reached a point where these distinctions no longer define the ceiling. A modern chain can be fast, cheap, private, and compliant enough to support real use cases. Execution capabilities have converged.

When multiple systems can satisfy the same baseline requirements, the question stops being how fast something runs and becomes how well it understands what it is running.

Humans Were the Assumed Users

Most blockchains were designed with a very specific mental model in mind. A human initiates an action. The network validates it. A smart contract executes logic that was written ahead of time. The transaction completes, and the system moves on.

That model works well for transfers, swaps, and simple workflows. It assumes discrete actions, clear intent, and limited context. In other words, it assumes that intelligence lives outside the chain.

This assumption held as long as humans were the primary actors.

It starts to fail when autonomous systems enter the picture.

Why Autonomous Agents Change Everything

AI agents do not behave like users clicking buttons. They operate continuously. They observe, decide, act, and adapt. Their decisions depend on prior states, evolving goals, and external signals. They require memory, not just state. They require reasoning, not just execution.

A chain that only knows how to execute pre-defined logic becomes a bottleneck for autonomy. It can process instructions, but it cannot explain why those instructions were generated. It cannot preserve the reasoning context behind decisions. It cannot enforce constraints that span time rather than transactions.

This is not an edge case. It is a structural mismatch.
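To make "constraints that span time rather than transactions" concrete, here is a minimal sketch of a rolling spend limit. The `RollingSpendLimit` class and its parameters are hypothetical names invented for illustration and do not come from Vanar or any real chain. The point is only that each transaction can look valid in isolation and still be rejected because of what came before it.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class RollingSpendLimit:
    """Hypothetical policy: cap total spend inside any sliding time window."""
    max_total: int        # maximum units allowed inside one window
    window_seconds: int   # length of the sliding window
    _history: deque = field(default_factory=deque)  # (timestamp, amount) pairs

    def allow(self, timestamp: int, amount: int) -> bool:
        # Forget spends that have aged out of the window.
        cutoff = timestamp - self.window_seconds
        while self._history and self._history[0][0] <= cutoff:
            self._history.popleft()
        spent = sum(past_amount for _, past_amount in self._history)
        if spent + amount > self.max_total:
            return False  # fine on its own, rejected because of prior actions
        self._history.append((timestamp, amount))
        return True


limit = RollingSpendLimit(max_total=100, window_seconds=3600)
results = [
    limit.allow(timestamp=0, amount=60),     # within budget
    limit.allow(timestamp=600, amount=60),   # would bring the window to 120
    limit.allow(timestamp=3700, amount=60),  # first spend has aged out
]
print(results)  # [True, False, True]
```

The second call is rejected even though a 60-unit transfer is unremarkable by itself. A system that evaluates each transaction independently has no way to express that rule without keeping history somewhere.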

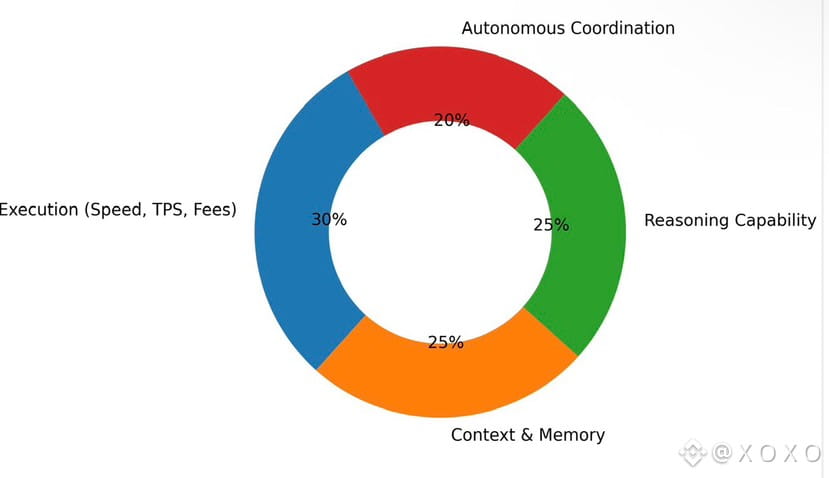

As agents take on more responsibility, whether in finance, governance, or coordination, the infrastructure supporting them must evolve. Speed alone does not help an agent justify its actions. Low fees do not help an agent recall why it behaved a certain way. High throughput does not help an agent comply with policy over time.

Intelligence becomes the limiting factor.

The Intelligence Gap in Web3

Much of what is currently labeled as AI-native blockchain infrastructure avoids this problem rather than solving it. Intelligence is pushed off-chain. Memory lives in centralized databases. Reasoning happens in opaque APIs. The blockchain is reduced to a settlement layer that records outcomes without understanding them.

This architecture works for demonstrations. It struggles under scrutiny.

Once systems need to be audited, explained, or regulated, black-box intelligence becomes a liability. When an agent’s decision cannot be reconstructed from on-chain data, trust erodes. When reasoning is external, enforcement becomes fragile.

Vanar started from a different assumption. If intelligence matters, it must live inside the protocol.

From Execution to Understanding

The shift Vanar is making is not about replacing execution. Execution remains necessary. However, it is no longer sufficient.

An intelligent system must preserve meaning over time. It must reason about prior states. It must automate action in a way that leaves an understandable trail. It must enforce constraints at the infrastructure level rather than delegating responsibility entirely to application code.

These requirements change architecture. They force tradeoffs. They slow development. They are also unavoidable if Web3 is to support autonomous behavior at scale.

Vanar’s stack reflects this reality.

Memory as a First-Class Primitive

Traditional blockchains store state, but they do not preserve context. Data exists, but meaning is external. Vanar’s approach to memory treats historical information as something that can be reasoned over, not just retrieved.

By compressing data into semantic representations, the network allows agents to recall not only what happened, but why it mattered. This is a subtle difference that becomes crucial as decisions compound over time.

Without memory, systems repeat mistakes. With memory, they adapt.

Reasoning Inside the Network

Most current systems treat reasoning as something that happens elsewhere. Vanar treats reasoning as infrastructure.

When inference happens inside the network, decisions become inspectable. Outcomes can be traced back to inputs. Assumptions can be evaluated. This does not make systems perfect, but it makes them accountable.

Accountability is what allows intelligence to scale beyond experimentation.

Automation That Leaves a Trail

Automation without traceability is dangerous. Vanar’s automation layer is designed to produce durable records of what happened, when, and why. This matters not only for debugging, but for trust.

As agents begin to act on behalf of users, institutions, or organizations, their actions must be explainable after the fact. Infrastructure that cannot support this will fail quietly and late.
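One way to picture a trail that records what happened, when, and why is a hash-linked log of decisions. The sketch below is an assumption made for illustration, not a description of Vanar's automation layer: `DecisionRecord`, `append`, and `verify` are hypothetical names, and a real system would anchor the head digest somewhere that cannot be rewritten.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace

GENESIS = "0" * 64  # placeholder digest for the first record


@dataclass(frozen=True)
class DecisionRecord:
    action: str      # what happened
    timestamp: int   # when it happened
    rationale: str   # why the agent did it
    prev_hash: str   # digest of the previous record, linking the trail

    def digest(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()


def append(trail: list, action: str, timestamp: int, rationale: str) -> None:
    prev = trail[-1].digest() if trail else GENESIS
    trail.append(DecisionRecord(action, timestamp, rationale, prev))


def verify(trail: list, head_digest: str) -> bool:
    """Check every link, then compare the final digest with a trusted head."""
    expected = GENESIS
    for record in trail:
        if record.prev_hash != expected:
            return False
        expected = record.digest()
    return expected == head_digest


trail = []
append(trail, "rebalance", 1700000000, "exposure exceeded policy limit")
append(trail, "pause", 1700000600, "price feed went stale")
head = trail[-1].digest()

ok_before = verify(trail, head)
# Rewrite the stated reason for the first action after the fact.
trail[0] = replace(trail[0], rationale="edited after the fact")
ok_after = verify(trail, head)
print(ok_before, ok_after)  # True False
```

Because each record commits to the one before it, changing an earlier rationale breaks every later link. That is the property that lets an agent's actions be explained, and checked, after the fact.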

Why This Shift Is Quiet

The move from execution to intelligence does not produce flashy benchmarks. There is no simple metric for coherence or contextual understanding. Progress is harder to market and slower to demonstrate.

However, once intelligence becomes the bottleneck, execution improvements lose their power as differentiators. Chains that remain focused solely on speed become interchangeable.

Vanar is betting that the next phase of Web3 will reward systems that understand rather than simply execute.

The industry is not abandoning execution. It is moving past it. Speed solved yesterday’s problems. Intelligence will solve tomorrow’s.

Vanar’s decision to step out of the execution race is not a rejection of performance. It is an acknowledgment that performance alone no longer defines progress. As autonomous systems become real participants rather than experiments, infrastructure must evolve accordingly.

This shift will not be loud. It will be gradual. But once intelligence becomes native rather than external, the entire landscape will look different.