The moment you stop trusting storage, you stop shipping. Not because the tech is “bad,” but because your product team learns a brutal lesson: one missing file, one slow retrieval, one corrupted blob, and users do not blame “decentralization.” They blame you. That is why I keep coming back to a simple idea: AI does not just need cheap storage, it needs storage it can trust. And that is the lane Walrus is trying to own.

Here is the retention problem in plain language. In consumer crypto, gaming, or any app that ships fast, users are basically running a daily A/B test on you. Day one feels magical, day seven they are impatient, day thirty they are gone if anything feels unreliable. Storage is one of those silent failure points that trains users to churn. A wallet error is annoying, but a missing image, a broken model artifact, or a failed retrieval turns the whole experience into “this app is sketchy.” And once that story lands, it is very hard to reverse.

I have felt this personally. I still remember a mint where everything looked fine until it did not. The metadata load spiked, the “pinned” content was not actually retrievable through the path users hit, and suddenly support turned into crisis management. Nobody cared what protocol was underneath. They cared that they clicked and it failed. That one night taught me the difference between storage that exists and storage that is dependable under churn.

Walrus’s pitch is basically an attempt to engineer that dependability as a first-class feature. At a high level, it is a decentralized blob storage system. The interesting part is how it tries to stay resilient when nodes come and go, which is the normal reality of decentralized networks. Walrus puts its coordination layer on Sui and uses an erasure-coded data plane designed to self-heal rather than panic and re-download everything.

The core mechanism you will hear about is Red Stuff, which Walrus describes as a two-dimensional erasure coding scheme. What matters for a trader is not the math but the consequence. Instead of copying a file ten times and hoping enough replicas survive, Walrus encodes data into pieces so that the network can reconstruct the original even if a meaningful portion of those pieces goes missing. The Walrus team and its paper describe achieving strong security and recovery with roughly a 4.5x replication factor, while enabling self-healing that needs bandwidth proportional only to the lost data, not to the entire dataset.
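To make “reconstruct from pieces” and “bandwidth proportional to the lost data” concrete, here is a toy sketch. It is not Red Stuff and makes no claim about how Walrus actually encodes blobs. It is a deliberately simple XOR row-and-column parity grid, chosen only because it shows the two-dimensional intuition: a lost piece can be rebuilt from its row or from its column, reading a handful of symbols instead of the whole blob. The grid size, byte values, and function names are all hypothetical.

```python
"""Toy two-dimensional XOR parity grid (hypothetical; NOT Red Stuff)."""
from functools import reduce
from operator import xor
from typing import List, Optional, Tuple

Grid = List[List[Optional[int]]]


def encode(grid: Grid) -> Tuple[List[int], List[int]]:
    """Compute one XOR parity symbol per row and one per column of a k x k grid."""
    row_parity = [reduce(xor, row) for row in grid]
    col_parity = [reduce(xor, col) for col in zip(*grid)]
    return row_parity, col_parity


def repair_from_row(grid: Grid, row_parity: List[int], r: int, c: int) -> int:
    """Rebuild grid[r][c] from the surviving symbols in row r plus that row's parity."""
    survivors = [v for j, v in enumerate(grid[r]) if j != c]
    return reduce(xor, survivors, row_parity[r])


def repair_from_col(grid: Grid, col_parity: List[int], r: int, c: int) -> int:
    """Rebuild grid[r][c] from the surviving symbols in column c plus that column's parity."""
    survivors = [grid[i][c] for i in range(len(grid)) if i != r]
    return reduce(xor, survivors, col_parity[c])


if __name__ == "__main__":
    blob = [[0x11, 0x22, 0x33], [0x44, 0x55, 0x66], [0x77, 0x88, 0x99]]
    rows, cols = encode(blob)

    damaged = [row[:] for row in blob]
    damaged[1][2] = None  # simulate one lost piece

    print(hex(repair_from_row(damaged, rows, 1, 2)))  # 0x66
    print(hex(repair_from_col(damaged, cols, 1, 2)))  # 0x66
```

In this toy, repairing one cell reads k symbols (k - 1 survivors plus one parity) rather than all k x k. Single XOR parity only survives one loss per row or column; production erasure codes tolerate far more, which is where the real engineering lives.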

Now zoom out to why AI changes the stakes. AI workflows create artifacts that are both large and operationally critical: model checkpoints, embeddings, fine-tuning datasets, RAG indexes, agent memory logs, and media outputs. If any of that becomes intermittently unavailable, the “AI feature” looks flaky. Worse, the failures are nondeterministic, which is exactly what destroys user trust. The product does not feel like software; it feels like gambling. So the question that matters is not “can we store it,” but “can we retrieve it consistently when demand spikes and parts of the network churn.”
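“Consistently” is measurable. A minimal sketch, assuming you log each retrieval attempt as a success flag plus a latency: report the success rate and the tail latency, not just the average, because the tail is what users experience as “flaky.” The `Fetch` record, the nearest-rank percentile, and the sample numbers are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Fetch:
    ok: bool           # did the retrieval return the blob?
    latency_ms: float  # time taken (ignored for failed fetches)


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not values:
        raise ValueError("no successful samples")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def reliability_report(samples: List[Fetch]) -> Dict[str, float]:
    """Success rate over all fetches, plus median and p99 latency of successful ones."""
    if not samples:
        raise ValueError("no samples")
    ok_latencies = [s.latency_ms for s in samples if s.ok]
    return {
        "success_rate": len(ok_latencies) / len(samples),
        "p50_ms": percentile(ok_latencies, 50),
        "p99_ms": percentile(ok_latencies, 99),
    }


if __name__ == "__main__":
    # Hypothetical numbers: mostly fast, a few slow, two outright failures.
    samples = [Fetch(True, 120.0)] * 95 + [Fetch(True, 2400.0)] * 3 + [Fetch(False, 0.0)] * 2
    print(reliability_report(samples))
```

With these made-up samples the median is a healthy 120 ms while p99 is 2,400 ms and 2 percent of fetches fail outright. That gap between “usually fine” and “sometimes broken” is exactly the nondeterminism described above.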

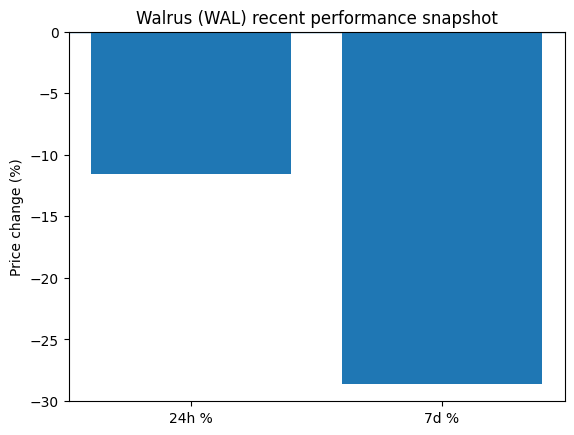

That is the qualitative story. Here is where I like to drop in the market reality, because it frames how traders are currently pricing this narrative. As of February 5, 2026, CoinGecko shows Walrus (WAL) at around $0.088 with about $12M to $14M in 24-hour volume, down roughly 11 percent on the day and about 29 percent over the last week. Binance lists similar ballpark figures for price, circulating supply, and 24-hour volume. CoinMarketCap puts its market cap at roughly $130M, with about 1.61B WAL circulating and a 5B max supply.
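These figures are easy to sanity-check: market cap is price times circulating supply, and fully diluted value (FDV) is price times max supply. A minimal sketch using only the numbers quoted above. At $0.088 and 1.61B circulating, the implied cap is about $142M, a bit above CoinMarketCap’s roughly $130M; the most likely explanation is that the trackers’ price snapshots were taken at different moments, since a $130M cap implies a price near $0.081. The function names are illustrative.

```python
def market_cap(price_usd: float, circulating_supply: float) -> float:
    """Market cap = price x circulating supply."""
    return price_usd * circulating_supply


def fully_diluted_value(price_usd: float, max_supply: float) -> float:
    """FDV = price x max supply (as if every token were already circulating)."""
    return price_usd * max_supply


if __name__ == "__main__":
    price, circulating, max_supply = 0.088, 1.61e9, 5e9  # figures quoted above
    print(f"implied market cap: ${market_cap(price, circulating) / 1e6:.1f}M")
    print(f"FDV:                ${fully_diluted_value(price, max_supply) / 1e6:.1f}M")
    print(f"circulating share:  {circulating / max_supply:.1%}")
    print(f"price implied by a $130M cap: ${130e6 / circulating:.3f}")
```

The FDV line (about $440M, with roughly 32 percent of max supply circulating) is worth keeping next to the market cap, because future unlocks are part of what the market is pricing.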

If you are trading it, that combination matters. A mid-cap valuation with meaningful daily turnover can be a feature, because it means there is enough liquidity for real participants, but it is also a warning sign, because narrative shifts can move price violently when positioning is crowded. The chart above is not a prediction; it is a quick snapshot of how the market is currently treating the story, and right now it is repricing risk fast.
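“Meaningful daily turnover” can be pinned to a number: 24-hour volume divided by market cap. With the quoted $12M to $14M volume against a roughly $130M cap, that is about 9 to 11 percent per day. The sketch below also includes a rough days-to-exit estimate; the 10 percent participation rate (how much of daily volume you can be without moving price much) and the $1M position are hypothetical assumptions, not rules.

```python
def turnover_ratio(volume_24h: float, market_cap: float) -> float:
    """Fraction of market cap that changed hands over 24 hours."""
    if market_cap <= 0:
        raise ValueError("market cap must be positive")
    return volume_24h / market_cap


def days_to_exit(position_usd: float, volume_24h: float, participation: float = 0.10) -> float:
    """Days needed to unwind if you trade at most `participation` of daily volume (assumed rate)."""
    if volume_24h <= 0 or not 0 < participation <= 1:
        raise ValueError("need positive volume and participation in (0, 1]")
    return position_usd / (volume_24h * participation)


if __name__ == "__main__":
    cap = 130e6  # CoinMarketCap figure quoted above
    for volume in (12e6, 14e6):  # CoinGecko 24-hour volume range quoted above
        print(f"${volume / 1e6:.0f}M volume -> {turnover_ratio(volume, cap):.1%} of market cap")
    # Hypothetical $1M position at the low end of the volume range.
    print(f"days to exit: {days_to_exit(1e6, 12e6):.2f}")
```

Neither number is a buy or sell signal on its own; they just make “enough liquidity” and “crowded positioning” something you can track over time instead of a feeling.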

So how do you translate the tech into a trader’s checklist without turning it into buzzword soup?

First, watch whether the retention problem is actually being solved in the wild. This is not about tweets. It is about whether builders keep using it after the first hype cycle, when the first outages happen, when the first cost overruns show up, and when the first “why is retrieval slow today” complaint hits production. Decentralized storage has plenty of graveyards because early adoption is easy and long term reliability is hard. Anyone who has touched IPFS or Filecoin knows that the hard part is not uploading, it is building a product that feels boringly dependable after users pile in.
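The same day-one, day-seven, day-thirty framing from the start of this piece applies to builders. A minimal sketch, assuming you can observe which days each app still writes or reads blobs: classic “day-N” retention counts a builder as retained only if it was active exactly N days after its first activity. The app names and activity data are hypothetical.

```python
from datetime import date, timedelta
from typing import Dict, Set


def day_n_retention(first_seen: Dict[str, date], active_days: Dict[str, Set[date]], n: int) -> float:
    """Share of the cohort that was active exactly n days after its first activity."""
    if not first_seen:
        return 0.0
    retained = sum(
        1 for builder, start in first_seen.items()
        if start + timedelta(days=n) in active_days.get(builder, set())
    )
    return retained / len(first_seen)


if __name__ == "__main__":
    start = date(2026, 1, 1)
    # Hypothetical cohort: the days on which each app still touched its blobs.
    first_seen = {app: start for app in ("app_a", "app_b", "app_c", "app_d")}
    active_days = {
        "app_a": {start + timedelta(days=d) for d in (0, 1, 7, 30)},
        "app_b": {start + timedelta(days=d) for d in (0, 1, 7)},
        "app_c": {start + timedelta(days=d) for d in (0, 1)},
        "app_d": {start},
    }
    for n in (1, 7, 30):
        print(f"D{n} retention: {day_n_retention(first_seen, active_days, n):.0%}")
```

The curve, not any single point, is the signal: a D30 number that holds up across successive cohorts is what “still using it after the hype cycle” looks like in data.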

Second, keep an eye on whether Walrus’s economics reward the behavior you want. Erasure coding can reduce raw replication overhead, but real networks still need incentives that keep operators online and responsive, and pricing that makes sense for teams who are comparing it to centralized object storage. If Walrus can make “decentralized” feel like a normal infrastructure decision rather than a philosophical one, that is when it becomes sticky.
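The overhead point can be made with simple arithmetic. A sketch comparing the “copy it ten times” baseline with the roughly 4.5x factor Walrus cites: lower overhead means the network stores fewer raw bytes per user byte, so operators can bear a higher cost per raw GB at the same user-facing price. Prices here are in an abstract unit on purpose; this ignores bandwidth, incentives, margins, and retrieval costs, and the function names are hypothetical.

```python
def raw_storage(logical_gb: float, overhead: float) -> float:
    """Physical GB the network must hold for logical_gb of user data."""
    return logical_gb * overhead


def max_operator_cost(target_price_per_logical_gb: float, overhead: float) -> float:
    """Highest cost per raw GB operators can bear while users pay the target price."""
    return target_price_per_logical_gb / overhead


if __name__ == "__main__":
    logical = 1_000.0  # 1 TB of user data
    for label, overhead in (("10x full replication", 10.0), ("~4.5x erasure coding", 4.5)):
        print(
            f"{label}: {raw_storage(logical, overhead):,.0f} GB raw, "
            f"operator cost ceiling {max_operator_cost(1.0, overhead):.3f} per raw GB "
            "for a price of 1.0 per logical GB"
        )
```

Going from 10x to 4.5x cuts raw storage by 55 percent, which more than doubles the headroom operators have. Whether that headroom actually translates into prices teams will choose over centralized object storage is the open question.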

Third, separate “AI needs storage” from “this storage will capture AI spend.” That gap is where most narratives break. The bull case is straightforward: more AI apps means more critical data blobs, and teams will pay for reliable retrieval the same way they pay for uptime anywhere else. The bear case is also straightforward: centralized incumbents keep winning because they are predictable, and decentralized storage keeps getting treated as optional.

My 2026 vision, if Walrus executes, is that storage becomes part of the composable stack in a way that is invisible to end users. AI agents will write data, reference it later, and prove its integrity when needed, and teams will stop worrying about whether content will still be there tomorrow. The winning storage layer is the one that makes retention easier, not the one that makes a whitepaper look smart.
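“Reference it later, prove integrity when needed” has a simple core pattern: keep a cryptographic digest of what you wrote, and refuse to trust anything that no longer matches it. The sketch below uses an in-memory dictionary as a hypothetical stand-in for a blob store; it is not the Walrus API, and Walrus has its own way of identifying and certifying blobs that this does not model.

```python
import hashlib
from typing import Dict


class ToyBlobStore:
    """Hypothetical in-memory blob store keyed by SHA-256 digest (not the Walrus API)."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        """Store data and return its hex digest, which doubles as the reference."""
        ref = hashlib.sha256(data).hexdigest()
        self._blobs[ref] = data
        return ref

    def get(self, ref: str) -> bytes:
        """Fetch data and refuse to return it unless it still hashes to ref."""
        data = self._blobs[ref]
        if hashlib.sha256(data).hexdigest() != ref:
            raise ValueError("integrity check failed: content does not match its reference")
        return data


if __name__ == "__main__":
    store = ToyBlobStore()
    ref = store.put(b"agent memory: step 1")  # the agent keeps only this reference
    print(store.get(ref))                       # verified round trip

    store._blobs[ref] = b"tampered"             # simulate silent corruption (demo only)
    try:
        store.get(ref)
    except ValueError as err:
        print(err)
```

The design choice is that the reference and the check are the same thing: an agent that holds only the digest can detect corruption on its own, without trusting whoever served the bytes.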

If you are approaching this as a trader or investor, do not just stare at price. Read the technical material, then pressure-test it with the only question that really matters: are builders sticking around when the honeymoon ends? Track usage signals, ecosystem integrations, and whether retrieval reliability becomes a reason people stay, not a reason they leave. Size positions like a grown-up, keep a thesis you can actually falsify, and revisit it when the data changes.