The first time I watched Fogo do its thing, the setup was almost boring. A team had a program they were sure was “parallel enough.” They weren’t naïve. They knew the SVM execution model only runs transactions side by side when neither one writes to an account the other touches. Still, they assumed they’d done the obvious work: split state, avoided known bottlenecks, kept the hot paths lean.

Then they turned the dial.

At low load, everything looked clean. Under heavier traffic, it started lying to them—quietly at first. Throughput climbed, then hit a ceiling that didn’t match what the hardware should have been able to do. Latency didn’t drift up gradually; it stabbed upward in sudden spikes and then fell back down like nothing happened. The program didn’t crash. It just behaved like a single-lane road pretending to be a highway.

That’s where Fogo’s personality shows up, and it’s not about the block-time number people like to quote. It’s about what very short blocks and a ruthlessly performance-oriented validator stack do to your excuses. On slower networks, you can hide a lot of bad habits behind “congestion.” Users feel friction, sure, but it’s hard to tell whether the chain is saturated or the app is poorly designed. Blame gets smeared across the whole system, which is convenient for everyone involved.

On a network built to minimize latency, the fog lifts fast. The system becomes annoyingly honest. If your app can’t stay parallel, you see it immediately, and you can usually point at the exact place where parallelism dies: a writable account too many transactions are forced to touch.
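That pointing can be done mechanically. Here is a minimal Python sketch, assuming you can pull each transaction’s declared writable accounts out of whatever tooling you use. The input shape, the `hot_writable_accounts` helper and its 50% threshold are hypothetical. It counts how often each writable account is locked and flags the ones most of the traffic has to queue for.

```python
from collections import Counter


def hot_writable_accounts(write_sets, threshold=0.5):
    """Flag writable accounts that a large share of transactions must lock.

    `write_sets` holds one set of writable account keys per transaction
    (a hypothetical export format). Returns (account, count) pairs for
    accounts written by at least `threshold` of all transactions, most
    contended first.
    """
    counts = Counter(acct for writes in write_sets for acct in writes)
    cutoff = threshold * len(write_sets)
    return [(acct, n) for acct, n in counts.most_common() if n >= cutoff]


# Hypothetical sample: eight swaps, each writing its own user account
# plus one shared config account.
sample = [{"global_config", f"user_{i}"} for i in range(8)]
print(hot_writable_accounts(sample))  # [('global_config', 8)]
```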

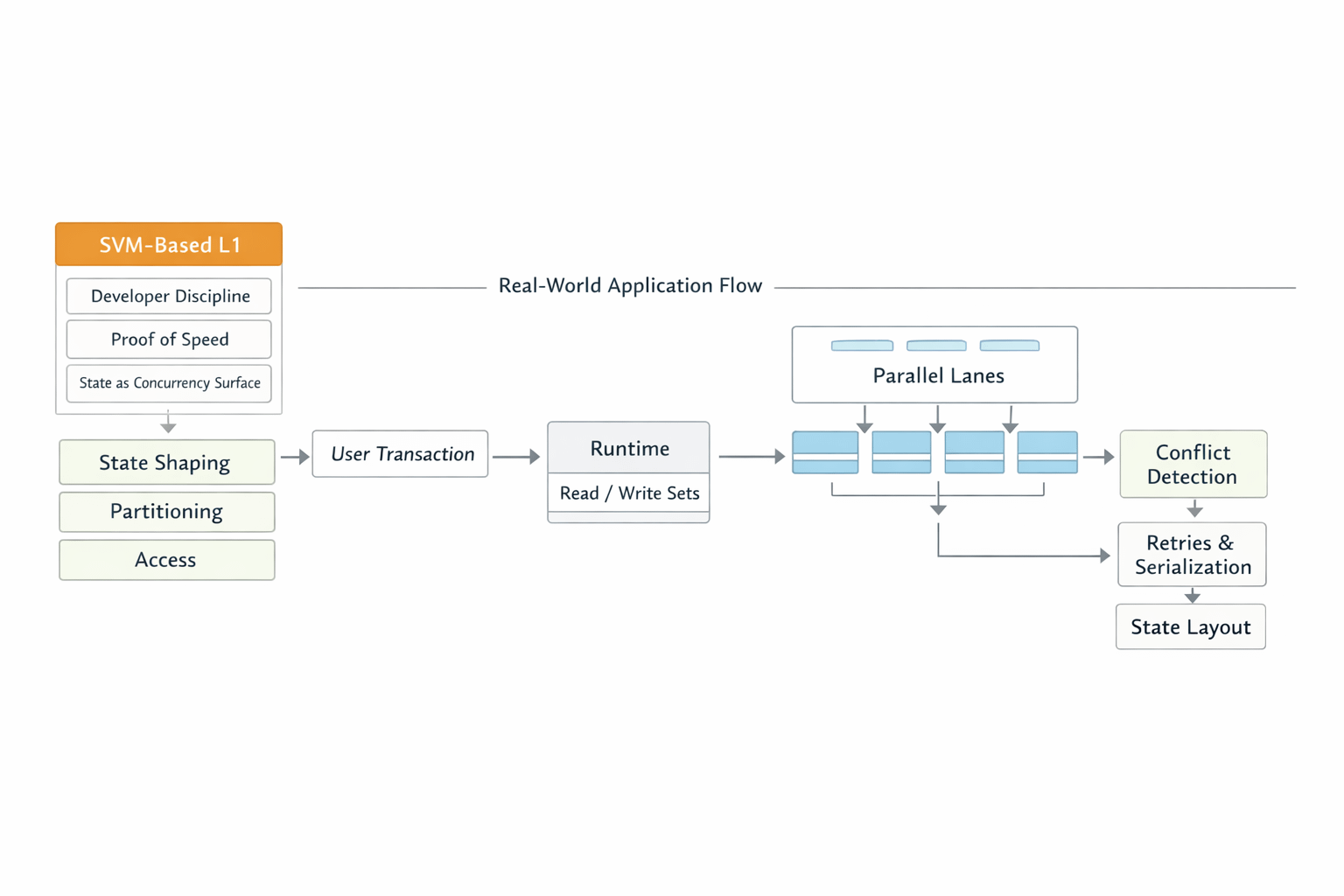

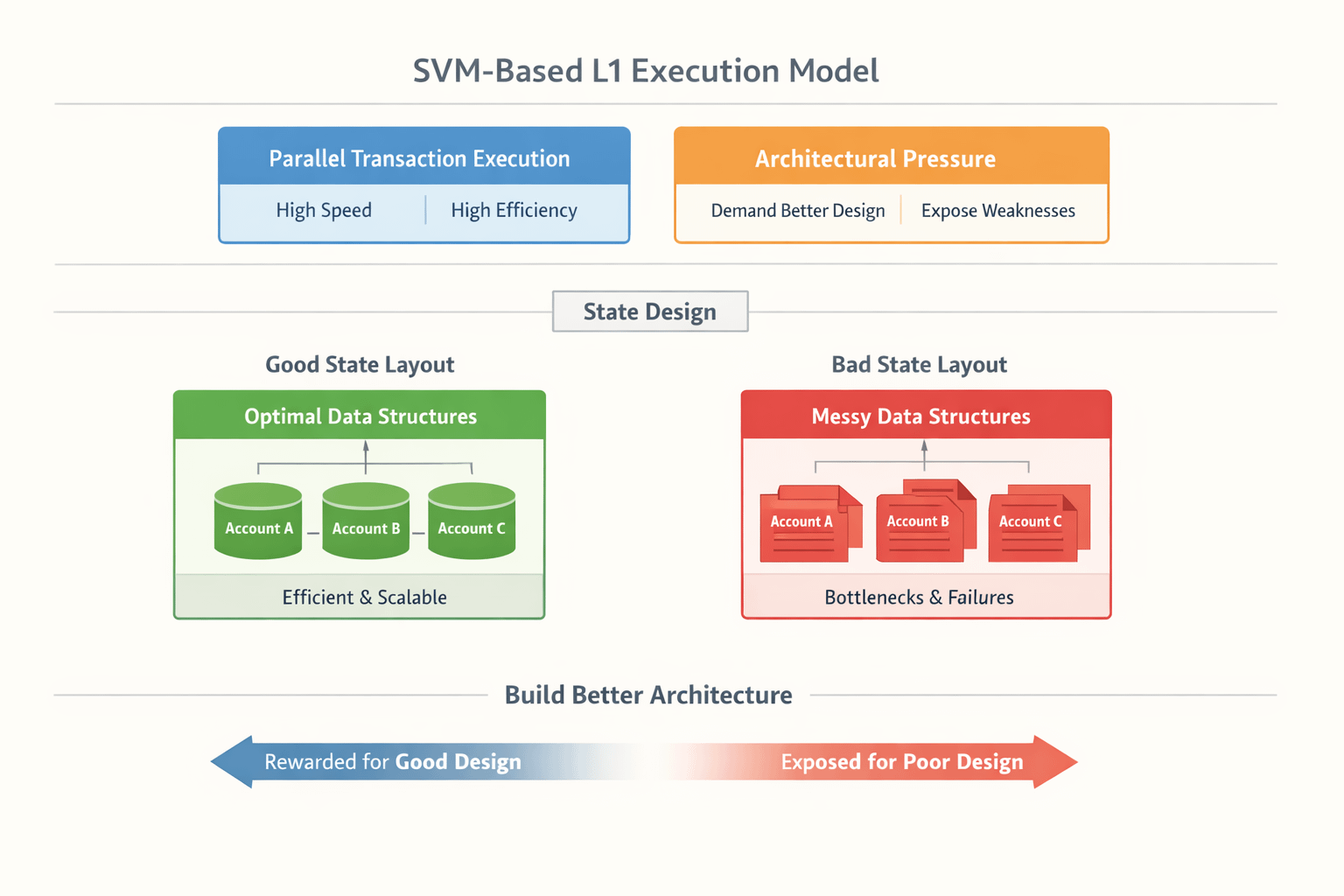

This sounds like a small detail until you’ve lived inside it. Parallel execution isn’t something you “have.” It’s something you keep, transaction after transaction, by not making unrelated actions collide over the same mutable state. The runtime is basically saying: “Tell me what you will touch. If you want to write something someone else is touching, you’re going to wait your turn.”
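The runtime’s side of that bargain fits in a single predicate. Below is a minimal Python sketch of the account-locking rule, assuming a simplified model in which each transaction is nothing more than its declared writable and read-only accounts (the account names are hypothetical). Two transactions conflict when either one writes something the other touches; accounts that both only read can be shared.

```python
def conflicts(a, b):
    """Return True if two transactions cannot run side by side.

    Each transaction is a dict with 'writes' and 'reads' sets of account
    keys, a simplified model of SVM account locking. A write lock is
    exclusive; read-only access to the same account is shared.
    """
    a_touch = a["writes"] | a["reads"]
    b_touch = b["writes"] | b["reads"]
    return bool(a["writes"] & b_touch) or bool(b["writes"] & a_touch)


swap_1 = {"writes": {"pool_A", "alice"}, "reads": {"global_config"}}
swap_2 = {"writes": {"pool_B", "bob"}, "reads": {"global_config"}}
swap_3 = {"writes": {"pool_B", "carol"}, "reads": {"global_config"}}

print(conflicts(swap_1, swap_2))  # False: the config account is shared, but only read
print(conflicts(swap_2, swap_3))  # True: both write pool_B
```

Under this rule, a config account that every swap only reads costs nothing in parallelism. It becomes a queue the moment each swap marks it writable.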

A lot of teams lose that bargain for reasons that are completely understandable. Centralizing state feels responsible. It’s tidy. It makes invariants easy to reason about. One global config account. One counter. One accumulator. One place where you know the truth lives.

And then traffic arrives, and your one place for the truth becomes the one place everyone has to line up to edit.

You see the same shape across totally different apps. A trading program that insists on touching a global config account on every swap because it’s safer. A lending market that updates the same interest accumulator every time someone borrows, repays, or liquidates, because exactness feels comforting. A game that increments a global match ID counter whenever anyone creates a room, because it’s the simplest way to guarantee uniqueness.

None of these choices scream bad engineering in a code review. They’re the kinds of shortcuts smart teams make when they’re trying to ship. The problem is that SVM-style concurrency doesn’t forgive shortcuts that concentrate writes. If two transactions need the same writable account, they don’t run together. Doesn’t matter how many cores validators have. Doesn’t matter how fast the client is. Doesn’t matter how short the block is. You’ve created a choke point and you’re now paying rent on it.
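To see why core count doesn’t help, here is a toy model in Python. It is a deliberately simplified greedy scheduler, not how any SVM validator actually schedules work (real schedulers also deal with read locks, compute limits and fee ordering), and names like `match_counter` are hypothetical. It counts how many sequential rounds a batch needs when every transaction also writes one shared counter, versus when each writes only its own account.

```python
def count_rounds(write_sets, cores):
    """Toy greedy scheduler: pack transactions into sequential rounds.

    Each transaction is modeled only by its set of writable accounts.
    A transaction joins the current round if a core is free and none of
    its accounts is already write-locked in that round; otherwise it
    waits for a later round. Returns how many rounds the batch needs.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    pending, rounds = list(write_sets), 0
    while pending:
        locked, used, waiting = set(), 0, []
        for writes in pending:
            if used < cores and not (writes & locked):
                locked |= writes
                used += 1
            else:
                waiting.append(writes)
        pending, rounds = waiting, rounds + 1
    return rounds


# Hypothetical batches of 64 "create room" transactions.
n = 64
global_counter = [{"match_counter", f"player_{i}"} for i in range(n)]
independent = [{f"player_{i}"} for i in range(n)]

for cores in (4, 16, 64):
    print(cores, count_rounds(global_counter, cores), count_rounds(independent, cores))
# 4 64 16
# 16 64 4
# 64 64 1
```

The shared-counter batch needs one round per transaction at every core count, while the independent batch shrinks as cores are added. Real schedulers are far smarter than this loop, but none of them can run two writes to the same account at once.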

Fogo amplifies that rent because of how it’s built. It’s not shy about chasing low latency. Its documents talk about zones—validators organized into geographic clusters with a rotating active zone—explicitly to cut round-trip time. It leans on a Firedancer-derived client lineage to push validator performance. It also embraces a fee structure that includes priority fees, which means users can compete to get earlier ordering when things are tight.
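The latency case for zones is mostly physics. A back-of-the-envelope Python sketch, assuming signals in optical fiber travel at roughly two-thirds the speed of light (about 200 km per millisecond) and using made-up distances rather than Fogo’s real topology: messages that must cross an ocean pay a round-trip floor that no client optimization can remove, while validators clustered in one region do not.

```python
# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km):
    """Physical lower bound on round-trip time over fiber.

    Ignores routing detours, queuing and processing, so real round trips
    are always slower than this.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Illustrative distances, not Fogo's actual zone layout.
for label, km in [("same metro", 50), ("same continent", 2_000), ("intercontinental", 10_000)]:
    print(f"{label}: >= {min_round_trip_ms(km):.1f} ms")
# same metro: >= 0.5 ms
# same continent: >= 20.0 ms
# intercontinental: >= 100.0 ms
```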

Those design decisions aren’t just “infrastructure choices.” They change the emotional experience of using the chain, and they change what users blame when something feels slow.

If you build an app that forces contention, you’ve effectively created a scarce resource inside your own program: access to a handful of hot accounts. Now throw priority fees on top. Suddenly the cost of “bad state layout” isn’t just lower throughput. It’s a visible tax users pay to fight for the single lane you accidentally built.
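A toy auction makes that tax concrete. This Python sketch assumes, purely for illustration, that only a fixed number of transactions can write the hot account in each block and that higher priority fees are admitted first; real fee markets and schedulers are more involved, and the `hot_lane_auction` helper is hypothetical.

```python
def hot_lane_auction(bids, slots_per_block):
    """Toy model of priority-fee competition for one hot account.

    Hypothetical assumptions: only `slots_per_block` transactions can
    write the hot account in a block, and higher fees are admitted first.
    Returns (admitted fees, lowest admitted fee or None, number waiting).
    """
    if slots_per_block < 0:
        raise ValueError("slots_per_block must be non-negative")
    ranked = sorted(bids, reverse=True)
    admitted = ranked[:slots_per_block]
    lowest = admitted[-1] if admitted else None
    return admitted, lowest, len(ranked) - len(admitted)


bids = [5, 1, 9, 3, 7, 2]  # hypothetical priority fees, arbitrary units
print(hot_lane_auction(bids, 3))  # ([9, 7, 5], 5, 3)
print(hot_lane_auction(bids, 6))  # ([9, 7, 5, 3, 2, 1], 1, 0)
```

With three slots, anyone bidding below 5 waits for a later block; with enough capacity, everyone gets in and nobody has to outbid anybody. In this toy model, spreading writes across more accounts plays the role of adding slots.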

That’s what makes the phrase “parallel execution isn’t free” feel less like a slogan and more like a warning label. The chain can be fast, but the app can still be the thing that serializes everything. When that happens, the chain’s speed doesn’t rescue you; it just exposes you faster.

There’s another uncomfortable part people tend to skip: performance-oriented networks often end up taking a position on who gets to be a validator. Reports about Fogo have described a curated validator posture and a willingness to exclude under-provisioned nodes to keep the system tight. You can argue that’s practical: low-latency networks don’t want their median participant dragging everyone down. You can also argue it concentrates power. Both can be true. If you care about censorship-resistance or open participation, “curation” isn’t a neutral word.

What’s striking is that Fogo doesn’t look like it’s trying to dodge those trade-offs with vague rhetoric. Its more formal materials describe the token and the system in almost sterile terms: pay for execution, compensate validators, stake to secure the network, inflation that trends down over time, and explicit disclaimers about what token holders do not get (no ownership, no profit claim). It reads like a document written by someone who expects regulators and skeptics to be in the room.

The consequence of that framing is simple: the token’s story is tied to the network being used for execution and staking. Not vibes. Not identity. Not “community.” Execution. If Fogo’s edge is latency, then it needs applications that genuinely care about latency. And those applications, by definition, will care about the ugly details—tail latency, contention, and whether the system stays parallel under real user behavior.

That brings you right back to state layout, because state layout decides whether teams can actually extract value from a low-latency SVM chain. It’s the part nobody can hand-wave once the chain is fast enough.
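As one illustration of what a different layout can look like, here is a Python sketch for the game example above: instead of a global match counter, each creator keeps a private nonce and room IDs are derived from (creator, nonce). This is a common pattern, not something Fogo or the original team prescribes; the `Creator` class and account names are hypothetical, and on an SVM chain the room would more plausibly live at a program-derived address seeded in a similar way.

```python
import hashlib


class Creator:
    """Per-creator state: a private nonce replaces the global match counter
    (a hypothetical layout, for illustration only)."""

    def __init__(self, key: str):
        self.key = key
        self.nonce = 0

    def create_room(self):
        """Return (room_id, writable_accounts) for a new room.

        The ID hashes (creator key, nonce). Uniqueness rests on each creator
        never reusing a nonce plus SHA-256 collision resistance, so no shared
        account has to be written.
        """
        seed = f"room:{self.key}:{self.nonce}".encode()
        room_id = hashlib.sha256(seed).hexdigest()
        self.nonce += 1
        writes = {f"creator:{self.key}", f"room:{room_id}"}
        return room_id, writes


alice, bob = Creator("alice"), Creator("bob")
id_a, writes_a = alice.create_room()
id_b, writes_b = bob.create_room()
print(id_a != id_b)         # True: distinct IDs without a shared counter
print(writes_a & writes_b)  # set(): the two creations can run side by side
```

Two rooms from the same creator still both write that creator’s account and queue behind each other, which is usually acceptable. What disappears is the line every player shared. The trade-off is that IDs are no longer a dense, globally ordered sequence.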

I keep thinking about that original stress test, because it wasn’t dramatic. It was almost mundane. A graph that looked wrong. A program that refused to scale the way its authors expected. And then the slow realization that it wasn’t the network at all—it was a few accounts that everyone had to touch, over and over, because the program’s internal bookkeeping demanded it.

That’s the real story hiding inside Fogo. Not that it’s fast. Plenty of projects chase speed. The story is that it turns speed into a kind of lie detector. If your app is structured in a way that collapses parallelism, you find out immediately. Not months later, after the architecture is fossilized. Not after your users have learned to tolerate a worse experience. Immediately, while the problem is still sitting there in plain sight: a bad state layout making your parallel runtime behave like a single thread.