Most builders say they want speed.

Very few are ready for what speed actually demands.

That’s why I keep coming back to Fogo — not because it’s “fast,” but because it quietly removes the excuses developers hide behind.

When you build on a runtime based on the SVM (Solana Virtual Machine), you don’t just inherit parallel execution.

You inherit responsibility.

And responsibility is uncomfortable.

Here’s the part people gloss over:

Parallel execution is not a feature you toggle on.

It’s a property you earn.

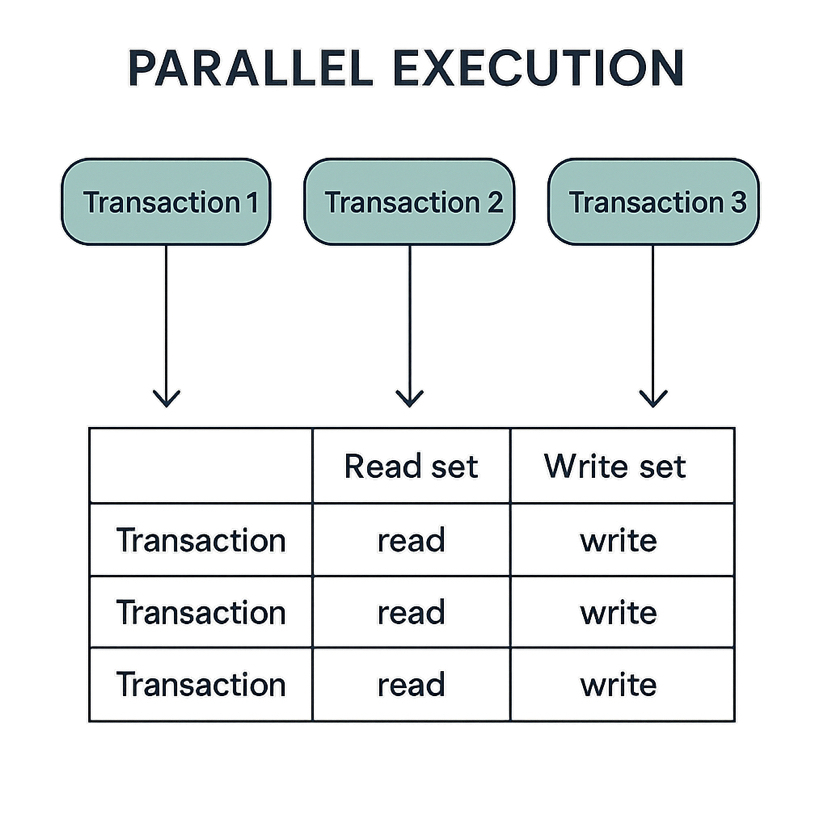

On the SVM, every transaction declares up front which accounts it reads and which accounts it writes. The runtime can only run two transactions in parallel when neither one writes an account the other touches.

That means performance is no longer abstract.

It’s architectural.

And if your state layout is lazy, the chain will expose it instantly.
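Here is a minimal Python sketch of that scheduling rule. It assumes a transaction can be reduced to the set of accounts it reads and the set it writes. `Tx` and `conflicts` are hypothetical names for illustration, not part of any SVM SDK. The rule also covers reads: a write conflicts with any other transaction that reads or writes the same account, and only shared reads stay parallel.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Tx:
    """A transaction as a scheduler sees it: only the accounts it declares (hypothetical model)."""
    name: str
    reads: frozenset = field(default_factory=frozenset)
    writes: frozenset = field(default_factory=frozenset)


def conflicts(a: Tx, b: Tx) -> bool:
    """True if a and b cannot safely run at the same time.

    Read/read overlap is harmless. A write collides with any other
    transaction that reads or writes the same account.
    """
    return bool(a.writes & (b.reads | b.writes) or b.writes & (a.reads | a.writes))


alice = Tx("alice_swap", reads=frozenset({"price_feed"}), writes=frozenset({"alice_vault"}))
bob = Tx("bob_swap", reads=frozenset({"price_feed"}), writes=frozenset({"bob_vault"}))
oracle = Tx("oracle_update", writes=frozenset({"price_feed"}))

print(conflicts(alice, bob))     # False: they only share a read
print(conflicts(alice, oracle))  # True: the oracle writes what alice reads
```

Alice and Bob can run side by side because they only share a read. The oracle update cannot, because it writes an account both of them read.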

I’ve noticed something predictable.

Developers coming from sequential environments carry a mental model that feels safe: centralize state, mutate a core object, update global counters, keep everything “clean.”

It feels organized.

It also destroys concurrency.

Because the moment every user action touches the same writable account, you’ve effectively recreated a single-threaded system — just inside a parallel runtime.

And here’s the uncomfortable truth:

The chain doesn’t owe you parallelism.

If you design collisions, you get serialization.
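A toy scheduler makes the cost visible. This is a hypothetical greedy packer, not how any SVM validator actually schedules work. It assumes transactions in the same batch run concurrently, batches run one after another, and each transaction is a `(reads, writes)` pair of disjoint account sets.

```python
def schedule(txs):
    """Place each tx (in arrival order) in the earliest batch that respects conflicts.

    A tx must land after every earlier batch that writes an account it touches,
    and, if it writes an account, after every earlier batch that reads it.
    """
    last_write = {}  # account -> latest batch index that writes it
    last_read = {}   # account -> latest batch index that reads it
    batches = []
    for reads, writes in txs:
        after = -1
        for acct in reads | writes:
            after = max(after, last_write.get(acct, -1))  # wait for earlier writers
        for acct in writes:
            after = max(after, last_read.get(acct, -1))   # writers also wait for earlier readers
        slot = after + 1
        if slot == len(batches):
            batches.append([])
        batches[slot].append((reads, writes))
        for acct in reads:
            last_read[acct] = max(last_read.get(acct, -1), slot)
        for acct in writes:
            last_write[acct] = max(last_write.get(acct, -1), slot)
    return batches


users = [f"user{i}" for i in range(8)]
# Every deposit also bumps one shared global counter.
hot = [(set(), {f"{u}_vault", "global_stats"}) for u in users]
# The same deposits, each touching only its own vault.
isolated = [(set(), {f"{u}_vault"}) for u in users]

print(len(schedule(hot)))       # 8: fully serialized
print(len(schedule(isolated)))  # 1: all eight run side by side
```

Same eight users, same amount of work. The only difference is one shared writable account, and it turns one parallel step into eight sequential ones.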

What makes Fogo interesting isn’t that it’s fast.

It’s that it punishes bad design faster.

If blocks are genuinely low latency and the runtime can process independent work simultaneously, then the bottleneck becomes your architecture — not the chain.

That’s a psychological shift.

It means you can’t blame congestion.

You can’t blame validators.

You can’t blame block times.

You have to blame your layout.

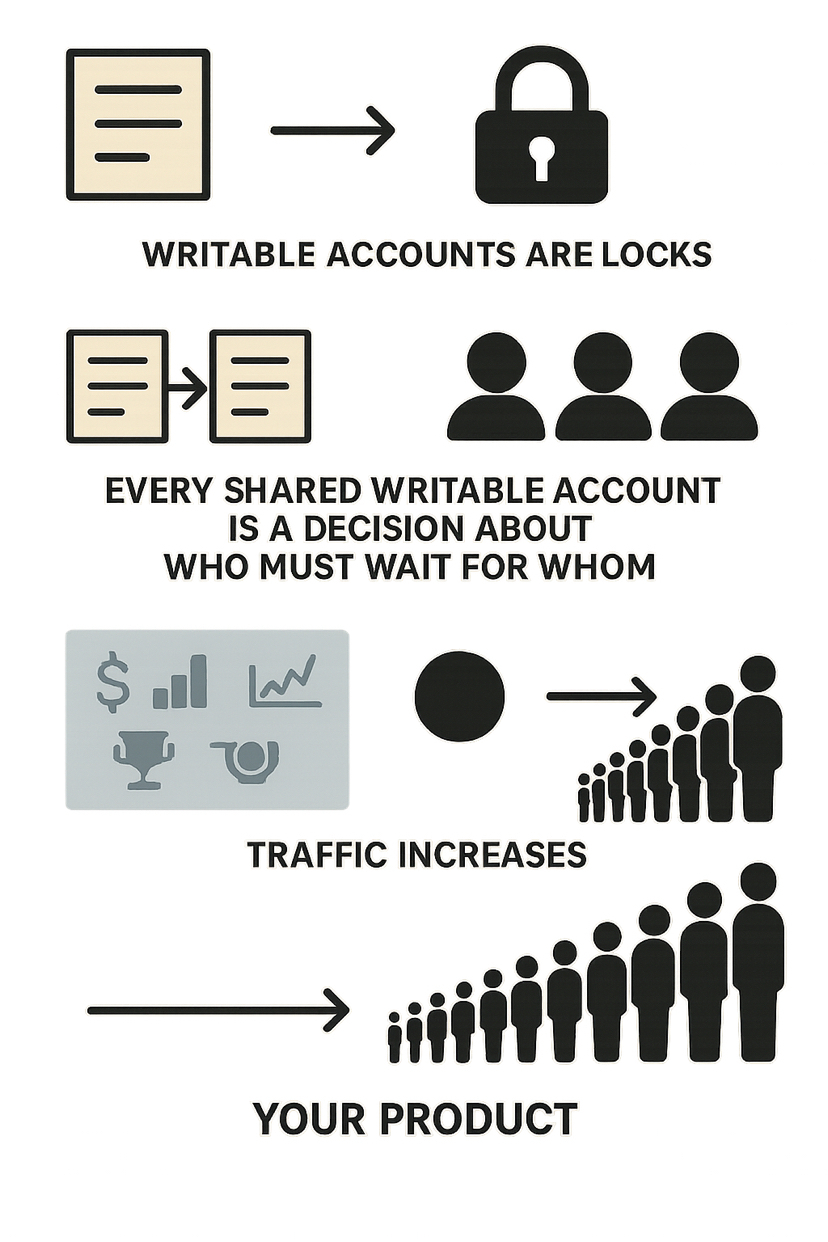

Writable accounts are not just storage.

They are locks.

Every shared writable account is a decision about who must wait for whom.

If you hide everything behind one global object — fees, metrics, volume, leaderboards, protocol stats — you’re declaring that every user must stand in the same line.

And when traffic increases, that line becomes your product.

Not your runtime.

Your product.
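The “lock” framing is close to literal. Below is a minimal model of a per-account lock table, assuming the usual reader/writer rule (many readers or one writer per account) and a transaction that takes every lock it needs or none of them. `AccountLocks`, `try_lock`, and `unlock` are hypothetical names, not a validator API, and the sketch assumes a transaction never lists the same account as both read and write.

```python
from collections import Counter


class AccountLocks:
    """Hypothetical lock table: per account, many readers OR one writer.

    Assumes each transaction's read and write sets are disjoint.
    """

    def __init__(self):
        self.readers = Counter()  # account -> number of read locks held
        self.writers = set()      # accounts currently write-locked

    def try_lock(self, reads, writes):
        """Take every lock the transaction needs, or none of them."""
        if any(acct in self.writers or self.readers[acct] > 0 for acct in writes):
            return False  # someone is already reading or writing it
        if any(acct in self.writers for acct in reads):
            return False  # someone is writing what we want to read
        self.writers.update(writes)
        self.readers.update(reads)
        return True

    def unlock(self, reads, writes):
        """Release the locks taken by a successful try_lock."""
        self.writers.difference_update(writes)
        self.readers.subtract(reads)


locks = AccountLocks()
first = locks.try_lock(reads={"config"}, writes={"alice", "global_stats"})
second = locks.try_lock(reads={"config"}, writes={"bob", "global_stats"})
third = locks.try_lock(reads={"config"}, writes={"carol"})
print(first, second, third)  # True False True: only the global_stats writer waits
```

In this model, Bob waits only because his transaction also writes `global_stats`. His own account never overlaps with Alice’s.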

This becomes brutally obvious in trading systems.

People love to brag about “high-frequency capability” on fast chains.

But if your order flow mutates one central orderbook state every time, you’ve built a congestion machine.

It doesn’t matter how fast Fogo executes.

You forced serialization at the contract layer.

The result?

Contention becomes the dominant force — not strategy.

And markets shaped by contention behave differently than markets shaped by design.
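Here is a rough way to quantify that, under simplifying assumptions: each order writes its trader’s balance plus one orderbook account and nothing else. Real matching engines touch more state. The `min_serial_steps` helper and the market names are hypothetical. It returns a lower bound on sequential steps, because every transaction that writes the same account has to wait its turn.

```python
from collections import Counter


def min_serial_steps(write_sets):
    """Lower bound on sequential steps, set by the busiest writable account.

    Every transaction that writes the same account must wait its turn, so no
    scheduler can finish in fewer steps than that account's queue length.
    """
    load = Counter(acct for writes in write_sets for acct in writes)
    return max(load.values(), default=0)


orders = [("alice", "SOL-USDC"), ("bob", "BTC-USDC"), ("carol", "ETH-USDC"),
          ("dave", "SOL-USDC"), ("erin", "BTC-USDC"), ("frank", "ETH-USDC")]

# Layout 1: one central orderbook account shared by every market.
central = [{"orderbook", f"{trader}_balance"} for trader, _ in orders]
# Layout 2: one orderbook account per market.
per_market = [{f"book:{market}", f"{trader}_balance"} for trader, market in orders]

print(min_serial_steps(central))     # 6: every order queues on "orderbook"
print(min_serial_steps(per_market))  # 2: only same-market orders queue together
```

With the central layout, six orders need at least six sequential steps no matter how fast the runtime is. Splitting the book per market drops that floor to the length of the busiest market’s queue.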

The better architectures feel stricter.

Per-user state isolation.

Per-market partitioning.

Separation of correctness state from reporting state.

That last one is where most systems quietly sabotage themselves.

Builders love global truth:

Global volume.

Global counters.

Global activity metrics.

So they update those inside every transaction.

Which means every transaction now writes to the same shared account.

Congratulations — you just collapsed parallelism.

Parallel-friendly systems treat reporting differently.

They derive metrics.

They shard aggregates.

They update summaries asynchronously.

They keep shared writes narrow and intentional.

It’s not glamorous.

But it’s the difference between an application that scales and one that “mysteriously slows down.”

There is no mystery.

There is only collision.
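Here is a minimal sketch of sharded aggregates. It assumes a global volume metric that only has to be correct when someone reads it, not inside every trade. `NUM_SHARDS`, `shard_for`, `record_trade`, `total_volume`, and the account names are hypothetical. In an SVM program, each shard would presumably be its own account, and the sum would be computed by an off-chain indexer or an occasional separate transaction.

```python
import zlib

NUM_SHARDS = 4  # hypothetical; more shards means fewer trades per shard account

# One counter per shard account instead of a single global_volume account.
shards = {f"volume_shard_{i}": 0 for i in range(NUM_SHARDS)}


def shard_for(user: str) -> str:
    """Map a user to a shard deterministically.

    crc32 is used instead of hash(), which is randomized per Python process.
    """
    return f"volume_shard_{zlib.crc32(user.encode()) % NUM_SHARDS}"


def record_trade(user: str, amount: int) -> set:
    """Correctness path: touch the user's account plus ONE shard.

    Returns the writable accounts this trade would declare.
    """
    shard = shard_for(user)
    shards[shard] += amount
    return {f"{user}_account", shard}


def total_volume() -> int:
    """Reporting path: derive the global figure by summing shards."""
    return sum(shards.values())


trades = [("alice", 100), ("bob", 250), ("carol", 75), ("alice", 50)]
write_sets = [record_trade(user, amount) for user, amount in trades]
print(total_volume())  # 475
```

Two users who hash to the same shard still collide, so this approach does not remove contention. It spreads that contention across `NUM_SHARDS` accounts instead of concentrating it on one.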

Here’s what I respect about Fogo’s posture:

It doesn’t mask these collisions.

It exposes them.

On slower or sequential chains, architectural flaws can hide behind baseline latency. Everything is already serialized, so bad design doesn’t look catastrophic.

On a genuinely parallel runtime, bad layout stands out immediately.

Speed amplifies weakness.

That’s why predictable, concurrent execution is expensive.

If you want transactions to execute predictably and concurrently, you must design state boundaries with discipline.

And discipline is harder than speed.

You’re managing more accounts.

More edges.

More isolation logic.

More test scenarios.

Concurrency stops being theoretical and becomes something you must reason about explicitly.

Upgrade paths become riskier.

Observability becomes mandatory.

Testing becomes real engineering instead of optimistic simulation.

That’s the cost.

But here’s the payoff:

When state is properly partitioned, independent actors truly move independently.

One hot market doesn’t stall another.

One heavy user doesn’t block everyone else.

One reporting update doesn’t freeze the core flow.

That’s when parallel execution stops being a narrative and becomes user experience.

The market loves to rank chains by TPS numbers.

I think that’s lazy.

The real question is:

How many of those transactions can proceed without colliding?

Because raw throughput without architectural discipline is just theoretical capacity.
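One hypothetical way to put a number on that question is to take a batch of transactions and count how many collide with nothing else in it. `collision_free_share` is an illustrative metric, not a standard benchmark. It assumes each transaction is a `(reads, writes)` pair of disjoint account sets, and it ignores ordering, compute limits, and everything else a real scheduler weighs.

```python
from collections import Counter


def collision_free_share(txs):
    """Fraction of transactions that conflict with no other transaction in the batch.

    Each tx is a (reads, writes) pair of disjoint account sets. A tx is
    collision-free if nobody else touches what it writes and nobody writes
    what it reads. An empty batch counts as fully collision-free.
    """
    if not txs:
        return 1.0
    touched = Counter(acct for reads, writes in txs for acct in reads | writes)
    written = Counter(acct for _, writes in txs for acct in writes)
    free = sum(
        1
        for reads, writes in txs
        if all(touched[a] == 1 for a in writes) and all(written[a] == 0 for a in reads)
    )
    return free / len(txs)


block = [
    (set(), {"alice", "global_stats"}),
    (set(), {"bob", "global_stats"}),
    (set(), {"carol"}),
    ({"price_feed"}, {"dave"}),
]
print(collision_free_share(block))  # 0.5: the two global_stats writers collide
```

Two batches can carry the same number of transactions and score very differently on this metric. That gap is exactly what a raw TPS figure hides.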

Fogo forces the harder conversation.

It makes performance a design problem, not just a runtime claim.

And that’s why I find it more interesting than most “fast chain” discussions.

Not because it promises speed.

But because it demands you deserve it.