Every time you start talking to an AI assistant, it is like meeting a stranger who has never seen you before. The assistant does not remember that last week you were working on a payments integration. It does not know which programming language you prefer, how your project is set up, or that you spent a lot of time working out your brand voice. You have to explain everything again, every single time. This amnesia is one of the most frustrating problems with AI today.

Traditional AI systems try to solve this problem with something called "session memory": a patchwork of conversation logs, database lookups and context windows. It works to some extent, but it has a lot of limitations. As AI becomes more important, these limitations start to feel like a wall.

How AI Systems Handle Memory

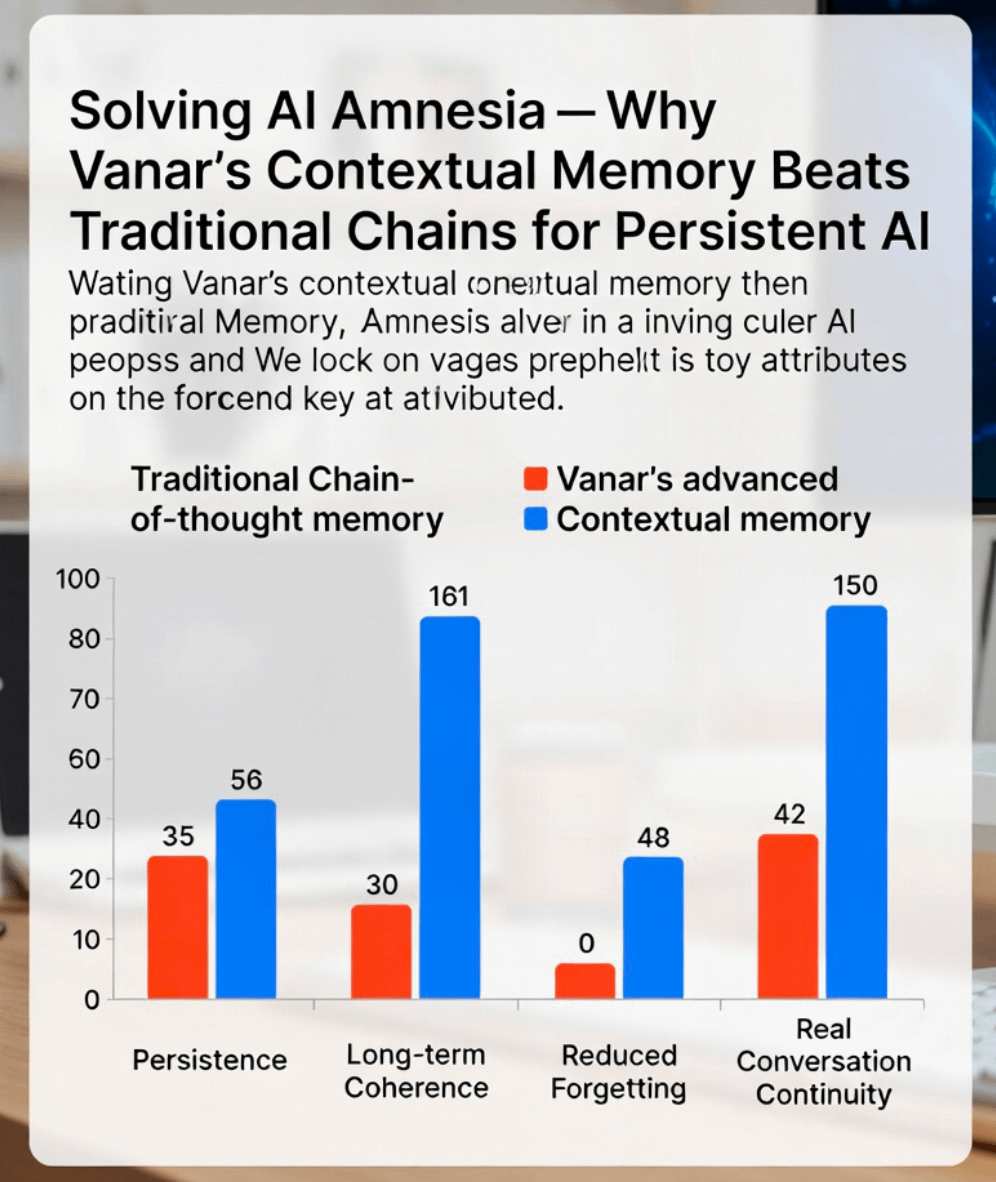

Traditional AI systems store context in one of three ways: they put prior exchanges into the prompt, query a database, or condense long conversations into short summaries. Each approach has its problems. If you put too much information into the prompt, it gets too long and the model loses track of what matters. Querying a database can be slow and noisy. Summarizing is lossy: once a conversation is summarized, the details are gone.
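To make the three strategies concrete, here is a minimal Python sketch. It is illustrative only: the function names (`stuff_prompt`, `lookup`, `summarize`) and the sample history are hypothetical, the retrieval is a naive word-overlap match, and the "summary" is plain truncation standing in for a real summarizer.

```python
from typing import List


def stuff_prompt(history: List[str], question: str) -> str:
    """Strategy 1: paste every prior exchange ahead of the new question."""
    return "\n".join(history + [question])


def lookup(store: List[str], question: str, k: int = 2) -> List[str]:
    """Strategy 2: naive retrieval, ranking stored lines by shared words."""
    q_words = set(question.lower().split())
    scored = [(len(q_words & set(doc.lower().split())), doc) for doc in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep the top k lines that share at least one word with the question.
    return [doc for score, doc in scored[:k] if score > 0]


def summarize(history: List[str], max_words: int = 8) -> str:
    """Strategy 3: lossy compression (here, simple truncation by word count)."""
    words = " ".join(history).split()
    return " ".join(words[:max_words])


# Hypothetical conversation history used only for this illustration.
history = [
    "User prefers Python for backend services.",
    "User is integrating a payments API and hit a webhook signature bug.",
    "Brand voice should be friendly but concise.",
]

prompt = stuff_prompt(history, "What was the payments webhook bug?")
hits = lookup(history, "What was the payments webhook bug?")
summary = summarize(history)
```

The prompt grows with every exchange, the lookup only finds lines that happen to share words with the question, and the summary no longer mentions the webhook bug at all.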

The main issue is that traditional AI systems treat memory as an add-on, not as a core part of the system. Every retrieval is a gamble. Every time you start a new session, the system may have forgotten what you talked about before. This is not good enough for applications where AI agents need to understand a user or a team over a long period of time.

The Vanar Approach: Memory as a Fundamental Part

Vanar's approach to memory is different. Instead of asking how to give a stateless model access to past data, Vanar asks how to build a system where context is continuously maintained and structured. This may sound like a subtle difference, but it has significant engineering consequences.

Vanar uses a memory graph: a dynamic, structured representation of everything the system has learned about a user or a project. This is not a log or a database but a relational, evolving knowledge structure that the AI can reason over. When you return to a session, Vanar does not perform a keyword search over your history. It reconstructs your context from a living model of who you are and what you have been working on.
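As a rough illustration of the general idea of a memory graph (not Vanar's actual implementation or API, which the article does not describe), the sketch below stores facts as subject-relation-object edges and rebuilds context by walking outward from an entity. The `MemoryGraph` class, its methods, and the sample facts are all hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, List, Tuple


class MemoryGraph:
    """Toy knowledge graph: facts are (subject, relation, object) edges."""

    def __init__(self) -> None:
        self._out: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add_fact(self, subject: str, relation: str, obj: str) -> None:
        edge = (relation, obj)
        if edge not in self._out[subject]:  # avoid duplicate facts
            self._out[subject].append(edge)

    def context_for(self, entity: str, max_hops: int = 2) -> List[str]:
        """Breadth-first walk collecting facts within max_hops of entity."""
        seen = {entity}
        queue = deque([(entity, 0)])
        facts: List[str] = []
        while queue:
            node, hops = queue.popleft()
            if hops >= max_hops:
                continue
            for relation, obj in self._out.get(node, []):
                facts.append(f"{node} {relation} {obj}")
                if obj not in seen:
                    seen.add(obj)
                    queue.append((obj, hops + 1))
        return facts


# Hypothetical facts, for illustration only.
g = MemoryGraph()
g.add_fact("alice", "works_on", "payments-integration")
g.add_fact("alice", "prefers", "Python")
g.add_fact("payments-integration", "blocked_by", "webhook-signature-bug")
g.add_fact("webhook-signature-bug", "fixed_in", "hotfix-branch")

context = g.context_for("alice")
```

Here context is recovered by following relationships rather than by matching keywords: asking about `alice` also surfaces the bug blocking her project, even though that fact never mentions her by name.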

Why This Matters for AI Applications

The implications of Vanar's approach are significant. Traditional systems struggle with long-term task execution for AI agents. An agent running a multi-day research project or managing a customer relationship cannot afford to forget its prior decisions. Vanar's memory graph lets agents track goals, sub-goals, dependencies and outcomes persistently.

For developer tooling, Vanar's approach means that an AI coding assistant can genuinely get to know your codebase over time. It does not re-read your files every session. It has internalized the architecture, your conventions, and your past bugs and fixes.

For enterprise deployments, the benefits are just as significant. Because Vanar's memory is inspectable, organizations can see exactly what the AI knows about a user or process, and then update it, correct it or remove it.
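What "inspectable" memory could look like in practice is sketched below. This is an assumption-laden toy, not Vanar's interface: the `InspectableMemory` class, its method names, and the sample records are all hypothetical. The point is only that each stored fact carries its source and that every change leaves an audit entry.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MemoryRecord:
    key: str
    value: str
    source: str  # where the system learned this fact


class InspectableMemory:
    """Toy memory store that can be listed, corrected and pruned."""

    def __init__(self) -> None:
        self._records: Dict[str, MemoryRecord] = {}
        self.audit_log: List[str] = []

    def remember(self, key: str, value: str, source: str) -> None:
        self._records[key] = MemoryRecord(key, value, source)
        self.audit_log.append(f"set {key}")

    def inspect(self) -> List[MemoryRecord]:
        """Return everything the system currently knows, sorted by key."""
        return sorted(self._records.values(), key=lambda r: r.key)

    def correct(self, key: str, value: str, source: str = "manual correction") -> bool:
        if key not in self._records:
            return False
        self._records[key] = MemoryRecord(key, value, source)
        self.audit_log.append(f"corrected {key}")
        return True

    def forget(self, key: str) -> bool:
        removed = self._records.pop(key, None) is not None
        if removed:
            self.audit_log.append(f"forgot {key}")
        return removed


# Hypothetical records, for illustration only.
memory = InspectableMemory()
memory.remember("preferred_language", "Java", source="onboarding chat")
memory.remember("brand_voice", "friendly, concise", source="style guide")
memory.correct("preferred_language", "Python")
memory.forget("brand_voice")
```

After these calls, `memory.inspect()` shows a single record whose value is `Python` and whose source is `manual correction`, and `memory.audit_log` records each change in order.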

The Efficiency Advantage

There is also an efficiency argument. Traditional retrieval approaches often pad context windows aggressively to avoid missing information. This is expensive, slow and noisy. Vanar's structured memory means that the system can be surgical about what context it injects, surfacing only what is genuinely relevant to the task.

This translates to lower latency, lower inference costs and better model performance. A model reasoning over a well-structured context window tends to outperform one drowning in retrieved chunks that may or may not be relevant.
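The difference between padding a context window and selecting context surgically can be sketched as a budgeted selection problem. The example below is a simplified illustration under stated assumptions: relevance scores are taken as given, cost is approximated by word count rather than real tokens, and `select_context` is a hypothetical helper, not part of any Vanar API.

```python
from typing import List, Tuple


def select_context(memories: List[Tuple[str, float]], budget_words: int) -> List[str]:
    """Greedily keep the most relevant memories that fit within the budget."""
    chosen: List[str] = []
    used = 0
    for text, score in sorted(memories, key=lambda m: m[1], reverse=True):
        cost = len(text.split())  # crude stand-in for a token count
        if score <= 0 or used + cost > budget_words:
            continue  # irrelevant, or would overflow the budget
        chosen.append(text)
        used += cost
    return chosen


# Hypothetical memories with assumed relevance scores for the current task.
memories = [
    ("Office moved to a new floor.", 0.0),
    ("Project uses Python 3.11 with FastAPI.", 0.9),
    ("User likes dark mode in the editor.", 0.1),
    ("Webhook signature check failed last week.", 0.8),
]

selected = select_context(memories, budget_words=12)
```

With a 12-word budget, only the two high-relevance memories are injected; the low-relevance and zero-relevance items never reach the model, which is where the cost and latency savings would come from.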

The Road to Truly Persistent AI

The promise of AI is not a search engine that forgets you after every session. It is a collaborator that grows more useful the longer you work with it. Vanar's approach to memory is a step in that direction. It builds persistence into the architecture from the ground up rather than bolting memory onto a stateless model. AI amnesia is not an inherent limitation of large language models. It is an engineering problem, and Vanar is one of the most compelling answers to it yet.