I keep coming back to one quiet truth in Web3: we can scale execution all day, but if the data layer is brittle, the whole stack still collapses under real usage. Not “testnet vibes” usage—real usage: match footage libraries, identity credentials, agent memory, media, datasets, app state, proofs, archives. The kind of data that can’t disappear, can’t be silently edited, and can’t be held hostage by a single platform decision.

That’s the lens I use when I look at #Walrus. Not as “another decentralized storage narrative,” but as infrastructure for a world where data is verifiable, programmable, and monetizable, without any single operator needing to be trusted forever.

The shift I’m watching: data becomes composable the moment it becomes verifiable

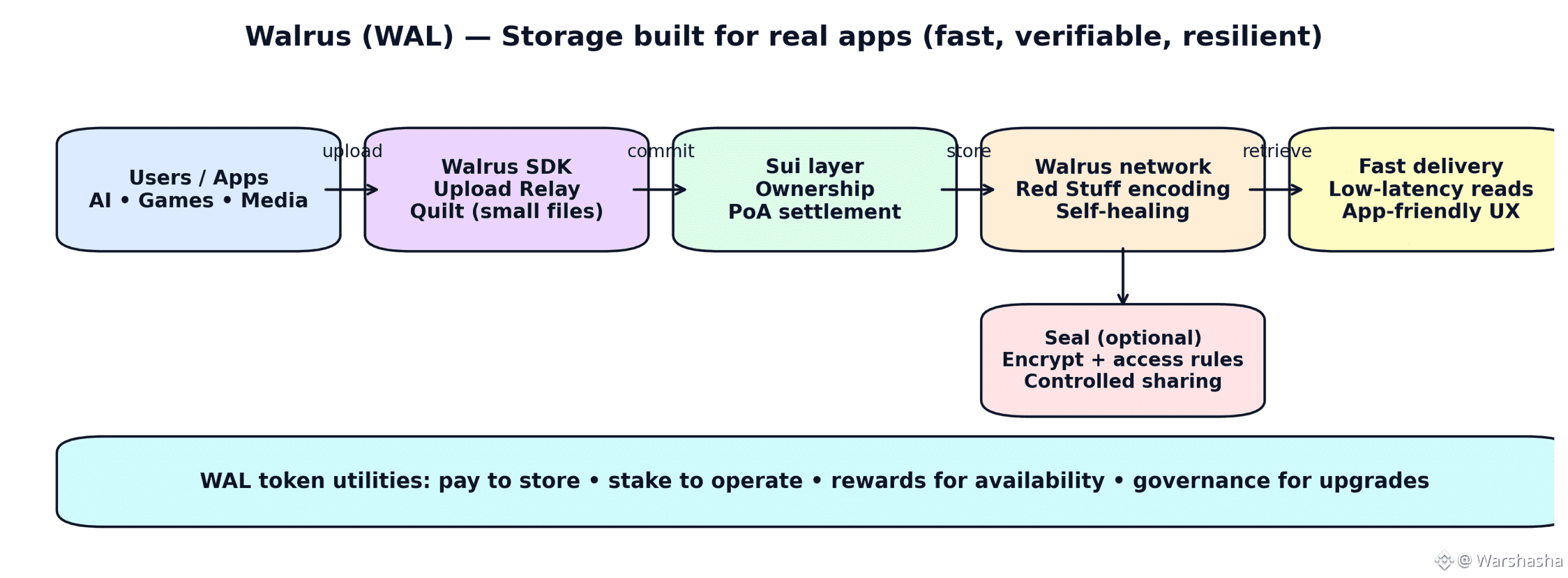

Most storage systems (even many decentralized ones) still treat files like passive objects: upload → host → hope it stays there. Walrus is pushing a different model: data as a first-class onchain resource.

What hit me most recently is how Walrus frames this in practical terms: every file can be referenced by a verifiable identity, and the chain can track its storage history—so provenance isn’t a “promise,” it’s a property. That’s the difference between “I think this dataset is clean” and “I can prove where it came from, when it changed, and what version trained the model.”
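The idea is that the identifier is computed from the content itself. Here is a toy sketch of what that buys you. Assumptions, loudly: SHA-256 over raw bytes stands in for Walrus's real blob ID scheme, which is not reproduced here. `ProvenanceLog` is a hypothetical append-only record, not an actual Walrus or Sui API.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List, Tuple


def content_id(data: bytes) -> str:
    """Stand-in identifier: SHA-256 over the raw bytes.

    Assumption: Walrus's actual blob ID derivation differs; the property shown
    here is only that the ID is computed from the content, not assigned to it.
    """
    return hashlib.sha256(data).hexdigest()


@dataclass
class ProvenanceLog:
    """Hypothetical append-only log of (epoch, content_id) registrations."""

    entries: List[Tuple[int, str]] = field(default_factory=list)

    def register(self, data: bytes, epoch: int) -> str:
        cid = content_id(data)
        self.entries.append((epoch, cid))
        return cid

    def verify(self, data: bytes, claimed_id: str) -> bool:
        # Anyone holding the bytes can recompute the ID; any edit changes it.
        return content_id(data) == claimed_id

    def history(self) -> List[Tuple[int, str]]:
        # Return a copy so callers cannot rewrite recorded history in place.
        return list(self.entries)
```

Registering "v1" and then "v2" of a dataset yields two distinct IDs with their epochs. Checking the v1 ID against an edited copy fails. That is the "I can prove which version trained the model" property in miniature.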

Decentralization that doesn’t quietly decay as it grows

Here’s the uncomfortable reality: lots of networks start decentralized and then centralize by accident—because scale rewards whoever can accumulate stake, bandwidth, or operational dominance. Walrus basically calls this out and designs against it: delegated stake spreads power across independent operators, rewards are tied to verifiable performance, and there are penalties that discourage coordinated “stake games” that can tilt governance or censorship outcomes.

That matters more than people admit—because if your data layer becomes a handful of “reliable providers,” you’re right back to the same single points of failure Web3 claims to avoid.

The adoption signals that feel real (not just loud)

The easiest way to spot serious infrastructure is to watch who trusts it with irreversible scale.

250TB isn’t a pilot — it’s a commitment

Walrus announced Team Liquid’s migration of 250TB of match footage and brand content, framing it as the largest single dataset entrusted to the protocol at the time. What’s interesting is the stated reasoning: global access, fewer silos, no single point of failure, and turning archives into onchain-compatible assets that can later support new fan-access and monetization models without another migration.

That’s not a marketing integration. That’s operational dependency.

Prediction markets where the “data layer” is part of the product

Myriad integrated Walrus as its trusted data layer, explicitly replacing centralized/IPFS storage to get tamper-proof, auditable provenance—and they mention $5M+ in total onchain prediction transactions since launch. That’s the kind of use case where integrity is the product, not a bonus.

AI agents don’t just need compute — they need memory that can be proven

Walrus becoming the default memory layer in elizaOS V2 is one of those developments that looks “technical” but has big downstream implications: agent memory, datasets, and shared workflows anchored with proof-of-availability on Sui for auditability and provenance.

If 2026 really is an “agent economy” year, this is the kind of integration that quietly compounds.

The upgrades that changed what Walrus can actually support at scale

Real applications don’t look like “one giant file.” They look like thousands of small files, messy user uploads, mobile connections, private data, and high-speed retrieval demands. Walrus spent 2025 solving the boring parts—the parts that decide adoption.

Seal pushed privacy into the native stack: encryption + onchain access control so builders can define who sees what without building custom security layers.

Quilt tackled small-file efficiency: a native API that can group up to 660 small files into one unit, and Walrus says it saved partners 3M+ WAL.
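To make “group up to 660 small files into one unit” concrete, here is a minimal batching sketch. Only the 660 limit comes from the post. The function names and the concatenate-plus-index layout are hypothetical and are not Quilt’s actual format or API.

```python
from typing import Dict, List, Tuple

MAX_FILES_PER_QUILT = 660  # per-unit limit cited for Quilt

File = Tuple[str, bytes]
Index = Dict[str, Tuple[int, int]]  # name -> (offset, length)


def group_into_quilts(files: List[File], max_per_quilt: int = MAX_FILES_PER_QUILT) -> List[List[File]]:
    """Split a list of small files into consecutive batches of at most max_per_quilt."""
    if max_per_quilt < 1:
        raise ValueError("max_per_quilt must be positive")
    return [files[i:i + max_per_quilt] for i in range(0, len(files), max_per_quilt)]


def pack_quilt(batch: List[File]) -> Tuple[bytes, Index]:
    """Concatenate a batch into one blob plus an index for per-file reads (hypothetical layout)."""
    buf = bytearray()
    index: Index = {}
    for name, data in batch:
        if name in index:
            raise ValueError(f"duplicate file name: {name}")
        index[name] = (len(buf), len(data))
        buf.extend(data)
    return bytes(buf), index


def read_file(blob: bytes, index: Index, name: str) -> bytes:
    """Recover one file from a packed blob using its index entry."""
    offset, length = index[name]
    return blob[offset:offset + length]
```

With 1,500 tiny files this produces three stored units (660, 660, 180) instead of 1,500. Any fixed per-object storage overhead is then paid once per unit rather than once per file. I’d guess that amortization is where savings like the cited 3M+ WAL come from, but that is my inference, not a published breakdown.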

Upload Relay reduced the “client-side pain” of distributing data across many storage nodes, improving reliability (especially on mobile / unstable connections).

Pipe Network partnership made retrieval latency and bandwidth first-class: Walrus cites Pipe’s 280K+ community-run PoP nodes and targets sub-50ms retrieval latency at the edge.

This is the pattern I respect: not just “we’re decentralized,” but “we’re operationally usable.”

$WAL isn’t just a ticker — it’s the incentive spine that makes “unstoppable” sustainable

I like when token utility reads like an engineering requirement, not a vibe.

Walrus describes WAL economics as a system designed for competitive pricing and minimal adversarial behavior. WAL is the payment token for storage, with a mechanism intended to keep storage costs stable in fiat terms. Users pay upfront for a fixed storage period, and that WAL is distributed over time to storage nodes and stakers, so “keep it available” is financially aligned rather than assumed.
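The “pay upfront, release over time” flow is easy to sketch. The even per-epoch split and the `node_share_bps` parameter below are my own hypothetical simplifications, not Walrus’s published formula. Amounts are integers in the token’s smallest unit so nothing is lost to floating-point rounding.

```python
from typing import List, Tuple

BPS = 10_000  # basis-point denominator


def payout_schedule(total_paid: int, epochs: int, node_share_bps: int) -> List[Tuple[int, int]]:
    """Spread an upfront storage payment across epochs, then split each epoch's
    slice into (node_cut, staker_cut).

    node_share_bps is a hypothetical parameter, not a published Walrus value.
    """
    if epochs < 1:
        raise ValueError("epochs must be positive")
    if not 0 <= node_share_bps <= BPS:
        raise ValueError("node_share_bps must be within 0..10000")
    per_epoch, remainder = divmod(total_paid, epochs)
    schedule = []
    for epoch in range(epochs):
        # Give leftover units to the earliest epochs so the total is paid out exactly.
        amount = per_epoch + (1 if epoch < remainder else 0)
        node_cut = amount * node_share_bps // BPS
        schedule.append((node_cut, amount - node_cut))
    return schedule
```

The design point is that a node only collects each epoch’s slice if it is still around to serve that epoch. Availability becomes something the payment stream rewards continuously, not a one-time promise made at upload.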

Then you get the security layer: delegated staking where nodes compete for stake and, when enabled, slashing aligns both operators and delegators with performance. Governance of key parameters also runs through WAL stake-weighted decisions.
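Here is a toy model of why delegated stake plus slashing aligns delegators with operators. Both rules are hypothetical illustrations I chose for clarity, not Walrus’s actual reward or slashing formulas. Rewards are weighted by stake × performance score, and slashing cuts every delegation to a node by the same fraction.

```python
from dataclasses import dataclass, field
from typing import Dict, List

BPS = 10_000


@dataclass
class StorageNode:
    name: str
    delegations: Dict[str, int] = field(default_factory=dict)  # delegator -> staked amount

    @property
    def stake(self) -> int:
        return sum(self.delegations.values())


def distribute_rewards(nodes: List[StorageNode], epoch_reward: int, performance: Dict[str, float]) -> Dict[str, int]:
    """Split an epoch reward in proportion to stake * performance (score in 0..1).

    Hypothetical rule: a heavily staked node that performs badly earns less
    than its stake alone would suggest.
    """
    weights = {n.name: n.stake * performance.get(n.name, 0.0) for n in nodes}
    total = sum(weights.values())
    if total == 0:
        return {n.name: 0 for n in nodes}
    return {name: int(epoch_reward * w / total) for name, w in weights.items()}


def slash(node: StorageNode, slash_bps: int) -> int:
    """Cut every delegation to `node` by the same fraction; return the total removed."""
    removed = 0
    for delegator, amount in node.delegations.items():
        cut = amount * slash_bps // BPS
        node.delegations[delegator] = amount - cut  # updates existing keys only
        removed += cut
    return removed
```

Because delegators share the slash, they have a reason to move stake toward nodes that actually perform. That is the “spread power across independent operators” pressure described earlier. In the post’s framing, slashed amounts feed the burn side of WAL supply.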

And on the supply side, Walrus frames WAL as deflationary, with burn mechanics tied to behavior (e.g., penalties on short-term stake shifts and slashing-related burns). They also state a 5B max supply, with 60%+ allocated to the community via airdrops, subsidies, and a community reserve.

Market accessibility: distribution matters when the goal is “default infrastructure”

One underrated ingredient for infrastructure tokens is access—because staking participation and network decentralization benefit from broad ownership and easy onboarding.

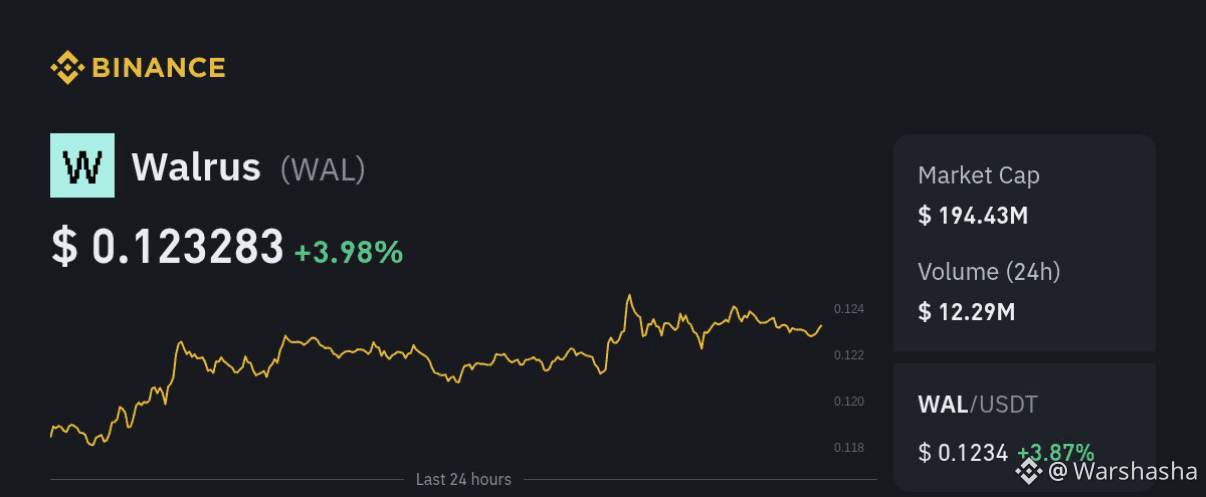

Walrus highlighted WAL being tradable on Binance Alpha/Spot, positioning it as part of the project’s post-mainnet momentum and broader ecosystem expansion.

Again: not the core story, but it helps the core story scale.

What I’m watching next (the parts that will decide whether Walrus becomes “default”)

If Walrus is trying to become the data layer apps stop mentioning (because it’s simply assumed), then the next phase is about proving consistency over time. These are my personal checkpoints:

Decentralization depth over hype: operator diversity + stake distribution staying healthy as usage grows.

Privacy becoming normal, not niche: more apps using Seal for real access control flows, not just demos.

High-value datasets moving in: more “Team Liquid style” migrations where organizations commit serious archives and use them as programmable assets.

Agent + AI workflows scaling: more integrations like elizaOS where Walrus is the default memory/provenance layer, not an optional plugin.

Closing thought

#Walrus feels like it’s aiming for a specific kind of inevitability: make data ownable, provable, and programmable, while staying fast enough that normal users don’t feel punished for choosing decentralization.

When a protocol can talk about decentralization as an economic design problem, privacy as a default requirement, and adoption as “who trusts us with irreversible scale,” it usually means it’s moving from narrative to infrastructure.

And infrastructure—quietly—tends to be where the deepest value accumulates.