Verifiable data availability stands out as Walrus's core strength, especially after the real-world adoption spikes of 2025. Tracking on-chain metrics, I noticed how it addresses the silent data loss that centralized systems tend to ignore.

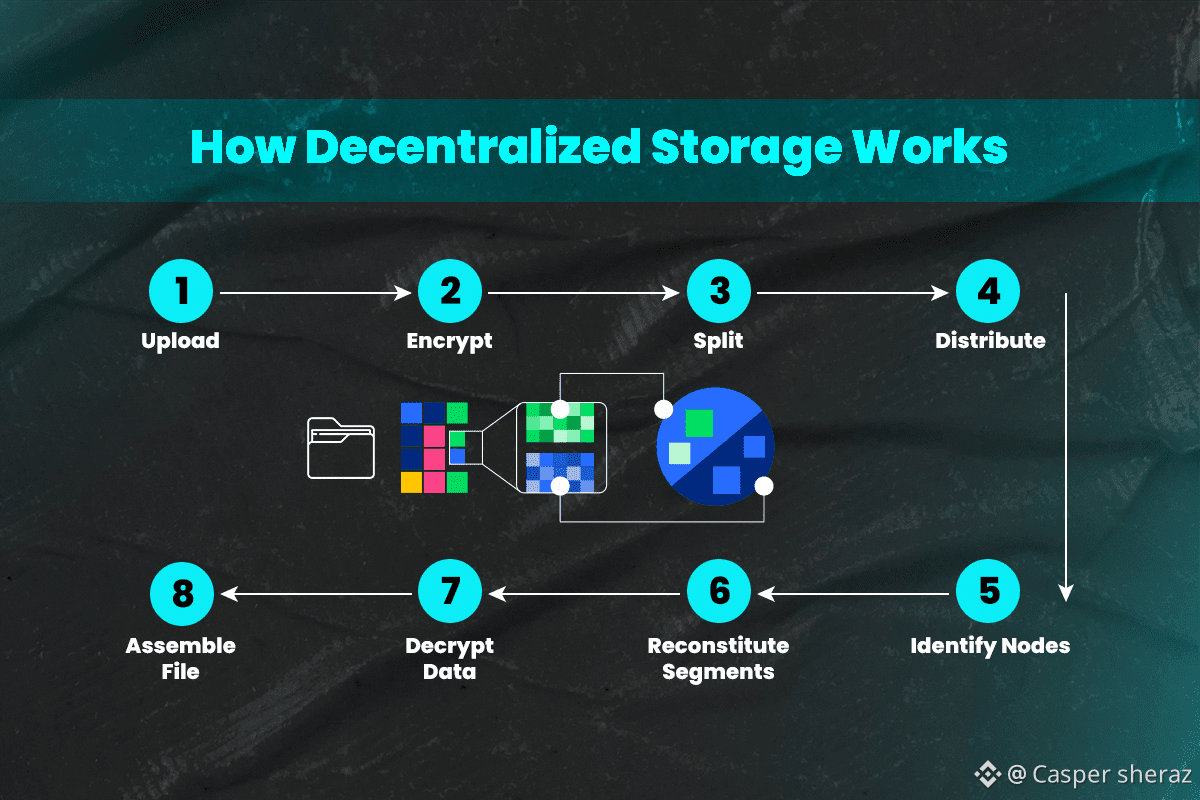

The process starts with blobs. Large files are split into fragments and distributed across nodes, with redundancy built in via erasure coding.
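To make the idea concrete, here is a minimal sketch of erasure coding in Python. It uses a single XOR parity fragment, which is far simpler than the encoding Walrus actually runs, and every function name here (`split_blob`, `encode`, `recover`) is my own illustration rather than a Walrus API.

```python
from functools import reduce
from typing import Optional


def split_blob(blob: bytes, k: int) -> list[bytes]:
    """Split a blob into k equal-size fragments, zero-padding the tail."""
    size = -(-len(blob) // k)  # ceiling division
    padded = blob.ljust(size * k, b"\x00")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(blob: bytes, k: int) -> list[bytes]:
    """Return k data fragments plus one XOR parity fragment (toy scheme)."""
    fragments = split_blob(blob, k)
    return fragments + [reduce(xor_bytes, fragments)]


def recover(fragments: list[Optional[bytes]]) -> list[bytes]:
    """Rebuild at most one missing fragment (marked None) from the rest."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost fragment")
    repaired = list(fragments)
    if missing:
        present = [f for f in fragments if f is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired


blob = b"walrus stores large blobs"
pieces = encode(blob, k=4)          # 4 data fragments + 1 parity
pieces[2] = None                    # simulate one node losing its fragment
restored = recover(pieces)
original = b"".join(restored[:4])[:len(blob)]  # drop parity and padding
```

Any four of the five fragments are enough to rebuild the blob here. Production-grade codes tolerate many more simultaneous losses, but the principle is the same: redundancy is spread across fragments instead of keeping full copies everywhere.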

Each epoch, nodes must submit cryptographic proofs confirming they still hold their pieces. Failure means penalties, which enforces honesty.
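One common way to build this kind of check is a Merkle-proof challenge: a short commitment (the Merkle root) is published, and a node is later asked to produce a randomly chosen chunk plus the hashes linking it to that root. The sketch below assumes this generic pattern; it is not Walrus's actual proof protocol, and the helper names and 4-byte chunk size are hypothetical.

```python
import hashlib
import secrets


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(chunks: list[bytes]) -> list[list[bytes]]:
    """Build every tree level, leaves first; odd levels pair the last node with itself."""
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(chunks: list[bytes], index: int) -> list[bytes]:
    """Node side: collect the sibling hashes from the leaf up to the root."""
    path = []
    for level in merkle_levels(chunks)[:-1]:
        sibling = index ^ 1
        path.append(level[sibling] if sibling < len(level) else level[index])
        index //= 2
    return path


def verify(root: bytes, chunk: bytes, index: int, path: list[bytes]) -> bool:
    """Verifier side: recompute the root from one chunk and its sibling path."""
    node = h(chunk)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


fragment = b"fragment held by one node"
chunks = [fragment[i:i + 4] for i in range(0, len(fragment), 4)]
root = merkle_levels(chunks)[-1][0]          # the published commitment

challenge = secrets.randbelow(len(chunks))   # fresh random index per epoch
proof = prove(chunks, challenge)
accepted = verify(root, chunks[challenge], challenge, proof)
```

Because the challenged index is unpredictable, a node that quietly discarded part of its fragment cannot answer reliably, and the verifier only ever needs the root, not the data itself.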

This on-chain anchoring makes everything auditable. Anyone can check availability without trusting intermediaries.

Community discussions highlight efficiency. Retrieval pulls only the fragments needed to rebuild the data, keeping costs low even for terabyte-scale storage.

Builders integrate this seamlessly. With hundreds of TB already stored, tools support AI datasets and dApps reliably.

Privacy benefits too. Spreading fragments across nodes avoids concentrated points of vulnerability, which matters more as data demands grow.

Staking $WAL ties into availability. Rewards incentivize reliable nodes, creating a self-sustaining loop.
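A toy model helps show why the loop sustains itself. The rules below are my own assumptions for illustration (rewards split pro rata by stake among nodes that passed their proofs, and a flat penalty in basis points for those that failed); the real $WAL reward and penalty parameters are not modeled here.

```python
def settle_epoch(
    stakes: dict[str, int],
    passed: dict[str, bool],
    reward_pool: int,
    penalty_bps: int,
) -> dict[str, int]:
    """Return each node's stake change for one epoch (hypothetical rules).

    Nodes that passed share reward_pool in proportion to stake (rounded down);
    nodes that failed lose penalty_bps / 10_000 of their stake.
    """
    honest_total = sum(s for node, s in stakes.items() if passed[node])
    changes = {}
    for node, stake in stakes.items():
        if passed[node]:
            changes[node] = reward_pool * stake // honest_total
        else:
            changes[node] = -(stake * penalty_bps // 10_000)
    return changes


stakes = {"node-a": 600, "node-b": 300, "node-c": 100}
passed = {"node-a": True, "node-b": True, "node-c": False}
changes = settle_epoch(stakes, passed, reward_pool=90, penalty_bps=1_000)
# changes == {"node-a": 60, "node-b": 30, "node-c": -10}
```

Under these assumed rules, a node that drops data both forfeits its share of the pool and shrinks its stake, so staying available is the profitable strategy.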

Looking to 2026, tighter Sui interoperability will amplify this, perhaps enabling new use cases like programmable data markets.

Walrus makes availability a guarantee, not a hope. Stay connected via @Walrus 🦭/acc and $WAL.