Most blockchain discussions still start from the chain. Which L1 is faster. Which ecosystem is bigger. Which network has more activity today. But builders do not think in chains. Builders think in problems. Where their users already are. Where their tools live. Where deployment friction is lowest. Infrastructure that ignores this usually ends up fighting developers instead of supporting them.

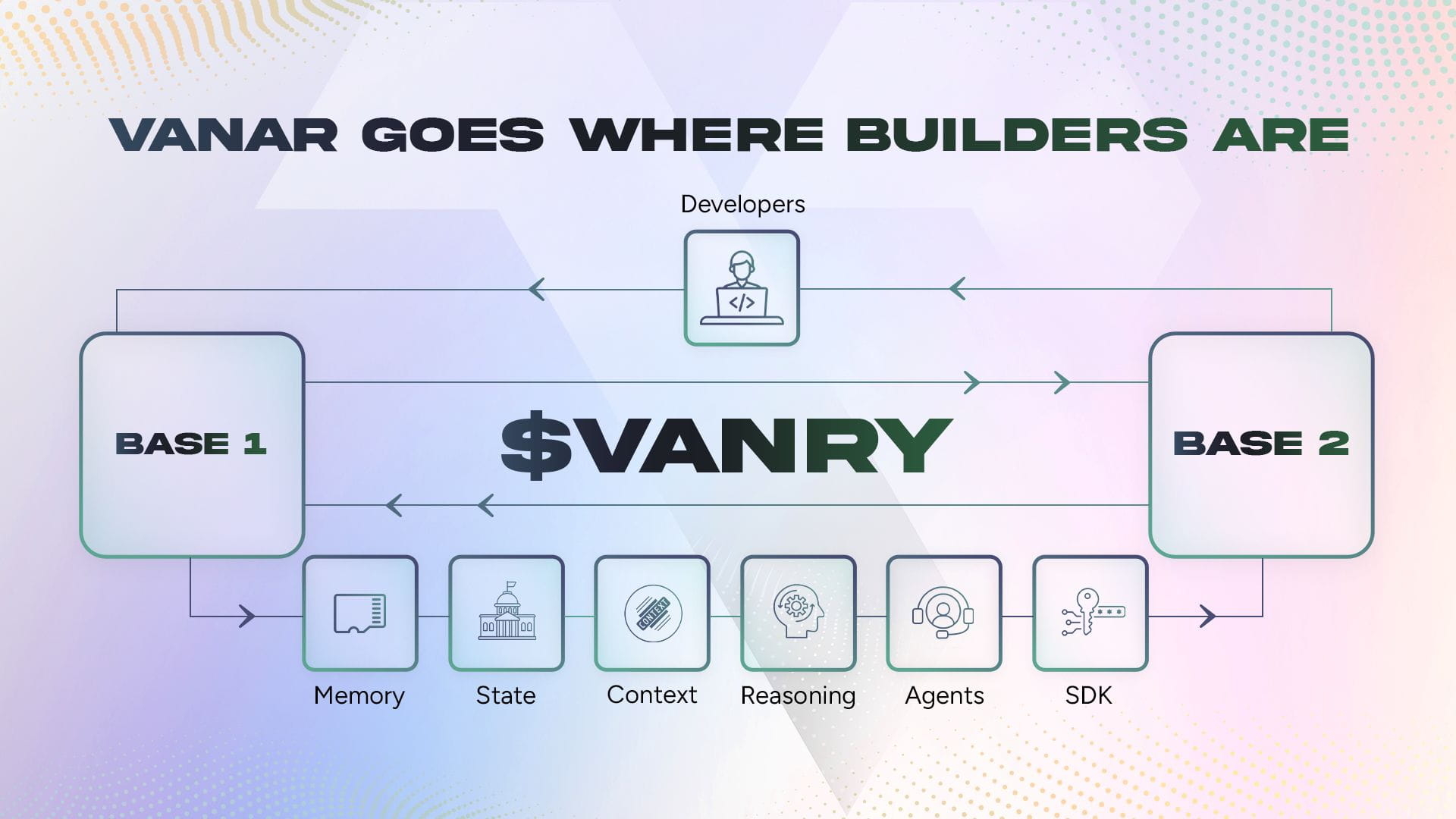

This is why Vanar's approach of meeting builders where they already work matters. AI systems are not static contracts deployed once and forgotten. They grow through repeated interactions. They rely on context. They improve through memory. That kind of system cannot live inside a single execution environment. It needs continuity even when execution happens across different networks.

At a technical level, this is where Neutron fits. Neutron is positioned as infrastructure beneath applications, not a front-facing feature. It supports memory, reasoning, and explainability so systems do not reset their understanding after every action. The visible layer is where agents and workflows run. The less visible layer is what allows those agents to behave consistently over time. This aligns with how Vanar @Vanarchain describes AI readiness in its official materials, focusing on continuity rather than raw speed.

Builders do not interact with this complexity directly. They access it through SDKs. That matters. SDKs turn infrastructure into something usable without forcing developers to rethink their entire stack. Instead of migrating everything to a new chain, builders can integrate memory, context, and reasoning into systems they are already building. This is how advanced infrastructure becomes practical rather than theoretical.

For everyday users, the impact shows up quietly. An AI assistant that remembers prior choices does not need repeated instructions. A workflow that understands past constraints makes fewer mistakes. A system that can explain why something happened feels more trustworthy than one that only shows the result. These are small differences that decide whether a tool is tested once or used daily.

By extending availability into environments like Base, Vanar @Vanarchain reduces friction even further. AI infrastructure cannot stay isolated if it wants real usage. Builders follow users, not narratives. Making AI primitives accessible where developers already deploy increases experimentation while keeping underlying logic consistent. This is how scale happens without forcing habit changes.

$VANRY sits at the center of this as coordination rather than decoration. When agents act, when workflows execute, and when value settles, there needs to be an economic anchor. Instead of relying on short-term activity spikes, $VANRY aligns with sustained usage across intelligent systems. This supports long-term value driven by real activity, not demos.

In my view, this is a quieter but more realistic strategy. Many networks compete on performance claims. Vanar focuses on reducing cognitive load. Make systems easier to reason about. Make behavior predictable. Make intelligence continuous instead of fragmented. As AI moves from experiments into daily tools, this matters more than benchmarks.

If AI infrastructure must follow how humans build and think rather than forcing migration and resets, will builders keep choosing chains that forget context after every action, or will they prefer systems like Vanar, where Neutron, SDK access, and $VANRY support memory, reasoning, and trust wherever real work is already happening?