A few months ago, I was tinkering with an AI agent for a small trading bot. Nothing impressive. Just something to watch positions and react to on-chain signals. I’ve built similar setups before and spent enough time trading infrastructure tokens to know what usually breaks first. This time, though, the friction felt different. The agent worked, technically, but every interaction was disposable. It could process data in the moment, but the second it stopped, all context was gone. Next run, it was starting blind again. Costs crept up from constant queries, and performance dipped whenever the network got busy. It wasn’t really about speed, or even fees on their own. It was the absence of memory. No native way for the system to remember, reason, or build continuity without leaning on off-chain services. That’s what made me stop and think. If agents are supposed to operate on their own, the base layer can’t just execute code. It has to support intelligence that persists.

The bigger issue is that most blockchains are still built for applications, not agents. They’re good at settling transactions and running scripts, but they fall apart when you ask them to support long-lived context. Anything resembling memory or reasoning usually gets pushed off-chain, which adds latency, cost, and fragility. From the user side, this shows up as agents that forget what they just did, decisions that feel disconnected, and fees that spike because every action is treated as a fresh computation. It’s not just inefficient, it’s mismatched. Agents are meant to handle ongoing tasks, not one-off calls. As long as intelligence lives outside the chain, adoption stays limited to people willing to tolerate duct tape. Everyday use, like agents managing finances or data flows continuously, never quite clicks.

I keep coming back to a simple comparison. A notebook can store information, but you still have to reread everything every time you want to act. A brain doesn’t work that way. It carries context forward. Most chains today are notebooks. Reliable records, yes, but terrible at recall. Agents need something closer to a brain.

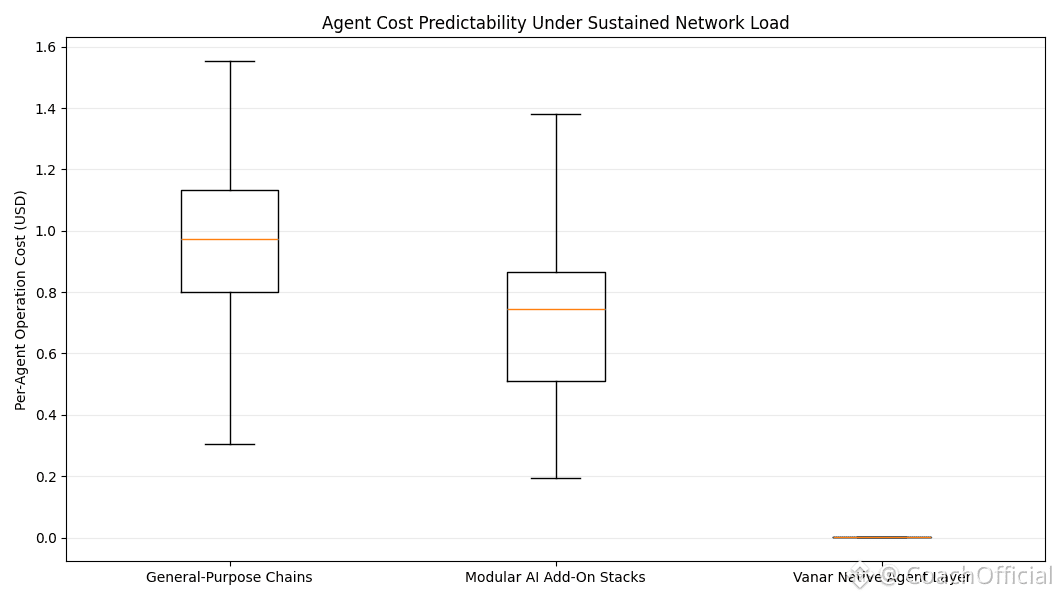

That’s where Vanar Chain takes a different starting point. Instead of treating intelligence as an optional layer, it treats it as the foundation. It keeps EVM compatibility so developers don’t have to relearn everything, but it builds AI primitives directly into the protocol. With the public rollout of its AI stack in early January 2026, the network began supporting on-chain memory and reasoning without forcing agents to rely on external systems. The scope is intentional. It doesn’t try to host every possible app. It prioritizes agents, even if that means saying no to other use cases. Some execution paths are constrained on purpose to favor data-heavy workflows, which keeps fees stable and agents responsive. In the late-2025 bi-weekly recap, Ankr joining as a validator stood out, not as marketing, but because it strengthened decentralization while keeping block times under three seconds. Right now, average fees sit around $0.0005, even during spikes. The January 15, 2026 post about intelligence being “no longer optional” made the direction clear. Execution is cheap everywhere now. Context isn’t.

Under the hood, a few design choices explain how this actually works. The Neutron module, updated in Q1 2026, compresses raw data into what the network calls “Seeds.” These aren’t just smaller files. They preserve meaning. Agents can store full context on-chain and query it later without reprocessing everything, cutting storage needs by up to 90%. That matters at scale.

With more than 50 validators active after Ankr came on, the network handles roughly 200 TPS in tests, while real usage sits closer to 50–100 TPS during peaks. That ceiling isn’t accidental. The Proof of Reputation model weights validators by historical performance, which slows raw expansion but keeps bad actors from overwhelming the system.

Then there’s Kayon, refreshed in November 2025. It runs lightweight reasoning directly on-chain, separate from standard execution. This limits contract complexity, but it also prevents AI workloads from clogging the network. According to internal benchmarks shared after the December 2025 payments hire, Kayon-driven automation improved agent-based stablecoin flows by about 30%, simply by removing external checks.
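The Proof of Reputation scoring formula itself isn’t public in anything I’ve cited here, so what follows is only a hypothetical sketch of the general idea of “weighting validators by historical performance.” Each validator carries a reputation score that is updated as an exponential moving average of how it performed. Block proposers are then drawn with probability proportional to that score. `Validator`, `update_reputation`, `pick_proposer`, the 0.5 starting score and the `alpha` smoothing factor are all my own assumptions, not Vanar’s actual mechanism.

```python
import random
from dataclasses import dataclass


@dataclass
class Validator:
    name: str
    # Hypothetical reputation score in [0, 1]; newcomers start in the middle.
    reputation: float = 0.5


def update_reputation(v: Validator, performed_well: bool, alpha: float = 0.1) -> None:
    """Exponential moving average: each round nudges the score toward 1 (good) or 0 (bad).

    A small alpha means history dominates, so reputation is slow to earn and slow to lose.
    """
    outcome = 1.0 if performed_well else 0.0
    v.reputation = (1 - alpha) * v.reputation + alpha * outcome


def pick_proposer(validators: list[Validator], rng: random.Random) -> Validator:
    """Pick the next block proposer with probability proportional to reputation."""
    weights = [v.reputation for v in validators]
    return rng.choices(validators, weights=weights, k=1)[0]


if __name__ == "__main__":
    vals = [Validator("steady"), Validator("flaky")]
    # Simulate 50 rounds: one validator behaves, the other keeps failing.
    for _ in range(50):
        update_reputation(vals[0], performed_well=True)
        update_reputation(vals[1], performed_well=False)

    rng = random.Random(42)  # fixed seed so runs are reproducible
    counts = {v.name: 0 for v in vals}
    for _ in range(1000):
        counts[pick_proposer(vals, rng).name] += 1

    print({v.name: round(v.reputation, 3) for v in vals})
    print(counts)
```

The moving average is what makes this kind of model slow to expand: a fresh validator starting at 0.5 needs many good rounds before it gets picked as often as an established one, which is roughly the trade-off described above.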

The VANRY token plays a functional role and not much more. It pays transaction fees, with a burn mechanism adjusted in 2025 to smooth supply as AI usage increased. Validators are nominated through staking under the Proof of Reputation model and earn rewards from inflation capped at 5%, with reductions scheduled after 2026 milestones. About 40% of supply is currently staked. VANRY also settles agent-triggered actions, like payouts generated through Kayon, and is used to vote on upgrades. The subscription model introduced in November 2025 routes AI tooling fees back into the protocol in VANRY. No gimmicks. It’s infrastructure plumbing.
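Those two numbers, a 5% inflation cap and roughly 40% of supply staked, are enough for some back-of-the-envelope math. This is a rough sketch under simplifying assumptions I’m making myself: inflation runs at the full 5% cap, all new issuance goes to stakers, and the fee burn, validator commissions and the post-2026 reductions are ignored. The function names are my own.

```python
# Back-of-the-envelope staking math. Assumptions (mine, not the protocol's):
# inflation runs at the full cap, all new issuance is paid to stakers,
# and fee burns, validator commissions and future cuts are ignored.
INFLATION_CAP = 0.05    # annual issuance cap, as stated in the post
STAKED_FRACTION = 0.40  # share of supply staked, as stated in the post


def nominal_staking_yield(inflation: float, staked_fraction: float) -> float:
    """Yield stakers earn if all new tokens are split among staked supply only."""
    if not 0 < staked_fraction <= 1:
        raise ValueError("staked_fraction must be in (0, 1]")
    return inflation / staked_fraction


def share_change(holder_growth: float, inflation: float) -> float:
    """Relative change in a holder's share of total supply after one year."""
    return (1 + holder_growth) / (1 + inflation) - 1


if __name__ == "__main__":
    y = nominal_staking_yield(INFLATION_CAP, STAKED_FRACTION)
    print(f"nominal staking yield: {y:.1%}")                                   # 12.5%
    print(f"staker share change:   {share_change(y, INFLATION_CAP):+.1%}")     # +7.1%
    print(f"idle holder change:    {share_change(0.0, INFLATION_CAP):+.1%}")   # -4.8%
```

The gap between the nominal 12.5% and the roughly 7% real gain in share is the point: part of a staker’s yield just offsets dilution, and an idle holder loses close to 5% of their share per year at the cap. Any burn would narrow that, but the post doesn’t give a burn rate, so I’ve left it out.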

From a market perspective, the project sits at roughly a $22 million market capitalization. Daily volume briefly spiked to roughly $50 million on January 19, 2026, right as the AI integrations went live. It drew attention, but it didn’t turn into a frenzy.

Short-term trading here is mostly narrative-driven. Volume jumps around blog releases or AI headlines, like the mid-January intelligence series. I’ve traded similar setups before. You catch a 20–30% move, then it cools off just as fast. Long-term value depends on something else entirely. Habit. If persistent intelligence turns agents into daily tools, fees and staking demand follow naturally. The subscription rollout for Neutron and Kayon, combined with validators like Ankr committing resources, points in that direction. Usage from things like the October 2025 Pilot Agent, which enabled natural-language transactions, doesn’t look flashy, but it compounds quietly.

There are real risks. Chains like Fetch.ai and Ethereum’s AI-focused layers have larger ecosystems and more visibility. If adoption here stalls, developers may drift back to familiar ground. Regulation is another wildcard, especially with AI touching payments. One scenario I keep in mind is a failure in Neutron’s semantic compression during a heavy agent surge. A malformed Seed in a financial workflow could ripple outward, delaying settlements and damaging trust, the same way a bad cache can poison an entire system. And there’s still the open question of ecosystem buy-in. If major AI players stay off-chain, the intelligence-first approach could remain niche.

Stepping back, agent-centric infrastructure feels like one of those ideas that takes time to settle. It doesn’t explode overnight. It shows up when users stop noticing the system and just rely on it. Whether starting from intelligence instead of apps was the right call will become clear later, not in announcements, but in repeated use.

@Vanarchain #Vanar $VANRY