Storage Is Infrastructure, Not a Side Feature

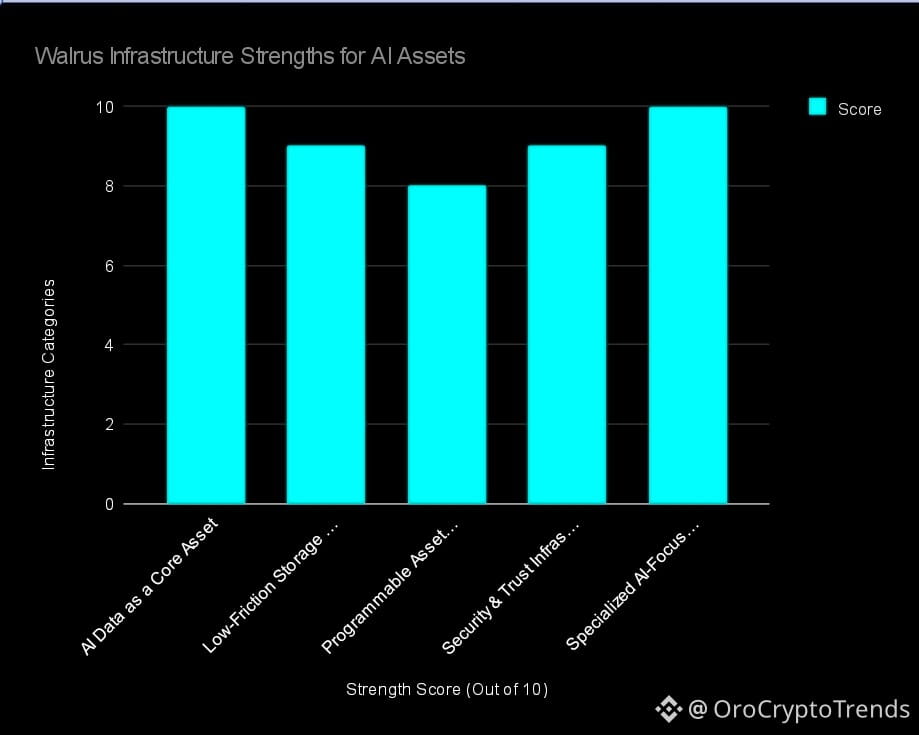

While many blockchain projects compete to become the ultimate all-in-one execution environment, a “world computer” for every possible use case, Walrus deliberately takes a different path. Rather than stretching itself thin, it concentrates on a foundational need that is often overlooked in the AI landscape: robust, scalable, and trust-minimized data availability and storage. AI-generated assets such as model checkpoints, training datasets, embeddings, and outputs keep growing in size and value. In that world, the backbone of future digital economies may be less the blockchains that run computation than the networks that keep data accessible, verifiable, and persistent.

Walrus recognizes that in practical AI deployments, data storage isn’t an afterthought or a minor feature tacked onto a bigger chain. Instead, it’s the critical infrastructure—the digital equivalent of roads and power lines—without which the entire ecosystem grinds to a halt. By narrowing its focus, Walrus aims to excel where it matters most: providing a storage and retrieval network that can handle massive, persistent assets, ensuring they’re always available, tamper-proof, and easy to access when needed.

AI Data as a First-Class Internet Asset

In a traditional internet paradigm, files are often mere attachments—static, hidden away on proprietary cloud servers, their provenance and status opaque. Walrus upends this by treating AI data not as expendable byproducts, but as first-class economic assets. Model weights, inference results, curated training corpora, and even intermediate artifacts become digital goods with identifiable value, history, and utility. Their storage location is no longer a black box; it’s a transparent, auditable, and programmable part of the network.

This shift is profound for builders and enterprises alike. When data is stored on a decentralized, verifiable network, it becomes portable and composable. Model outputs can be traded on open marketplaces, licensed across organizational boundaries, or bundled into new services—all with cryptographic assurance of integrity and origin. The network’s architecture transforms static files into programmable primitives for a new breed of digital commerce. In effect, AI-generated data is elevated from a passive storage concern to an active participant in the digital economy.
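To make “cryptographic assurance of integrity” concrete, here is a minimal Python sketch of content-addressed verification. It assumes a plain SHA-256 digest is recorded alongside the asset; Walrus derives its real blob IDs differently, and `AssetRecord`, `register`, and `verify` are hypothetical names used only for illustration.

```python
import hashlib
from dataclasses import dataclass


# Hypothetical record for a stored artifact. A plain SHA-256 digest stands
# in for a real Walrus blob ID, which is computed differently.
@dataclass(frozen=True)
class AssetRecord:
    name: str
    digest: str   # hex-encoded SHA-256 of the artifact bytes
    creator: str  # who registered the artifact


def register(name: str, data: bytes, creator: str) -> AssetRecord:
    """Create a record that commits to the exact bytes of the artifact."""
    return AssetRecord(name, hashlib.sha256(data).hexdigest(), creator)


def verify(record: AssetRecord, data: bytes) -> bool:
    """Return True only if the retrieved bytes match the committed digest."""
    return hashlib.sha256(data).hexdigest() == record.digest


checkpoint = b"\x00model-weights\x01"
rec = register("llm-ckpt-0042", checkpoint, "0xA11CE")
print(verify(rec, checkpoint))         # True
print(verify(rec, checkpoint + b"!"))  # False
```

Any party holding the record can check a downloaded copy without trusting whoever served it: a single changed byte produces a different digest.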

Removing Friction from Data Operations

Any developer who has tried to use a general-purpose blockchain for storage knows the pain: every validator replicates every byte, so fees are high, costs swing with network congestion, and large files force awkward workarounds. Walrus tackles these problems by decoupling heavy data storage from execution, so the network can scale to real-world AI workloads without inheriting the bottlenecks of traditional chains.
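The decoupling idea can be sketched in a few lines of Python: the execution layer keeps only a small, fixed-size pointer, while the bytes themselves live in a separate storage layer. Everything here (`OnChainPointer`, `StorageLayer`, `store`, the `expiry_epoch` field) is a hypothetical toy, not the Walrus or Sui API.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class OnChainPointer:
    """Hypothetical fixed-size reference kept on the execution layer."""
    digest: bytes      # 32-byte SHA-256 commitment to the blob
    size_bytes: int    # length of the blob held by the storage layer
    expiry_epoch: int  # illustrative: storage paid through this epoch


class StorageLayer:
    """Toy stand-in for an off-chain storage network keyed by digest."""

    def __init__(self) -> None:
        self._blobs: dict[bytes, bytes] = {}

    def put(self, data: bytes) -> bytes:
        key = hashlib.sha256(data).digest()
        self._blobs[key] = data
        return key

    def get(self, key: bytes) -> bytes:
        return self._blobs[key]


def store(storage: StorageLayer, data: bytes, expiry_epoch: int) -> OnChainPointer:
    """Push the heavy bytes to storage; keep only a small pointer on-chain."""
    key = storage.put(data)
    return OnChainPointer(key, len(data), expiry_epoch)


storage = StorageLayer()
small = store(storage, b"x" * 10, expiry_epoch=5)
large = store(storage, b"x" * 5_000_000, expiry_epoch=5)
# The pointer's digest is 32 bytes whether the blob is 10 B or 5 MB.
print(len(small.digest), len(large.digest))  # 32 32
```

The design point is that on-chain cost depends on the pointer, not on the blob, so large checkpoints do not have to compete for block space.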

This design philosophy matters. Developers and AI teams can focus on throughput, reliability, and user experience, confident that their data operations won’t be derailed by gas spikes or punitive file-size penalties. The result is a smoother development lifecycle and the freedom to build ambitious, data-heavy applications without constantly second-guessing the underlying infrastructure.

Moreover, reducing operational friction lets organizations experiment, iterate, and deploy at the speed AI innovation requires. Teams face fewer forced trade-offs between cost and capacity and need fewer improvised off-chain workarounds, each of which introduces new risks. Walrus lowers this cognitive overhead, enabling teams to extract more value from their data and models.

From Static Files to Programmable Assets

Walrus isn’t just a passive storage locker. By integrating with smart contract platforms like Sui, it turns stored files into assets that are not only kept but also programmable, referenceable, and enforceable by code. This opens new options for AI workflows and data-driven business models.

Imagine encoding access permissions, revenue-sharing agreements, or usage limits directly into the on-chain object that represents the asset. Model checkpoints can be licensed for inference with automated payments; datasets can be shared under specific terms, with attribution and auditability built in from day one. The boundary between “sending a file” and “transacting an asset” dissolves: the terms governing every AI output can be enforced, monetized, and updated on-chain, while the stored content itself stays fixed.
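As a rough illustration of terms attached to an asset, the following Python sketch models a hypothetical license object that meters inference calls against a cap and splits each payment among rights holders. On Sui such logic would live in a Move contract; the `License` class, its fields, and the basis-point split are assumptions made for the example.

```python
from dataclasses import dataclass, field

BPS_TOTAL = 10_000  # 100% expressed in basis points


@dataclass
class License:
    """Hypothetical pay-per-inference license tied to a stored checkpoint."""
    blob_digest: str            # reference to the stored model bytes
    price_per_call: int         # price in the smallest token unit
    max_calls: int              # usage cap per licensee
    shares_bps: dict[str, int]  # revenue split, must sum to BPS_TOTAL
    calls_used: dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if sum(self.shares_bps.values()) != BPS_TOTAL:
            raise ValueError("revenue shares must sum to 10_000 basis points")

    def charge(self, licensee: str) -> dict[str, int]:
        """Record one inference call and return each party's payout."""
        used = self.calls_used.get(licensee, 0)
        if used >= self.max_calls:
            raise PermissionError(f"{licensee} has reached the usage limit")
        self.calls_used[licensee] = used + 1
        payouts = {party: self.price_per_call * bps // BPS_TOTAL
                   for party, bps in self.shares_bps.items()}
        # Integer division can leave a remainder; give it to the first party.
        first = next(iter(payouts))
        payouts[first] += self.price_per_call - sum(payouts.values())
        return payouts


lic = License(
    blob_digest="example-digest",
    price_per_call=1_000,
    max_calls=2,
    shares_bps={"creator": 7_000, "curator": 2_000, "host": 1_000},
)
print(lic.charge("acme"))  # {'creator': 700, 'curator': 200, 'host': 100}
lic.charge("acme")
try:
    lic.charge("acme")
except PermissionError as err:
    print(err)  # acme has reached the usage limit
```

Integer amounts in the smallest token unit avoid floating-point rounding, and handing the remainder to one party guarantees that payouts always sum exactly to the price.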

This programmability brings new economic primitives to the table. Data creators and owners can enforce royalties, restrict usage to compliant parties, or even track downstream derivatives through composable contracts. For enterprises, this means greater control, transparency, and monetization potential. For the AI community, it’s a path to fairer, more open collaboration and innovation.

Neutral Infrastructure for Valuable Data

As AI-produced data accumulates value and legal significance, its storage needs to be bulletproof—not just technologically, but also institutionally. Centralized solutions, no matter how convenient, carry risks: a single cloud provider can delete, censor, or tamper with files, whether by accident, policy, or external pressure.

Walrus addresses these vulnerabilities by relying on a decentralized set of storage nodes and on cryptographic proofs of data availability and integrity. The network’s neutrality is more than a technical feature; it’s a trust anchor for an emerging digital economy. No single actor can unilaterally alter or erase a model or dataset. This is vital for intellectual property protection, regulatory compliance, and open collaboration across borders.

As legal frameworks evolve to address AI data, especially around privacy, copyright, and provenance, Walrus’s infrastructure is well-positioned to provide the transparency and auditability that regulators and enterprises will demand. Because stored content cannot be silently altered and stays available for as long as its storage is paid for, it offers a stable foundation for everything from scientific reproducibility to cross-border licensing.

The Coordinator, Not the Product

Walrus’s native token isn’t designed for speculation or hype. Its purpose is utilitarian: to align incentives across storage providers, validators, and users so that data remains durable, performant, and available. The token acts as the network’s coordinator, compensating nodes for their work and ensuring the system remains robust even as it scales.

This mechanism is critical for long-term sustainability. Rather than depending indefinitely on subsidies or extractive fees, Walrus aims to create a self-sustaining marketplace where resources flow to where they are needed most. Users pay for the storage and retrieval they actually use, while providers are rewarded for reliability and uptime. The intended result is an infrastructure layer that is resilient, adaptable, and less exposed to the distortions of short-term speculation.
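The incentive loop described above can be sketched as a per-epoch fee split. This is a minimal Python illustration under stated assumptions, not Walrus’s actual reward formula: user fees form a pool, and storage nodes share it in proportion to the data they hold, weighted by measured uptime, with a hypothetical 90% uptime floor. `distribute_rewards` and its parameters are invented for the example.

```python
def distribute_rewards(
    fee_pool: int,
    nodes: dict[str, tuple[int, int]],
    min_uptime_bps: int = 9_000,
) -> tuple[dict[str, int], int]:
    """Split one epoch's storage fees among storage nodes (hypothetical rule).

    `nodes` maps a node id to (units of data stored, measured uptime in
    basis points). Nodes below `min_uptime_bps` earn nothing; the rest are
    paid pro rata to units_stored * uptime. Returns the payouts and the
    undistributed remainder, which this sketch carries to the next epoch.
    """
    weights = {
        node: units * uptime
        for node, (units, uptime) in nodes.items()
        if uptime >= min_uptime_bps
    }
    total = sum(weights.values())
    if total == 0:
        # No node qualified; the whole pool carries over.
        return {node: 0 for node in nodes}, fee_pool
    payouts = {node: fee_pool * weights.get(node, 0) // total for node in nodes}
    return payouts, fee_pool - sum(payouts.values())


payouts, carry = distribute_rewards(
    9_000,
    {"node-a": (200, 10_000), "node-b": (100, 10_000), "node-c": (300, 8_000)},
)
print(payouts)  # {'node-a': 6000, 'node-b': 3000, 'node-c': 0}
print(carry)    # 0
```

Weighting by uptime and cutting off unreliable nodes is one simple way to pay “for reliability and uptime”; a real network would also need a way to measure availability that nodes cannot fake.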

Signs of Serious Adoption

The real test for Walrus is not hype cycles or token price, but deep integration into the workflows of AI builders, data marketplaces, and enterprise platforms. When model providers, inference platforms, or large-scale data brokers rely on Walrus for storage and settlement, it’s a clear sign that the network’s value proposition is resonating.

Adoption at this level means Walrus isn’t just another blockchain project vying for attention—it’s the invisible plumbing that underpins mission-critical operations. This kind of adoption is sticky: once data and business processes are anchored on a reliable, neutral network, switching costs are high, and trust compounds over time. As the generative AI landscape matures, infrastructure that delivers real value—security, portability, and programmability—will become indispensable.

Where Things Could Go Wrong

However, the path isn’t without obstacles. Centralized cloud providers remain the default for many organizations, offering unmatched speed, convenience, and a mature ecosystem. Walrus must continually innovate to match or surpass these incumbents on cost, performance, and user experience. If it can’t, inertia will keep teams locked into the status quo.

Legal and regulatory environments are also evolving rapidly. As governments and industry bodies clamp down on AI data—especially regarding privacy, copyright, and sensitive content—decentralized networks face unique challenges. Walrus will need to develop sophisticated compliance tools and granular access controls to stay ahead of these shifting requirements.

Finally, the decentralized storage space is crowded, with established players and new entrants alike. Walrus’s focus on AI-centric features needs to translate into tangible advantages, not just marketing. Its success will hinge on demonstrating that tailored infrastructure for AI workloads is not a niche, but a growing necessity as data volumes and economic stakes soar.

Conclusion

Walrus isn’t seeking to be everything to everyone. By zeroing in on trustworthy, verifiable data infrastructure for AI, it aims to solve the problems that matter most to builders, enterprises, and the broader digital economy. As generative AI continues to produce increasingly valuable digital assets, the need for neutral, auditable, and programmable storage rails becomes ever more urgent.

For those building the future of AI, the true breakthrough may not be a newer, smarter model, but the infrastructure that makes their outputs secure, portable, and operational at scale.

Walrus is positioned to be that backbone: a dependable, transparent layer that enables AI innovations to become real-world assets that are movable, tradable, and trusted for years to come.