Cryptocurrency users are all about speed. Faster blocks. Faster trades. Faster execution. But there is a lesser-known force behind every successful protocol, and it rarely gets the limelight: data quality.

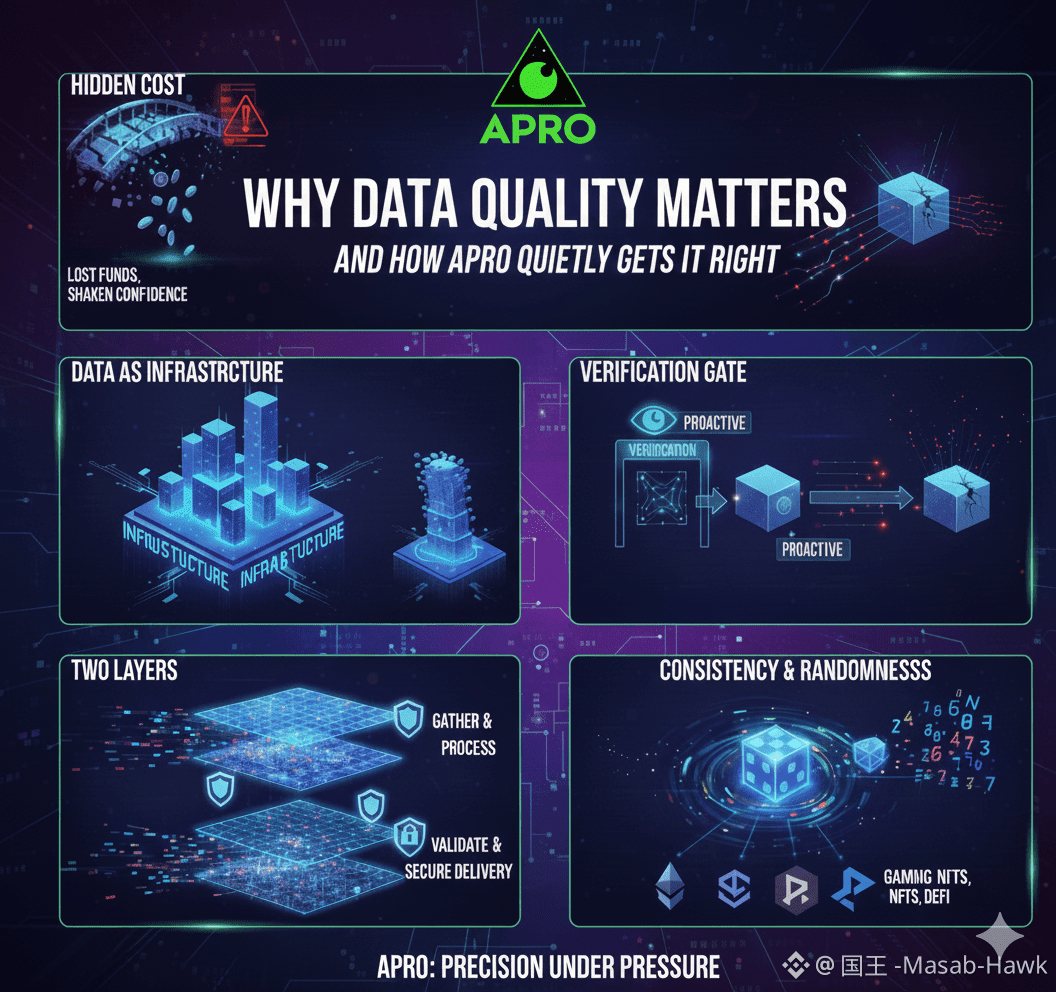

Bad data doesn’t fail loudly. It fails subtly. A slightly wrong price. A delayed update. And not just on the odd occasion. Then, all at once, everything turns: liquidations cascade, games stop being fair, and trust is eaten away. Most users never see the error, but they feel its effects.

This is the challenge that APRO set out to solve.

Not by being more vocal than other oracles. Not by promising miracles.

But by asking a more honest and mature question: what is reliable data in a decentralized world?

The Real Price of Good Enough Data:

In traditional systems, a central authority enforces data quality. In Web3, there is no such authority, and that is both the beauty and the risk.

Most blockchain applications still rely on data that is:

1) Fast, but not properly verified.

2) Decentralized, but inconsistent.

3) Cheap, yet vulnerable under stress.

These defects hide particularly well when markets are calm. During peak volatility or heavy traffic, however, poor data quality becomes very expensive. Not theoretically, but financially: protocols break and confidence is lost.

APRO addresses this as a structural problem and not a temporary inconvenience.

The Core Belief of APRO: Data Is Infrastructure:

APRO does not treat data as a mere input. It treats data as foundational infrastructure, just as roads keep cities moving and power stations keep economies running.

When the foundation is weak, everything built on top of it becomes unstable.

That philosophy shapes how APRO designs its system.

Where others keep optimizing for speed, APRO tries to keep improving precision under heavy load. It is not only about delivering data fast, but about delivering data that will be there when it is required.

Checking Without Being Late:

One of the least considered issues in oracle design is verification timing. Many systems verify data when it is already too late. APRO flips that logic.

By combining off-chain intelligence with on-chain validation, APRO validates data before it reaches the application. This minimizes the risk of corrupted inputs reaching smart contracts in real time.

AI checks do not replace decentralization; they strengthen it. Pattern recognition, anomaly detection and cross-source comparison can serve as early warnings.

Quiet, unobtrusive and efficient.

This is where data quality becomes proactive rather than reactive.
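
To make the idea of cross-source comparison concrete, here is a minimal Python sketch. It is not APRO's actual logic: the function name `aggregate_price`, the source names, the 2% deviation threshold and the minimum of three sources are all assumptions chosen for illustration. It shows one simple way to catch a bad quote before it is passed on: compare every source against the median and reject anything that strays too far.

```python
from statistics import median


def aggregate_price(quotes, max_deviation=0.02, min_sources=3):
    """Cross-check quotes from several sources before publishing one price.

    quotes: dict mapping a (hypothetical) source name to its reported price.
    max_deviation: assumed tolerance, as a fraction of the median.
    Returns (price, rejected_sources).
    """
    if not quotes:
        raise ValueError("no quotes supplied")

    reference = median(quotes.values())
    if reference <= 0:
        raise ValueError("reference price must be positive")

    # Keep only the sources that agree with the median within the tolerance.
    accepted = {
        source: price
        for source, price in quotes.items()
        if abs(price - reference) / reference <= max_deviation
    }
    rejected = sorted(set(quotes) - set(accepted))

    # Refuse to publish when too few sources agree with each other.
    if len(accepted) < min_sources:
        raise ValueError("not enough agreeing sources to publish a price")

    return median(accepted.values()), rejected


quotes = {"source-a": 100.0, "source-b": 100.5, "source-c": 99.8, "source-d": 120.0}
price, rejected = aggregate_price(quotes)
print(price, rejected)
```

With these sample quotes, `source-d` sits almost 20% away from the median and is dropped, while the remaining three sources agree closely enough to publish. A real system would likely add staleness checks and learned anomaly models on top of a filter like this.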

Two Layers, One Standard:

APRO uses a two-layer network architecture for one reason: separation of responsibility.

One layer is concerned with data collection and processing. The other is concerned with authenticating, verifying and safely transmitting that data to the chain. Proper screening keeps quick updates from going out untested, and the design removes the single point of failure. It’s not flashy. But it’s resilient.

Many systems are built for best-case scenarios. APRO is built to work in worst-case scenarios.
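
The separation of responsibility described above can be sketched in a few lines of Python. This is an illustration only, not APRO's protocol: the node names, keys and quorum size are hypothetical, and HMAC with shared keys stands in for the public-key signatures a real network would use. The first layer produces and attests to a report; the second layer forwards it only if enough known nodes attested to that exact payload.

```python
import hashlib
import hmac
import json

# Hypothetical node keys. A real network would use public-key signatures;
# HMAC with shared secrets is only a stand-in to keep the sketch short.
NODE_KEYS = {"node-a": b"key-a", "node-b": b"key-b", "node-c": b"key-c"}


def encode(report):
    """Serialize a report deterministically so every node signs the same bytes."""
    return json.dumps(report, sort_keys=True).encode()


def sign_report(node_id, report):
    """Layer 1: a collection node attests to the report it produced."""
    return hmac.new(NODE_KEYS[node_id], encode(report), hashlib.sha256).hexdigest()


def verify_and_forward(report, signatures, quorum=2):
    """Layer 2: accept the report only if a quorum of known nodes signed it."""
    payload = encode(report)
    valid = 0
    for node_id, signature in signatures.items():
        key = NODE_KEYS.get(node_id)
        if key is None:
            continue  # Unknown node: its attestation does not count.
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected, signature):
            valid += 1
    return valid >= quorum


report = {"pair": "BTC/USD", "price": 100.0}
signatures = {node: sign_report(node, report) for node in ("node-a", "node-b")}
print(verify_and_forward(report, signatures))
```

Because the second layer recomputes every attestation itself, a report that was altered after signing, or one backed by too few nodes, never reaches the chain.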

Randomness That Hides Nothing:

Data quality is not only about prices. It is also about unpredictability.

In gaming, NFTs and more complex DeFi strategies, randomness must be provable, not merely asserted.

APRO’s approach provides verifiable randomness and ensures that results are not manipulated, biased, or quietly predictable.

When users trust the randomness, they trust the system. And trust is the most precious thing in crypto.
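
What "provable, not merely asserted" can mean in practice is easiest to see with a small example. The Python sketch below uses a plain commit-reveal scheme, which is a simpler technique than the verifiable random functions production oracles typically rely on, and it is not a description of APRO's implementation. All function names are hypothetical. The provider commits to a secret seed in advance, the outcome is derived from that seed plus user-supplied entropy, and anyone can later check both.

```python
import hashlib
import hmac
import secrets


def commit(seed: bytes) -> str:
    """Publish this hash before the draw; it binds the provider to the seed."""
    return hashlib.sha256(seed).hexdigest()


def draw(seed: bytes, user_entropy: bytes, n_outcomes: int) -> int:
    """Derive the outcome from the provider's seed and the user's entropy."""
    digest = hashlib.sha256(seed + user_entropy).digest()
    # Modulo bias is negligible here because the digest is 256 bits wide.
    return int.from_bytes(digest, "big") % n_outcomes


def verify(commitment: str, seed: bytes, user_entropy: bytes,
           n_outcomes: int, claimed: int) -> bool:
    """Check that the revealed seed matches the commitment and the outcome."""
    seed_matches = hmac.compare_digest(commit(seed), commitment)
    return seed_matches and draw(seed, user_entropy, n_outcomes) == claimed


seed = secrets.token_bytes(32)          # provider's secret, fixed length
commitment = commit(seed)               # published before the user acts
roll = draw(seed, b"user-chosen-bytes", 6)
print(verify(commitment, seed, b"user-chosen-bytes", 6, roll))
```

The provider cannot change the seed after seeing the user's entropy without breaking the commitment, and the user cannot predict the result without knowing the seed. That is the property that lets a result be checked rather than taken on faith.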

Excellence Across Chains and Use Cases:

Another big enemy of data quality is fragmentation.

Different chains. Different standards. Various levels of reliability.

APRO addresses this by offering consistent data quality across more than 40 blockchain networks. Whether the data covers crypto assets, real-world markets or even gaming, the verification standards are the same.

That consistency matters more than one would guess. It lets developers double down on building without reconsidering their trust assumptions.

It lets users move between ecosystems without doubting the information underneath the experience.

Why This Is the Biggest Issue of All:

As Web3 matures, hype will not drive the next wave of adoption. Systems that just work will: quietly, dependably, and under stress.

APRO does not attempt to re-invent decentralization. It’s refining it.

By treating data quality as a first-class concern, APRO fosters an environment in which innovation need not fear its own foundations. Where builders can concentrate on ideas rather than damage control. And where users engage with applications that are stable, fair and reliable.

Final Thought:

Good information does not need to be noticed. It earns trust.

APRO is not about bold promises; it is about solid performance. In a market obsessed with speed, it reminds us that accuracy is what endures.

And quality never dilutes, in the long run.

@APRO Oracle $AT #APRO