In most conversations about oracles, speed gets treated as the main benchmark.

Faster updates. Lower latency. Quicker reactions.

On the surface, this makes sense. Smart contracts react to data, and delays feel risky. But this framing hides a deeper issue: speed only matters if the system delivering the data can be trusted when conditions are no longer normal.

This is where oracle architecture becomes more important than raw data speed.

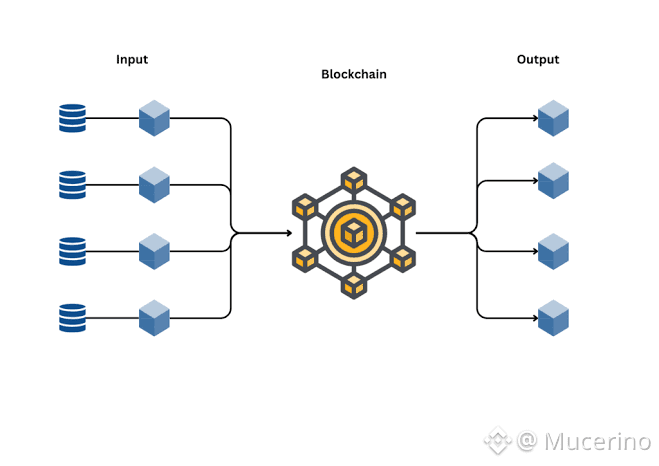

Oracles exist because blockchains are closed systems. They cannot see prices, events, or real-world states on their own. Once an oracle provides data, a smart contract may liquidate positions, settle derivatives, mint or burn assets, or trigger automated decisions that cannot be reversed. At that point, the cost of being wrong is often higher than the cost of being late by a few seconds.

Architecture determines whether an oracle can survive these moments.

A speed-first oracle optimizes for delivery time. But if that speed comes from relying on limited sources, weak aggregation logic, or fragile trust assumptions, it creates a system that performs well only in calm conditions. When markets move aggressively, when liquidity thins, or when incentives turn adversarial, that same system becomes a liability.

APRO approaches this problem from a different angle.

Instead of treating oracle output as “a number to be delivered fast,” APRO treats it as a decision pipeline. Data moves through stages: off-chain collection, multi-source aggregation, anomaly filtering, and verification before it ever becomes final on-chain truth. This design accepts a simple reality — real-world data is messy, probabilistic, and sometimes manipulated.
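To make the stages concrete, here is a minimal sketch of such a pipeline in Python. It is not APRO's actual implementation: the function name `aggregate`, the source names, and the `max_deviation` and `min_sources` thresholds are all assumptions chosen for illustration.

```python
from statistics import median

def aggregate(quotes, max_deviation=0.02, min_sources=3):
    """Return a filtered median price, or None if too few sources agree.

    quotes: mapping of (hypothetical) source name -> reported price.
    """
    # Collection check: refuse to answer from too few sources.
    if len(quotes) < min_sources:
        return None

    # Multi-source aggregation: the raw median is the reference point.
    mid = median(quotes.values())

    # Anomaly filtering: drop quotes too far from the raw median.
    kept = [p for p in quotes.values() if abs(p - mid) / mid <= max_deviation]

    # Verification: require a quorum of surviving sources before finalizing.
    if len(kept) < min_sources:
        return None
    return median(kept)

quotes = {"source_a": 100.1, "source_b": 99.9, "source_c": 100.0, "source_d": 140.0}
print(aggregate(quotes))  # 100.0, the 140.0 outlier is filtered out
```

The point of the sketch is the shape, not the numbers: the function can decline to produce a value at all, which a pure speed-first feed never does.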

Architecture is what allows APRO to handle that complexity.

By separating off-chain intelligence from on-chain finality, APRO reduces unnecessary blockchain load while preserving cryptographic accountability. Validators are not just messengers; they are economically responsible participants. When data is finalized, it carries not just a timestamp, but a cost to dishonesty.
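One way to picture "a cost to dishonesty" is a stake that shrinks when a validator's report strays from the finalized value. The sketch below is a generic staking-and-slashing illustration, not a description of APRO's actual rules; `Validator`, `settle_round`, `tolerance`, and `slash_fraction` are hypothetical names and parameters.

```python
from dataclasses import dataclass

@dataclass
class Validator:
    name: str
    stake: float  # value the validator has put at risk

def settle_round(validators, reports, finalized, tolerance=0.01, slash_fraction=0.1):
    """Slash validators whose report deviates from the finalized value.

    Returns the names of the validators that were slashed.
    """
    slashed = []
    for v in validators:
        deviation = abs(reports[v.name] - finalized) / finalized
        if deviation > tolerance:
            # Assumed penalty: lose a fixed fraction of the current stake.
            v.stake -= v.stake * slash_fraction
            slashed.append(v.name)
    return slashed

validators = [Validator("v1", 1000.0), Validator("v2", 1000.0)]
reports = {"v1": 100.0, "v2": 120.0}
slashed = settle_round(validators, reports, finalized=100.0)
print(slashed)  # ['v2']
```

Under these assumptions, reporting a bad value is no longer free: the validator that submitted 120.0 loses part of its stake, while the honest one is untouched.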

This matters because many oracle failures are not caused by slow updates. They come from bad assumptions: that one source is enough, that speed is safety, or that trust does not need enforcement.

In reality, speed can amplify mistakes.

A fast but fragile oracle propagates errors instantly. A slower but well-designed oracle absorbs noise, filters manipulation, and produces outputs that systems can rely on even when pressure is highest.
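A small, hypothetical example shows how this plays out for an irreversible action such as a liquidation. The prices, the `LIQUIDATION_PRICE` threshold, and the `should_liquidate` helper are invented for illustration.

```python
from statistics import median

LIQUIDATION_PRICE = 95.0  # hypothetical threshold for one position

def should_liquidate(price):
    """Return True if the reported price would trigger liquidation."""
    return price < LIQUIDATION_PRICE

# One venue briefly prints a manipulated low price; the others do not.
feeds = [100.2, 99.8, 100.0, 80.0]

fast_single_source = feeds[-1]  # speed-first: act on the newest tick alone
aggregated = median(feeds)      # slower: wait for every source, take the median

print(should_liquidate(fast_single_source))  # True
print(should_liquidate(aggregated))          # False
```

The single-source path liquidates a healthy position on one bad tick. The aggregated path pays a small delay and leaves the position alone.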

APRO’s architecture reflects this philosophy. Its support for different data delivery models, its layered validation process, and its incentive-driven security model are not optimizations for marketing metrics. They are design choices rooted in how systems fail in the real world.

As Web3 expands into areas like real-world assets, AI agents, prediction markets, and cross-chain coordination, the cost of incorrect data grows. These systems don’t just need information quickly — they need defensible truth.

In that context, oracle architecture becomes the foundation. Speed is just one parameter within it.

The long-term reliability of decentralized systems will not be decided by who delivers data first, but by who delivers data that holds up when conditions are worst.

That’s why oracle architecture matters more than data speed.