Traditional financial systems have clear concepts of who can own assets and make transactions. Individuals have bank accounts. Corporations have treasury accounts. Governments have central bank accounts. Each entity type has specific rules and permissions. AI agents don't fit into any of these categories.

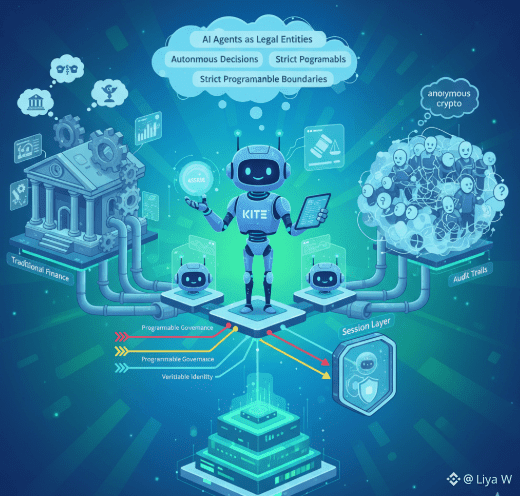

@GoKiteAI has essentially created a fourth category: AI agents as economic participants with their own rights and restrictions. An agent on Kite can hold assets in its own wallet, make autonomous spending decisions, and earn compensation for the services it provides. But unlike human account holders, who have broad discretion over their own funds, agents operate within strict programmable boundaries set by their deployers.

The identity structure on Kite mirrors legal frameworks around authority and liability. The user layer is like the principal in a legal relationship; the agent layer is like a limited power of attorney; and sessions are like specific authorized transactions. Each layer has a defined scope of authority that can be verified and enforced programmatically.
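To make the layering concrete, here is a minimal Python sketch of a user → agent → session delegation chain in which each layer can only receive a subset of its parent's authority. The class names, scope strings, and helper functions are hypothetical illustrations, not Kite's actual API, and the sketch assumes permissions can be modeled as simple sets of named scopes.

```python
from dataclasses import dataclass

# Hypothetical model of Kite's three identity layers; all names and scope
# strings are illustrative assumptions, not Kite's real interfaces.


@dataclass(frozen=True)
class User:
    user_id: str
    scopes: frozenset  # everything the principal is allowed to do


@dataclass(frozen=True)
class Agent:
    agent_id: str
    owner: User
    scopes: frozenset  # delegated subset, like a limited power of attorney


@dataclass(frozen=True)
class Session:
    session_id: str
    agent: Agent
    scopes: frozenset  # narrowest grant, covering one task


def delegate_agent(user: User, agent_id: str, scopes: set) -> Agent:
    # An agent may never receive authority its principal does not hold.
    if not frozenset(scopes) <= user.scopes:
        raise PermissionError("agent scopes exceed user authority")
    return Agent(agent_id, user, frozenset(scopes))


def open_session(agent: Agent, session_id: str, scopes: set) -> Session:
    # A session may never receive authority its agent does not hold.
    if not frozenset(scopes) <= agent.scopes:
        raise PermissionError("session scopes exceed agent authority")
    return Session(session_id, agent, frozenset(scopes))


def is_authorized(session: Session, action: str) -> bool:
    # The action must be permitted at every layer of the chain.
    return (
        action in session.scopes
        and action in session.agent.scopes
        and action in session.agent.owner.scopes
    )


alice = User("alice", frozenset({"pay:api", "pay:data", "sign:contract"}))
bot = delegate_agent(alice, "research-bot", {"pay:api", "pay:data"})
session = open_session(bot, "s-001", {"pay:api"})
print(is_authorized(session, "pay:api"))   # True
print(is_authorized(session, "pay:data"))  # False: not granted to this session
```

Checking the action against every layer, not just the session, reflects the idea that a session can never do more than its agent, and an agent never more than its user.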

Consider a real-world parallel: when you hire a lawyer, you may grant them power of attorney to act on your behalf within specific domains. They can sign certain documents and make certain decisions for you, but they can't sell your house or empty your bank account. Kite's agent permissions work similarly, except that the limits are enforced by code rather than by the legal system.

This matters because AI agents will increasingly need to operate in regulated environments. A healthcare AI managing patient appointments needs to comply with HIPAA. A financial AI executing trades needs to follow securities regulations. Kite's identity system creates audit trails showing exactly what each agent did and under whose authority.
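An audit trail like this can be sketched as an append-only, hash-chained log in which every entry names the user, agent, and session behind an action, so that editing any past entry breaks verification. This is a generic tamper-evidence technique written in Python for illustration; the field names are hypothetical, and on Kite itself the blockchain ledger would presumably play this role.

```python
import hashlib
import json

# Hypothetical append-only audit log; each entry commits to the previous one,
# so altering any recorded entry makes verification fail.

GENESIS = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, user: str, agent: str, session: str, action: str, amount: int) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"user": user, "agent": agent, "session": session,
                "action": action, "amount": amount, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def actions_by(self, agent: str) -> list[dict]:
        # Answers "what did this agent do, and under whose authority?"
        return [e for e in self.entries if e["agent"] == agent]


log = AuditLog()
log.record("alice", "research-bot", "s-001", "pay:api", 30)
log.record("alice", "research-bot", "s-002", "pay:data", 12)
print(log.verify())              # True
log.entries[0]["amount"] = 3000  # tamper with history
print(log.verify())              # False
```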

$KITE token governance will likely shape rules about agent behavior as the ecosystem matures. What activities require human approval? What transaction sizes can agents handle autonomously? How should agents identify themselves in interactions? These governance decisions will shape the norms for AI agent economic activity.
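As a purely hypothetical illustration of what such governance parameters might look like once voted on, the sketch below classifies an agent's action as autonomous, needing human approval, or rejected. The parameter names and threshold values are placeholders chosen for the example; nothing here reflects actual $KITE governance decisions.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical governance parameters; names and values are placeholders,
# not actual $KITE governance outcomes.


class Decision(Enum):
    AUTONOMOUS = "autonomous"
    NEEDS_HUMAN_APPROVAL = "needs_human_approval"
    REJECTED = "rejected"


@dataclass(frozen=True)
class GovernanceParams:
    autonomous_limit: int                 # largest amount an agent may move on its own
    hard_limit: int                       # nothing above this is allowed at all
    approval_required_actions: frozenset  # action types that always need a human


def classify(params: GovernanceParams, action: str, amount: int) -> Decision:
    if amount > params.hard_limit:
        return Decision.REJECTED
    if action in params.approval_required_actions or amount > params.autonomous_limit:
        return Decision.NEEDS_HUMAN_APPROVAL
    return Decision.AUTONOMOUS


params = GovernanceParams(
    autonomous_limit=100,
    hard_limit=10_000,
    approval_required_actions=frozenset({"transfer_ownership"}),
)
print(classify(params, "pay:api", 25).value)            # autonomous
print(classify(params, "pay:api", 500).value)           # needs_human_approval
print(classify(params, "pay:api", 20_000).value)        # rejected
print(classify(params, "transfer_ownership", 1).value)  # needs_human_approval
```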

Programmable governance on Kite works like a regulatory framework for agents. Just as corporations must follow corporate law and individuals must follow civil law, AI agents on Kite must follow programmed rules defined by their deployers and by network governance. That structure creates order in what could otherwise be chaotic autonomous agent activity.
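One way to picture deployer rules and network rules working together is as two lists of checks that a transaction must pass in full, with the network layer applying to everyone and the deployer layer narrowing it for a particular agent. The sketch below is a hypothetical Python illustration; the `Tx` type and the rule helpers are invented for the example and are not part of Kite.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical rule layering: network rules apply to every agent, deployer
# rules add tighter limits for one agent. A transaction must pass both.


@dataclass(frozen=True)
class Tx:
    agent_id: str
    recipient: str
    amount: int


# A rule returns None when the transaction passes, or a reason when it fails.
Rule = Callable[[Tx], Optional[str]]


def max_amount(limit: int) -> Rule:
    def rule(tx: Tx) -> Optional[str]:
        return None if tx.amount <= limit else f"amount {tx.amount} exceeds {limit}"
    return rule


def allowed_recipients(allowed: set) -> Rule:
    def rule(tx: Tx) -> Optional[str]:
        return None if tx.recipient in allowed else f"recipient {tx.recipient} not allowed"
    return rule


def violations(tx: Tx, network_rules: list, deployer_rules: list) -> list:
    # Collect every failure so the caller can see all reasons, not just the first.
    reasons = []
    for rule in [*network_rules, *deployer_rules]:
        reason = rule(tx)
        if reason is not None:
            reasons.append(reason)
    return reasons


network = [max_amount(10_000)]
deployer = [max_amount(500), allowed_recipients({"data-vendor", "compute-provider"})]
print(violations(Tx("research-bot", "data-vendor", 200), network, deployer))
# []
print(violations(Tx("research-bot", "unknown", 800), network, deployer))
# ['amount 800 exceeds 500', 'recipient unknown not allowed']
```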

The session layer is particularly clever from a liability perspective. Each agent interaction creates a limited session with specific permissions. If something goes wrong, the liability is contained to that session's scope, much as limited liability protects a company's shareholders from its debts beyond what they invested.
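The containment idea can be sketched as a session that carries its own spending cap and expiry: whatever happens inside the session, the worst-case loss is bounded by the cap, and the session stops working once it expires. This is a minimal Python sketch under the assumption that sessions are enforced this way; `SessionBudget` and its fields are hypothetical, not Kite's actual session format.

```python
import time
from dataclasses import dataclass
from typing import Optional

# Hypothetical session with a hard spending cap and an expiry time.
# Even a misbehaving agent cannot spend more than `cap` through this
# session, which bounds the loss.


@dataclass
class SessionBudget:
    session_id: str
    cap: int           # maximum total spend allowed in this session
    expires_at: float  # Unix timestamp after which the session is unusable
    spent: int = 0

    def spend(self, amount: int, now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        if now >= self.expires_at:
            raise PermissionError("session expired")
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.spent + amount > self.cap:
            raise PermissionError("session cap exceeded")
        self.spent += amount

    @property
    def remaining(self) -> int:
        return self.cap - self.spent


session = SessionBudget("s-001", cap=50, expires_at=time.time() + 600)
session.spend(30)
session.spend(20)
print(session.remaining)  # 0
try:
    session.spend(1)
except PermissionError as err:
    print(err)            # session cap exceeded
```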

Verifiable identity creates the accountability necessary for agents to participate in serious economic activity. In traditional finance, every transaction is traceable to a legal entity. On pseudonymous public blockchains, transactions are traceable to addresses but not necessarily to anyone accountable. Kite provides accountability through identity verification while keeping the efficiency and autonomy benefits of blockchain transactions.
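Verifiability here means a counterparty can check, without trusting the agent's word, that a payment was signed by the agent and that a user authorized the agent for that amount. The Python sketch below shows the shape of that check. It swaps in HMAC from the standard library as a stand-in for the public-key signatures a real chain would use (HMAC requires the verifier to hold the secret, which public-key schemes avoid), and the grant and transaction fields are hypothetical.

```python
import hashlib
import hmac
import json

# Illustrative only: HMAC stands in for public-key signatures. With real
# asymmetric keys, anyone could verify using public keys alone.


def sign(secret: bytes, payload: dict) -> str:
    message = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, payload: dict, signature: str) -> bool:
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(sign(secret, payload), signature)


def verify_delegated_tx(user_key: bytes, agent_key: bytes,
                        grant: dict, grant_sig: str,
                        tx: dict, tx_sig: str) -> bool:
    return (
        verify(user_key, grant, grant_sig)        # the user authorized this agent
        and verify(agent_key, tx, tx_sig)         # the agent signed this transaction
        and tx["agent"] == grant["agent"]         # same agent in both records
        and tx["amount"] <= grant["max_amount"]   # within the delegated limit
    )


user_key, agent_key = b"user-secret", b"agent-secret"  # hypothetical keys
grant = {"user": "alice", "agent": "research-bot", "max_amount": 100}
grant_sig = sign(user_key, grant)

tx = {"agent": "research-bot", "to": "data-vendor", "amount": 40}
print(verify_delegated_tx(user_key, agent_key, grant, grant_sig, tx, sign(agent_key, tx)))
# True

too_big = {**tx, "amount": 500}
print(verify_delegated_tx(user_key, agent_key, grant, grant_sig, too_big, sign(agent_key, too_big)))
# False
```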

Future legal frameworks around AI will probably need to address questions of agency, liability, and authority. Kite is building technical infrastructure that could become a template for how AI agents operate legally, establishing norms and systems now through working technology rather than waiting for slow-moving legal systems to catch up. #KITE @KITE AI $KITE #Kite