Most people still imagine decentralized storage the same way they did years ago:

a swarm of anonymous nodes, a token fee, and a vague hope that your data comes back when you ask for it.

That picture is incomplete and, honestly, outdated.

Walrus is quietly building something much closer to how the internet actually works. Not node-to-node chaos, but a layered system of services, operators, and incentives that normal applications can rely on without giving up verifiability.

This is the underrated idea: the service layer.

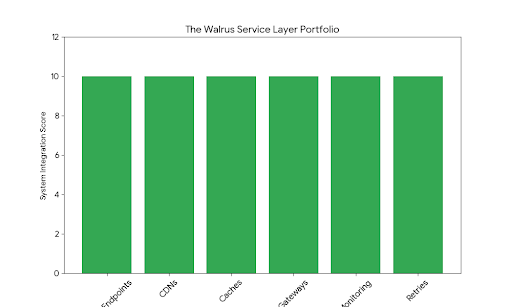

The internet is not peer-to-peer. It never was.

Your phone doesn’t talk directly to a raw server somewhere in isolation.

It talks to services.

Upload endpoints.

CDNs.

Caches.

Gateways.

Retries.

Monitoring.

People whose job it is to make sure things don’t break at 3 a.m.

Web2 feels fast and reliable because it’s wrapped in layers of professional operators who absorb complexity and surface simplicity.

Walrus doesn’t pretend this reality doesn’t exist.

It embraces it.

Web2 ease. Web3 truth.

Walrus is designed so applications don’t need to coordinate with dozens of storage nodes, manage encoding pipelines, or juggle certificates.

Instead, it relies on a permissionless operator market:

Publishers for ingestion

Aggregators for reconstruction

Caches for speed and scale

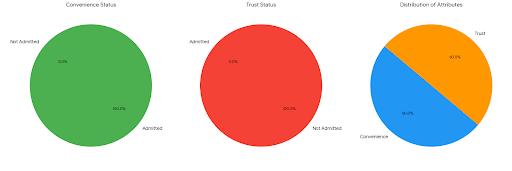

Apps get a familiar experience (upload, done) while users retain cryptographic proof that the job was completed correctly.

That tradeoff is the mark of mature infrastructure design.

Convenience is admitted.

Trust is not.

Publishers: professional uploads, verifiable results

A publisher in Walrus isn’t a trusted middleman.

It’s a specialist.

Publishers handle high-throughput uploads using normal Web2 tooling like HTTP, encryption, and batching. They fragment data, collect signatures from storage nodes, assemble certificates, and push the necessary commitments on-chain.
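What "fragment data and push commitments" means can be sketched in a few lines of Python. This is a toy under stated assumptions: Walrus uses its own erasure code and commitment scheme, while this sketch swaps in plain splitting and a SHA-256 Merkle root, and the names `fragment`, `merkle_root`, and `blob_commitment` are hypothetical. The point is only that one short fingerprint can commit to every fragment at once.

```python
from __future__ import annotations

import hashlib


def fragment(blob: bytes, k: int) -> list[bytes]:
    """Split a blob into k equal-size fragments, zero-padding the last one."""
    size = -(-len(blob) // k)  # ceiling division
    padded = blob.ljust(size * k, b"\x00")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def merkle_root(leaves: list[bytes]) -> bytes:
    """SHA-256 Merkle root; an odd node at any level is paired with itself."""
    level = [hashlib.sha256(leaf).digest() for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]


def blob_commitment(blob: bytes, k: int) -> bytes:
    """Also commit to the original length, so zero padding can't pass as data."""
    root = merkle_root(fragment(blob, k))
    return hashlib.sha256(len(blob).to_bytes(8, "big") + root).digest()
```

Changing any byte of any fragment changes the commitment, which is what lets a small on-chain record stand in for the publisher's word.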

Here’s the key:

the user doesn’t have to trust the publisher’s word.

On-chain evidence proves whether the publisher did its job — and whether the data can be correctly retrieved later.
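That on-chain evidence is essentially a quorum certificate: signed acknowledgements from enough storage nodes that they hold their fragments. The sketch below shows only the counting rule, assuming the common Byzantine setting of n = 3f + 1 nodes with a 2f + 1 quorum. It uses HMAC tags from Python's standard library as a stand-in for real signatures, which means this toy verifier needs each node's secret key; a real verifier checks public-key signatures instead. All names are hypothetical.

```python
from __future__ import annotations

import hashlib
import hmac


def sign(node_key: bytes, commitment: bytes) -> bytes:
    """Stand-in 'signature': an HMAC-SHA256 tag (real nodes use public-key signatures)."""
    return hmac.new(node_key, commitment, hashlib.sha256).digest()


def verify_certificate(
    commitment: bytes,
    certificate: dict[str, bytes],
    node_keys: dict[str, bytes],
    f: int,
) -> bool:
    """Accept only if at least 2f+1 distinct, known nodes produced valid tags."""
    valid = 0
    for node_id, tag in certificate.items():
        key = node_keys.get(node_id)  # unknown nodes are ignored
        if key is not None and hmac.compare_digest(sign(key, commitment), tag):
            valid += 1
    return valid >= 2 * f + 1
```

Because the certificate is keyed by node ID, one node cannot inflate the count by signing twice, and a single forged tag simply fails to count.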

This matters because most real products don’t want users wrestling with complex storage flows in a browser. They want simplicity. Walrus gives them that without abandoning verification.

Experts do the hard work.

Evidence stays public.

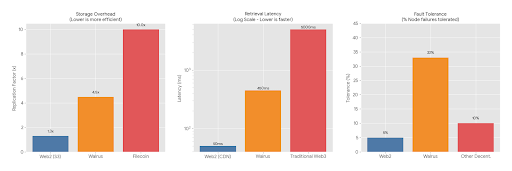

Aggregators and caches: a CDN with receipts

Reading from decentralized storage takes real work. Someone has to collect enough fragments, reconstruct the blob, and deliver it in a form applications understand.
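"Collect enough fragments, reconstruct the blob" is the core of erasure coding. As a deliberately tiny stand-in (not Walrus's actual code, which tolerates far more loss), a single XOR parity fragment lets an aggregator rebuild k data fragments from any k of the k + 1 stored pieces. The function names are hypothetical.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data_fragments: list[bytes]) -> list[bytes]:
    """Append one XOR parity fragment; all fragments must have equal length."""
    parity = reduce(xor_bytes, data_fragments)
    return data_fragments + [parity]


def reconstruct(fragments: list[bytes | None]) -> list[bytes]:
    """Recover the k data fragments when at most one of k+1 pieces is missing."""
    missing = [i for i, frag in enumerate(fragments) if frag is None]
    if len(missing) > 1:
        raise ValueError("not enough fragments to reconstruct")
    if missing:
        # The XOR of all k+1 pieces is zero, so the lost piece is the XOR of the rest.
        present = [frag for frag in fragments if frag is not None]
        fragments = list(fragments)
        fragments[missing[0]] = reduce(xor_bytes, present)
    return fragments[:-1]  # drop the parity fragment
```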

That’s the role of aggregators.

They reassemble data and serve it over standard interfaces like HTTP. On top of that, caches act like a decentralized CDN — reducing latency, spreading reconstruction cost across many users, and protecting storage nodes from unnecessary load.
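Spreading reconstruction cost across many users comes down to memoizing an expensive call. A minimal sketch, assuming a hypothetical `reconstruct(blob_id)` function that does the fragment fetching and decoding: an LRU cache in front of it means a hundred readers of a popular blob pay for one reconstruction, not a hundred.

```python
from __future__ import annotations

from collections import OrderedDict
from typing import Callable


class BlobCache:
    """Tiny LRU cache in front of an expensive reconstruct(blob_id) call."""

    def __init__(self, reconstruct: Callable[[str], bytes], capacity: int = 128):
        self._reconstruct = reconstruct
        self._capacity = capacity
        self._entries: OrderedDict[str, bytes] = OrderedDict()
        self.misses = 0  # number of times the expensive path actually ran

    def get(self, blob_id: str) -> bytes:
        if blob_id in self._entries:
            self._entries.move_to_end(blob_id)  # mark as recently used
            return self._entries[blob_id]
        self.misses += 1
        data = self._reconstruct(blob_id)  # expensive: fetch fragments and decode
        self._entries[blob_id] = data
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return data
```

On its own, this cache trusts whatever it stores; keeping it honest is a separate verification step on the reader's side.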

What makes this Walrus instead of Web2 cosplay is simple:

You can always verify the result.

Fast reads and cryptographic correctness are not mutually exclusive here. The cache can lie — but it can also be caught.
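Catching a lying cache only requires that the reader know what the right answer hashes to. A minimal sketch, assuming the blob ID is simply the hex SHA-256 of the content (Walrus derives blob IDs from its encoding commitment instead, so the real check differs in detail): the client hashes whatever an untrusted cache returns and falls back to another operator on a mismatch. The names are hypothetical.

```python
from __future__ import annotations

import hashlib
from typing import Callable, Iterable

Fetch = Callable[[str], bytes]


class VerificationError(Exception):
    """Raised when returned bytes do not match the requested blob ID."""


def verified_read(blob_id: str, fetch: Fetch) -> bytes:
    """Fetch from an untrusted source and accept the bytes only if they match blob_id."""
    data = fetch(blob_id)
    if hashlib.sha256(data).hexdigest() != blob_id:
        raise VerificationError(f"content does not match {blob_id}")
    return data


def read_from_any(blob_id: str, sources: Iterable[Fetch]) -> bytes:
    """Try operators in order; a caught lie just means moving on to the next one."""
    for fetch in sources:
        try:
            return verified_read(blob_id, fetch)
        except VerificationError:
            continue
    raise VerificationError("no source returned valid content")
```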

That changes everything.

From protocol to operator economy

Zoom out, and you see what Walrus is really doing.

It’s not just storing data.

It’s enabling businesses.

Ingest publishers specializing in regional throughput

Cache operators optimizing low-latency media delivery

Aggregators offering simple APIs for teams that don’t want to reassemble blobs themselves

Walrus doesn’t leave this vague. These roles are explicitly defined in the architecture.

That’s what makes something infrastructure.

Infrastructure has roles.

Roles have incentives.

Incentives create uptime.

And when uptime becomes someone’s profession, adoption stops being theoretical.

A normal developer experience, on purpose

Walrus openly supports Web2 interfaces.

Its documentation describes HTTP APIs for storing and reading data, quilt management, and publicly accessible endpoints. You can curl it. You can monitor it. You can ship with it quickly.
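For a sense of how small that surface is, here is a sketch that builds (but does not send) the two basic requests with Python's standard library. The paths `/v1/blobs` for a publisher upload and `/v1/blobs/<blob-id>` for an aggregator read, and the `epochs` query parameter, are assumptions based on recent Walrus documentation; check the docs for the version you target, since paths have changed between releases. The hostnames are placeholders and the function names are hypothetical.

```python
from __future__ import annotations

from urllib.parse import quote, urlencode
from urllib.request import Request


def store_request(publisher: str, data: bytes, epochs: int = 1) -> Request:
    """Build (not send) a PUT that uploads a blob through a publisher.

    Assumed endpoint: PUT <publisher>/v1/blobs?epochs=N
    """
    url = f"{publisher.rstrip('/')}/v1/blobs?{urlencode({'epochs': epochs})}"
    return Request(url, data=data, method="PUT")


def read_request(aggregator: str, blob_id: str) -> Request:
    """Build (not send) a GET that reads a blob through an aggregator.

    Assumed endpoint: GET <aggregator>/v1/blobs/<blob-id>
    """
    url = f"{aggregator.rstrip('/')}/v1/blobs/{quote(blob_id)}"
    return Request(url, method="GET")
```

Passing either request to `urllib.request.urlopen` sends it, much like `curl -X PUT --upload-file` for the upload or a plain `curl` for the read.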

This is huge.

Developers trust what they can test. A visible, instrumentable HTTP endpoint lowers the psychological barrier more than any whitepaper ever could.

Decentralization here is not a tax on usability.

It’s embedded into a workflow developers already understand.

Trust doesn’t stop at storage nodes

One of the more subtle and impressive aspects of Walrus is that it acknowledges reality:

clients can fail too.

Encoding might be done by end users, publishers, aggregators, or caches. Any of them can make mistakes, accidentally or otherwise.
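If the encoder is faulty, different readers could reconstruct different bytes from different subsets of fragments. One way to make every reader reach the same verdict, sketched here with a toy XOR-parity code and a list of per-fragment hashes as the commitment, is to re-encode whatever was decoded and compare the result with the commitment: a mismatch marks the blob itself as invalid, not just one read. Walrus's own handling of inconsistent encodings is more involved; these names are hypothetical.

```python
from __future__ import annotations

import hashlib
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: list[bytes]) -> list[bytes]:
    """Toy code: k data fragments plus one XOR parity fragment."""
    return data + [reduce(xor_bytes, data)]


def commit(fragments: list[bytes]) -> list[str]:
    """Toy commitment: the list of per-fragment SHA-256 hashes."""
    return [hashlib.sha256(frag).hexdigest() for frag in fragments]


def consistent_read(data_fragments: list[bytes], commitment: list[str]) -> list[bytes] | None:
    """Re-encode what was read and compare it with the published commitment.

    Returns the data if the encoding is consistent, or None (treat the blob as
    invalid) if the fragments disagree with what the encoder committed to.
    """
    if commit(encode(data_fragments)) != commitment:
        return None
    return data_fragments
```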

Walrus doesn’t assume perfect actors. It designs for imperfect ones.

That’s systems thinking.

That’s the difference between a protocol that demos well and one that survives contact with the real world.

Observability means seriousness

Real infrastructure lives or dies by monitoring.

Walrus already treats observability as a first-class concern: live network visualizations, node health, operator monitoring, community-visible tooling.

This isn’t hype.

This is how systems are actually run.

When monitoring becomes a shared responsibility, a network stops being “technology” and starts being operated.

And operated systems are the ones that last.

The quiet thesis

Walrus is not just decentralizing disk space.

It’s decentralizing the cloud pattern itself (uploads, reads, caching, APIs, monitoring, and the people who run them) while keeping verifiability as the anchor.

That combination is rare.

Some projects stay pure and unusable.

Others become usable and lose truth.

Walrus is trying to keep both.

That’s why optimism here isn’t about scale or hype.

It’s about intent: designing for how the internet really works.

Services.

Operators.

Performance.

Accountability.

That’s not generic.

That’s infrastructure.