Every smart contract is deterministic by design, but the world it tries to model is not. Prices move, events unfold, assets change hands, games resolve outcomes, and markets react in real time. None of this information exists natively on-chain. Blockchains can execute logic flawlessly, but they cannot observe reality on their own. That gap between deterministic code and unpredictable reality is where the oracle problem lives. And historically, it has been one of the most fragile points in the entire Web3 stack.

The failure modes are well known. A delayed price update liquidates healthy positions. A manipulated data feed drains a protocol. A centralized oracle becomes a single point of failure for billions in value. These are not edge cases; they are structural weaknesses. Oracles are not just data providers. They are the trust translators between the off-chain world and on-chain execution. When they fail, decentralization becomes irrelevant, because the system is already compromised at the input layer.

APRO approaches this problem from a fundamentally different angle. Instead of treating oracle design as a narrow technical problem ("how do we fetch prices cheaply?"), it treats it as a systems problem. How do you turn messy, probabilistic, real-world information into something that smart contracts can rely on with confidence? How do you do that at scale, across chains, asset classes, and use cases, without introducing new trust assumptions? APRO’s architecture is shaped by those questions, not by a single use case.

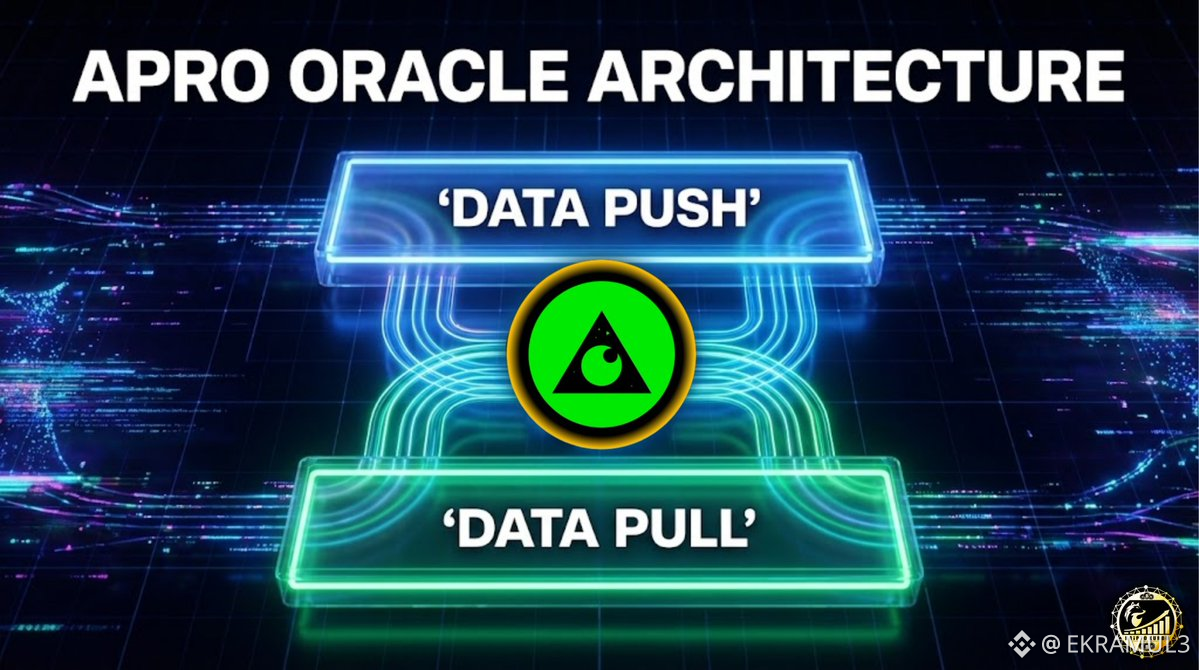

One of the most important insights behind APRO is that oracle workloads are not uniform. Some applications need constant updates. Others only need data at the moment of execution. Forcing all of them into the same delivery model creates unnecessary cost, latency, or risk. APRO solves this by natively supporting both push-based and pull-based data flows. In environments like DeFi trading or derivatives, data can be streamed continuously. In environments like insurance claims, governance decisions, or settlement logic, data can be requested only when required. This flexibility is not a feature add-on; it is core to how APRO reduces inefficiency without sacrificing correctness.
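To make the push versus pull distinction concrete, here is a minimal Python sketch. It is not APRO's API: the `PushFeed` and `PullFeed` classes, the `PricePoint` type, and the deviation-threshold and heartbeat parameters are all assumptions made for illustration. The deviation-plus-heartbeat rule is simply one common way push feeds decide when an update is worth publishing.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class PricePoint:
    symbol: str
    price: float
    timestamp: float  # seconds


class PushFeed:
    """Push model (hypothetical): subscribers are notified without asking."""

    def __init__(self, deviation_threshold: float, heartbeat_seconds: float):
        # Both parameters are illustrative assumptions, not APRO settings.
        self.deviation_threshold = deviation_threshold
        self.heartbeat_seconds = heartbeat_seconds
        self.last_published: Optional[PricePoint] = None
        self.subscribers: List[Callable[[PricePoint], None]] = []

    def subscribe(self, callback: Callable[[PricePoint], None]) -> None:
        self.subscribers.append(callback)

    def observe(self, point: PricePoint) -> bool:
        """Publish the first point, a large enough move, or an overdue update."""
        last = self.last_published
        if last is not None:
            moved = abs(point.price - last.price) / last.price >= self.deviation_threshold
            overdue = point.timestamp - last.timestamp >= self.heartbeat_seconds
            if not (moved or overdue):
                return False  # nothing worth paying to publish
        self.last_published = point
        for callback in self.subscribers:
            callback(point)
        return True


class PullFeed:
    """Pull model (hypothetical): data is fetched only when a consumer asks."""

    def __init__(self, fetch: Callable[[str], PricePoint]):
        self.fetch = fetch
        self.requests_served = 0

    def request(self, symbol: str) -> PricePoint:
        self.requests_served += 1
        return self.fetch(symbol)
```

A trading venue would subscribe to something like `PushFeed` and react to every published point, while a settlement contract would call something like `PullFeed.request` once, at the moment it executes. The cost profile follows from that: push pays for every update whether or not anyone uses it, pull pays only per request.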

APRO does things differently from most oracle networks. It doesn’t just lump data collection and data verification together. First, off-chain systems gather data from all over, clean it up, and weed out the junk. Then, once that’s done, the on-chain side steps in to check, lock in, and share the results for everyone to see and trust. Splitting the work like this actually matters a lot. Off-chain, you get speed and cheap computation. On-chain, you get the real muscle: trust and security, right where it counts.
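The split can be sketched in a few lines of Python. Everything here is an assumption for illustration, not APRO's implementation: the function names are hypothetical, the median is just one reasonable aggregation rule, and HMAC tags stand in for the asymmetric signatures a real network would use so that the example runs on the standard library alone.

```python
import hashlib
import hmac
import math
import statistics
from typing import Dict, List


def aggregate_off_chain(quotes: List[float]) -> float:
    """Off-chain step: drop unusable quotes, then take the median of the rest."""
    clean = [q for q in quotes if math.isfinite(q) and q > 0]
    if not clean:
        raise ValueError("no usable quotes")
    return statistics.median(clean)


def report_digest(symbol: str, price: float, timestamp: int) -> bytes:
    """Canonical hash of the report that every node attests to."""
    payload = f"{symbol}|{price:.8f}|{timestamp}".encode()
    return hashlib.sha256(payload).digest()


def attest(node_key: bytes, digest: bytes) -> bytes:
    """Stand-in for a node signature (assumption: HMAC instead of real signatures)."""
    return hmac.new(node_key, digest, hashlib.sha256).digest()


def verify_on_chain(
    digest: bytes,
    attestations: Dict[str, bytes],
    node_keys: Dict[str, bytes],
    quorum: int,
) -> bool:
    """On-chain step: accept the report only if enough known nodes attested to it."""
    valid = sum(
        1
        for node_id, tag in attestations.items()
        if node_id in node_keys
        and hmac.compare_digest(tag, attest(node_keys[node_id], digest))
    )
    return valid >= quorum
```

The expensive, flexible work (collecting and cleaning quotes) happens in `aggregate_off_chain`, where computation is cheap. The on-chain side only does a small, fixed amount of checking, which is the part that needs to be publicly verifiable.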

But honestly, what really puts APRO in its own league is the way it handles data quality. Instead of pretending every piece of incoming data is trustworthy, APRO uses AI to actually check it: spotting weird patterns, catching mistakes, and flagging suspicious stuff before it ever touches a smart contract. This isn’t just a small upgrade. It flips oracle security on its head. APRO isn’t just sitting around waiting for bad data to cause trouble. It’s working to stop bad data from getting in at all. That matters more and more as oracles start dealing with way more complicated information than just prices.
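The article does not say which models APRO uses for this, so the sketch below swaps in a much simpler technique to show the general idea of screening data before it reaches a contract: a robust outlier check based on the median absolute deviation (MAD). The function name `flag_anomalies` and the 3.5 threshold are assumptions for illustration only.

```python
import statistics
from typing import List


def flag_anomalies(values: List[float], threshold: float = 3.5) -> List[bool]:
    """Return True for each value that looks like an outlier.

    Uses the modified z-score: 0.6745 * |x - median| / MAD.
    This is a simple statistical stand-in, not an AI model.
    """
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        # More than half the values are identical; anything different stands out.
        return [v != median for v in values]
    return [0.6745 * abs(v - median) / mad > threshold for v in values]


# Example: five sources agree on roughly 100, one reports 250.
quotes = [100.0, 100.2, 99.9, 100.1, 250.0, 100.0]
flags = flag_anomalies(quotes)
accepted = [q for q, bad in zip(quotes, flags) if not bad]
```

Here `accepted` keeps the five quotes near 100 and drops the 250 reading. A production system would look at far richer signals (source history, cross-market consistency, timing), but the principle is the same: suspicious inputs are filtered before aggregation, not discovered after a liquidation.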

Randomness is another area where this philosophy shows. Many applications require outcomes that are both unpredictable and provably fair: gaming, lotteries, NFT minting, and selection mechanisms among them. Centralized randomness breaks trust. Naive on-chain randomness breaks security. APRO provides verifiable randomness that can be audited after the fact without revealing the outcome in advance. This turns randomness into an enforceable primitive rather than a hand-waved assumption.
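One simple way to get "auditable later, hidden now" is a hash-based commit and reveal, sketched below. This is not APRO's scheme, which the article does not describe; production systems typically use a verifiable random function (VRF) with public-key proofs. The function names and the `request_id` parameter are hypothetical.

```python
import hashlib


def commit(seed: bytes) -> str:
    """Published before the draw: binds the provider to a seed without revealing it."""
    return hashlib.sha256(seed).hexdigest()


def derive_outcome(seed: bytes, request_id: bytes, num_outcomes: int) -> int:
    """Deterministically map the seed and a public request id to an outcome.

    The modulo introduces a negligible bias for small num_outcomes,
    which is acceptable for a sketch.
    """
    digest = hashlib.sha256(seed + b"|" + request_id).digest()
    return int.from_bytes(digest, "big") % num_outcomes


def audit(
    commitment: str,
    revealed_seed: bytes,
    request_id: bytes,
    num_outcomes: int,
    claimed_outcome: int,
) -> bool:
    """After the reveal, anyone can check the seed and recompute the outcome."""
    if commit(revealed_seed) != commitment:
        return False  # the provider swapped seeds after committing
    return derive_outcome(revealed_seed, request_id, num_outcomes) == claimed_outcome
```

The provider would generate the seed with something like `secrets.token_bytes(32)`, publish `commit(seed)` up front, and reveal the seed only after bets or mints are locked in. The known weakness of plain commit and reveal is that the provider can refuse to reveal an unfavorable seed, which is one reason real deployments favor VRFs.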

APRO gets that Web3 isn’t living on just one chain anymore. These days, protocols jump between dozens of networks, sometimes all at once. Keeping data straight across all those places? That’s a big deal. APRO already works with tons of chains, so developers don’t have to mess with patching together a bunch of clunky, separate tools. It keeps things simple and cuts down on the headaches and risks that come with juggling data across different chains. That’s becoming more important as everything in Web3 gets more connected.

But APRO isn’t just about crypto data. It also brings in stuff like equity prices, real estate signals, gaming stats, and other real-world info. Tokenization is pulling more of this into Web3, and APRO’s ready for it. This isn’t just ticking boxes for coverage; it’s about making sure the oracle layer can really handle what’s coming next. As more real-world value moves on-chain, oracles need to do more than fetch numbers. They have to manage tricky details, verify sources, and understand context. APRO’s built for all that.

What makes APRO compelling long term is not any single feature, but the coherence of its design. Flexibility without chaos. Verification without bottlenecks. Decentralization without blind trust. The protocol is not optimized for headlines or short-term narratives. It is optimized for being correct under stress: when markets move fast, when value is high, and when mistakes are expensive.

In the end, the oracle problem is not solved by being faster, cheaper, or more decentralized in isolation. It is solved by making trust measurable, enforceable, and adaptable to different realities. APRO represents a step in that direction. By treating data as infrastructure rather than an afterthought, it helps smart contracts move from guessing about the world to actually knowing enough to act safely within it.

As Web3 matures, applications will not fail because their logic is wrong. They will fail because their inputs are. Or they will succeed because those inputs are finally worthy of the value they secure. In that future, oracle networks like APRO will not be optional components at the edge of the stack. They will be part of its foundation.