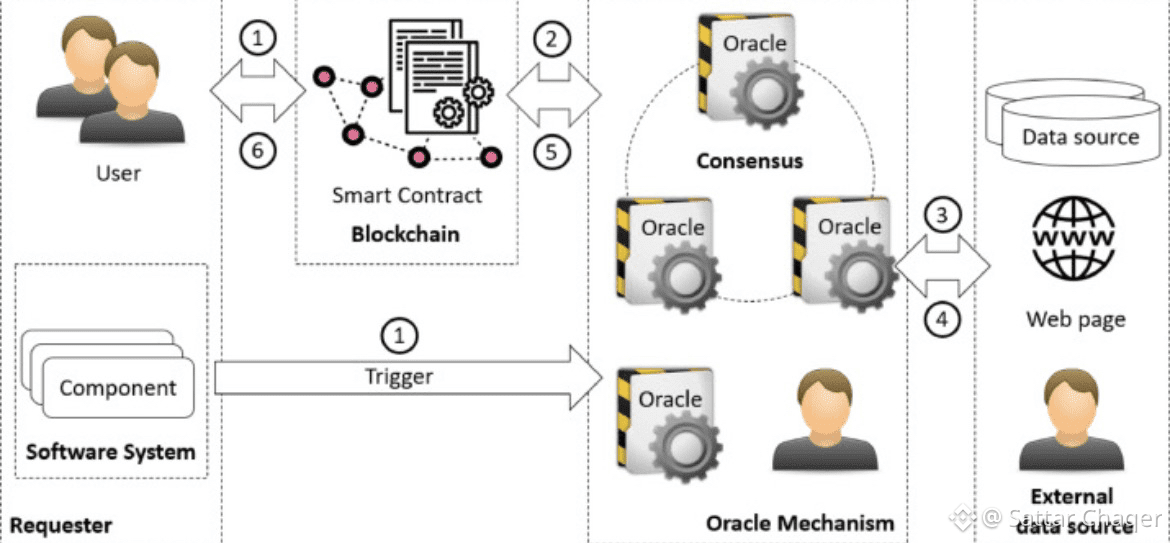

Oracles are usually discussed as technical components. They deliver prices, metrics, or external data to smart contracts, and their quality is often measured by speed or uptime. When failures occur, attention turns to feeds, latency, or infrastructure performance. This focus misses the deeper issue.

Oracle reliability is not only about data quality. It is about governance.

Many oracle failures do not happen because data is unavailable. They happen because decision authority is unclear: which sources matter during stress, when updates should be delayed, and how conflicting signals are resolved. These are not purely technical questions. They are governance questions expressed through code.
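Those three questions can be written down as explicit rules instead of being left implicit in the aggregation code. The following is a minimal sketch, assuming a simple median aggregator over a fixed set of sources; `Report`, `Policy`, `Decision`, `decide`, and every threshold in it are hypothetical names chosen for illustration, not APRO's interface or any specific oracle network's API.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional

# Hypothetical types for illustration; not APRO's or any oracle network's API.
@dataclass(frozen=True)
class Report:
    source: str
    price: float
    timestamp: float  # seconds since epoch

@dataclass(frozen=True)
class Policy:
    trusted_sources: frozenset[str]  # which sources matter at all
    min_sources: int                 # quorum of fresh, trusted reports needed to publish
    max_age_s: float                 # reports older than this are ignored
    max_spread: float                # (max - min) / median above which sources "conflict"

@dataclass(frozen=True)
class Decision:
    action: str                      # "publish" or "hold"
    price: Optional[float]
    reason: str

def decide(reports: list[Report], policy: Policy, now: float) -> Decision:
    # Which sources matter: drop anything outside the policy's source set.
    eligible = [r for r in reports if r.source in policy.trusted_sources]
    # When to delay: stale data does not count toward the quorum.
    fresh = [r for r in eligible if now - r.timestamp <= policy.max_age_s]
    if len(fresh) < policy.min_sources:
        return Decision("hold", None, f"quorum not met: {len(fresh)}/{policy.min_sources}")
    prices = [r.price for r in fresh]
    mid = median(prices)
    if mid <= 0:
        return Decision("hold", None, "non-positive median")
    # How conflicts are resolved: wide disagreement delays the update
    # instead of averaging through it.
    spread = (max(prices) - min(prices)) / mid
    if spread > policy.max_spread:
        return Decision("hold", None, f"sources conflict: spread {spread:.2%}")
    return Decision("publish", mid, "ok")

policy = Policy(frozenset({"a", "b", "c"}), min_sources=2, max_age_s=60.0, max_spread=0.02)
calm = [Report("a", 100.0, 990.0), Report("b", 100.5, 995.0), Report("c", 99.8, 998.0)]
stressed = [Report("a", 100.0, 990.0), Report("b", 91.0, 995.0), Report("c", 100.2, 900.0)]
print(decide(calm, policy, now=1000.0))
print(decide(stressed, policy, now=1000.0))
```

With this example policy, three fresh, agreeing reports publish their median of 100.0. In the stressed case one source is stale and the remaining two disagree by roughly 9%, so the function holds instead of publishing a midpoint of 95.5. Whether holding is safer than publishing depends on the consuming protocol; choosing it deliberately is the governance decision the text describes.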

During calm market conditions, governance weaknesses remain invisible. Data arrives on time. Aggregation works. Contracts execute as expected. Under stress, however, the absence of explicit decision rules becomes costly. Systems continue to operate, but on assumptions that no longer hold.

Reliable oracle systems define not only how data is fetched, but how it is evaluated. They acknowledge that correctness is contextual. A price that is accurate in isolation may be dangerous if delivered without confidence bounds or verification under abnormal conditions.
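To illustrate what confidence bounds can mean in practice, here is a minimal sketch, assuming the oracle attaches a spread estimate to each aggregated value and the consumer refuses to act when that spread is too wide. It uses the median absolute deviation (MAD) as a simple, outlier-resistant stand-in for a confidence interval rather than a formal statistical one; `BoundedPrice`, `with_confidence`, `safe_to_use`, and the 0.5% threshold are hypothetical.

```python
from dataclasses import dataclass
from statistics import median

# Hypothetical type for illustration: a value plus an uncertainty half-width.
@dataclass(frozen=True)
class BoundedPrice:
    value: float       # point estimate: median of source prices
    confidence: float  # half-width: median absolute deviation (MAD) of source prices

def with_confidence(prices: list[float]) -> BoundedPrice:
    # MAD is a robust dispersion estimate: a single outlier cannot inflate it much.
    mid = median(prices)
    mad = median([abs(p - mid) for p in prices])
    return BoundedPrice(value=mid, confidence=mad)

def safe_to_use(p: BoundedPrice, max_relative_width: float) -> bool:
    # Consumer-side rule: act only if uncertainty is small relative to the value.
    return p.value > 0 and p.confidence / p.value <= max_relative_width

calm = with_confidence([100.0, 100.2, 99.9, 100.1, 100.0])
stressed = with_confidence([100.0, 92.0, 108.0, 99.0, 85.0])
print(calm, safe_to_use(calm, 0.005))
print(stressed, safe_to_use(stressed, 0.005))
```

In the calm case the sources agree to within about 0.1, so the value is usable. In the stressed case the median is still a plausible-looking 99.0, but a MAD of 7.0 shows the sources disagree, and the consumer declines to act. The point estimate alone would not reveal that difference.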

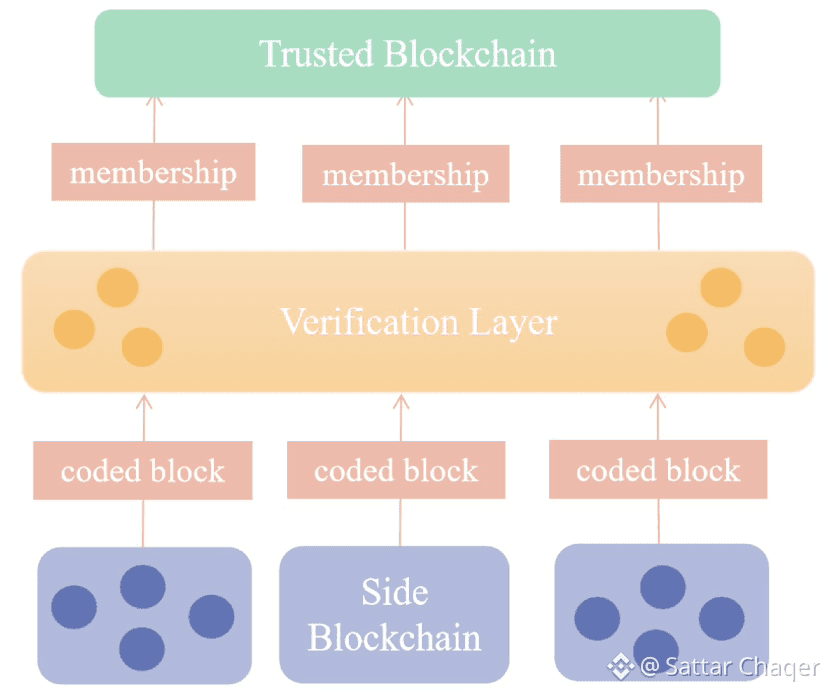

This is the premise behind APRO's approach: it treats oracle design as a governance surface. Verification layers, redundancy, and decision logic are used to control how data influences execution, not just how quickly it arrives. Data must pass through these checks before it can trigger any on-chain action.

Speed is easy to optimize. Judgment is harder. The oracles that survive volatile conditions are not necessarily the fastest ones; they are the ones whose rules remain enforceable when assumptions break.

Data reliability emerges from governance clarity, not raw throughput.