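After digging into APRO (AT)'s technical architecture, market dynamics, and ecosystem potential, I realized there is a fundamental issue that market narratives often obscure: the ultimate value of any oracle depends not on how fast it transmits data or how many chains it supports, but on whether the data sources it carries are trustworthy, attack-resistant, and sustainable.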

Today, I want to look below the protocol layer at the data source network APRO is trying to build, and analyze its layout, its challenges, and its real moats on this hidden battlefield.

1. The Dilemma of Data Sources: The 'Achilles' Heel' of the Oracle Track

Oracles are often hailed as the 'data bridges' of blockchain, but the starting point of this bridge, the data source, is often the weakest link. Traditional oracle networks rely heavily on a small number of centralized premium data vendors (such as Bloomberg and Reuters) or on APIs from mainstream exchanges. This creates three risks:

1. Single Point of Failure Risk: If a core data source is tampered with or interrupted, it may contaminate the entire network.

2. Cost and Licensing Risks: Professional data is expensive, and suppliers may change licensing terms or prohibit cryptocurrency-related use at any time.

3. Limited Coverage: Traditional data sources do not sufficiently cover long-tail, non-standard information such as company financial reports, legal documents, and IoT sensor data required for RWA.

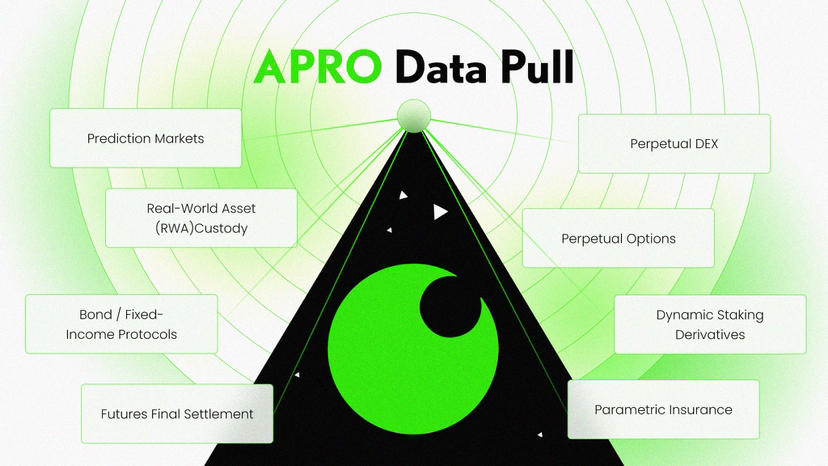

APRO claims to have integrated over 1,400 data sources, and its ambition is clearly to build a more redundant and decentralized data source matrix. But this raises a core question: how good are the quality, the degree of decentralization, and the manipulation resistance of these data sources?

2. APRO's Solution: Mixed Data Sources and AI-Augmented Verification

According to its technical documentation, APRO has not discarded the traditional approach entirely; instead, it has adopted a pragmatic 'hybrid enhancement' strategy.

First Layer: Broad Coverage and Cost Optimization. By aggregating a large number of publicly available, low-cost data sources (such as prices from major exchanges, publicly available government data, satellite imagery, etc.), a foundational real-time information network is constructed. Its 'pull model' allows data to be obtained on demand, aiming to reduce the cost of using long-tail data.

Second Layer: AI-Powered Quality Filtering and Generation. This is APRO's key innovation. Its AI oracle engine not only transmits data but also takes on the role of 'primary validator'. For example:

Consistency Verification: When a Twitter account claims that a significant event has occurred at a company, AI can quickly scrape and cross-reference multiple independent sources such as news websites, official announcements, and stock price movements to assess the credibility of that information before submitting it to the node network for final consensus.

Unstructured Data Parsing: For legal contracts in RWA scenarios, AI conducts preliminary clause extraction and digitization of key information (such as amounts, dates, parties), transforming difficult-to-handle 'documents' into verifiable 'structured data points' on the blockchain.
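To make the 'document to structured data points' idea concrete, here is a minimal sketch. It is not APRO's pipeline: the source describes an AI model doing this extraction, while the sketch swaps in plain regular expressions as a stand-in. The `ContractFields` type, the `extract_fields` function, the patterns, and the sample contract text are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class ContractFields:
    """Structured data points pulled out of free-form contract text."""
    amounts: List[str]
    dates: List[str]
    parties: List[str]


# Hypothetical patterns; a real system would need far more robust parsing.
AMOUNT_RE = re.compile(r"(?:USD|\$)\s?\d[\d,]*(?:\.\d+)?")
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
PARTY_RE = re.compile(
    r"\bbetween\s+(.+?)\s+and\s+(.+?)(?=[,.;]|\s+dated\b)", re.IGNORECASE
)


def extract_fields(text: str) -> ContractFields:
    """Extract amounts, ISO dates, and the two contracting parties."""
    amounts = AMOUNT_RE.findall(text)
    dates = DATE_RE.findall(text)
    parties: List[str] = []
    match = PARTY_RE.search(text)
    if match:
        parties = [match.group(1).strip(), match.group(2).strip()]
    return ContractFields(amounts=amounts, dates=dates, parties=parties)


# Made-up sample text, for illustration only.
sample = (
    "This loan agreement is made between Acme Holdings Ltd and "
    "Beta Capital LLC, dated 2024-03-15. The principal is "
    "USD 1,250,000.00, due on 2025-03-15."
)

fields = extract_fields(sample)
print(fields)
```

The point of the sketch is the output shape: once amounts, dates, and parties are discrete fields, independent nodes can check each one, which is much harder to do with a raw PDF.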

The goal of this mechanism is to shift data source risk from 'the credibility of a single supplier' to 'multi-source cross-validation and algorithm reliability'. The AT token incentivizes two key roles: data source nodes (which provide raw data) and verification nodes (which run AI models and participate in consensus).
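The multi-source cross-validation idea can be sketched in a few lines. This is an illustration under stated assumptions, not APRO's actual algorithm: the `cross_validate` function, the 2% deviation threshold, the quorum of three, and the source names and prices are all hypothetical.

```python
from statistics import median
from typing import Dict, Optional


def cross_validate(
    reports: Dict[str, float],
    max_deviation: float = 0.02,  # assumed tolerance: 2% around the median
    min_agreeing: int = 3,        # assumed quorum of agreeing sources
) -> Optional[float]:
    """Return a value only if enough independent sources agree on it.

    Sources that stray too far from the median are discarded; if fewer
    than `min_agreeing` sources remain, nothing is reported.
    """
    if len(reports) < min_agreeing:
        return None
    mid = median(reports.values())
    if mid == 0:
        return None  # relative deviation is undefined around zero
    agreeing = [
        value
        for value in reports.values()
        if abs(value - mid) / abs(mid) <= max_deviation
    ]
    if len(agreeing) < min_agreeing:
        return None
    return median(agreeing)


# Hypothetical reports: three sources agree, one is manipulated or broken.
reports = {
    "exchange_a": 100.0,
    "exchange_b": 100.4,
    "exchange_c": 99.8,
    "exchange_d": 135.0,
}

print(cross_validate(reports))  # 100.0, the outlier is ignored
```

With this design, a single compromised source cannot move the result; an attacker has to corrupt enough sources to shift the median. That is exactly why the independence of the underlying sources matters more than their raw count.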

3. The Hidden Battlefield: The Paradox of Decentralized Data Sources and Concentrated Token Distribution

There is, however, a profound paradox here, and it is a core challenge that APRO must face. A truly robust decentralized oracle needs its underlying data source providers to be as decentralized as possible. Yet the current distribution of AT tokens is highly concentrated, with the top five addresses controlling up to 73.5% of the circulating supply. This concentration may erode the decentralization of data sources in two ways:

1. Concentrated Governance Power: If future key governance decisions regarding which data sources to add or remove, how to adjust AI model parameters, etc., are voted on by AT holders, then the will of the whales will dominate the composition of data sources, potentially introducing bias or creating single points of failure.

2. Node Centralization Risk: Running data source nodes and AI verification nodes requires technical capability and capital. Whales have both the ability and the motivation to operate large-scale node clusters, which may leave the network controlled by a few entities. That would be a return to the old model of centralized data suppliers, only with a blockchain veneer.
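To put a number on this kind of concentration, here is a small sketch of two common measures: the share held by the top k addresses, and a Nakamoto-style coefficient (the smallest number of holders that together exceed a threshold). The balances below are hypothetical, chosen only so that the top five addresses hold 73.5% as cited above; they are not real on-chain data, and the function names are my own.

```python
from typing import List


def top_k_share(balances: List[float], k: int) -> float:
    """Fraction of total supply held by the k largest holders."""
    total = sum(balances)
    if total <= 0:
        raise ValueError("total supply must be positive")
    return sum(sorted(balances, reverse=True)[:k]) / total


def nakamoto_coefficient(balances: List[float], threshold: float = 0.5) -> int:
    """Smallest number of holders whose combined share exceeds `threshold`."""
    total = sum(balances)
    if total <= 0:
        raise ValueError("total supply must be positive")
    running = 0.0
    for count, balance in enumerate(sorted(balances, reverse=True), start=1):
        running += balance
        if running / total > threshold:
            return count
    return len(balances)


# Hypothetical distribution: five whales plus 53 small holders (total 1000).
balances = [300, 200, 120, 70, 45] + [5] * 53

print(f"top-5 share: {top_k_share(balances, 5):.1%}")
print(f"holders needed for >50%: {nakamoto_coefficient(balances)}")
```

In this made-up distribution, three addresses are enough to pass any simple majority vote. The same two functions can be applied to node counts or staked amounts per operator to track the node centralization risk described above.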

Although the project has received backing from top institutions such as Polychain Capital and Franklin Templeton, which signals capital's recognition of its technology, that backing does not directly address the decentralization challenge at the data source layer. An anonymous team may help keep the focus on development in the early stages, but in the long run, the lack of an accountable entity to handle legal and compliance disputes arising from data sources may also become a hidden danger.

4. Future Outlook: Building a Hard-to-Replicate Data Ecosystem

APRO's long-term moat is therefore not whether it connects to 40 chains, but whether it can build a unique, hard-to-replicate, and sustainably growing ecosystem of data sources. I believe its future development will revolve around the following axes:

Vertical Deep Dives: In the two core tracks of RWA and AI, establish direct strategic cooperation with authoritative data providers (such as accounting firms, law firms, and official institutions), even incorporating these traditional 'trust anchors' into the network through node incentives to obtain exclusive or high-fidelity data channels.

Incentivizing Innovation: Design more refined token economic models that not only reward verification nodes but also heavily reward contributors who provide unique, high-value, manipulation-resistant data sources. Under such a model, nodes that supply agricultural pricing data from remote areas or specific supply chain logistics information would also earn generous rewards.

Community Governance Evolution: Mechanisms must be designed (such as gradually releasing governance power to a broader range of node operators or introducing reputation systems) so that concentrated token holdings do not monopolize data source governance. This is key to achieving its vision of 'trusted data democratization'.

Summary

For me, the ultimate question in evaluating APRO (AT) is no longer 'Is its technology advanced?', but rather 'Is its network of data sources robust, diverse, and decentralized enough?'

Data sources are the 'water sources' of the digital world; whoever controls the water source controls every downstream application. The value of AT will ultimately be anchored to the uniqueness and tamper resistance of the data sources it connects.

Investors should closely monitor not just how many blockchains it has integrated, but also which high-quality data partners it has added, how many data source nodes there are and how widely they are distributed geographically, and whether killer applications built on APRO-exclusive data sources have emerged.

If APRO can successfully weave hundreds of dispersed, trustworthy data sources into a resilient network, then AT will capture the value growth of that network.

Otherwise, it may be just another transmission channel, stuck in a fiercely competitive red ocean. This battle for data sources is the hidden main battlefield that decides whether oracle projects live or die.