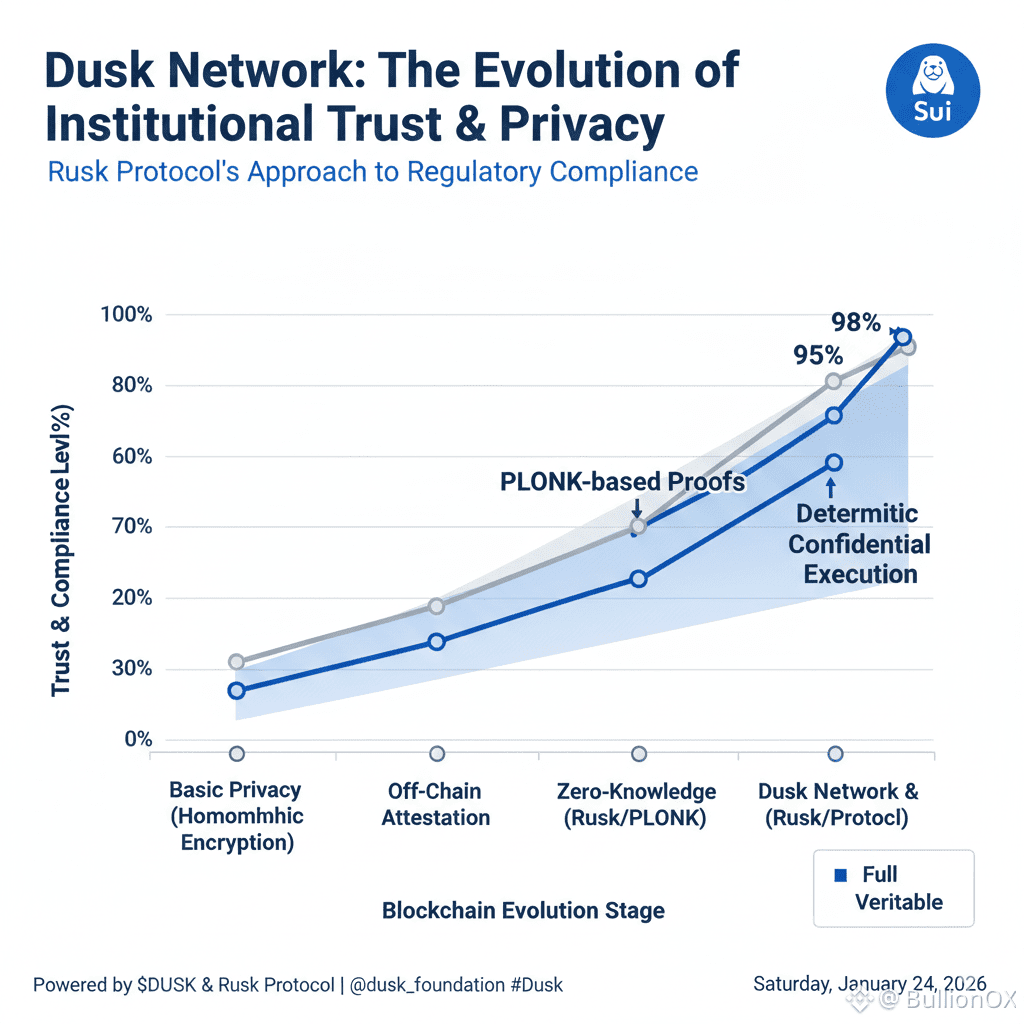

When I first read about how blockchains can meet institutional demands, the Rusk protocol of @Dusk struck me as a compromise between regulation and privacy. Rusk is the network's state transition function: it processes smart contracts and transactions in a way that preserves data privacy while still allowing verifiable checks. Regulated finance needs privacy so that sensitive data such as trading strategies is not exposed, yet compliance still has to be checked. Rusk resolves this tension with zero-knowledge proofs, which show that everything is correct while revealing nothing.

Rusk makes state transitions deterministic, i.e. the same logic produces the same result every time it runs, even when the data is confidential. For regulators, this means they can request proof that transactions followed rules such as KYC or transaction limits without seeing the underlying data. The design is aimed at long-term usefulness for institutions: privacy guards against leaks, and verification is the source of trust.
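To make "deterministic state transition" concrete, here is a minimal sketch in Python. It is not Rusk's actual code or API: the names `state_root` and `apply_transfer`, the account-balance model, and the use of SHA-256 over JSON are all assumptions chosen for illustration. The point is only that a pure transition function yields the same state root on every node that replays the same transaction.

```python
import hashlib
import json


def state_root(state: dict) -> str:
    """Hash a canonical (sorted-key) JSON encoding of the state."""
    encoded = json.dumps(state, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def apply_transfer(state: dict, tx: dict) -> dict:
    """Pure transition: returns a new state and never mutates the input."""
    sender, receiver, amount = tx["from"], tx["to"], tx["amount"]
    if amount <= 0 or state.get(sender, 0) < amount:
        raise ValueError("invalid transfer")
    new_state = dict(state)
    new_state[sender] = new_state[sender] - amount
    new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


# Hypothetical toy ledger and transaction, for illustration only.
genesis = {"alice": 100, "bob": 20}
tx = {"from": "alice", "to": "bob", "amount": 30}

# Two independent replays of the same transaction agree on the root.
root_a = state_root(apply_transfer(genesis, tx))
root_b = state_root(apply_transfer(genesis, tx))
```

Because `apply_transfer` depends only on its inputs, anyone replaying the transaction later, for example during an audit, should arrive at the same root.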

How Rusk Handles Confidential Execution

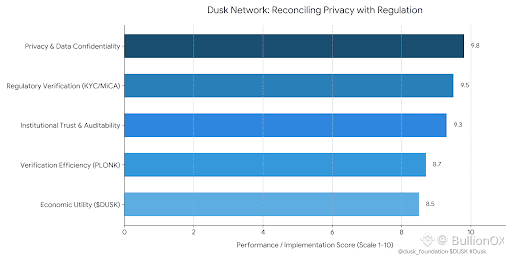

Rusk processes transactions in a modular runtime that supports confidential smart contracts. It manages state transitions while obscuring inputs and outputs, and uses PLONK-based proofs to attest that execution was correct. This lets Dusk balance privacy and verification: institutions process data privately, yet Rusk produces succinct proofs for auditors showing that no rules were violated, without revealing balances or identities.

Ideally, Rusk also balances this against efficiency, keeping proof generation lightweight enough to scale. This matters in regulated environments, which cannot be bogged down by routine checks. The protocol's determinism keeps any recovery or audit controlled, balancing the need for privacy against the need for on-demand transparency.

Trading Off Privacy and Verification with Proofs

Rusk shifts the paradigm from full disclosure to verification by proof, which makes privacy and oversight reconcilable in regulated settings. It uses cryptographic commitments to hide state, but produces proofs that regulators can verify against standards such as MiCA. The result is privacy by default with verifiability on demand. This suits RWAs, where asset details remain confidential yet compliance can still be verified.
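The commitment idea can be sketched with a plain salted hash. This is a deliberately simpler stand-in, not a PLONK proof and not Rusk's actual scheme: the function names, the SHA-256 construction, and the example balance are assumptions for illustration. It shows how a value can stay hidden behind a public commitment and later be opened to one auditor. A real zero-knowledge proof goes further and lets the auditor check a property of the value without any opening at all.

```python
import hashlib
import hmac
import secrets


def commit(value: int, salt: bytes) -> str:
    """Salted SHA-256 commitment to an integer value."""
    return hashlib.sha256(salt + str(value).encode("utf-8")).hexdigest()


def verify_opening(commitment: str, value: int, salt: bytes) -> bool:
    """Check that (value, salt) opens the published commitment."""
    return hmac.compare_digest(commitment, commit(value, salt))


# The institution keeps the salt and balance private (hypothetical values).
salt = secrets.token_bytes(32)
balance = 1_250_000

# Only the commitment is published; it does not reveal the balance.
public_commitment = commit(balance, salt)

# Selective disclosure: the auditor receives (balance, salt) off-chain.
auditor_ok = verify_opening(public_commitment, balance, salt)

# A different claimed balance does not match the commitment.
tampered_ok = verify_opening(public_commitment, balance + 1, salt)
```

The random salt is what keeps the commitment from being guessed by hashing likely balances; without it, small value ranges could be brute-forced.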

Rusk achieves this balance without performance hits by integrating with Dusk's consensus. Validators verify proofs rather than the underlying data, which reconciles the need for decentralized security with institutional demands for privacy. The $DUSK token, the economic layer, pays for the gas that proof generation consumes, aligning the use of Rusk with the network's incentives.

Real-World Applicability in Institutional Settings

Rusk makes Dusk's design useful for institutions (e.g. for tokenized securities) by enabling trustless but verifiable workflows. It balances privacy (keeping competitive information secret) with verification (providing evidence for audits), which makes Dusk suitable for TradFi. It is a considered design that emphasizes long-term utility amid Web3 compliance trends: Rusk aims to keep these systems upgradable without compromising either privacy or regulatory requirements.

How do you think chains should balance privacy and verification?

What could Rusk do to increase institutional adoption?