Walrus Protocol sits at an intersection that traders and builders have been watching closely: data availability and blockchain security, finally treated as first-class problems instead of afterthoughts. If you’ve been around long enough, you know how many promising chains hit performance walls not because of consensus, but because data simply couldn’t be moved or verified efficiently at scale. Walrus is a response to that bottleneck, and it’s one of the more practical ones to emerge over the past year.

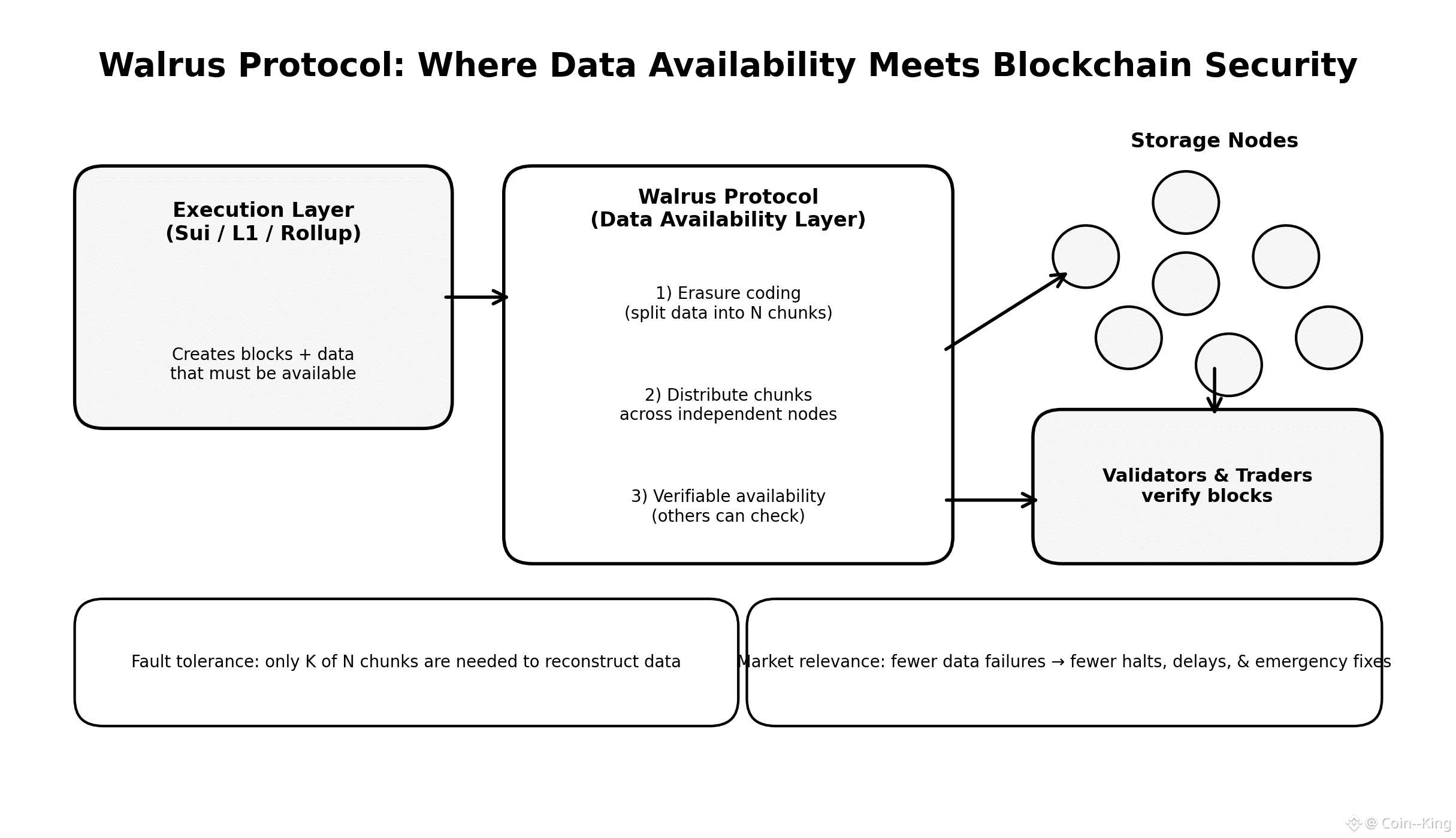

At its core, Walrus Protocol is a decentralized data availability and storage layer designed to work natively with blockchains, most notably the Sui ecosystem. Data availability sounds abstract until you simplify it. When a blockchain produces blocks, the data inside those blocks must be accessible to everyone who wants to verify them. If validators can’t easily access the full data, the security assumptions start to crack. Walrus focuses on making sure that data is not just stored, but provably available, even under adversarial conditions.

What makes Walrus stand out is how it approaches this problem technically without drowning developers in complexity. Instead of replicating full datasets everywhere, Walrus uses erasure coding: each piece of data is encoded into a larger set of redundant chunks that are spread across storage nodes, and any sufficiently large subset of those chunks is enough to reconstruct the original. In simple terms, you don’t need every storage node to be honest, or even online, for the system to work. As long as enough of them are, the data remains available and verifiable. For traders, this matters because availability failures often show up as halted chains, delayed withdrawals, or emergency governance actions. Those are the moments when volatility spikes for all the wrong reasons.
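To make the "any large enough subset" idea concrete, here is a toy sketch of k-of-n erasure coding in Python. It is not Walrus’s production encoding; it is a minimal Reed-Solomon-style scheme over a small prime field, and the names `encode`, `decode`, `k`, and `n` are illustrative assumptions rather than anything from the Walrus API.

```python
# Toy k-of-n erasure coding via polynomial interpolation over GF(257).
# Illustrative only: NOT Walrus's real encoding, and far too slow for real blobs.
# Requires Python 3.8+ (for pow(x, -1, P)) and n <= 256.

P = 257  # smallest prime above the byte range 0..255


def _interpolate(points, x):
    """Evaluate at x the unique polynomial through `points`, modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, k: int, n: int):
    """Encode `data` into n chunks; any k of them can rebuild it."""
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    chunks = {x: [] for x in range(1, n + 1)}
    for off in range(0, len(padded), k):
        group = padded[off:off + k]
        # The k bytes define a polynomial with value group[i] at x = i + 1.
        points = [(i + 1, b) for i, b in enumerate(group)]
        for x in range(1, n + 1):
            chunks[x].append(_interpolate(points, x))
    return chunks, len(data)


def decode(available: dict, k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k surviving chunks."""
    if len(available) < k:
        raise ValueError("not enough chunks to reconstruct the data")
    xs = sorted(available)[:k]
    out = bytearray()
    for col in range(len(available[xs[0]])):
        points = [(x, available[x][col]) for x in xs]
        for target in range(1, k + 1):
            out.append(_interpolate(points, target))
    return bytes(out[:length])


# Hypothetical scenario: 7 storage nodes, any 3 are enough.
blob = b"walrus blob"
chunks, length = encode(blob, k=3, n=7)
survivors = {x: chunks[x] for x in (2, 5, 7)}  # four nodes are offline
recovered = decode(survivors, k=3, length=length)
```

In this sketch four of the seven nodes can vanish and `recovered` still equals `blob`. If only two chunks survive, `decode` raises an error instead of returning corrupted data.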

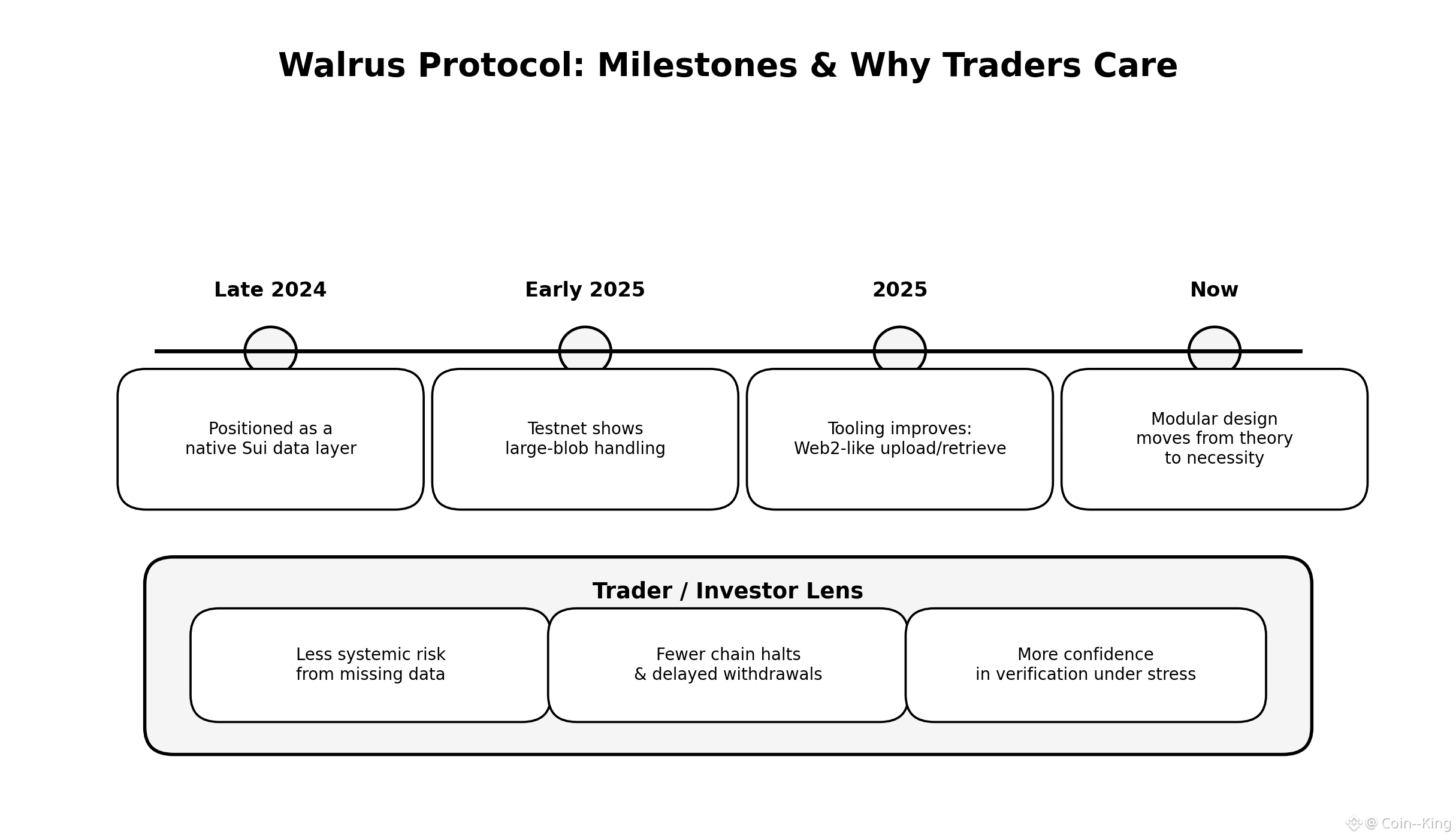

Walrus started gaining attention in late 2024, when Mysten Labs and Sui contributors began positioning it as a native data layer rather than an external add-on. By early 2025, testnet deployments demonstrated that Walrus could handle large blobs of data with lower storage overhead than keeping that data fully replicated on-chain, while still preserving cryptographic guarantees. That’s a big deal in a market where rollups, gaming chains, and DeFi protocols are all pushing throughput limits.
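The overhead argument comes down to simple arithmetic. The sketch below compares full replication with a generic k-of-n erasure code; the node counts and parameters are made-up illustrations, not measured Walrus figures.

```python
# Storage overhead: full replication vs. a generic k-of-n erasure code.
# All parameters are hypothetical and chosen only to illustrate the ratio.

def replication_overhead(copies: int) -> float:
    """Bytes stored per byte of data when every node keeps a full copy."""
    return float(copies)


def erasure_overhead(k: int, n: int) -> float:
    """Bytes stored per byte of data when n chunks each hold 1/k of it."""
    return n / k


def tolerated_losses(k: int, n: int) -> int:
    """How many of the n chunks can disappear before data is unrecoverable."""
    return n - k


nodes = 10
full = replication_overhead(nodes)   # every node stores the whole blob
coded = erasure_overhead(k=4, n=nodes)  # every node stores a quarter-size chunk
print(f"replication: {full}x storage, survives {nodes - 1} lost nodes")
print(f"erasure 4-of-10: {coded}x storage, survives {tolerated_losses(4, nodes)} lost nodes")
```

With these assumed numbers, replication costs 10x the original size while the erasure-coded layout costs 2.5x and still survives six lost nodes. That is the kind of trade-off behind the lower-overhead claim.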

The reason it’s trending now is timing. Modular blockchain design has moved from theory to necessity. Ethereum rollups, app-specific chains, and high-performance L1s all need somewhere reliable to put data without bloating their base layer. Data availability solutions like Celestia opened the door, but Walrus is carving out a niche by being tightly integrated with Sui, an execution-focused ecosystem. That tight coupling can be a strength when latency and security assumptions need to be aligned.

From a trader’s perspective, I always ask a boring question first: does this reduce systemic risk? In my view, yes, at least incrementally. Chains that rely on fragile data pipelines tend to break under stress, and stress is guaranteed in crypto. By pushing data availability into a specialized layer with clear incentives and verifiability, Walrus reduces the chance of silent failures. You might not notice it on a green day, but you’ll feel it when markets turn ugly.

There’s also steady progress on the developer side. In 2025, Walrus tooling improved to make uploading and retrieving data feel closer to Web2 cloud storage, without sacrificing decentralization. That’s important because adoption doesn’t come from whitepapers, it comes from builders not fighting the stack every day. When developers trust the data layer, users indirectly benefit through faster apps and fewer outages.

Walrus Protocol isn’t a silver bullet, and it doesn’t need to be. It’s one piece of infrastructure quietly solving a problem that most people only notice when it goes wrong. For investors and developers who think in terms of long-term resilience rather than short-term hype, that’s exactly the kind of trend worth paying attention to.