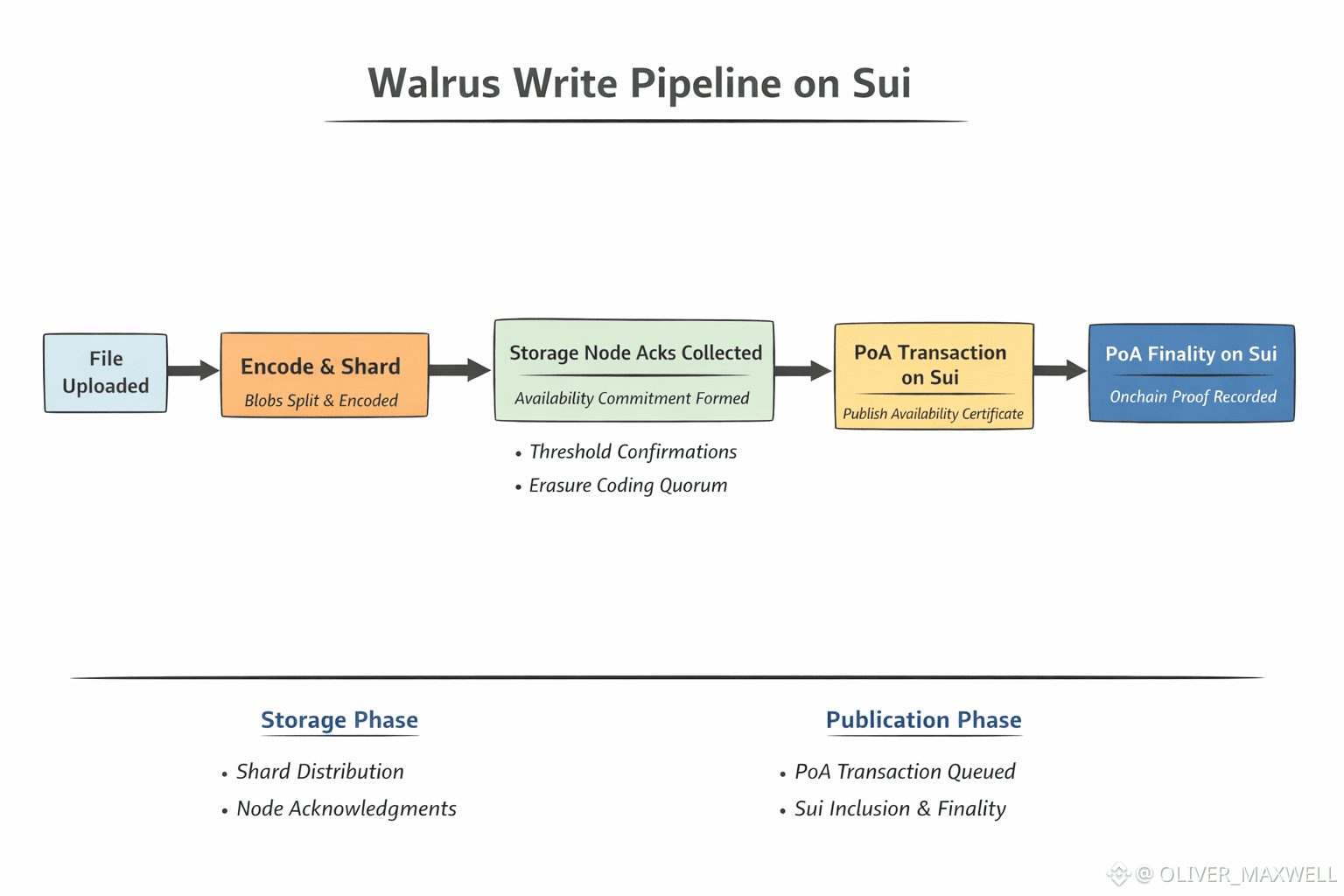

When Sui blockspace tightens, Walrus writes start finishing late, even after the client has pushed the last erasure-coded piece to Walrus storage nodes. Inside Walrus decentralized blob storage, that lag is not mysterious. Walrus treats a blob as committed only once its Proof of Availability (PoA) publication on the Sui blockchain has been included and has reached finality, because Walrus uses that onchain record as the durable reference to the write.

A Walrus write first turns the file into a content-addressed blob and slices it into erasure-coded pieces, so the blob stays retrievable even when some pieces go missing. Walrus then fans those pieces out to multiple storage nodes, and this is where round trips, retries, and slow responders stretch the tail. The write still cannot end at “sent”: Walrus has to assemble a verifiable availability commitment for that exact blob before it can publish the PoA on Sui. A PoA payload on Sui carries weight only when it is backed by sufficient storage-side corroboration, that is, acknowledgments from storage nodes, for the blob being verified.
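To make the content-addressed blob and its coded pieces concrete, here is a toy Python sketch. It is not Walrus's encoding: Walrus uses its own two-dimensional erasure code (RedStuff), while this sketch assumes a single XOR parity piece, which survives exactly one missing piece, and a SHA-256 digest as a stand-in blob identifier. The names `blob_id`, `encode`, and `decode` are hypothetical.

```python
from __future__ import annotations

import hashlib
from functools import reduce

# Toy sketch: one XOR parity piece and a SHA-256 stand-in ID.
# This is NOT Walrus's RedStuff encoding or its blob ID scheme.


def blob_id(data: bytes) -> str:
    """Hypothetical content address: the SHA-256 hex digest of the raw bytes."""
    return hashlib.sha256(data).hexdigest()


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal pieces (zero-padded) and append one XOR parity piece."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    pieces = [padded[i * size:(i + 1) * size] for i in range(k)]
    return pieces + [reduce(xor_bytes, pieces)]


def decode(pieces: list[bytes | None], original_len: int) -> bytes:
    """Rebuild the data when at most one of the k + 1 pieces is missing (None)."""
    missing = [i for i, p in enumerate(pieces) if p is None]
    if len(missing) > 1:
        raise ValueError("a single parity piece tolerates only one missing piece")
    pieces = list(pieces)
    if missing:
        # The XOR of all surviving pieces equals the missing one.
        pieces[missing[0]] = reduce(xor_bytes, [p for p in pieces if p is not None])
    return b"".join(pieces[:-1])[:original_len]  # drop parity, strip padding


payload = b"walrus blob payload"
coded: list[bytes | None] = list(encode(payload, k=4))
coded[2] = None  # simulate one storage node that never answers
assert decode(coded, len(payload)) == payload
print(blob_id(payload))
```

Any single piece, data or parity, can be lost and the payload still comes back byte for byte; changing even one byte of the payload changes the identifier, which is what makes the blob content-addressed.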

The PoA publication step shifts the time budget away from moving bytes and toward metadata and transaction inclusion. Walrus has to package the blob identifier and an availability commitment into a Sui transaction that competes with other network activity, and then wait for Sui finality before applications can rely on the write. A single write can therefore show a fast distribution phase followed by a slow publication phase, with the second phase dominating whenever Sui ordering and finality slow down. Walrus write latency is shaped as much by the path from storage confirmations to a publishable PoA payload as by the network path from client to nodes.
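A back-of-the-envelope model makes the two phases visible. This Python sketch assumes, for illustration only, that distribution ends when the `acks_needed`-th fastest storage node has answered and that publication costs Sui inclusion plus finality. `WritePhases` and every number in the usage lines are hypothetical, not measurements.

```python
from dataclasses import dataclass


@dataclass
class WritePhases:
    """Hypothetical per-write timings, all in milliseconds."""
    node_round_trips_ms: list[float]  # one entry per storage node response
    acks_needed: int                  # assumed acks required before the commitment is assembled
    inclusion_ms: float               # time until the PoA transaction is included on Sui
    finality_ms: float                # extra time until that inclusion is final

    def distribution_ms(self) -> float:
        # The commitment can be assembled once the acks_needed-th fastest node has answered.
        return sorted(self.node_round_trips_ms)[self.acks_needed - 1]

    def publication_ms(self) -> float:
        return self.inclusion_ms + self.finality_ms

    def total_ms(self) -> float:
        return self.distribution_ms() + self.publication_ms()

    def publication_share(self) -> float:
        return self.publication_ms() / self.total_ms()


trips = [80.0, 95.0, 110.0, 120.0, 900.0]  # one slow responder at 900 ms
calm = WritePhases(trips, acks_needed=4, inclusion_ms=300.0, finality_ms=400.0)
busy = WritePhases(trips, acks_needed=4, inclusion_ms=2500.0, finality_ms=1500.0)
print(calm.total_ms(), round(calm.publication_share(), 2))  # 820.0 0.85
print(busy.total_ms(), round(busy.publication_share(), 2))  # 4120.0 0.97
```

With identical storage round trips, the publication share climbs from about 85% to 97% once inclusion and finality slow down. The 900 ms responder never shows up in the total because the model only waits for four acknowledgments.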

Walrus performance tuning happens at two joints: between storage acknowledgments and commitment assembly, and between commitment assembly and Sui finality. On the storage side, Walrus can cut tail time by widening the initial node fanout, enforcing timeouts on lagging nodes, and relying on the erasure-coding threshold so it does not wait for every responder before assembling the availability commitment. On the publication side, Walrus can reduce exposure by controlling how many PoA publications it forces onto Sui, which makes blob sizing a direct input into PoA transaction count, contention for Sui blockspace, and fees. The trade-off is clear: fewer, larger blobs reduce PoA transaction frequency on Sui but increase distribution work per write and raise the impact of a single slow node, while many small blobs lighten distribution per blob but multiply the Sui publication and finality waits.
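The sizing trade-off is easy to put in numbers. The sketch below assumes blobs are written one after another, each paying a fixed fan-out overhead, an upload proportional to its size, and one PoA inclusion-plus-finality wait. `SizingPlan`, its parameters, and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SizingPlan:
    """Hypothetical cost model: total_bytes written as equal-sized blobs, one after another."""
    total_bytes: int
    blob_bytes: int
    fanout_rtt_ms: float  # assumed fixed fan-out overhead per blob
    bytes_per_ms: float   # assumed effective upload throughput
    publish_ms: float     # assumed PoA inclusion + finality wait per blob
    fee_per_poa: float    # assumed fee per PoA transaction, arbitrary units

    def poa_transactions(self) -> int:
        # One PoA publication per blob; the last blob may be partial.
        return -(-self.total_bytes // self.blob_bytes)

    def distribution_ms_per_blob(self) -> float:
        # Work for one full-size blob: overhead plus upload time.
        return self.fanout_rtt_ms + self.blob_bytes / self.bytes_per_ms

    def sequential_total_ms(self) -> float:
        # Every blob pays overhead and a PoA wait; upload time scales with total bytes.
        n = self.poa_transactions()
        return n * (self.fanout_rtt_ms + self.publish_ms) + self.total_bytes / self.bytes_per_ms

    def total_fees(self) -> float:
        return self.poa_transactions() * self.fee_per_poa


small = SizingPlan(1_000_000_000, 10_000_000, 150.0, 50_000.0, 2000.0, 1.0)
large = SizingPlan(1_000_000_000, 500_000_000, 150.0, 50_000.0, 2000.0, 1.0)
print(small.poa_transactions(), small.sequential_total_ms(), small.distribution_ms_per_blob())  # 100 235000.0 350.0
print(large.poa_transactions(), large.sequential_total_ms(), large.distribution_ms_per_blob())  # 2 24300.0 10150.0
```

Under these made-up inputs the two-blob plan finishes far sooner and pays for 2 PoA transactions instead of 100, but each of its writes carries about 10 s of distribution work, so one slow node now stalls a much larger unit. The model ignores that tail risk and any overlap between concurrent writes, so it is a way to reason about the trade-off rather than a prediction.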

Walrus also faces edge cases that sit between the phases, and those edge cases show up as latency. Walrus can stream encoding while sending early pieces, but it still has to ensure that the final availability commitment matches the blob identifier that the PoA transaction anchors on Sui. If Walrus has to reassign missing pieces to different storage nodes after timeouts, it must keep the commitment consistent with the blob being published; otherwise the write becomes hard to reason about even if all the pieces exist. The Walrus pipeline therefore spends real time making metadata tight enough that Sui verification can treat the PoA record as binding to the intended blob.
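One way to see why reassigning pieces need not break the binding: if the commitment is computed only over piece contents and their order, the node that ends up storing a piece never enters it. The sketch below uses a plain Merkle root over pieces as a hypothetical commitment and checks it against the identifier before publishing. It does not reproduce Walrus's real blob identifier or metadata format; `merkle_root`, `commitment_blob_id`, and `check_before_publish` are illustrative names.

```python
from __future__ import annotations

import hashlib

# Hypothetical commitment scheme for illustration only; not Walrus's metadata format.


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Binary Merkle root with leaf/node domain separation; an odd node is carried up unchanged."""
    if not leaves:
        return h(b"")
    level = [h(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        paired = [h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            paired.append(level[-1])
        level = paired
    return level[0]


def commitment_blob_id(pieces: list[bytes], original_len: int) -> str:
    """Hypothetical ID derived from the piece commitment plus the unencoded length."""
    return h(merkle_root(pieces) + original_len.to_bytes(8, "big")).hex()


def check_before_publish(expected_id: str, placement: dict[int, tuple[str, bytes]], original_len: int) -> bool:
    """placement maps piece index -> (node, piece bytes); node names deliberately play no part."""
    pieces = [placement[i][1] for i in sorted(placement)]
    return commitment_blob_id(pieces, original_len) == expected_id


pieces = [b"p0", b"p1", b"p2", b"p3", b"p4"]
expected = commitment_blob_id(pieces, original_len=10)
first = {i: (f"node-{i}", p) for i, p in enumerate(pieces)}
reassigned = {**first, 2: ("node-7", pieces[2])}      # same bytes, different node after a timeout
tampered = {**first, 2: ("node-7", b"p2-reencoded")}  # different bytes under the same index
print([check_before_publish(expected, m, 10) for m in (first, reassigned, tampered)])  # [True, True, False]
```

Moving a piece to another node leaves the identifier intact, while any change to the piece bytes, for example from a re-encode that diverged mid-stream, makes the check fail before a PoA transaction is ever sent.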

Application behavior on Walrus ends up following the Sui publication step, not the upload moment. A Sui indexer or a Move-based flow that tracks Walrus blob identifiers can treat a write as committed only once the PoA publication is finalized on Sui, because that is when Walrus exposes the onchain anchor that other components can reference. I keep coming back to a strict operational rule for Walrus: a blob becomes dependable when its PoA publication is final on Sui. For Walrus and $WAL, the latency target that matters is PoA finality, the point at which a blob becomes an onchain reference applications can safely build on.
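The operational rule can be written as a small status gate that an indexer might apply. The status names, `BlobWrite`, and `dependable` are hypothetical; a real integration would read inclusion and finality from a Sui node or SDK rather than from hand-built records.

```python
from dataclasses import dataclass
from enum import Enum


class WriteStatus(Enum):
    PIECES_SENT = "pieces_sent"      # client pushed the last coded piece
    POA_SUBMITTED = "poa_submitted"  # PoA transaction handed to Sui
    POA_INCLUDED = "poa_included"    # transaction ordered, not yet final
    POA_FINAL = "poa_final"          # PoA publication has reached finality


@dataclass(frozen=True)
class BlobWrite:
    blob_id: str
    status: WriteStatus


def dependable(write: BlobWrite) -> bool:
    """Only a finalized PoA publication makes the blob a reference others may build on."""
    return write.status is WriteStatus.POA_FINAL


def committed_ids(writes: list[BlobWrite]) -> list[str]:
    return [w.blob_id for w in writes if dependable(w)]


writes = [
    BlobWrite("blob-a", WriteStatus.PIECES_SENT),
    BlobWrite("blob-b", WriteStatus.POA_INCLUDED),
    BlobWrite("blob-c", WriteStatus.POA_FINAL),
]
print(committed_ids(writes))  # ['blob-c']
```

Keeping the gate to a single status comparison makes it hard for downstream code to accidentally treat an uploaded or merely included blob as committed.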