Recently, there has been a lot of discussion about combining AI and crypto, and the hype is more intense than the DeFi Summer of previous years. As a researcher who has worked on the infrastructure layer for many years, what I see through code and architecture diagrams is an extremely fragmented picture: on one side are slide decks that circulate everywhere without ever shipping, while on the other are serious attempts to solve real bottlenecks in computing power and data verification. Over the past few days, I have spent a lot of time in Vanar's testnet and documentation, and went through their Creatorpad end to end, trying to answer one core question: in a world already crowded with EVM chains, is Vanar's so-called 'AI-ready' just marketing language, or have there really been fundamental changes at the underlying architecture level?

To answer this, we need to take apart the misused concept of "AI-ready." Currently, almost every project claims to embrace AI, as if simply calling a ChatGPT API amounted to a fusion of Web3 and AI. That is an insult to engineering logic. For a Layer 1 blockchain, true AI readiness means solving three problems: processing microtransactions at extremely high frequency, making on-chain data verifiable, and providing a computing environment that can support complex inference models.

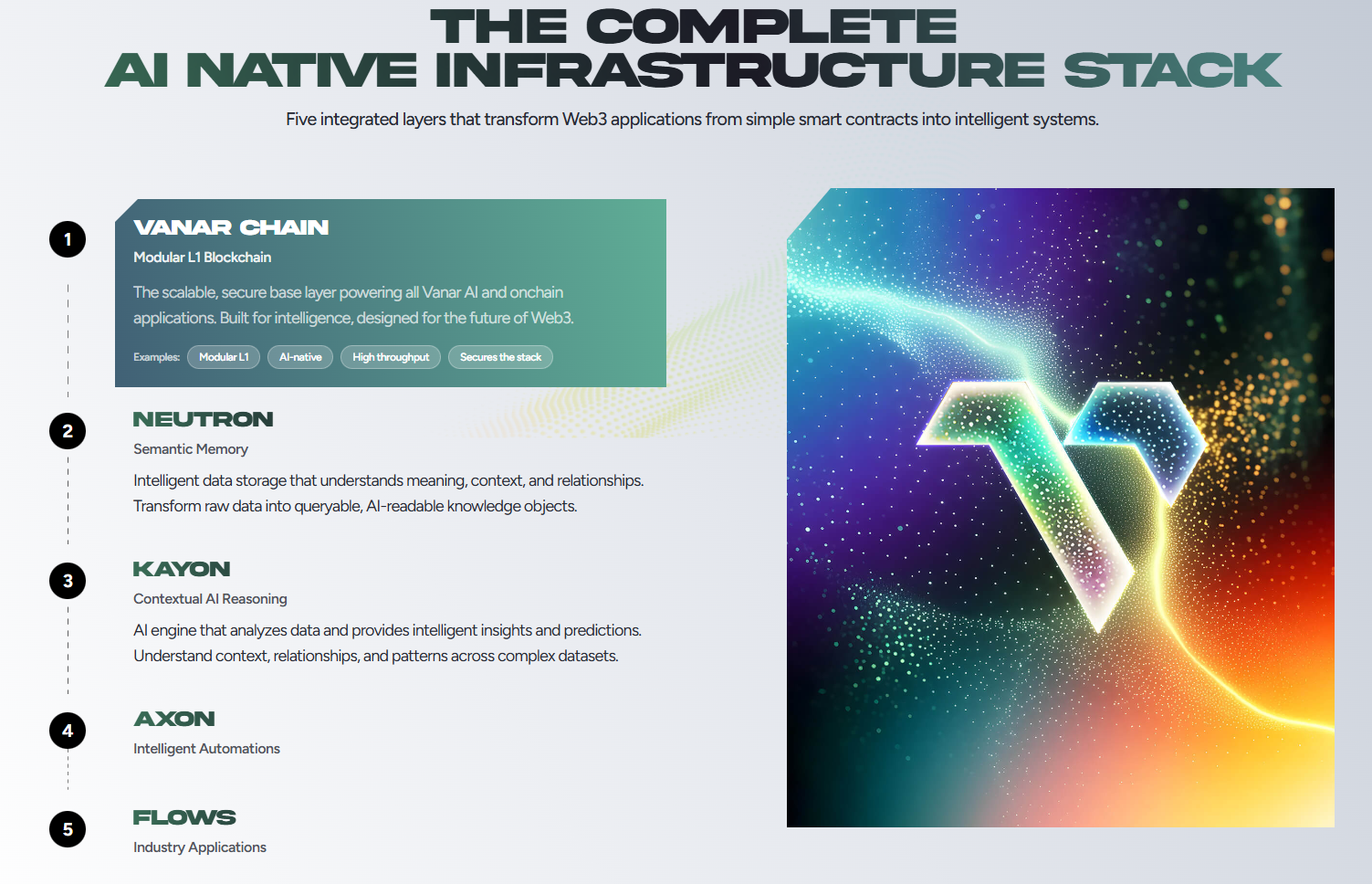

My biggest takeaway from testing Vanar was its attempt to simplify. The current public blockchain landscape is too crowded. Solana is focusing on TPS, Near is working on sharding, but Vanar gives me the impression that it clearly understands its ecosystem positioning: it doesn't want to be a large, all-encompassing general-purpose computing platform, but rather a dedicated high-speed channel for entertainment and AI data streams. This is evident in their deep integration with Google Cloud, which goes beyond putting up a logo. I've seen many projects boast about partners without any real technical integration behind it. Vanar runs its validator nodes on Google Cloud infrastructure, which means that from the beginning it has been signaling a stance to the Web2 giants: we're not here to disrupt your cloud services, but to build an immutable settlement layer on top of them.

This strategy is actually very clever, but it's also fraught with risks. I noticed an interesting pattern when comparing Vanar with its competitors. Take Render Network (RNDR) for example: it focuses on decentralized leasing of GPU computing power, making it a pure resource layer, while Fetch.ai focuses on intelligent agent interaction, making it an application layer. Vanar occupies a delicate position in between; it's more like a purpose-built highway designed to carry these high-throughput applications. During my interaction tests on the Vanar chain, I specifically watched how gas fees fluctuated. AI training or inference workloads write to the chain far more often than DeFi applications do, even when they only upload hash values. If the gas mechanism isn't specifically optimized, running a model for two days could bankrupt a project. Vanar is quite aggressive in this regard; the extremely low interaction cost is clearly intended to leave room for high-frequency AI data.
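To make the "two days could bankrupt a project" point concrete, here is a minimal back-of-the-envelope sketch of the fee arithmetic for anchoring hashes at a steady rate. The function name and every input value are illustrative assumptions, not measured figures from Vanar or any other chain; the only fixed fact is the standard EVM relation fee = gas used × gas price, with 1 native token = 10^9 gwei.

```python
from decimal import Decimal

GWEI_PER_TOKEN = Decimal(10) ** 9  # standard EVM unit: 1 native token = 1e9 gwei


def anchoring_cost_usd(tx_per_second, gas_per_tx, gas_price_gwei, token_price_usd, days):
    """Estimate the USD cost of sending transactions at a constant rate.

    All arguments are hypothetical inputs chosen by the caller.
    """
    seconds = Decimal(days) * 86_400
    total_tx = Decimal(tx_per_second) * seconds
    total_gas = total_tx * Decimal(gas_per_tx)
    # fee in native tokens = gas used * gas price (gwei) / 1e9
    fee_in_tokens = total_gas * Decimal(str(gas_price_gwei)) / GWEI_PER_TOKEN
    return fee_in_tokens * Decimal(str(token_price_usd))


# Two made-up scenarios: one hash upload per second for two days, 50,000 gas each.
expensive = anchoring_cost_usd(1, 50_000, gas_price_gwei=30, token_price_usd=2000, days=2)
cheap = anchoring_cost_usd(1, 50_000, gas_price_gwei=1, token_price_usd="0.1", days=2)
print(f"high-fee scenario: ${expensive:,.2f}")
print(f"low-fee scenario:  ${cheap:,.2f}")
```

The same workload differs by several orders of magnitude between the two scenarios, which is why the fee model matters more for this kind of traffic than for occasional DeFi transactions.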

Speaking of user experience, I have to mention Creatorpad. The biggest problem with many infrastructure projects is being "naked": they have a blockchain but no entry point. Vanar clearly aims to fill this gap with its toolchain. I tried their tools, and while the process was smooth, it also exposed some common problems of early-stage ecosystems. The UI design is very cool, with a futuristic Web3 feel, but some interaction logic is still a bit clunky. For example, when moving assets across chains, the front end occasionally lagged in showing the confirmation even though the transaction had already been confirmed on-chain. This reminds me of when Polygon first came out; they were building bridges and paving roads simultaneously. This "half-finished" feel, in a way, makes it feel more real. If a newly launched project had an experience as smooth as Alipay, I'd suspect a centralized database was behind it.

In the competitor comparison, it would be unfair to omit Polygon and BNB Chain. Both possess strong enterprise-level resources. Polygon has invested heavily in ZK (zero-knowledge proof) technology to solve scalability issues, which in effect paves the way for large-scale AI applications. In contrast, Vanar hasn't focused on hardcore cryptography like ZK, but has instead opted for a path geared towards "application-side optimization." It supports Solidity and is fully compatible with the EVM, meaning developers don't need to learn Rust or Move to migrate their Ethereum AI DApps. This "borrowing" approach might not appeal to tech purists, but it's absolutely sound from a business perspective. I've seen too many so-called high-performance new public chains become ghost towns because of high language barriers. Vanar directly reuses the existing developer ecosystem and leverages the stability of Google Cloud, which is a very pragmatic approach.

However, we also need to discuss the potential risks. Will over-reliance on the infrastructure of Web2 giants sacrifice a degree of decentralization? This is a question I've been pondering while studying the Vanar architecture. While the chain's consensus mechanism is decentralized, if core validator nodes are too concentrated with a handful of cloud service providers, then censorship resistance becomes an open question. Of course, for scenarios like gaming, entertainment, and putting AI inference data on-chain, pure decentralization might not be the top priority; performance and stability are. This is the trade-off Vanar is making, and it is how I read its technical philosophy.

While researching further, I discovered they're heavily promoting the "Vanar Vanguard" concept. This isn't just a validator program; it's more like building an ecosystem moat. Many L1 blockchains fail because they only have miners without applications, or applications without asset accumulation. Vanar clearly aims to solve the cold start problem by bringing in strong partners. But this also presents a challenge: how to get community developers to commit? Partnerships with large companies sound appealing, but the vitality of blockchain ultimately lies with the geeks coding in their basements. While the buzz I've seen on GitHub and in developer communities is rising, it hasn't reached the fervor of the Solana hackathon. This is perhaps where the project team needs to focus their efforts next.

Another intriguing technical detail is Vanar's handling of NFTs and metaverse assets. While the concept of the metaverse has somewhat faded, Vanar's predecessor (Terra Virtua) has deep expertise in this area. In today's explosion of AI-generated content (AIGC), establishing ownership is a major pain point. Who owns the images generated by Midjourney and the videos generated by Sora? Vanar seems to be leveraging its NFT expertise, combined with AI technology, to create a platform for establishing and circulating AIGC assets. If this works, its valuation logic will be completely different. It will no longer be just an L1 platform, but a copyright bureau for the AI era.

This led me to reflect on the entire industry. L1 chains are all searching for new narratives: first DeFi, then NFTs, now AI. But most projects are just reskinned versions of what came before. I carefully reviewed some of Vanar's contract interfaces and found some interesting extensions to their metadata standards, seemingly to accommodate more complex data structures. This suggests the team is seriously considering putting AI data on-chain, rather than just tweeting slogans. These small, low-level innovations often determine a project's survival more than grandiose white papers.
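As a rough illustration of what "putting AI data on-chain" usually means in practice, the sketch below fingerprints a structured metadata record so that only a fixed-size digest needs to be stored on-chain while the full record lives elsewhere. This is not Vanar's metadata standard: the field names are invented for the example, and SHA-256 is used only because it ships with Python's standard library (EVM contracts more commonly use Keccak-256).

```python
import hashlib
import json


def metadata_digest(metadata: dict) -> str:
    """Return a hex SHA-256 digest of a metadata record.

    Keys are sorted and whitespace is removed so that the same logical
    record always serializes to the same bytes before hashing.
    """
    canonical = json.dumps(
        metadata, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical record for an AI-generated asset; every field is made up.
record = {
    "name": "example-asset",
    "model": "example-model-v1",
    "prompt_hash": "abc123",
    "attributes": {"width": 1024, "height": 1024},
}

print(metadata_digest(record))
```

Anyone holding the off-chain record can recompute the digest and compare it with the on-chain value, which is what makes the data verifiable without storing it in full.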

Of course, no project is perfect. While using Vanar, I also ran into some frustrating little bugs. For example, the block explorer's indexing sometimes couldn't keep up with block production, so I had to refresh the page several times to find my transactions. Refining these infrastructure details takes time and experience. Moreover, as a latecomer, Vanar faces enormous liquidity pressure. Currently, funds are concentrated on the top L1s and a few popular L2s. For Vanar to break through, besides strong technology, it needs an extremely robust operational strategy.
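When an explorer front end lags behind the chain, one workaround is to ask a node directly. The sketch below assumes the chain exposes the standard Ethereum JSON-RPC method `eth_getTransactionReceipt`, which is a reasonable expectation for an EVM-compatible chain but is not confirmed here for Vanar specifically. The RPC URL is a placeholder, and the helper function names are my own.

```python
import json
from urllib import request


def build_receipt_request(tx_hash: str, request_id: int = 1) -> dict:
    """Build a standard JSON-RPC payload asking for a transaction receipt."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_getTransactionReceipt",
        "params": [tx_hash],
    }


def interpret_receipt(response: dict) -> str:
    """Map a JSON-RPC response to 'pending', 'success', or 'reverted'."""
    receipt = response.get("result")
    if receipt is None:
        # Nodes return null while the transaction is not yet mined (or unknown).
        return "pending"
    # The status field is a hex quantity: 0x1 for success, 0x0 for failure.
    return "success" if int(receipt["status"], 16) == 1 else "reverted"


def fetch_receipt_status(rpc_url: str, tx_hash: str) -> str:
    """POST the request to a node; rpc_url is whatever endpoint you use."""
    body = json.dumps(build_receipt_request(tx_hash)).encode("utf-8")
    req = request.Request(
        rpc_url, data=body, headers={"Content-Type": "application/json"}
    )
    with request.urlopen(req, timeout=10) as resp:
        return interpret_receipt(json.load(resp))


# Usage (placeholder endpoint and hash, not real values):
# print(fetch_receipt_status("https://rpc.example.invalid", "0x..."))
```

Because the node answers from chain state rather than from an indexer's database, this check is unaffected by explorer lag.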

I'm also pondering whether Vanar's emphasis on environmental protection and carbon neutrality is a false proposition in a bull market. While ESG (Environmental, Social, and Governance) is a ticket for traditional financial institutions, in the nakedly profit-driven world of crypto, do people really care about carbon emissions? Perhaps in the short term it's just a plus, but in the long run, if regulatory crackdowns come, those energy-intensive PoW chains may face compliance risks, while chains like Vanar, which focus on green energy and efficient consensus, may become a safe haven for compliant funds. This is a long-term logic, betting that crypto will eventually become mainstream.

Looking back, Vanar is trying to build not just a blockchain, but a "brand." In this respect, it's similar to Near: both attempt to downplay the geeky nature of blockchain itself and make it seamless for Web2 users. But Near implemented account abstraction earlier; can Vanar catch up? I think the opportunity lies in the explosion of niche markets. If Vanar can land one or two blockbuster AI games or AIGC applications, it has a real chance to overtake its competitors.

Let's talk about token economics. While I don't predict prices, from a mechanism design perspective, a token's utility must be strongly tied to network usage. Using the token for gas and governance is standard practice, but I'm more interested in seeing it applied to an AI computing power market or data market. If developers can eventually use tokens to purchase validated AI datasets or pay for model inference, then the flywheel effect will kick in. Currently, this functionality is still in the planning stage; we need to keep an eye on the project team's Git commits to see if they are actually writing this code.

During my few days on the testnet, I noticed an interesting detail: their community atmosphere. Unlike some project groups filled with shouts of "When Binance!", Vanar's Discord did have some discussions about node setup and API interfaces. This gave me a sense of relief. In this volatile market, the fact that people are still discussing the technology is a form of value in its own right.

At this point, I also have to complain a bit. Some documentation is updated really slowly. When I was following the documentation, I found that parameter names had changed, but the documentation hadn't been updated yet. While this disconnect is common in development, it's very discouraging for novice developers. I hope project teams understand that developer experience (DX) is also part of the product, perhaps even the most important part. No developers, no application; no application, no users—that's an ironclad rule.

In summary, my view on Vanar is cautiously optimistic. It's not the kind of groundbreaking, world-changing tech monster that immediately wows you, but it is a pragmatic project with strong execution and precise positioning. When the massive sectors of AI and Web3 collide, what we might need isn't another Ethereum killer, but rather an adapter that fits tightly on both sides. Vanar is attempting to be that adapter. Its Google Cloud ties give it a natural advantage in the enterprise market, while EVM compatibility ensures it won't fall behind in the ecosystem. The remaining question is whether it can capitalize on the upcoming surge in application adoption.

For us observers, it's too early to draw conclusions. But I'll continue to monitor its on-chain data, especially changes in contract deployment volume. Because in the world of crypto, prices can lie, market capitalization can lie, but every on-chain interaction is a vote cast with real money. Vanar currently holds a good hand with a clear logic behind it, but whether it can play that hand well depends on whether the promised features actually ship as running code over the next few quarters, rather than remaining just articles on Medium.
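For readers who want to track deployment volume themselves, here is a minimal sketch of the counting step. It assumes you have already fetched a block with full transaction objects through the standard `eth_getBlockByNumber` JSON-RPC call (second parameter `true`); in that format a contract-creation transaction has `to` set to null. The function name and the sample block are my own illustrations, and contracts created internally by factory contracts are not counted by this method.

```python
def count_contract_creations(block: dict) -> int:
    """Count top-level contract-creation transactions in one block.

    Expects the 'transactions' list to hold full transaction objects;
    entries that are plain hash strings are skipped.
    """
    return sum(
        1
        for tx in block.get("transactions", [])
        if isinstance(tx, dict) and tx.get("to") is None
    )


# Made-up block data shaped like an eth_getBlockByNumber result.
sample_block = {
    "number": "0x10",
    "transactions": [
        {"hash": "0x01", "to": None},      # contract creation
        {"hash": "0x02", "to": "0xabc"},   # ordinary call or transfer
        {"hash": "0x03", "to": None},      # contract creation
    ],
}

print(count_contract_creations(sample_block))
```

Summing this count over a range of blocks gives a simple deployment-volume series to watch over time.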

After all, in this industry, those who survive are often not the fastest, but the most stable. Vanar seems to want to be that stable player. Whether it can succeed remains to be seen; time will tell, or perhaps the next bear market will provide the answer. We'll wait and see how it proves itself in this noisy market that it's not just a fleeting trend-chaser, but a true infrastructure ready for the AI era.