It's three in the morning, I'm staring at the gas tracker on the Vanar testnet, and the coffee beside me has gone cold. For the past few days I've been wrestling with one question: in an era when every public chain is slapping an 'AI' label on itself, what exactly gives Vanar the right to call itself 'AI-Ready'? To be honest, I'm tired of these marketing buzzwords. They remind me of two years ago, when everyone claimed to be 'metaverse infrastructure'. But after digging into Vanar's codebase, trying the much-hyped Creatorpad, and deploying a few simple logic contracts, I found that things weren't as straightforward as the slide deck made them look, though they weren't as bad as I had imagined either.

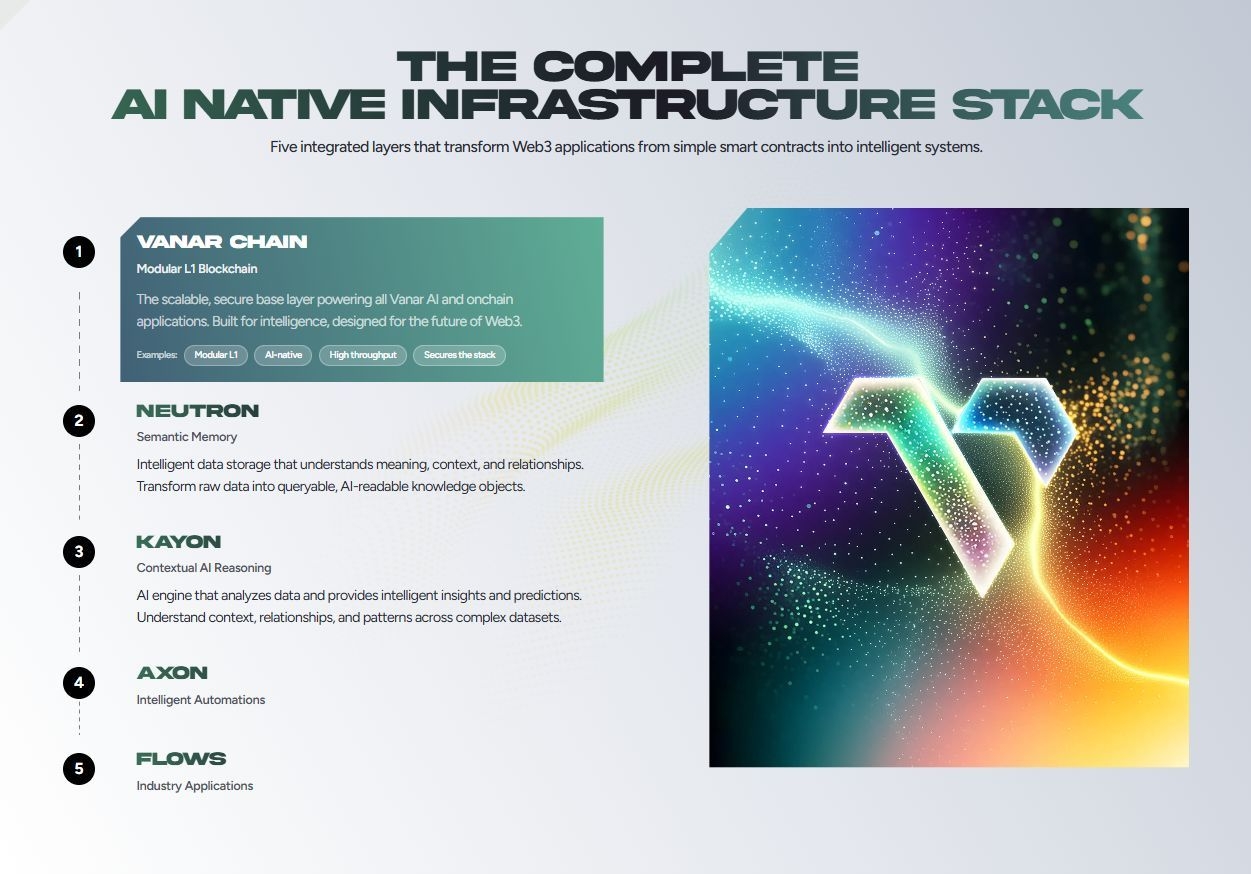

I want to talk about what it actually means to be 'AI-ready.' Many people assume that running AI on a blockchain means cramming neural networks into smart contracts. That is nonsense: the EVM, with its per-block gas limits and no native floating-point arithmetic, cannot run complex inference models at all. Being truly 'AI-Ready' is about whether a chain can absorb the flood of microtransactions that AI applications generate, offer extremely cheap data verification, and support confirmation of intellectual property (IP). While testing Vanar, I deliberately compared it with Solana. Solana is undeniably fast, but its history of outages is a risk for AI services that need 24/7 availability. Vanar feels more like a streamlined, industrial-grade EVM with DeFi's casino vibe stripped out. It doesn't try to reinvent the wheel; instead, it grafts the stability of Google Cloud directly onto the chain's validation layer. Purists may find this approach insufficiently decentralized, but for Web2 giants that just want a place to anchor proof of their AI model data, it is a significant advantage.
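To make 'cheap data verification' concrete: the usual pattern is not to put the data itself on-chain but to hash it off-chain and anchor only the digest. The Python sketch below assumes exactly that pattern. The record's field names and the `evidence_record` and `verify` helpers are hypothetical illustrations, not part of any Vanar SDK, and SHA-256 is simply one common choice of hash.

```python
import hashlib
import time
from typing import Optional


def fingerprint(data: bytes) -> str:
    """Hex-encoded SHA-256 digest (32 bytes) of the raw artifact."""
    return hashlib.sha256(data).hexdigest()


def evidence_record(data: bytes, owner: str, timestamp: Optional[int] = None) -> dict:
    """Build the small record an app might anchor on-chain instead of the data.

    Field names are hypothetical. Only the digest is needed later to show
    that a given file is byte-for-byte the one that was anchored.
    """
    return {
        "sha256": fingerprint(data),
        "size_bytes": len(data),
        "owner": owner,
        # Off-chain metadata only; the block that includes the record is the
        # authoritative timestamp.
        "timestamp": int(time.time()) if timestamp is None else timestamp,
    }


def verify(data: bytes, record: dict) -> bool:
    """Recompute the digest and compare it with the anchored one."""
    return fingerprint(data) == record["sha256"]


if __name__ == "__main__":
    weights = b"model weights v1"  # stand-in for a real model or dataset file
    record = evidence_record(weights, owner="0xOwner")
    print(record["sha256"])
    print(verify(weights, record), verify(b"tampered", record))
```

In this sketch the `timestamp` field is just metadata; what would actually establish when the data existed is the block in which the digest gets recorded. Anyone holding the original file can later recompute the hash and compare.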

This brings me to my hands-on experience with Creatorpad. When I first opened it, I almost thought I had landed on the wrong website: the UI looks like a Web2 SaaS platform and completely lacks Web3's 'only hackers can understand this' aloofness. I tried creating an NFT series to simulate a library of AI-generated assets. The whole process was extremely smooth; even the timing of the wallet signature pop-up felt optimized. I do have one criticism, though: large-file uploads were puzzlingly slow, and when the progress bar hung at 99%, I thought the front end had crashed. This may be an IPFS node synchronization issue, but it also exposes a bottleneck in how the current infrastructure handles large media. If AI-generated video is ever going to be put on-chain, delays like this are unacceptable. Fortunately, gas fees are genuinely low, so low that I almost forgot they existed. That matters for high-frequency AI interactions: if your AI agent had to spend $0.50 every time it made a decision, the business model would collapse on the spot. By bringing costs down this far, Vanar is clearing the way for machine agents to arrive at scale.
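The fee argument is simple arithmetic, but it compounds quickly. The sketch below assumes a hypothetical agent that sends one transaction per decision at a flat fee; the workload of 10,000 decisions per day and the low fee value are illustrative assumptions, not measured Vanar figures.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class AgentWorkload:
    """Hypothetical agent that sends one on-chain transaction per decision."""
    decisions_per_day: int
    fee_per_tx_usd: float  # assumed flat fee per transaction

    def daily_cost(self) -> float:
        return self.decisions_per_day * self.fee_per_tx_usd

    def annual_cost(self, days: int = 365) -> float:
        return self.daily_cost() * days


def compare_fees(decisions_per_day: int, fees: Dict[str, float]) -> Dict[str, float]:
    """Daily cost of the same workload under each fee level, keyed by label."""
    return {
        label: AgentWorkload(decisions_per_day, fee).daily_cost()
        for label, fee in fees.items()
    }


if __name__ == "__main__":
    # Both the workload and the low fee are illustrative assumptions.
    costs = compare_fees(10_000, {"$0.50/tx": 0.50, "$0.0005/tx": 0.0005})
    for label, cost in costs.items():
        print(f"{label}: ${cost:,.2f} per day")
```

At 10,000 decisions a day, a $0.50 fee works out to $5,000 per day, versus $5 per day at a fee of $0.0005.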

Now for competitors. Plenty of chains on the market focus on high performance; Flow and Near are two examples. Flow has powerful IP resources (NBA Top Shot was incredibly popular in its day), but its Cadence language is a barrier for developers. You have to relearn a whole programming model, which is exhausting for developers who just want to quickly connect AI models written in Python to a blockchain. Vanar made the smart move of committing firmly to EVM compatibility. I moved a simple copyright-distribution contract that I had run on Ethereum's Goerli testnet over to Vanar almost unchanged; I only switched the RPC endpoint, and it worked. This kind of painless migration is crucial in the fight for developer mindshare. However, compared with Near's ability to host front-end components directly on-chain, Vanar currently looks more traditional: it is more a 'fast, cheap ledger' than a 'full-stack operating system.'
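Why the migration can be nearly painless: EVM chains expose the same Ethereum JSON-RPC interface, so the requests a deploy script sends are identical and only the endpoint changes. The sketch below builds those requests. The endpoint URLs are placeholders, and `send_raw_tx_request` and `chain_id_request` are hypothetical helpers, not a real client library.

```python
import json
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Network:
    """The only per-network setting this sketch needs to change."""
    name: str
    rpc_url: str


# Placeholder endpoints (hypothetical); use the URLs from each network's docs.
GOERLI = Network("goerli", "https://goerli.example.invalid/rpc")
VANAR_TESTNET = Network("vanar-testnet", "https://vanar-testnet.example.invalid/rpc")


def _rpc_body(method: str, params: list, request_id: int) -> str:
    """Serialize a standard JSON-RPC 2.0 request body."""
    return json.dumps({"jsonrpc": "2.0", "method": method, "params": params, "id": request_id})


def chain_id_request(network: Network, request_id: int = 1) -> Tuple[str, str]:
    """(url, body) for eth_chainId: a sanity check before deploying."""
    return network.rpc_url, _rpc_body("eth_chainId", [], request_id)


def send_raw_tx_request(network: Network, signed_tx_hex: str, request_id: int = 1) -> Tuple[str, str]:
    """(url, body) for eth_sendRawTransaction; the body is the same on any EVM chain."""
    return network.rpc_url, _rpc_body("eth_sendRawTransaction", [signed_tx_hex], request_id)


if __name__ == "__main__":
    for net in (GOERLI, VANAR_TESTNET):
        url, body = send_raw_tx_request(net, "0x...")  # "0x..." = placeholder signed tx
        print(net.name, url, body)
```

One caveat the sketch leaves implicit: a signed transaction embeds the chain ID (EIP-155), so an old Goerli transaction cannot simply be replayed. You re-run the deploy script against the new endpoint, ideally after checking `eth_chainId` to confirm which chain you are talking to.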

While digging through Vanar's documentation (some pages still contain uncorrected spelling mistakes; could the team pay a bit more attention?), I found that they have a very clear understanding of where 'metaverse' and 'AI' intersect. Many projects build chains just to issue tokens, whereas Vanar gives me the impression that it is building this chain because its IP and brand partners need a decentralized database. The distinction between 'demand-driven' and 'technology-driven' matters. Technology-driven projects often fall into the trap of chasing performance for its own sake, reaching tens of thousands of TPS with no users; demand-driven projects like Vanar may not shine on every benchmark, but their APIs and SDKs are aimed at real business pain points. I looked into the Vanguard program, which has pulled in validators from both Web2 and Web3; in effect, it is building a trust alliance. For AI data, who does the validating matters more than the validation itself. If Google Cloud participates as a validating node, enterprise users' concerns about data privacy and security are greatly reduced.

That said, I have also found some hidden risks. The Vanar ecosystem is still very early, and frankly a bit desolate. Browsing the block explorer, I found that most transactions are still concentrated in a handful of official contracts and token transfers; truly native applications remain scarce. It's like building a highway as smooth as an F1 track, with gas stations (Creatorpad) already open along the roadside, but with very few cars on it. Every new L1 faces this chicken-and-egg loop: no users means no developers, and no developers means no users. Vanar is trying to break the cycle through brand partnerships, bringing in major IP holders to issue assets directly. That strategy works in a bull market, but when sentiment is low, whether users will buy in is an open question. Moreover, while the AI narrative is hot, most AI DApps that exist today serve pseudo-demand. Whether Vanar can hold out until a 'killer app' emerges, or incubate that app itself, will decide its success or failure.
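'Most transactions go to a few official contracts' can be put into numbers. The sketch below assumes you have exported the `to` address of each transaction in a sample window (a hypothetical input format; contract creations have no `to` and are skipped). It computes the share captured by the top-k addresses plus the Herfindahl-Hirschman index, a standard concentration measure that ranges from 1/N for an even spread over N addresses up to 1 when a single address takes everything.

```python
from collections import Counter
from typing import List, Optional


def _counts(tx_targets: List[Optional[str]]) -> Counter:
    """Count transactions per destination; contract creations (no 'to') are skipped."""
    return Counter(addr.lower() for addr in tx_targets if addr)


def top_k_share(tx_targets: List[Optional[str]], k: int = 3) -> float:
    """Fraction of transactions sent to the k most-called addresses."""
    counts = _counts(tx_targets)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(n for _, n in counts.most_common(k)) / total


def herfindahl(tx_targets: List[Optional[str]]) -> float:
    """Herfindahl-Hirschman index of transaction share per address (1/N to 1)."""
    counts = _counts(tx_targets)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum((n / total) ** 2 for n in counts.values())


if __name__ == "__main__":
    # Invented sample: one "official" contract dominates a small window.
    sample = ["0xOfficial"] * 8 + ["0xAppA", "0xAppB", None]
    print(top_k_share(sample, k=1), herfindahl(sample))
```

Tracking these two numbers over time would show whether native applications are actually taking share from the official contracts, which is a more concrete signal than raw transaction counts.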

Another technical detail that impressed me is their focus on energy efficiency. In crypto circles, talk of environmental protection is often mocked as a 'white left' narrative. But if your target customers are Nike, Disney, or large AI companies, ESG metrics are a hard requirement. Training a large model is already far from environmentally friendly; if the settlement layer underneath also consumed energy like Bitcoin, no compliance department would sign off. Vanar does well here, using PoS and cloud optimization to keep energy consumption very low. Retail investors may not care, but for institutions it is the ticket to entry. When I wrote scripts to stress-test the network, I paid particular attention to node response latency and found the network quite stable under high concurrency, without the 'congestion leads to paralysis' failures seen on some high-performance chains. I suspect this comes down to solid load balancing in the underlying architecture.
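A minimal version of that kind of latency stress test can be sketched with asyncio: fire a fixed number of requests with a cap on how many are in flight, then report latency percentiles. Here `fake_rpc_call` is a hypothetical stand-in that just sleeps for a few milliseconds. To test a real node you would swap in an actual RPC request, and the numbers this sketch prints say nothing about Vanar's real performance.

```python
import asyncio
import random
import statistics
import time
from typing import Awaitable, Callable, Dict


async def fake_rpc_call(rng: random.Random) -> None:
    """Hypothetical stand-in for one RPC request: sleeps 1-5 ms."""
    await asyncio.sleep(rng.uniform(0.001, 0.005))


async def stress_test(
    call: Callable[[random.Random], Awaitable[None]],
    total: int,
    concurrency: int,
    seed: int = 0,
) -> Dict[str, float]:
    """Run `total` calls with at most `concurrency` in flight; report latency stats."""
    rng = random.Random(seed)
    limit = asyncio.Semaphore(concurrency)

    async def timed_one() -> float:
        async with limit:  # only time the call itself, not the wait for a slot
            start = time.perf_counter()
            await call(rng)
            return time.perf_counter() - start

    latencies = await asyncio.gather(*(timed_one() for _ in range(total)))
    # 'inclusive' keeps the percentiles within the observed min..max range.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "count": len(latencies),
        "p50": cuts[49],
        "p99": cuts[98],
        "max": max(latencies),
    }


if __name__ == "__main__":
    stats = asyncio.run(stress_test(fake_rpc_call, total=200, concurrency=20))
    print({k: round(v, 4) for k, v in stats.items()})
```

Raising `concurrency` while watching whether p99 drifts far from p50 is a simple way to spot the 'congestion leads to paralysis' pattern before it turns into outright failures.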

What troubles me is its degree of decentralization. Does heavy reliance on partners and cloud facilities mean compromising on censorship resistance? If regulators one day ask Google to shut down certain nodes, will Vanar's network stay resilient? I couldn't find a clear answer to this in the white paper. Although the network is technically distributed, the concentration of its physical infrastructure is a risk that cannot be ignored. Of course, for entertainment and consumer-grade AI applications this may not be the central concern, since users care more about experience and cost than about absolute censorship resistance.
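One way to frame the 'what if Google shuts down some nodes' question is to group validators by hosting provider and check whether any single provider controls enough voting power to stall the chain. The sketch assumes a BFT-style protocol that needs more than two thirds of voting power online to finalize, so losing one third or more halts finality. Vanar's actual consensus rules may differ, and the validator data in the example is invented.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def provider_shares(validators: Dict[str, Tuple[str, float]]) -> Dict[str, float]:
    """Share of total voting power per hosting provider.

    validators maps validator id -> (hosting provider, voting power).
    """
    power: Dict[str, float] = defaultdict(float)
    for provider, weight in validators.values():
        power[provider] += weight
    total = sum(power.values())
    return {p: w / total for p, w in power.items()}


def providers_that_can_halt(shares: Dict[str, float], threshold: float = 1 / 3) -> List[str]:
    """Providers whose outage alone removes `threshold` or more of voting power.

    Assumes a BFT-style rule (more than 2/3 online needed to finalize);
    this is an assumption, not Vanar's documented rule.
    """
    return [p for p, s in shares.items() if s >= threshold]


if __name__ == "__main__":
    # Invented validator set for illustration only.
    validators = {
        "v1": ("cloud-a", 40.0),
        "v2": ("cloud-a", 10.0),
        "v3": ("cloud-b", 30.0),
        "v4": ("self-hosted", 20.0),
    }
    shares = provider_shares(validators)
    print(shares, providers_that_can_halt(shares))
```

A network can be 'distributed' by validator count and still fail this check, which is exactly the gap between technical distribution and physical concentration.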

While using it, I occasionally ran into minor bugs, such as delayed transaction status updates in the block explorer or buttons in Creatorpad that didn't respond when clicked. These small issues are not fatal, but they are frustrating, especially when you are eager to test a feature. Friction like this makes you wonder whether the team is focusing on partnerships instead of polishing the product. On reflection, though, Vanar today feels like a newly renovated house: some corners haven't been cleaned up yet, but the structure and foundation are solid.

Looking back at Vanar's overall strategy, it is really a bet on one future: most internet content will be generated by AI, and this vast, fragmented body of AI content will need a low-cost, efficient layer for rights confirmation and trading. Ethereum is currently too expensive, Solana has had too many outages, and interoperability between L2s is a tangled mess; Vanar is trying to find its niche in that gap. It doesn't come across as a revolutionary disruptor out to change the world, but rather as a shrewd merchant, paving the road and waiting for the gold diggers of the AI era to pass through and pay the toll.

For someone like me, used to staring at code and candlestick charts, Vanar didn't deliver a shocking 'technical breakthrough' moment, but it did give me the solid sense that 'this thing works.' In a Web3 world full of hot air and bubbles, pragmatism may be the rarest quality of all. I won't praise it blindly just because it's Vanar: it faces real risks of drying liquidity and an ecosystem cold-start problem. On-chain activity is growing, but we are still far from the eve of an explosion.

After these days of tinkering, my conclusion is that Vanar is a chain built for an 'application-layer explosion,' not one that exists to show off technology. If you are a developer who wants to build an AI-based game or SocialFi app, it may be a highly cost-effective choice; but if you expect groundbreaking technical innovation on the level of the various ZK rollups, you may be disappointed. It is a useful tool, no more, no less. Whether it performs in the next bull market depends on whether it can truly channel Web2 traffic on-chain through its AI interface. That is very difficult, extremely difficult, but at least they are attempting it in a way that follows business logic rather than running a pure Ponzi scheme.

In closing, the best way to judge a chain is not to read the good news it posts on Twitter but to use its products, deploy a contract, and feel the latency and gas cost of every interaction. By that measure, I'd give Vanar about 75 out of 100, with 25 points deducted for its thin ecosystem and occasional minor bugs. Still, on the AI + Web3 track, which is still growing wild, a 75 is already enough to earn it a ticket to the finals.