I used to think decentralized storage was mostly about where data lives. Cheaper, more resilient, less censorable… all true. But the deeper I go into Walrus, the more it feels like something else entirely: a system designed for proving your data is real, unchanged, and still available — at scale — without dragging a blockchain into the heavy lifting.

That difference matters because the next wave of apps won’t be “send tokens from A to B.” They’ll be AI agents making decisions, platforms serving mass media archives, and businesses needing audit trails that don’t depend on a single cloud vendor’s honesty. In that world, storage alone is table stakes. Verifiability is the product.

The Big Shift: Stop Putting Files onchain — Put Proof onchain

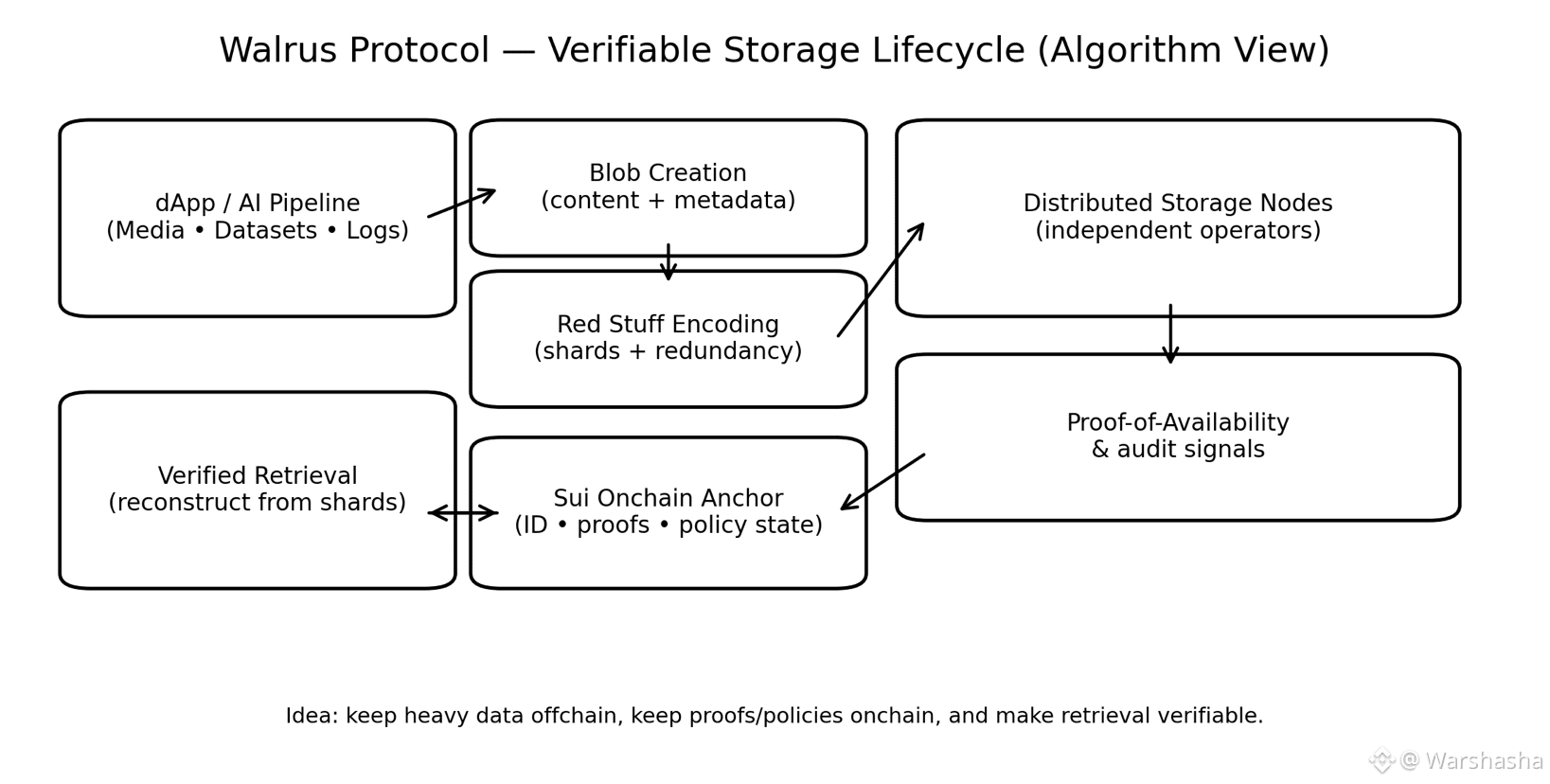

Walrus is built around a clean separation: keep large unstructured data (datasets, video libraries, logs, research archives) in a decentralized network optimized for blobs, while anchoring cryptographic identity and verification to Sui.

In practice, that means your application can reference a blob like it references an onchain object — but without forcing Sui to carry gigabytes of payload. You get the transparency and programmability of onchain systems, while keeping the cost and performance profile of a storage network built for scale.

This design is why Walrus fits naturally into the Sui ecosystem: Sui stays fast and composable; Walrus becomes the “data layer” that doesn’t compromise those properties.

Red Stuff: The Storage Engine That Makes Failure a Normal Condition

What makes Walrus feel different technically is the assumption that nodes will fail, churn, go offline, or behave unpredictably — and the system should still work without drama.

Instead of classic “copy the whole file to many places,” Walrus uses a two-dimensional erasure coding scheme called Red Stuff. The simple intuition: split data into fragments, add redundancy intelligently, and make reconstruction possible even when a meaningful chunk of the network is unavailable.
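
To make that intuition concrete, here is a minimal sketch of the simplest possible erasure code: split a blob into k fragments and add one XOR parity fragment, so any single lost fragment can be rebuilt from the rest. This is not Red Stuff, which is two-dimensional and tolerates far more loss; the function names (`split_with_parity`, `recover`) are made up for illustration.

```python
from functools import reduce
from typing import List, Optional


def xor_columns(fragments: List[bytes]) -> bytes:
    """XOR equal-length fragments together, byte position by byte position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*fragments))


def split_with_parity(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size fragments and append one XOR parity fragment."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\x00")  # zero-pad so fragments line up
    fragments = [padded[i * size:(i + 1) * size] for i in range(k)]
    return fragments + [xor_columns(fragments)]


def recover(fragments: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing fragment (marked None) from the survivors."""
    missing = [i for i, frag in enumerate(fragments) if frag is None]
    if len(missing) > 1:
        raise ValueError("a single parity fragment can repair only one loss")
    repaired = list(fragments)
    if missing:
        survivors = [frag for frag in fragments if frag is not None]
        repaired[missing[0]] = xor_columns(survivors)
    return repaired


blob = b"walrus stores large blobs"
pieces = split_with_parity(blob, k=4)
pieces[2] = None  # simulate one node going offline
restored = recover(pieces)
assert b"".join(restored[:4]).rstrip(b"\x00") == blob
```

The storage overhead here is one extra fragment instead of a full second copy, which is the basic trade that erasure coding makes against plain replication.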

That’s not just reliability marketing. It changes what builders can do. You start treating decentralized storage less like a slow backup drive and more like a dependable component of the runtime environment — especially for workloads like AI pipelines and media streaming where availability and retrieval predictability matter more than hype.

Proof-of-Availability: Verifying Access Without Downloading Everything

Here’s the part I think most people underestimate: Walrus is trying to make “data availability” a provable property, not a promise.

Applications can verify that stored data is still retrievable via cryptographic mechanisms that are anchored onchain, instead of downloading the entire blob just to check if it still exists. That makes a huge difference for:

compliance-heavy datasets (where audits are routine),

analytics logs (where history is everything),

AI training corpora (where provenance and integrity decide whether the model is trusted or useless).

So the key shift becomes: don’t trust the storage vendor, verify the storage state.
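
One generic way to check a piece of data against a small commitment, without holding the whole blob, is a Merkle inclusion proof. The sketch below is an assumption-laden illustration of that idea, not Walrus's actual proof-of-availability protocol: the chunking, the hash prefixes, and the function names are all hypothetical. The verifier needs only the chunk, a short list of sibling hashes, and the 32-byte root (the kind of value that could be anchored onchain).

```python
import hashlib
from typing import List, Tuple

Proof = List[Tuple[bytes, bool]]  # (sibling hash, sibling is on the left)


def leaf_hash(chunk: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + chunk).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def merkle_root_and_proof(chunks: List[bytes], index: int) -> Tuple[bytes, Proof]:
    """Build the tree over all chunks; return the root and a proof for chunks[index]."""
    if not chunks:
        raise ValueError("need at least one chunk")
    level = [leaf_hash(c) for c in chunks]
    proof: Proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd-sized levels
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_chunk(chunk: bytes, proof: Proof, root: bytes) -> bool:
    """Recompute the path from one chunk up to the root using only sibling hashes."""
    node = leaf_hash(chunk)
    for sibling, sibling_is_left in proof:
        node = node_hash(sibling, node) if sibling_is_left else node_hash(node, sibling)
    return node == root


chunks = [bytes([i]) * 8 for i in range(5)]
root, proof = merkle_root_and_proof(chunks, 3)
assert verify_chunk(chunks[3], proof, root)
assert not verify_chunk(b"tampered", proof, root)
```

The proof grows with the logarithm of the chunk count, so spot-checking a very large blob stays cheap for the verifier.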

New 2025–2026 Reality: Walrus Is Moving From “Protocol” to “Production”

What convinced me this isn’t just theory is how the recent partnerships are framed. They’re not about “we integrated.” They’re about “we migrated real data, at real scale, with real stakes.”

One of the clearest examples is Team Liquid moving a 250TB content archive onto Walrus, not as a symbolic NFT drop but as a core data infrastructure change. The interesting part isn’t the size. It’s what happens next: once that archive becomes onchain-compatible, you can gate access, monetize segments, or build fan experiences without replatforming the entire dataset again. The data becomes future-proofed for new business models.

On the identity side, Humanity Protocol migrating millions of credentials from IPFS to Walrus shows another angle: verifiable identity systems don’t just need privacy — they need a storage layer that can scale credential issuance and support programmable access control when selective disclosure and revocation become the norm.

This is the “quiet” story: Walrus is positioning itself as the default place where data-heavy apps go when they stop experimenting.

Seal + Walrus: The Missing Piece for Private Data in Public Networks

Public storage is open by default, which is great until you deal with anything sensitive: enterprise collaboration, regulated reporting, identity credentials, or user-owned datasets feeding AI agents.

This is where Seal becomes an important layer in the stack: encryption and programmable access control, anchored to onchain policy logic. Walrus + Seal turns “anyone can fetch this blob” into “only someone satisfying this onchain policy can decrypt it,” with optional storage of access logs for auditable trails.
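
The shape of that idea, "release the decryption key only if a policy is satisfied, and log every attempt," can be sketched in a few lines. This is a toy model under loud assumptions: it is not Seal's API or architecture, a single in-memory object stands in for whatever evaluates the onchain policy, and `AccessPolicy`, `KeyGate`, and the allowlist-plus-expiry rule are hypothetical names and rules chosen for illustration.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass
class AccessPolicy:
    allowed: Set[str]   # hypothetical allowlist of requester addresses
    expires_at: float   # hypothetical expiry, as a Unix timestamp

    def permits(self, requester: str, now: float) -> bool:
        return requester in self.allowed and now < self.expires_at


@dataclass
class KeyGate:
    """Holds a blob's decryption key and releases it only when the policy allows."""
    policy: AccessPolicy
    key: bytes = field(default_factory=lambda: secrets.token_bytes(32))
    access_log: List[Tuple[float, str, bool]] = field(default_factory=list)

    def request_key(self, requester: str, now: Optional[float] = None) -> bytes:
        now = time.time() if now is None else now
        granted = self.policy.permits(requester, now)
        self.access_log.append((now, requester, granted))  # auditable trail
        if not granted:
            raise PermissionError(f"policy denies {requester}")
        return self.key


gate = KeyGate(AccessPolicy(allowed={"0xalice"}, expires_at=100.0))
assert gate.request_key("0xalice", now=50.0) == gate.key
```

Revocation in this model is just a policy change: remove an address from the allowlist and the next key request fails, while the encrypted blob itself never moves.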

That’s not just privacy. That’s how you unlock real markets: datasets that can be licensed, accessed under conditions, revoked, and audited — without handing everything to a centralized gatekeeper.

The Most Underrated Feature: Turning Data Into a Programmable Asset (Not a Static File)

This is the story I think almost nobody is writing yet:

Walrus isn’t just storing content — it’s enabling a new type of data asset lifecycle.

If you can reference blobs programmatically, attach logic to access, and verify provenance and integrity, then data stops being a passive resource and becomes an economic object:

AI datasets that can be monetized with enforceable rules

media archives that can be sliced into rights-managed packages

adtech logs that can be reconciled with cryptographic accountability

research files that carry tamper-evident histories

This is the shift from “storage layer” to data supply chain: ingest → verify → permission → monetize → audit.
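
The "tamper-evident history" part of that chain is easy to illustrate with a hash-chained log, where every entry commits to the one before it. This is a minimal sketch under my own assumptions (the entry layout, the `GENESIS` constant, and the stage names are hypothetical), not a description of how Walrus records anything.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev: str, event: Dict) -> str:
    body = json.dumps(event, sort_keys=True)  # canonical serialization
    return hashlib.sha256((prev + body).encode()).hexdigest()


def append_entry(log: List[Dict], event: Dict) -> Dict:
    """Append an event whose hash covers both the event and the previous hash."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"event": dict(event), "prev": prev, "hash": entry_hash(prev, event)}
    log.append(entry)
    return entry


def verify_log(log: List[Dict]) -> bool:
    """Recompute every link; any edited, dropped, or reordered entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


log: List[Dict] = []
for stage in ["ingest", "verify", "permission", "monetize", "audit"]:
    append_entry(log, {"stage": stage, "blob": "example-blob"})
assert verify_log(log)

log[1]["event"]["stage"] = "skipped"  # rewrite history
assert not verify_log(log)
```

Publishing only the latest hash somewhere hard to alter is enough to pin the entire history behind it.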

And once that exists, it naturally attracts the types of apps that need trust guarantees: AI, advertising verification, compliance systems, identity networks, and tokenized data markets.

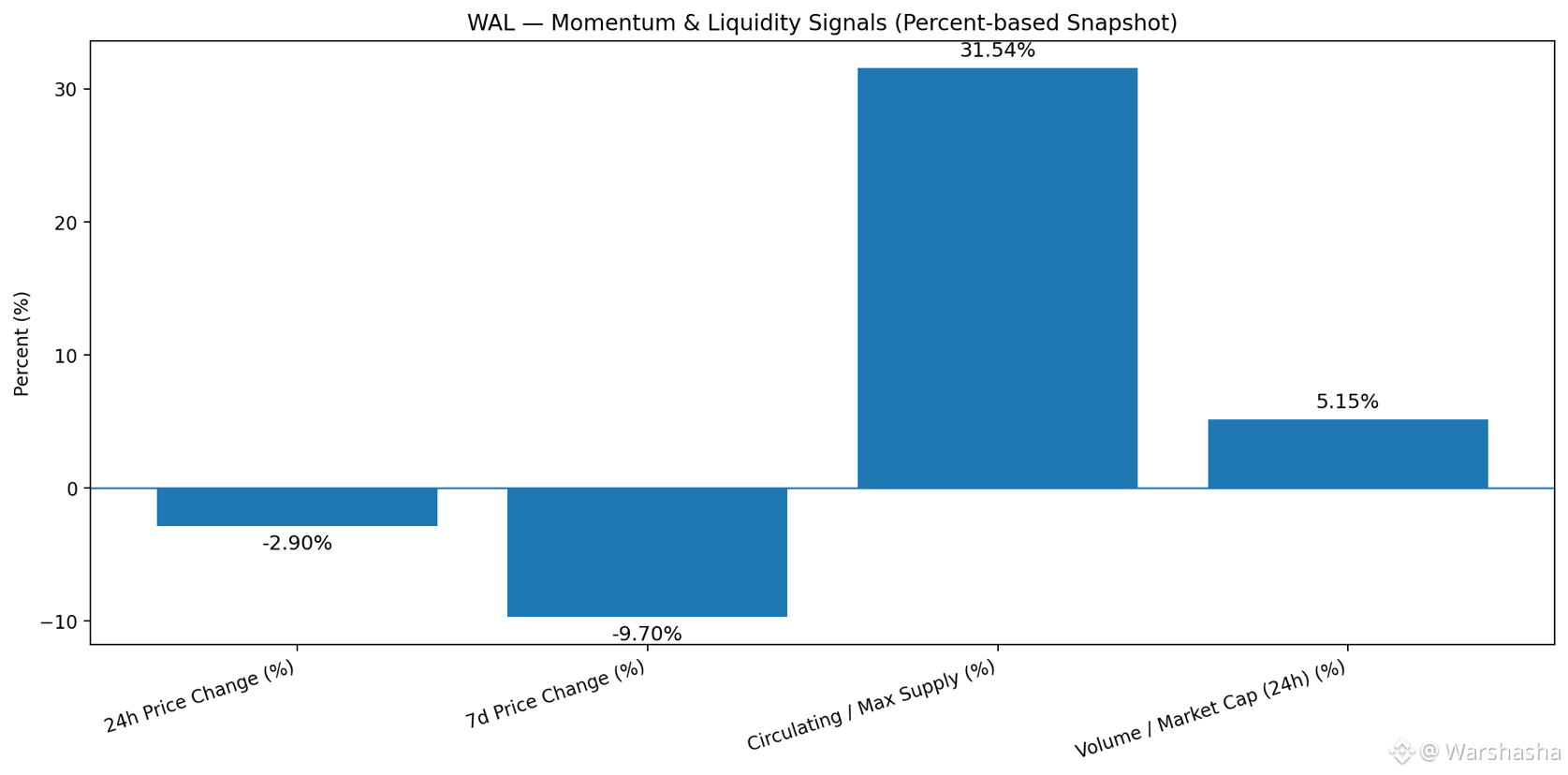

$WAL Token: Incentives That Reward Reliability, Not Just Size

For any decentralized storage network, the economics decide whether decentralization survives growth.

What I like about Walrus’s stated direction is the emphasis on keeping power distributed as the network scales — via delegation dynamics, performance-based rewards tied to verifiable uptime, and penalties for bad behavior. That’s the difference between “decentralized on day one” and “quietly centralized by year two.”

$WAL sits at the center of this incentive loop — powering usage, staking, and governance — with the goal of aligning node operators and users around a single outcome: reliable availability that can be proven, not claimed.

What I’d Watch Next (The Real Bull Case Isn’t Hype — It’s Demand)

If I’m looking at Walrus with a serious lens, these are the demand signals that matter more than narratives:

More high-volume migrations (media, enterprise archives, identity credential stores)

Deeper Seal adoption (because access control is where real money and compliance live)

Tooling that reduces friction (SDK maturity, indexing/search layers, “upload relay” style UX)

Expansion of verifiable data use cases (AI provenance, adtech reconciliation, agent memory)

Because when apps become data-intensive, decentralized compute doesn’t matter if the data layer is fragile. Whoever owns verifiable storage becomes part of the base infrastructure.

Closing Thought

Walrus is shaping up to be one of those protocols that looks “boring” until you realize it’s solving the part that breaks everything: data trust. And in 2026, trust isn’t a philosophy; it’s a requirement. AI systems, identity networks, ad markets, and onchain businesses can’t scale on “just trust us” data pipelines.

Walrus’s bet is simple: make data verifiable, available, and programmable, and the next generation of apps will treat it like default infrastructure.