This article by Azu asks only one question: is @Walrus 🦭/acc really a "true ecosystem"? Ignore the narrative and focus on two things: whether large volumes of data are actually being migrated, and whether lots of developers are actually building. The former reflects real demand-side usage; the latter reflects real supply-side effort. Only when both curves trend upward can you talk about "network effects."

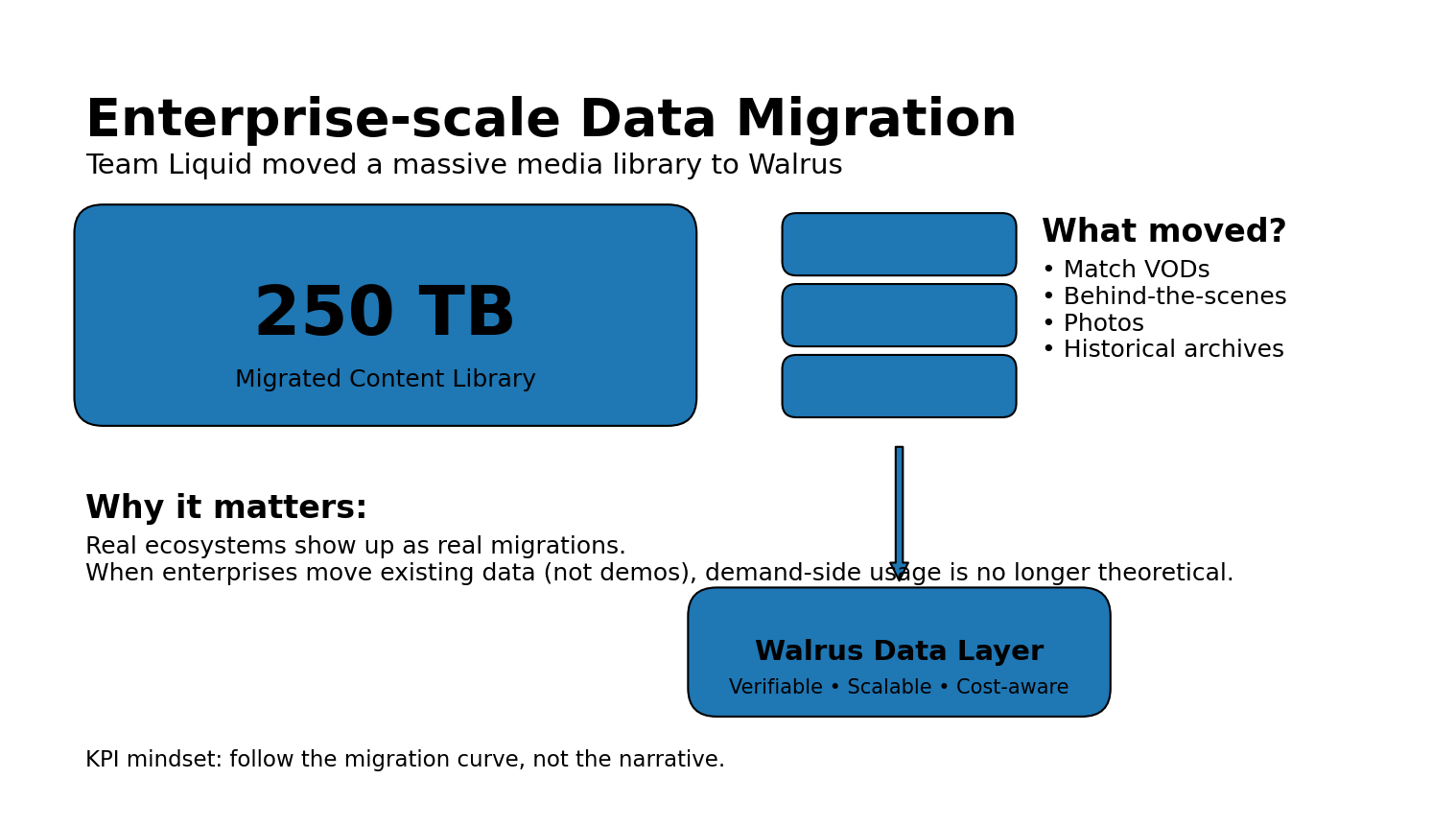

First, is the data actually moving? The most significant recent example is Team Liquid migrating its 250TB content library to Walrus: match recordings, behind-the-scenes footage, photos, and historical media assets. This is real existing "stock" being moved, not a trial or a marketing demo. The official blog calls this migration a milestone for Walrus, emphasizing its ability to handle enterprise-level data volumes and performance requirements.

Understand why this matters: what the content industry fears most is not "being unable to store data" but "long-term maintenance costs" and "single points of failure." A global team's media library is spread across regions and teams; with centralized storage, a single failure halts productivity. Moving that content to a verifiable, scalable decentralized data layer at least shows they are seriously betting on sustainable future infrastructure rather than just chasing a trend.

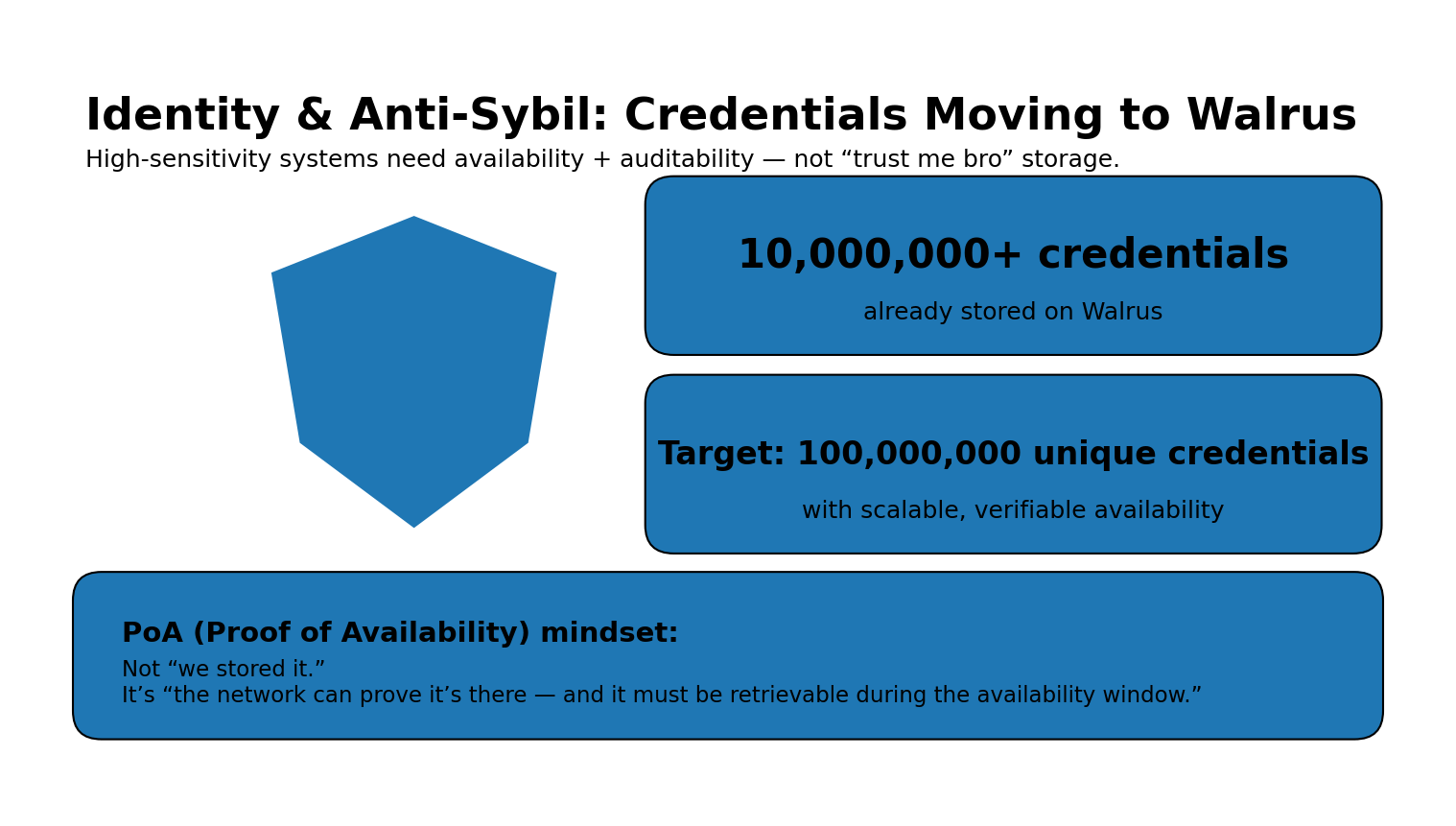

The "identity/anti-fraud" track is even more telling: Humanity Protocol has migrated its identity credentials from IPFS to Walrus, with more than 10 million credentials stored. It has publicly set a goal of reaching 100 million unique credentials by the end of 2025, with more than 300GB stored on Walrus within this year.

Why does Azu consider this more important than "yet another partnership"? Because identity systems are inherently sensitive to availability and auditability: you cannot accept "verifiable today, gone tomorrow," nor can you accept data being tampered with at will. At its core, Sybil resistance and anti-fraud in the AI era depend on verifiable data availability. This is exactly the Proof of Availability (PoA) mindset we discussed for Walrus a few days ago: not "I say I've stored it," but "the chain can prove it is there, and it must be retrievable throughout the availability period."
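To make the PoA idea concrete, here is a minimal Python sketch of how a verifier might reason about a stored credential. It assumes each blob has an on-chain certificate recording the epoch in which availability was certified and the epoch in which the paid availability period ends (treated here as exclusive). The `AvailabilityCertificate` type, its fields, and `verify_credential` are hypothetical illustrations, not the Walrus SDK or its actual object layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AvailabilityCertificate:
    """Hypothetical record of an on-chain availability certificate for one blob."""
    blob_id: str
    certified_epoch: int  # epoch in which availability was certified (assumed)
    end_epoch: int        # first epoch with no availability guarantee (assumed exclusive)


def is_guaranteed_available(cert: AvailabilityCertificate, current_epoch: int) -> bool:
    """True if current_epoch falls inside the certified availability period."""
    return cert.certified_epoch <= current_epoch < cert.end_epoch


def verify_credential(registry: dict[str, AvailabilityCertificate],
                      blob_id: str, current_epoch: int) -> str:
    """Classify a credential blob: no proof, proof expired, or proof valid."""
    cert = registry.get(blob_id)
    if cert is None:
        # "I say I stored it" is not enough: without a certificate there is no proof.
        return "unverifiable: no on-chain certificate"
    if not is_guaranteed_available(cert, current_epoch):
        return "expired: outside availability period"
    return "ok: certified and within availability period"
```

For example, with `registry = {"cred-1": AvailabilityCertificate("cred-1", 10, 60)}`, checking `"cred-1"` at epoch 30 reports it as available, at epoch 60 as expired, and an unknown ID as unverifiable. The point of the sketch is the distinction the paragraph draws: availability is a provable, time-bounded guarantee, not a promise.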

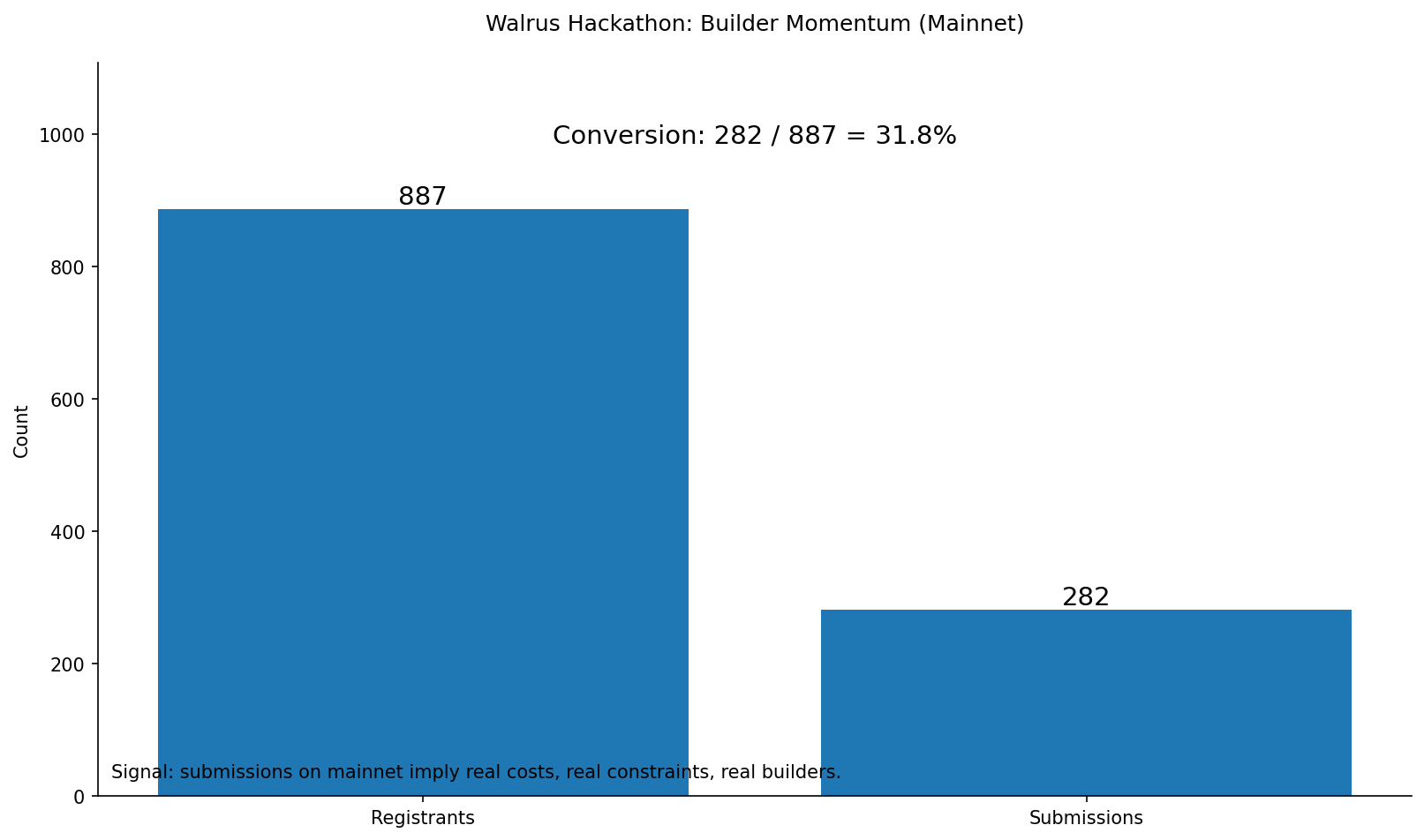

The demand side is there; now for the supply side: is anyone really building applications? Walrus officially published its mainnet hackathon numbers on X: 887 registrations and 282 project submissions from 12+ countries.

I generally treat numbers like these only as a reference, but they tell us two things. First, the submission volume is large enough to show it isn't just a handful of teams getting excited. Second, the submissions came during the mainnet phase, so developers had to face real on-chain costs, real user experience, and real users, rather than making empty promises on a slide deck. The official blog also noted that participants came from multiple countries and that submissions numbered in the hundreds, a typical signal of supply-side density.

Connect these three threads and Walrus's growth logic looks quite mature: the content industry moving 250TB is typical enterprise data volume; an identity protocol moving 10M+ credentials is typical high-sensitivity data and an urgent need for AI-era anti-fraud; 887 hackathon registrations and 282 submissions is typical developer density. Together they point to one thing: Walrus is not selling the sentiment of "decentralized cloud storage," but building a foundation that lets data become an asset that can be traded, licensed, subscribed to, and composed.

So how can ordinary people get on board? Here are two routes; pick one based on who you are. Don't agonize over which is more advanced; what matters is that you can stick with it.

If you are an ordinary user or investor, don't chase the hype every day; follow "responsibility and performance." Walrus's security and returns will be increasingly tied to node performance, delegated staking, and future slashing (the point of such mechanisms is to raise the "cost of doing nothing"). Start by learning to observe node and network performance, then decide whether to take part in delegated staking. The logic never changes: the more real the network, the more it should reward long-term, stable supply rather than short-term sentiment. Don't treat $WAL as a lottery ticket; think of it as an "entry ticket and deposit for running the network," and your mindset will be much steadier.
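The "cost of doing nothing" argument can be illustrated with a toy compounding model. This is a minimal sketch under loudly stated assumptions: each epoch, a delegator earns a hypothetical `reward_rate` on the fraction of the epoch the node performs well and loses a hypothetical `slash_rate` on the fraction it fails. None of these parameters or formulas are Walrus's actual reward or slashing rules; they only show why slashing changes the comparison between a reliable node and a weak one.

```python
def delegator_balance(stake: float, epochs: int, reward_rate: float,
                      slash_rate: float, performance: float) -> float:
    """Toy model of a delegator's stake after `epochs` epochs.

    Assumptions (hypothetical, not Walrus parameters):
    - `performance` is the fraction of each epoch the node meets its obligations.
    - Good performance earns `reward_rate`; failures are slashed at `slash_rate`.
    """
    if not 0.0 <= performance <= 1.0:
        raise ValueError("performance must be between 0 and 1")
    # Per-epoch growth multiplier: reward for the good part minus penalty for the rest.
    multiplier = 1.0 + performance * reward_rate - (1.0 - performance) * slash_rate
    multiplier = max(multiplier, 0.0)  # a stake cannot go below zero
    return stake * multiplier ** epochs
```

With `reward_rate=0.01` and `slash_rate=0.05` over 10 epochs, a node with `performance=1.0` grows the stake, while one at `performance=0.8` shrinks it; set `slash_rate=0.0` and the weak node still grows, just more slowly. That gap is the "cost of doing nothing" that slashing introduces, and why observing node performance before delegating matters.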

If you are a builder, start with the permission model that can be monetized fastest rather than writing a super-complex protocol first. The simplest MVP formula fits in one sentence: Walrus handles availability and proof (PoA), Red Stuff handles cost and fault tolerance, and Seal handles encryption and access control. You then write "permissions" directly into the business model at the application layer: subscription unlocks, pay-per-use, data licensing, token-gated content, dynamic game assets. You can even pick topics straight from the three tracks: an AI dataset market (selling access rights to verifiable data), decentralized content subscriptions (selling unlock rights), and anti-Sybil identity (selling auditable credential availability).
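The "write permissions into the business model" step can be sketched as application-layer decision logic that runs before a decryption key is released for an encrypted blob. This is a minimal Python sketch covering three of the models named above (subscription unlock, pay-per-use, token-gated). Every name here (`User`, `SubscriptionUnlock`, `PayPerUse`, `TokenGated`, `request_key`) is hypothetical; it is not Seal's API, and in a real deployment the check would presumably be enforced by Seal's on-chain access policies rather than trusted app code.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class User:
    """Hypothetical per-user state an app might track."""
    address: str
    subscribed_until: int = 0  # unix time when the subscription expires
    credits: int = 0           # prepaid pay-per-use credits
    token_balance: int = 0     # balance of a hypothetical gating token


class AccessPolicy(Protocol):
    def check(self, user: User, now: int) -> bool: ...


@dataclass(frozen=True)
class SubscriptionUnlock:
    """Access while the user's subscription is active."""
    def check(self, user: User, now: int) -> bool:
        return now < user.subscribed_until


@dataclass(frozen=True)
class PayPerUse:
    """Each access costs `price` credits; charged only when access is granted."""
    price: int

    def check(self, user: User, now: int) -> bool:
        if user.credits < self.price:
            return False
        user.credits -= self.price
        return True


@dataclass(frozen=True)
class TokenGated:
    """Access if the user holds at least `min_balance` gating tokens."""
    min_balance: int

    def check(self, user: User, now: int) -> bool:
        return user.token_balance >= self.min_balance


def request_key(policies: dict[str, AccessPolicy], blob_id: str,
                user: User, now: int) -> bool:
    """Return True if the app should release the decryption key for blob_id."""
    policy = policies.get(blob_id)
    return policy is not None and policy.check(user, now)
```

Usage: map each encrypted blob to a policy, e.g. `{"episode-1": SubscriptionUnlock(), "dataset-a": PayPerUse(price=3), "skin-42": TokenGated(min_balance=100)}`, and call `request_key` before fetching and decrypting. Keeping each revenue model as a small, swappable policy object is what lets the same storage layer back subscriptions, data licensing, or gated game assets.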

A task for the comments: which type of application do you most want to see take off on Walrus? AI data market / decentralized content subscriptions / anti-Sybil identity / game assets and storylines. Pick one, and in my next piece I'll break down "how to build the MVP and how to turn permissions into cash flow" for the most-voted direction.

To close, the same old advice: don't chase concepts, chase the data-migration curve. Only when data is really moving and developers are really shipping can we call it an ecosystem.

@Walrus 🦭/acc $WAL #Walrus