Nobody notices the breaking point at first.

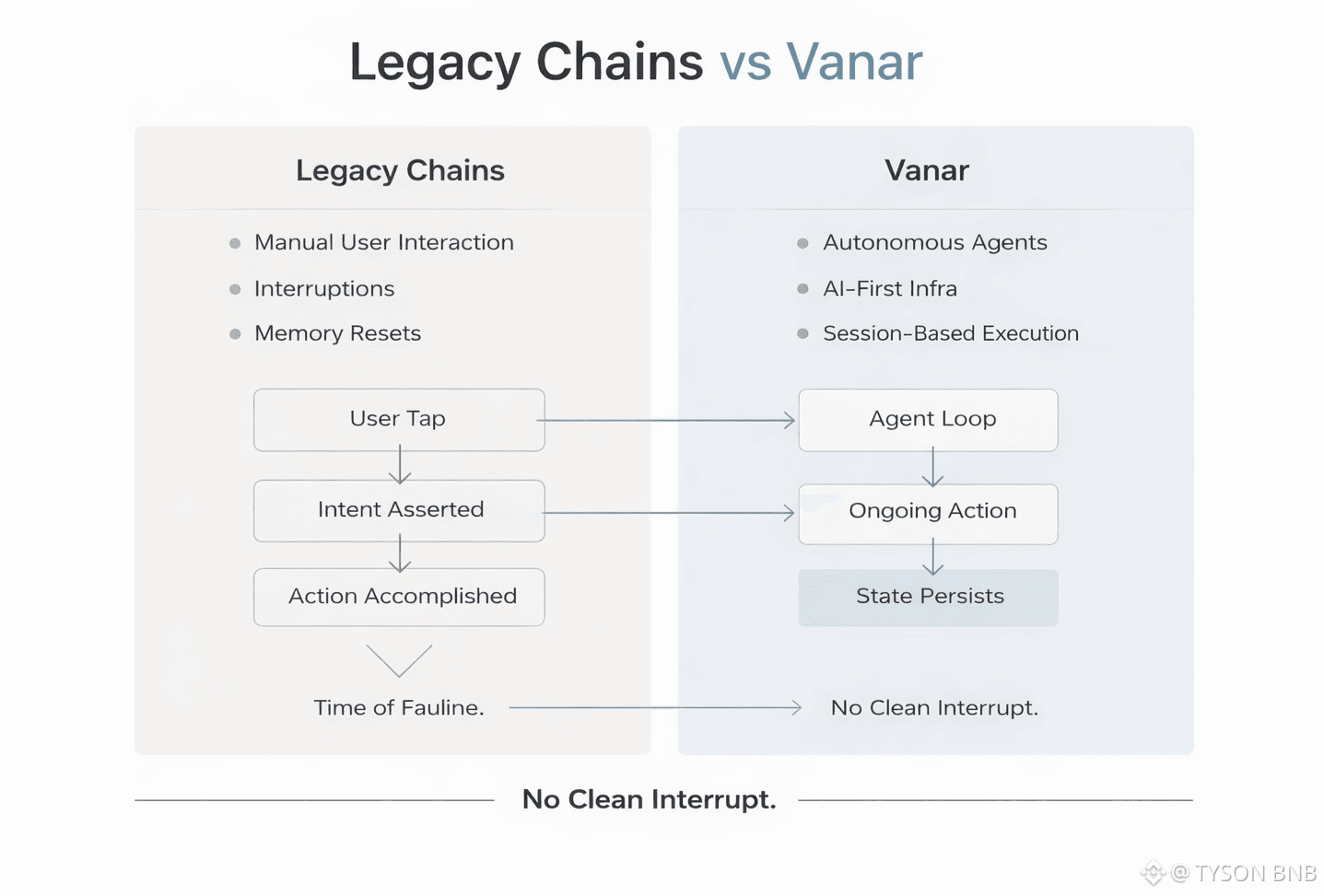

An agent spins up. It’s not a user in the traditional sense. No mouse, no wallet popup, no moment of hesitation. Just a loop that observes, decides, and acts. On legacy chains, that already creates tension. These systems were built assuming someone is always on the other side of the action: present, accountable, interruptible.

Autonomous agents don’t fit that shape.

They don’t pause for signatures. They don’t “come back later.” They operate continuously, carrying intent forward without waiting for human confirmation. At small scale, legacy chains appear to handle this fine. Transactions clear. Blocks finalize. Nothing visibly breaks.

That’s the illusion.

Most older chains are optimized around episodic interaction. A transaction happens, context ends. State is updated, memory resets. The system quietly depends on those resets to stay safe. Every new action is supposed to reassert validity: who you are, what you’re allowed to do, whether conditions still hold.

Autonomous agents don’t respect those boundaries.

They don’t reintroduce themselves. They don’t naturally revalidate assumptions. They continue operating across state changes that were never designed to be crossed without interruption. And legacy chains, built around discrete user intent, have no clean way to notice when continuity itself becomes the risk.

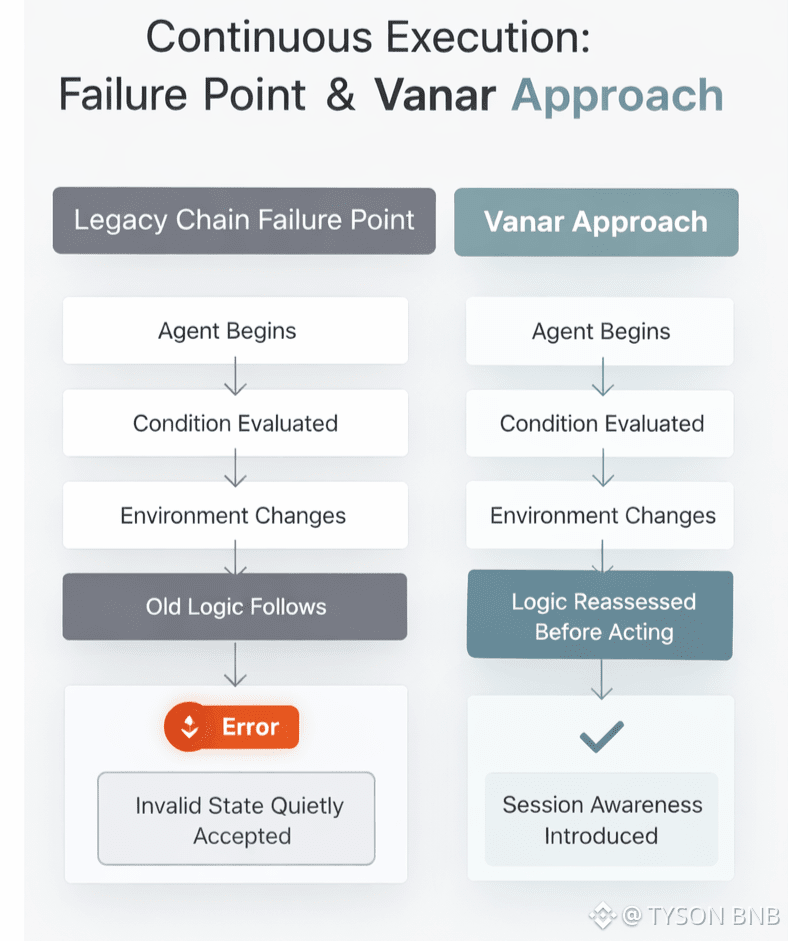

The first failure mode isn’t congestion.

It’s stale logic.

An agent evaluates a condition that was true when its loop began. Mid-execution, the environment shifts: pricing changes, permissions update, resource availability moves. The agent keeps going. The chain keeps accepting transactions. There’s no built-in moment where the system insists on a fresh interpretation of reality.

From the outside, everything looks correct.

From the inside, no one can fully explain why the system kept agreeing.
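The stale-logic failure can be made concrete with a small sketch. Everything here is hypothetical and for illustration only: `Environment`, `Decision`, and the version counter are assumptions, not any chain's or Vanar's actual API. The idea is that a decision records which version of the world it saw, so the moment of acting can tell whether that world still exists.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    """Hypothetical shared state; `version` increments on every change."""
    price: int
    version: int = 0

    def set_price(self, price: int) -> None:
        self.price = price
        self.version += 1


@dataclass(frozen=True)
class Decision:
    """What the agent concluded, and which version of the world it saw."""
    should_buy: bool
    seen_version: int


def decide(env: Environment, max_price: int) -> Decision:
    return Decision(should_buy=env.price <= max_price, seen_version=env.version)


def act_naive(decision: Decision) -> str:
    # Acts on the earlier decision without checking whether the world moved.
    return "buy" if decision.should_buy else "skip"


def act_revalidated(env: Environment, decision: Decision, max_price: int) -> str:
    # If state changed since the decision was made, re-evaluate before acting.
    if decision.seen_version != env.version:
        decision = decide(env, max_price)
    return "buy" if decision.should_buy else "skip"


env = Environment(price=90)
decision = decide(env, max_price=100)  # condition is true when the loop begins
env.set_price(150)                     # the environment shifts mid-execution

naive_result = act_naive(decision)
checked_result = act_revalidated(env, decision, max_price=100)
```

Both paths produce a well-formed action. Only the second one notices that the premise behind it has gone.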

Legacy chains try to patch this with timeouts, rate limits, and forced reauthentication. But those tools were designed for humans who stop and start. Agents don’t. They turn these guardrails into edge cases: things to route around, not moments of reassessment.

This is where the architectural mismatch shows.

Chains that treat execution as isolated events struggle when execution becomes continuous. State transitions happen faster than assumptions can be rechecked. Memory leaks into places it was never meant to persist. The system remains technically valid while becoming conceptually indefensible.

Vanar approaches this problem from the opposite direction.

Instead of assuming transactions are the atomic unit, Vanar assumes workloads persist. Autonomous agents, live worlds, AI-driven systems: these are treated as first-class citizens, not anomalies. Session continuity isn’t something to bolt on later; it’s the baseline condition.

That changes how failures appear.

On Vanar, the danger isn’t that agents will overwhelm the chain. It’s that they won’t trigger obvious alarms at all. Execution continues smoothly even as the context that justified it quietly drifts. The system doesn’t collapse. It just keeps going.

And that’s the uncomfortable part.

Because failure under autonomous workloads doesn’t look like downtime. It looks like systems that function while slowly losing the ability to explain their own decisions.

Legacy chains fail here not because they’re slow or expensive, but because they rely on interruptions they no longer control. They expect pauses that agents never give. They depend on human-shaped rhythms in a world that no longer runs on them.

Vanar doesn’t pretend this problem disappears.

It accepts that continuity is the new normal and forces teams to confront the real challenge earlier: how long intent should be trusted, when memory should expire, and what it means to say “yes” when no one is there to ask again.
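One way a team might start answering the first of those questions is to give intent an explicit lifetime. The sketch below is an assumption for illustration, not a Vanar feature: `Intent`, `ttl_seconds`, and `execute` are hypothetical names, and the clock is passed in so the behavior is easy to reason about.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Hypothetical record of what an agent was authorized to do, and when."""
    action: str
    issued_at: float    # seconds, from whatever clock the system trusts
    ttl_seconds: float  # how long this intent may be acted on

    def is_fresh(self, now: float) -> bool:
        return now - self.issued_at < self.ttl_seconds


def execute(intent: Intent, now: float) -> str:
    # Refuse to keep saying "yes" once the intent has outlived its lifetime.
    if not intent.is_fresh(now):
        return "expired: re-confirm intent"
    return f"executed: {intent.action}"


intent = Intent(action="rebalance", issued_at=0.0, ttl_seconds=60.0)
early = execute(intent, now=30.0)
late = execute(intent, now=61.0)
```

The lifetime itself is the hard part. The code only makes it something a team has to choose rather than something that goes unstated.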

Autonomous agents don’t announce when assumptions expire.

Most chains only realize that after the system has already agreed one time too many.