In early 2026, Walrus Protocol has become the private data layer I rely on when I want my on-chain agents to actually understand me and act on my behalf, without handing my personal info to some centralized server that could leak it or sell insights. I’ve been experimenting with intent-based systems (the kind where you say “book me the cheapest flight to Lviv next Friday” or “rebalance my portfolio if BTC drops below $80k”), and the biggest bottleneck was always memory: agents forget everything after one session unless you feed them the same context over and over. Walrus fixes that by letting agents store and use private, long-term data securely and verifiably.

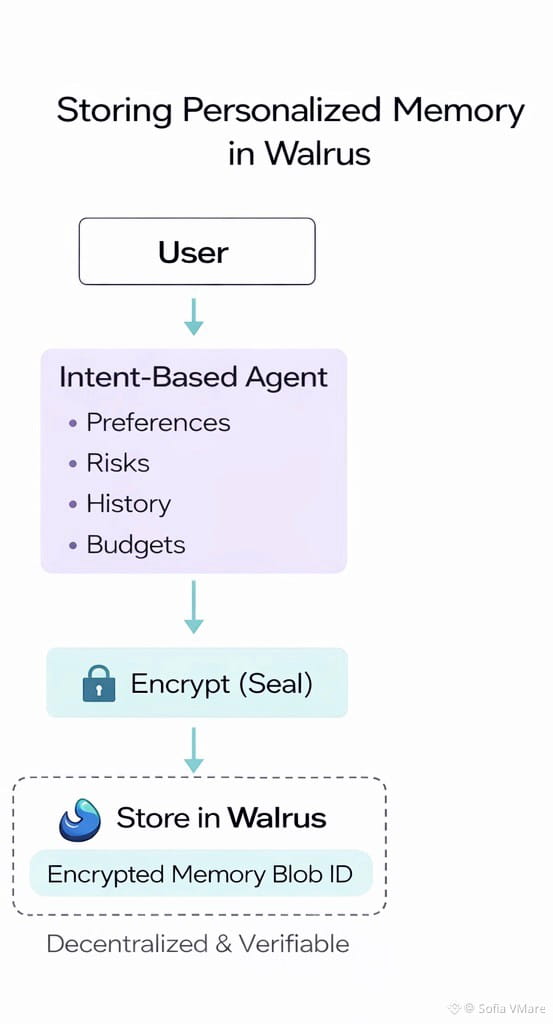

Here’s how it works for me in practice. I have a small personal agent I built on Sui that helps with travel planning and DeFi monitoring. Every time I interact with it (telling it my budget preferences, preferred airlines, dietary restrictions, risk tolerance for trades, favorite hotels), I save the updated context as an encrypted blob on Walrus. Seal encrypts it client-side before upload, so no storage node ever sees my real travel habits or wallet addresses. Red Stuff, Walrus’s erasure-coding scheme, spreads the encoded pieces across nodes at roughly 4–5x storage overhead rather than keeping full copies everywhere. My entire agent memory folder (~12 GB after 6 months of use) costs me ~$0.04–$0.07 per month, and because storage is priced in stable USD terms, I don’t panic if $WAL pumps.
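
To sanity-check a bill like that, it helps to turn it into a per-GB rate and project it onto other folder sizes. The snippet below is only a back-of-the-envelope sketch using my own numbers from above; the function names are made up for illustration, and it does not query any real Walrus pricing API.

```python
def implied_rate_per_gb(monthly_cost_usd: float, size_gb: float) -> float:
    """Return the USD per GB per month implied by a total monthly bill."""
    if size_gb <= 0:
        raise ValueError("size_gb must be positive")
    return monthly_cost_usd / size_gb


def projected_cost(rate_per_gb: float, size_gb: float) -> float:
    """Project a monthly bill for a folder of size_gb at a flat per-GB rate."""
    return rate_per_gb * size_gb


# My figures: ~12 GB of agent memory for roughly $0.04 to $0.07 per month.
low = implied_rate_per_gb(0.04, 12)
high = implied_rate_per_gb(0.07, 12)
print(f"implied rate: ${low:.4f} to ${high:.4f} per GB per month")

# Assumption: the rate stays flat as the folder grows (no tiers, no discounts).
for size_gb in (10, 30):
    lo_cost = projected_cost(low, size_gb)
    hi_cost = projected_cost(high, size_gb)
    print(f"{size_gb} GB: ${lo_cost:.3f} to ${hi_cost:.3f} per month")
```

At those implied rates, a 30 GB folder lands somewhere between about $0.10 and $0.18 per month, which is still pocket change.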

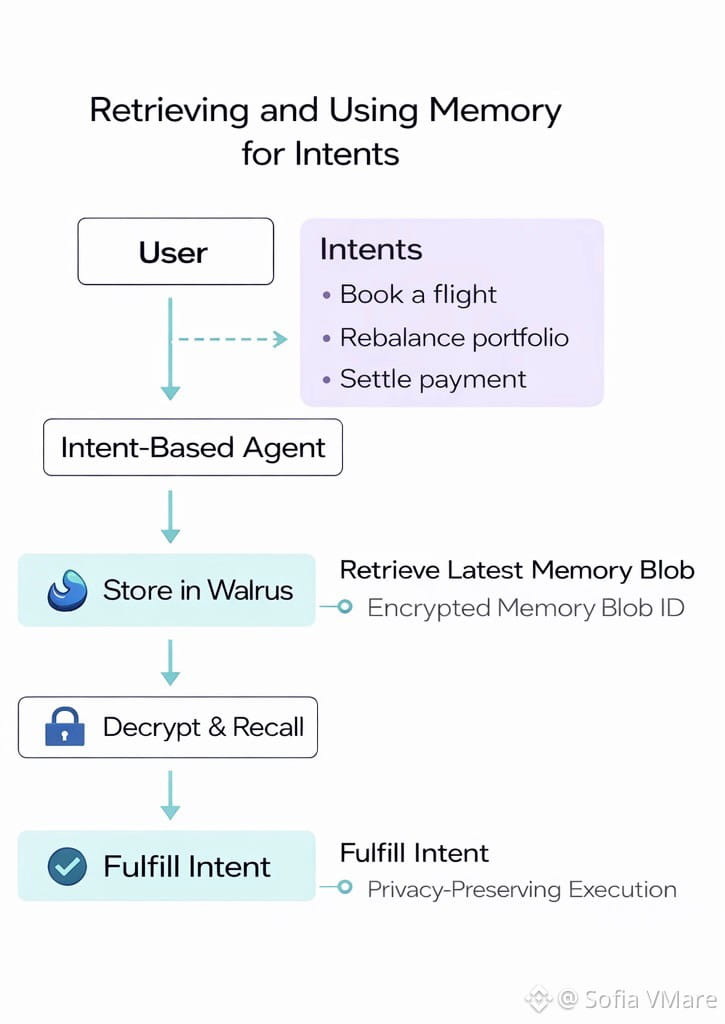

The Proof of Availability on Sui is what makes it reliable. Each memory update gets a PoA certificate: “this exact context blob was available on February 12, 2026.” The agent’s Move contract references the blob ID, so when I give it an intent (“find me a flight under $300”), it pulls the latest memory blob, decrypts it locally using my key, and factors in my preferences without my plaintext data ever leaving my device. No more “sorry, I forgot you hate early flights” or “remind me your budget again.” It remembers.
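
The lookup flow is easier to see as a toy model. What follows is a plain-Python sketch of the bookkeeping only, not Move code and not the Walrus SDK: `MemoryRegistry`, `MemoryVersion`, and `verify_fetched` are hypothetical names, and I use a SHA-256 digest of the ciphertext as a stand-in for a blob ID (real Walrus blob IDs are derived differently).

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MemoryVersion:
    blob_id: str       # stand-in ID: SHA-256 hex digest of the encrypted blob
    certified_at: str  # date the availability certificate was recorded
    approved: bool     # whether the owner approved this version for use


@dataclass
class MemoryRegistry:
    """Toy stand-in for the contract state that tracks memory blobs."""
    versions: list[MemoryVersion] = field(default_factory=list)

    def publish(self, ciphertext: bytes, certified_at: str,
                approved: bool = True) -> MemoryVersion:
        version = MemoryVersion(
            hashlib.sha256(ciphertext).hexdigest(), certified_at, approved
        )
        self.versions.append(version)  # older versions stay for audits
        return version

    def latest_approved(self) -> MemoryVersion:
        for version in reversed(self.versions):
            if version.approved:
                return version
        raise LookupError("no approved memory version")


def verify_fetched(blob: bytes, expected: MemoryVersion) -> bool:
    """Check that fetched bytes match the version the registry points to."""
    return hashlib.sha256(blob).hexdigest() == expected.blob_id


registry = MemoryRegistry()
registry.publish(b"encrypted-context-v1", "2026-01-30")
v2 = registry.publish(b"encrypted-context-v2", "2026-02-12")
registry.publish(b"encrypted-context-v3-draft", "2026-02-13", approved=False)

current = registry.latest_approved()
print(current.certified_at, verify_fetched(b"encrypted-context-v2", current))
```

The agent only decrypts after the integrity check passes, so a stale or tampered blob never makes it into a decision.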

The intent engine (I use a simple one built on Anoma-style intents + Sui execution) takes my natural-language request and breaks it into subtasks, and the agent uses Walrus memory to personalize every step: “user prefers window seats and avoids connections longer than 2 hours” → filters flights accordingly. If I update preferences (“now I’m vegetarian”), I push a new blob version, and the contract can enforce “always use the latest approved memory.” Versioning keeps old contexts around in case I ever need to audit “why did it book that hotel last month?”
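
Here is roughly what that personalization step looks like once the memory blob is decrypted. This is a simplified sketch, not my agent’s actual code: the `Preferences` and `Flight` types, the field names, and the sample flights are all invented for illustration. Budget and connection length are treated as hard limits, while the window seat is a soft preference that only affects ordering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preferences:
    max_price_usd: float         # e.g. "find me a flight under $300"
    max_connection_hours: float  # e.g. avoid connections longer than 2 hours
    prefers_window: bool         # soft preference, used for ranking only


@dataclass(frozen=True)
class Flight:
    code: str
    price_usd: float
    longest_connection_hours: float  # 0 for a direct flight
    window_seat_available: bool


def is_acceptable(flight: Flight, prefs: Preferences) -> bool:
    """Apply the hard limits: strictly under budget, connections short enough."""
    return (
        flight.price_usd < prefs.max_price_usd
        and flight.longest_connection_hours <= prefs.max_connection_hours
    )


def rank_flights(flights: list[Flight], prefs: Preferences) -> list[Flight]:
    """Drop unacceptable flights, then sort by window-seat match and price."""
    def sort_key(flight: Flight) -> tuple[bool, float]:
        misses_window = prefs.prefers_window and not flight.window_seat_available
        return (misses_window, flight.price_usd)

    return sorted((f for f in flights if is_acceptable(f, prefs)), key=sort_key)


prefs = Preferences(max_price_usd=300, max_connection_hours=2, prefers_window=True)
candidates = [
    Flight("F1", 250, 0.0, window_seat_available=False),
    Flight("F2", 280, 1.5, window_seat_available=True),
    Flight("F3", 200, 3.0, window_seat_available=True),  # connection too long
    Flight("F4", 320, 0.0, window_seat_available=True),  # over budget
]
ranked = rank_flights(candidates, prefs)
print([f.code for f in ranked])
```

Swapping in a new preferences object is all it takes for an updated blob version to change the result, which is why keeping the old versions around makes past bookings auditable.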

Seal is essential for privacy. My wallet addresses, exact travel dates, and health notes (e.g., “avoid long flights due to back pain”) are all encrypted. Upcoming zk-proofs will let the agent prove “this booking was made using the user’s verified preferences from blob X” without revealing what those preferences are, which is perfect for compliance or for sharing execution proofs without doxxing myself.
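
Until those zk-proofs ship, the closest simple building block I can illustrate is a salted hash commitment. To be clear, this is not a zero-knowledge proof and not anything Seal or Walrus provides: it only lets me publish a fingerprint of my preferences now and prove later, by revealing them to an auditor I choose, that they are the ones the booking was tied to. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(preferences: bytes, salt: bytes) -> str:
    """Return a commitment to the preferences, keyed by a secret random salt."""
    return hmac.new(salt, preferences, hashlib.sha256).hexdigest()


def verify(preferences: bytes, salt: bytes, commitment: str) -> bool:
    """Check revealed preferences and salt against a published commitment."""
    return hmac.compare_digest(commit(preferences, salt), commitment)


# The salt stays private; without it the commitment cannot be brute-forced
# from a small set of plausible preference values.
salt = secrets.token_bytes(32)
preferences = b'{"seat": "window", "max_connection_hours": 2}'

# This string could be attached to a booking record without revealing anything.
commitment = commit(preferences, salt)

# Later, an auditor who is given the preferences and salt can check them.
print(verify(preferences, salt, commitment))
print(verify(b'{"seat": "aisle"}', salt, commitment))
```

The limitation is the reveal step: the auditor sees everything. A real zk-proof would let the agent prove a statement about the preferences without that disclosure.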

I’ve seen other people experimenting too. A DAO member uses Walrus memory for a governance agent that remembers past proposals, member voting patterns (anonymized), and personal priorities (“always vote against high treasury spend”). The agent drafts votes based on long-term context without leaking individual opinions. A freelancer has an intent agent that remembers client styles, deadlines, and payment terms, and pulls from Walrus blobs to auto-generate proposals without re-explaining everything each time.

For anyone using intent-based systems (Anoma, SUAVE, Across, etc.), Walrus solves the memory problem: private, persistent, verifiable context that agents can read and write, with availability anchored on-chain and no central server owning your life. Costs are negligible: at the rate I’m paying for my own 12 GB, a busy agent’s monthly memory (10–30 GB) works out to roughly $0.03–$0.18. Pipe Network makes retrieval fast enough for real-time intents even on mobile.

The $WAL token fits naturally: agents pay tiny $WAL fees to update memory blobs; nodes stake $WAL to host high-traffic personal memories and earn more; governance uses $WAL to vote on zk standards or memory pricing curves. As intent agents become everyday tools, $WAL captures value from every personalized action.

The Walrus Foundation RFP program is funding intent-specific tools. One grant went to a “Memory Blob SDK” for easy agent integration; another is building templates for zk-verified intent execution proofs.

In 2026, when agents are supposed to act like extensions of ourselves, having private, persistent memory that is anchored on-chain feels essential. Walrus isn’t the intent system itself; it’s the data layer that lets agents actually remember you, act intelligently, and keep your secrets. If you’re playing with intents or building an agent, try giving it a Walrus blob for memory. Upload a small context file, let your agent reference it, and see how much smarter and more “you” it becomes.

That’s not hype; it’s just infrastructure letting agents feel personal instead of generic. Walrus is making intent-based systems actually useful, one private, verifiable memory blob at a time.