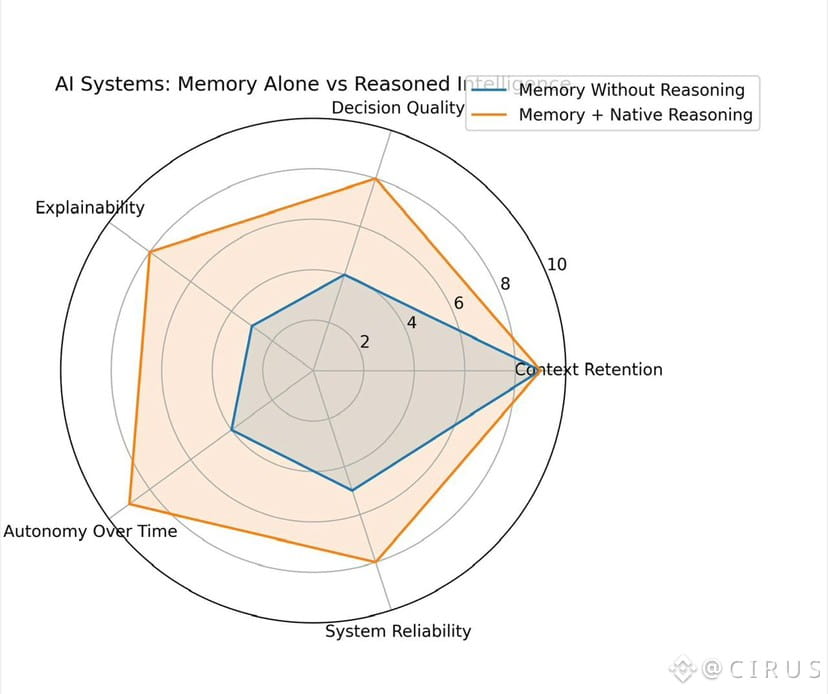

AI systems are often described as intelligent because they remember. They retain context, store past interactions, and reference historical data when responding. Memory has become the headline capability. Vector databases, embeddings, long context windows, and persistent storage are treated as proof that systems are becoming smarter.

But memory alone does not create intelligence.

In fact, when AI has memory without reasoning, it often becomes more dangerous, not more useful. It remembers what happened but cannot reliably explain why it acted, whether it should act differently next time, or how one decision connects to another over time. This gap becomes critical the moment AI systems move from assistance into autonomy.

VANAR exists because this problem is structural, not incremental: adding more memory does not close it.

Memory Without Reasoning Creates Illusions of Intelligence

Systems that remember but do not reason can appear capable in controlled environments. They recall facts, repeat patterns, and respond coherently to prompts. However, their behavior degrades the moment context becomes ambiguous or goals evolve.

They cannot distinguish correlation from causation. They cannot weigh competing constraints. They cannot justify trade-offs. They simply react based on stored signals.

In consumer applications, this limitation is inconvenient. In financial, governance, or autonomous systems, it becomes unacceptable.

VANAR starts from the assumption that memory must serve reasoning, not replace it.

Why Memory Alone Fails at Autonomy

Autonomous agents operate across time. They do not complete one task and stop. They continuously observe, decide, act, and adapt.

Memory without reasoning breaks this loop.

An agent might remember previous states but be unable to evaluate whether those states were optimal. It might repeat actions that worked once but fail under new conditions. It might escalate behavior without understanding the consequences.

This leads to brittle automation: systems that function until they encounter novelty, then fail silently or unpredictably.

VANAR’s architecture treats this failure mode as unacceptable by design.

Reasoning as a Native Capability

Most AI systems today outsource reasoning. Decisions happen in opaque models or centralized services, while blockchains merely record outcomes. This separation creates a trust gap.

If reasoning cannot be inspected, it cannot be audited. If it cannot be audited, it cannot be trusted in regulated or high-stakes environments.

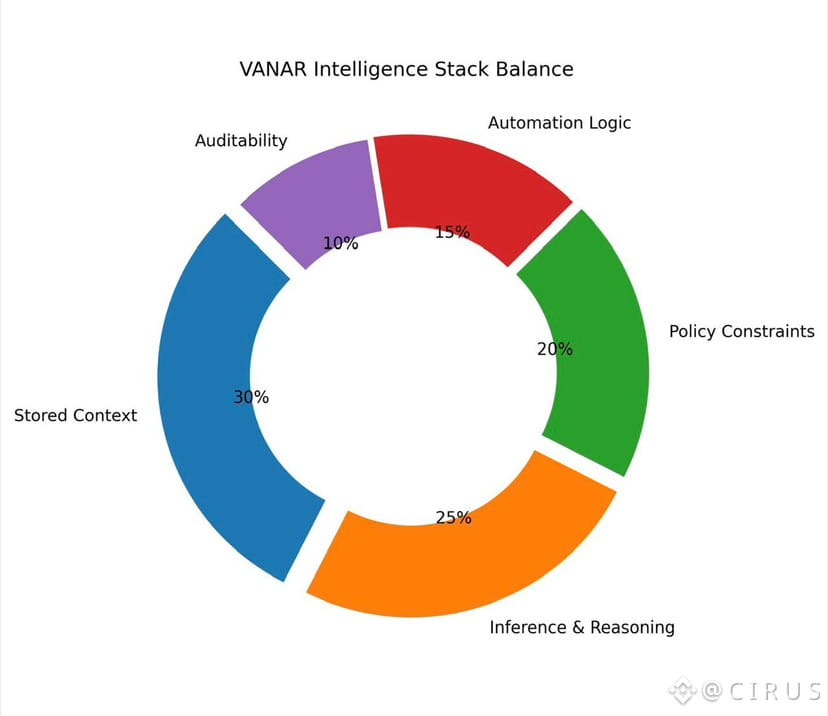

VANAR embeds reasoning into the protocol layer. Inference is not an external service. It is a native capability that interacts directly with stored memory and enforced constraints.

This does not mean every decision is deterministic. It means every decision is accountable.

Memory Gains Meaning Through Reasoning

Stored context becomes valuable only when it can be interpreted.

VANAR’s memory model preserves semantic meaning rather than raw data. Past actions, inputs, and outcomes are not just recorded. They are structured so reasoning processes can evaluate them.

This enables agents to answer questions that matter: Why did I take this action? What conditions led to this outcome? What changed since the last decision?

Without reasoning, memory is just accumulation. With reasoning, it becomes learning.
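To make the three questions above concrete, here is a minimal sketch of memory stored as structured decision records rather than raw data. It assumes a hypothetical schema (`DecisionRecord`, `DecisionLog`, and the `step`, `rationale`, `conditions` and `outcome` fields are all illustrative names); it is not VANAR's API or memory format. The point is only that each question becomes a simple query once the rationale and conditions are recorded next to the action.

```python
from dataclasses import dataclass, field
from typing import Any


# Hypothetical schema for illustration only; this is not VANAR's memory format.
@dataclass(frozen=True)
class DecisionRecord:
    step: int                   # position of the decision in the agent's timeline
    action: str                 # what the agent did
    rationale: str              # why it did it, stored alongside the action
    conditions: dict[str, Any]  # observed inputs at decision time
    outcome: str                # what happened afterwards


@dataclass
class DecisionLog:
    records: list[DecisionRecord] = field(default_factory=list)

    def record(self, rec: DecisionRecord) -> None:
        self.records.append(rec)

    def why(self, step: int) -> str:
        """Why did I take this action?"""
        rec = self._get(step)
        return f"{rec.action}: {rec.rationale}"

    def conditions_for(self, step: int) -> dict[str, Any]:
        """What conditions led to this outcome?"""
        rec = self._get(step)
        return {**rec.conditions, "outcome": rec.outcome}

    def changes_since_last(self) -> dict[str, tuple[Any, Any]]:
        """What changed since the last decision? Diffs the two most recent condition sets."""
        if len(self.records) < 2:
            return {}
        prev, curr = self.records[-2].conditions, self.records[-1].conditions
        return {
            key: (prev.get(key), curr.get(key))
            for key in sorted(prev.keys() | curr.keys())
            if prev.get(key) != curr.get(key)
        }

    def _get(self, step: int) -> DecisionRecord:
        for rec in self.records:
            if rec.step == step:
                return rec
        raise KeyError(f"no decision recorded for step {step}")


log = DecisionLog()
log.record(DecisionRecord(1, "reduce position", "volatility above limit",
                          {"volatility": 0.42, "limit": 0.30}, "exposure reduced"))
log.record(DecisionRecord(2, "hold", "volatility back under limit",
                          {"volatility": 0.21, "limit": 0.30}, "no trade"))
print(log.why(1))                # reduce position: volatility above limit
print(log.changes_since_last())  # {'volatility': (0.42, 0.21)}
```

A plain log of actions could answer "what happened" but not "why"; storing the rationale and conditions with each action is what lets a reasoning step evaluate past decisions instead of merely replaying them.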

Enforcement Prevents Runaway Behavior

AI systems without reasoning often rely on post-hoc controls: developers intervene after something has already gone wrong.

That approach does not scale.

VANAR moves enforcement into the protocol itself. Policies, constraints, and compliance logic are applied consistently, regardless of agent behavior.

This ensures that even when agents adapt, they remain bounded. Memory cannot be misused to reinforce harmful patterns. Reasoning operates within defined limits.
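As an illustration of enforcement that applies "regardless of agent behavior," the sketch below checks every proposed action against a fixed set of policies before it is accepted, independent of how the agent produced it. It is a minimal sketch under stated assumptions: `Action`, `Policy`, `allowed_kinds`, `max_amount` and `PolicyEnforcer` are hypothetical names, and the two example policies are invented for demonstration; none of this is VANAR's protocol-level mechanism.

```python
from dataclasses import dataclass
from typing import Callable, Optional


# Hypothetical action type, for illustration only.
@dataclass(frozen=True)
class Action:
    kind: str
    amount: float


# A policy inspects a proposed action and returns a rejection reason, or None if allowed.
Policy = Callable[[Action], Optional[str]]


def allowed_kinds(*kinds: str) -> Policy:
    def check(action: Action) -> Optional[str]:
        if action.kind not in kinds:
            return f"kind '{action.kind}' is not permitted"
        return None
    return check


def max_amount(limit: float) -> Policy:
    def check(action: Action) -> Optional[str]:
        if action.amount > limit:
            return f"amount {action.amount} exceeds limit {limit}"
        return None
    return check


class PolicyEnforcer:
    """Checks every proposed action against every policy, however the agent arrived at it."""

    def __init__(self, policies: list[Policy]) -> None:
        self.policies = list(policies)

    def submit(self, action: Action) -> tuple[bool, list[str]]:
        # Collect all violations rather than stopping at the first one,
        # so a rejection is explained in full.
        violations = [reason for policy in self.policies
                      if (reason := policy(action)) is not None]
        return (not violations, violations)


enforcer = PolicyEnforcer([allowed_kinds("transfer", "hold"), max_amount(1000.0)])
print(enforcer.submit(Action("transfer", 250.0)))  # (True, [])
print(enforcer.submit(Action("leverage", 5000.0)))
```

The design choice worth noting is that the enforcer never sees the agent's memory or reasoning; it only sees the proposed action. That separation is what keeps the bounds in place even when the agent adapts.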

Why Explainability Matters More Than Performance

In high-stakes systems, speed is rarely the primary concern. Understanding is.

When an AI system makes a decision, stakeholders need to know why. Regulators require it. Enterprises demand it. Users expect it when outcomes affect them directly.

Memory-only systems cannot explain themselves. They can reference past data but cannot articulate causal logic.

VANAR prioritizes interpretability by making reasoning observable and reproducible. This is slower to build but essential for trust.
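One common way to make decisions both observable and reproducible is a hash-chained trace: each entry commits to its inputs, its output and the previous entry's hash, and an auditor can replay the decision rule to confirm every recorded output. The sketch below shows that general technique; it is not VANAR's mechanism. `DecisionTrace`, `digest` and `decide` are hypothetical names, and the sketch assumes a deterministic decision rule. Replaying a non-deterministic model would additionally require recording whatever made its output vary, such as a seed.

```python
import hashlib
import json
from typing import Any, Callable

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def digest(body: dict[str, Any]) -> str:
    """SHA-256 over a canonical JSON encoding, so identical bodies hash identically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def decide(inputs: dict[str, Any]) -> str:
    """Hypothetical deterministic rule, used only to demonstrate replay."""
    return "reduce" if inputs["volatility"] > inputs["limit"] else "hold"


class DecisionTrace:
    """Append-only trace; each entry commits to its inputs, output and the previous hash."""

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    def append(self, inputs: dict[str, Any], output: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"inputs": inputs, "output": output, "prev": prev}
        self.entries.append({**body, "hash": digest(body)})

    def verify(self, rule: Callable[[dict[str, Any]], str]) -> bool:
        """True only if the chain is intact and replaying `rule` reproduces every output."""
        prev = GENESIS
        for entry in self.entries:
            body = {"inputs": entry["inputs"], "output": entry["output"], "prev": entry["prev"]}
            if entry["prev"] != prev or digest(body) != entry["hash"]:
                return False  # an entry was altered or the chain was broken
            if rule(entry["inputs"]) != entry["output"]:
                return False  # the recorded decision cannot be reproduced
            prev = entry["hash"]
        return True


trace = DecisionTrace()
for inputs in ({"volatility": 0.42, "limit": 0.30}, {"volatility": 0.21, "limit": 0.30}):
    trace.append(inputs, decide(inputs))
print(trace.verify(decide))  # True

trace.entries[0]["output"] = "hold"  # tamper with a recorded decision
print(trace.verify(decide))  # False
```

Verification checks two separate things: integrity (nothing in the trace was changed after the fact) and reproducibility (the rule, given the same inputs, yields the same decision). A trace that passes only the first check records what happened but still cannot show why.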

The Difference Between Reaction and Judgment

Memory-driven AI reacts. Reasoning-driven AI judges.

Reaction is fast but shallow. Judgment is slower but durable.

VANAR is designed for judgment.

It assumes that AI systems will increasingly be responsible for actions with real consequences. That responsibility requires more than recall. It requires evaluation, constraint balancing, and accountability.

Why This Matters for Web3

Web3 systems already struggle with trust. Adding AI agents without reasoning only amplifies that problem.

Chains that integrate memory without reasoning will see short-term experimentation but long-term instability. Agents will act, but no one will fully understand why.

VANAR positions itself differently. It assumes AI will become a core participant in Web3 and designs infrastructure accordingly.

My Take

AI with memory but no reasoning is not intelligent. It is reactive.

As AI systems move into autonomous roles, infrastructure must evolve. VANAR’s focus on reasoning, enforcement, and interpretability reflects a deeper understanding of what autonomy actually requires.

Memory is necessary. Reasoning is what makes it safe.